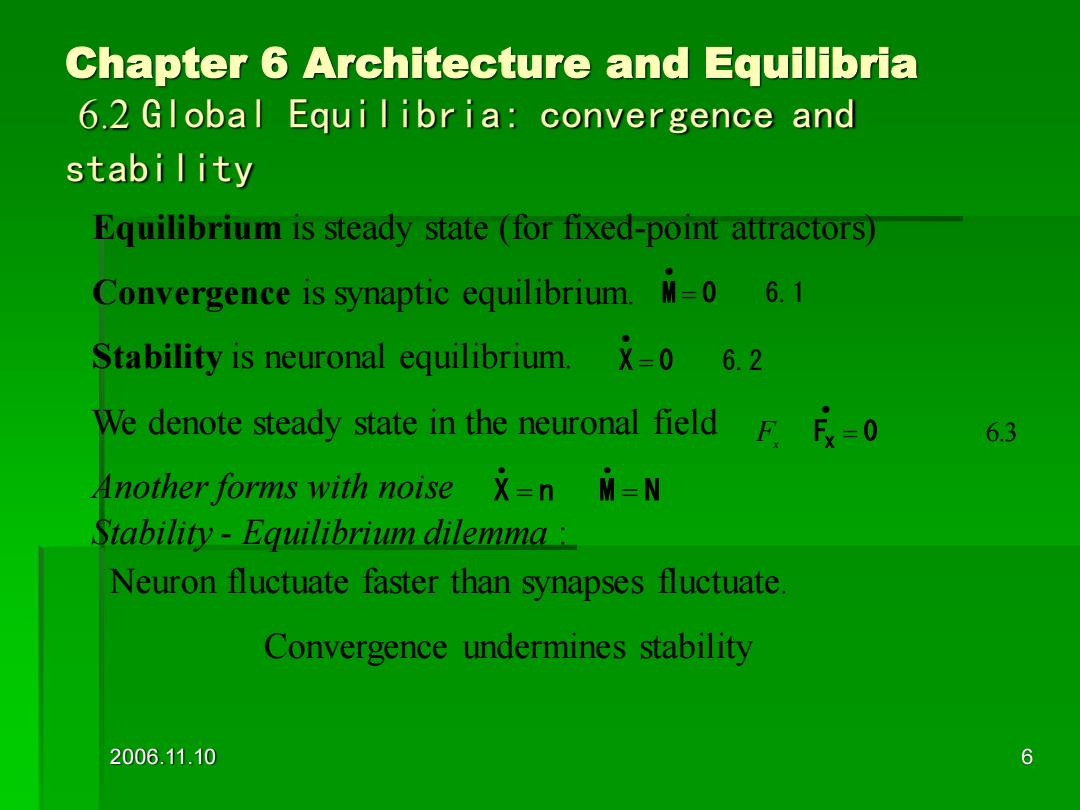

Chapter 6 Architecture and Equilibria

2006.11.10

6.2 Global Equilibria: convergence and stability

Equilibrium is steady state (for fixed-point attractors).

Convergence is synaptic equilibrium:

$$\dot{M} = 0 \tag{6-1}$$

Stability is neuronal equilibrium:

$$\dot{X} = 0 \tag{6-2}$$

We denote steady state in the neuronal field $F_X$ by

$$\dot{F}_X = 0 \tag{6-3}$$

Other forms, with noise: $\dot{X} = n$, $\dot{M} = N$.

Stability-equilibrium dilemma: neurons fluctuate faster than synapses fluctuate. Convergence undermines stability.
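
To make the two kinds of equilibrium concrete, here is a minimal Python sketch of a hypothetical toy system (not a model from the text): one neuron with fast activation dynamics $\dot{x} = -x + I$ and one synapse with slow dynamics $\dot{m} = \varepsilon(-m + x)$, integrated by Euler's method. The function `settle_times` and the parameters `eps`, `I`, and `tol` are illustrative assumptions. It reports the first time each derivative drops below a tolerance, i.e. when the toy system is numerically at neuronal equilibrium ($\dot{X} \approx 0$) and at synaptic equilibrium ($\dot{M} \approx 0$).

```python
def settle_times(eps=0.05, I=1.0, x0=0.0, m0=-1.0, dt=0.01, t_max=200.0, tol=1e-3):
    """Euler-integrate a hypothetical toy neuron/synapse pair.

    dx/dt = -x + I           (fast neuronal dynamics)
    dm/dt = eps * (-m + x)   (slow synaptic dynamics, eps << 1)

    Returns (t_x, t_m): the first times |dx/dt| < tol and |dm/dt| < tol,
    or None for a derivative that never drops below tol before t_max.
    """
    x, m = x0, m0
    t_x = t_m = None
    for k in range(int(t_max / dt)):
        t = k * dt
        dx = -x + I
        dm = eps * (-m + x)
        if t_x is None and abs(dx) < tol:
            t_x = t  # neuronal equilibrium reached (numerically)
        if t_m is None and abs(dm) < tol:
            t_m = t  # synaptic equilibrium reached (numerically)
        x += dt * dx
        m += dt * dm
    return t_x, t_m


t_x, t_m = settle_times()
print(f"neuron settles at t ~ {t_x:.1f}, synapse at t ~ {t_m:.1f}")
```

The neuron reaches $\dot{x} \approx 0$ long before the synapse reaches $\dot{m} \approx 0$, which is the timescale separation behind "neurons fluctuate faster than synapses." The toy synapse does not feed back into the neuron, so this sketch shows only the timescales, not the dilemma itself.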

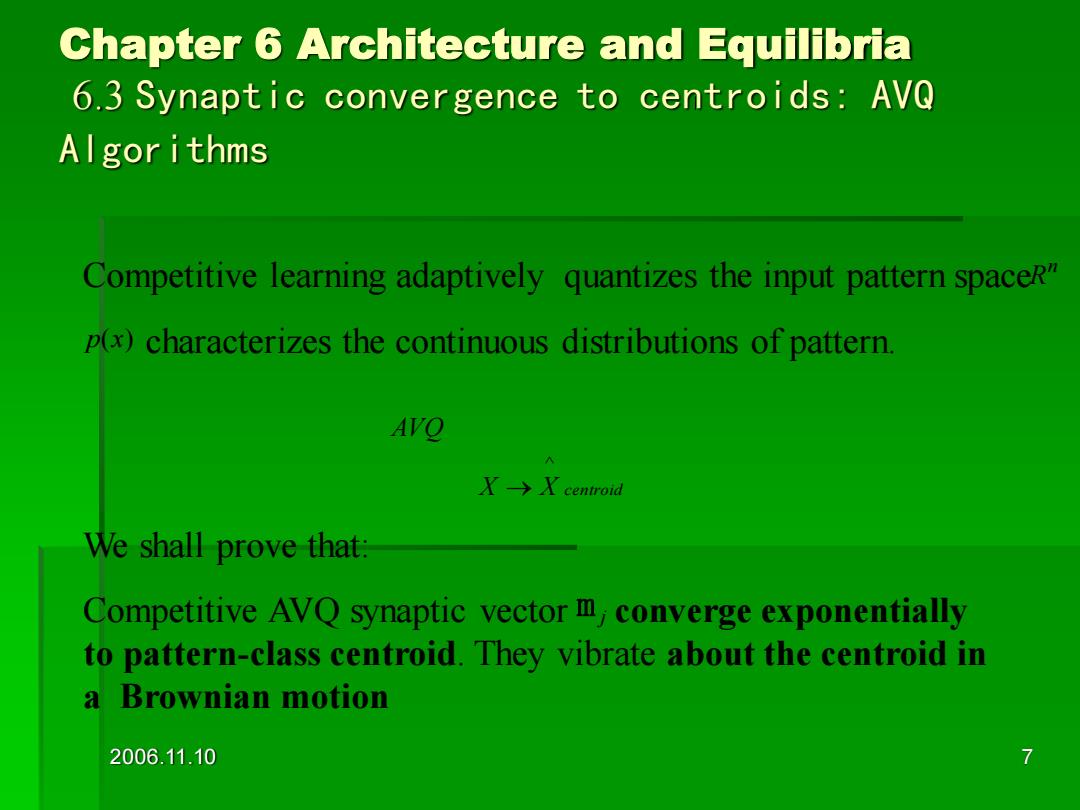

6.3 Synaptic convergence to centroids: AVQ Algorithms

Competitive learning adaptively quantizes the input pattern space $R^n$. The probability density function $p(\mathbf{x})$ characterizes the continuous distribution of patterns. Adaptive vector quantization (AVQ) maps a pattern $\mathbf{x}$ to a centroid estimate $\hat{\mathbf{x}}$.

We shall prove that competitive AVQ synaptic vectors $\mathbf{m}_j$ converge exponentially to pattern-class centroids. They then vibrate about the centroid in a Brownian motion.
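
A minimal Python sketch of the exponential-convergence claim, under simplifying assumptions: it Euler-integrates the averaged, noise-free dynamics $\dot{m} = \hat{x} - m$ for a scalar synaptic value with a unit rate constant (an assumption made for simplicity), and compares the error $|m(t) - \hat{x}|$ with the envelope $|m(0) - \hat{x}|\,e^{-t}$. The function name `averaged_error` is hypothetical.

```python
import math


def averaged_error(m0, centroid, t, dt=1e-3):
    """Euler-integrate dm/dt = centroid - m from m(0) = m0 up to time t.

    Noise-free, averaged dynamics with a unit rate constant (assumption).
    Returns the remaining error |m(t) - centroid|.
    """
    m = m0
    for _ in range(round(t / dt)):
        m += dt * (centroid - m)
    return abs(m - centroid)


m0, centroid = 2.0, 0.5
for t in (1.0, 2.0, 4.0):
    envelope = abs(m0 - centroid) * math.exp(-t)
    print(f"t={t}: error={averaged_error(m0, centroid, t):.5f}, envelope={envelope:.5f}")
```

The error shrinks by a factor of $e$ per unit time. The Brownian vibration about the centroid comes from the random samples and the noise term, both of which this sketch leaves out.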

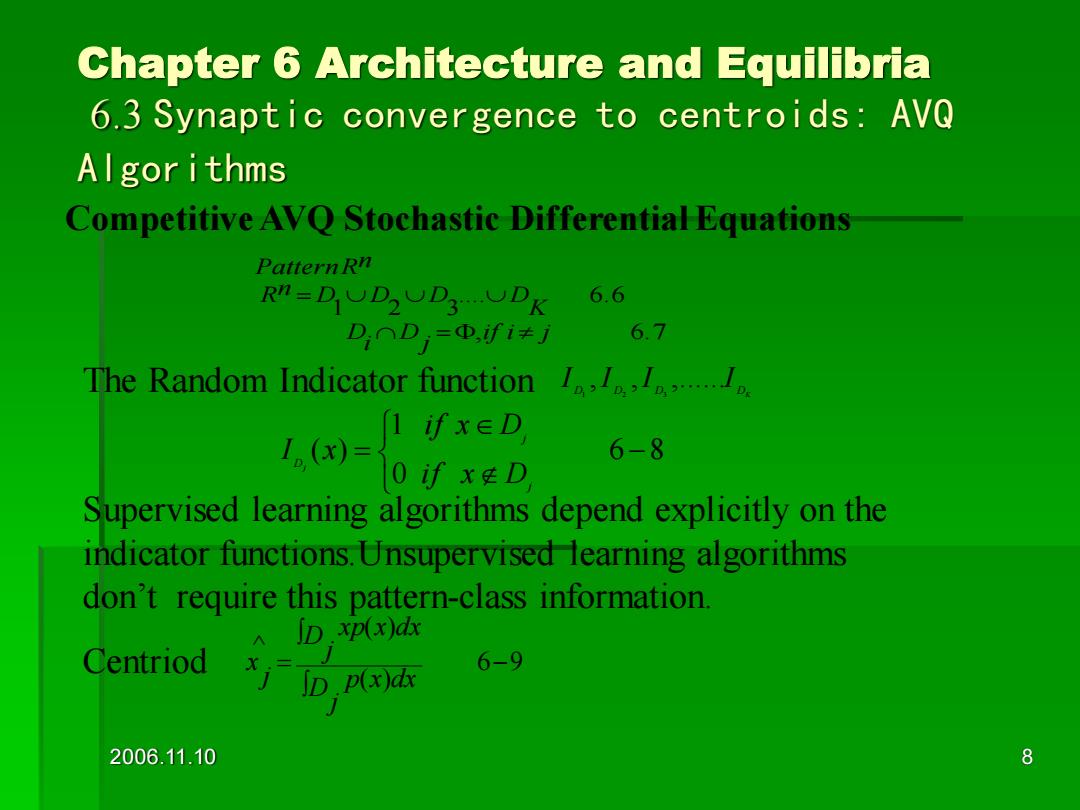

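Competitive AVQ Stochastic Differential Equations

The pattern space $R^n$ is partitioned into $K$ disjoint pattern classes:

$$R^n = D_1 \cup D_2 \cup D_3 \cup \cdots \cup D_K \tag{6-6}$$

$$D_i \cap D_j = \emptyset \quad \text{if } i \neq j \tag{6-7}$$

The random indicator functions $I_{D_1}, I_{D_2}, I_{D_3}, \ldots, I_{D_K}$ are

$$I_{D_j}(\mathbf{x}) = \begin{cases} 1 & \text{if } \mathbf{x} \in D_j \\ 0 & \text{if } \mathbf{x} \notin D_j \end{cases} \tag{6-8}$$

Supervised learning algorithms depend explicitly on the indicator functions. Unsupervised learning algorithms don't require this pattern-class information.

Centroid of class $D_j$:

$$\hat{\mathbf{x}}_j = \frac{\int_{D_j} \mathbf{x}\, p(\mathbf{x})\, d\mathbf{x}}{\int_{D_j} p(\mathbf{x})\, d\mathbf{x}} \tag{6-9}$$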
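
Equations (6-8) and (6-9) can be checked numerically. The sketch below assumes a one-dimensional pattern space $[0, 1)$ with the illustrative density $p(x) = 2x$ and a hypothetical two-class partition $D_1 = [0, 0.5)$, $D_2 = [0.5, 1)$. It approximates both integrals in (6-9) with the midpoint rule, using the indicator function to restrict each integral to $D_j$. For this density the exact centroids are $1/3$ and $7/9$.

```python
def indicator(x, lo, hi):
    """Indicator function of the interval D = [lo, hi), as in eq. (6-8)."""
    return 1.0 if lo <= x < hi else 0.0


def centroid(p, lo, hi, a=0.0, b=1.0, n=100_000):
    """Midpoint-rule estimate of the class centroid in eq. (6-9).

    p      -- probability density on the pattern space [a, b)
    lo, hi -- bounds of the class D_j = [lo, hi)
    """
    h = (b - a) / n
    num = den = 0.0
    for k in range(n):
        x = a + (k + 0.5) * h
        w = indicator(x, lo, hi) * p(x) * h  # I_Dj(x) p(x) dx
        num += x * w
        den += w
    return num / den


def p(x):
    return 2.0 * x  # illustrative density on [0, 1)


print(centroid(p, 0.0, 0.5), centroid(p, 0.5, 1.0))
```

The unsupervised algorithms never evaluate `indicator` themselves; the sketch uses it only to compute the target that the synaptic vectors should converge to.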

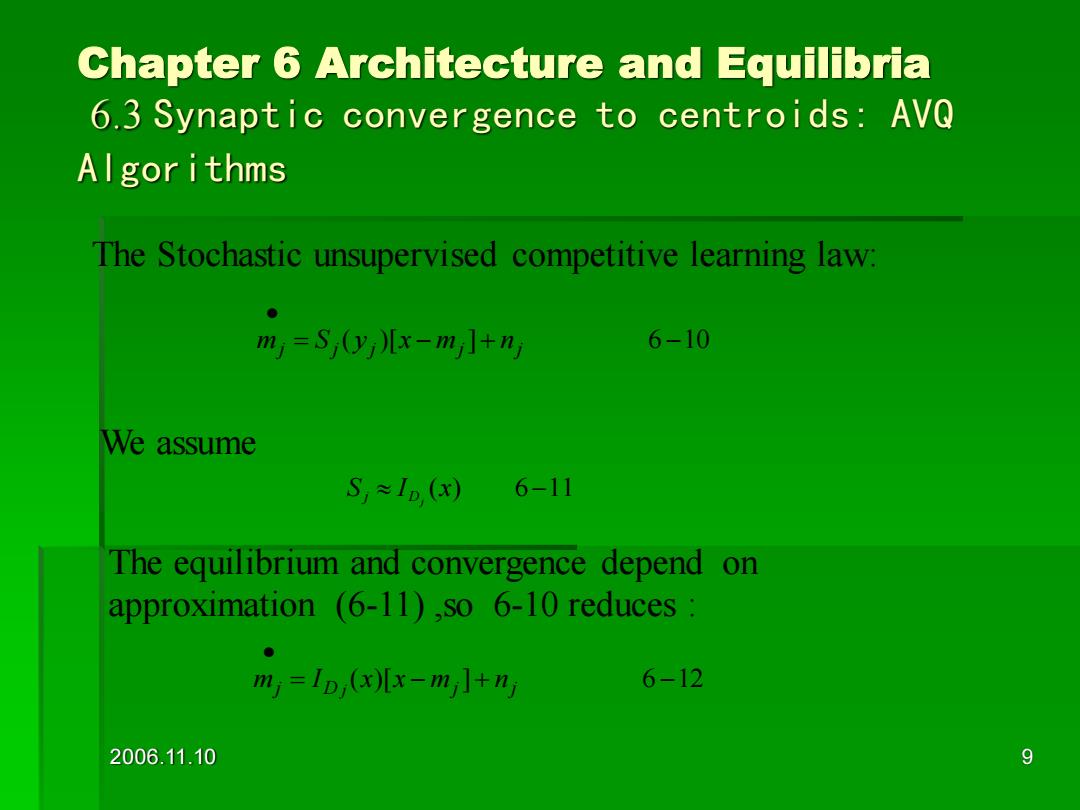

The stochastic unsupervised competitive learning law:

$$\dot{\mathbf{m}}_j = S_j(y_j)\,[\mathbf{x} - \mathbf{m}_j] + \mathbf{n}_j \tag{6-10}$$

We assume

$$S_j(y_j) \approx I_{D_j}(\mathbf{x}) \tag{6-11}$$

The equilibrium and convergence results depend on approximation (6-11), so (6-10) reduces to

$$\dot{\mathbf{m}}_j = I_{D_j}(\mathbf{x})\,[\mathbf{x} - \mathbf{m}_j] + \mathbf{n}_j \tag{6-12}$$
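
A minimal simulation of (6-12), under assumptions the slide does not state: a scalar pattern $x$ drawn from the uniform density on $[0, 1)$ at every time step, a single class $D_j = [0, 0.5)$ with centroid $\hat{x}_j = 0.25$, and the noise $n_j$ modeled as Gaussian white noise of intensity `sigma`, discretized with an Euler-Maruyama step of size `dt`. The function name `simulate_ucl` and all parameter values are hypothetical. After a transient, the synaptic value should wander about the centroid rather than settle exactly on it.

```python
import math
import random
import statistics


def simulate_ucl(m0=0.9, lo=0.0, hi=0.5, dt=0.01, sigma=0.02, steps=50_000, seed=0):
    """Euler-Maruyama path of dm/dt = I_Dj(x) [x - m] + n (eq. 6-12), scalar case."""
    rng = random.Random(seed)
    m = m0
    path = []
    for _ in range(steps):
        x = rng.random()                                     # x ~ Uniform[0, 1) (assumed p(x))
        ind = 1.0 if lo <= x < hi else 0.0                   # I_Dj(x), eq. (6-8)
        noise = sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)  # Gaussian white noise (assumption)
        m += ind * (x - m) * dt + noise
        path.append(m)
    return path


path = simulate_ucl()
tail = path[len(path) // 2:]  # discard the transient from m0
print(f"mean={statistics.mean(tail):.3f}, std={statistics.pstdev(tail):.3f}")
```

The time average of the tail sits near the centroid 0.25, while its nonzero spread is the Brownian-like vibration about the centroid.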

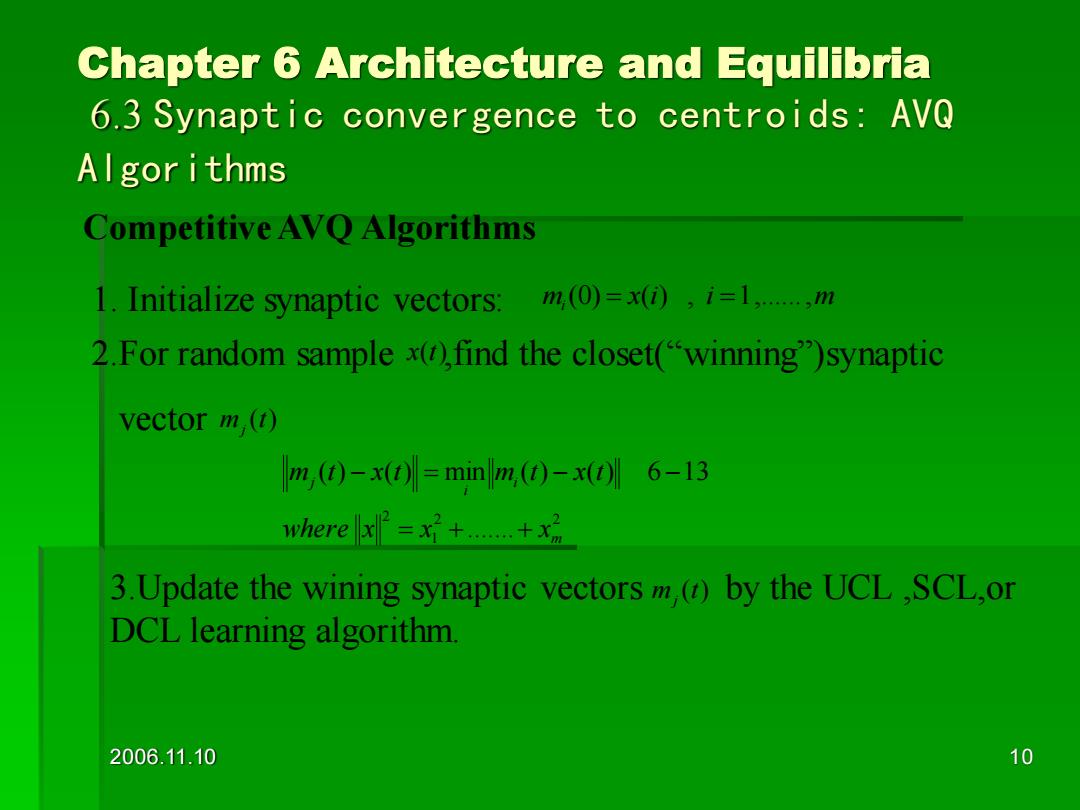

Competitive AVQ Algorithms

1. Initialize synaptic vectors: $\mathbf{m}_i(0) = \mathbf{x}(i)$, $i = 1, \ldots, m$.
2. For a random sample $\mathbf{x}(t)$, find the closest ("winning") synaptic vector $\mathbf{m}_j(t)$:
   $$\|\mathbf{m}_j(t) - \mathbf{x}(t)\| = \min_i \|\mathbf{m}_i(t) - \mathbf{x}(t)\| \tag{6-13}$$
   where $\|\mathbf{x}\|^2 = x_1^2 + \cdots + x_n^2$.
3. Update the winning synaptic vector $\mathbf{m}_j(t)$ by the UCL, SCL, or DCL (unsupervised, supervised, or differential competitive learning) algorithm.
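
The three steps can be sketched in Python for the UCL case only. Assumptions not fixed by the slide: Euclidean distance in (6-13); the discrete UCL update $\mathbf{m}_j(t+1) = \mathbf{m}_j(t) + c_t[\mathbf{x}(t) - \mathbf{m}_j(t)]$ applied to the winner only, with losing vectors unchanged; a linearly decreasing learning rate $c_t = c_0(1 - t/T)$; and samples drawn uniformly at random from a finite training set. The names `competitive_avq`, `nearest`, `c0`, and `iters` are hypothetical. SCL or DCL would change only step 3.

```python
import math
import random


def nearest(ms, x):
    """Index j of the winning vector: ||m_j - x|| = min_i ||m_i - x|| (eq. 6-13)."""
    return min(range(len(ms)), key=lambda i: math.dist(ms[i], x))


def competitive_avq(samples, k, iters=10_000, c0=0.1, seed=0):
    """Competitive AVQ with a UCL update of the winning vector only."""
    rng = random.Random(seed)
    ms = [list(samples[i]) for i in range(k)]  # step 1: m_i(0) = x(i)
    for t in range(iters):
        x = rng.choice(samples)                # step 2: random sample x(t) ...
        j = nearest(ms, x)                     # ... and its winning vector m_j(t)
        c = c0 * (1.0 - t / iters)             # decreasing learning rate (assumption)
        ms[j] = [mj + c * (xj - mj) for mj, xj in zip(ms[j], x)]  # step 3: UCL
    return ms


# Usage: two well-separated Gaussian clusters in R^2 (synthetic data).
data_rng = random.Random(1)
centers = [(0.0, 0.0), (5.0, 5.0)]
samples = []
for n in range(2000):
    cx, cy = centers[n % 2]  # alternate classes so the two initializing samples differ in class
    samples.append((data_rng.gauss(cx, 0.5), data_rng.gauss(cy, 0.5)))

ms = competitive_avq(samples, k=2)
print(ms)  # each synaptic vector should end up near one cluster center
```

Initializing from the first samples, as in step 1, can leave a vector stranded if those samples all come from one class; the usage data alternates classes to avoid that case.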
