Advanced Algorithms Hashing and Sketching 尹一通Nanjing University,2022Fall

尹⼀通 Nanjing University, 2022 Fall Advanced Algorithms Hashing and Sketching

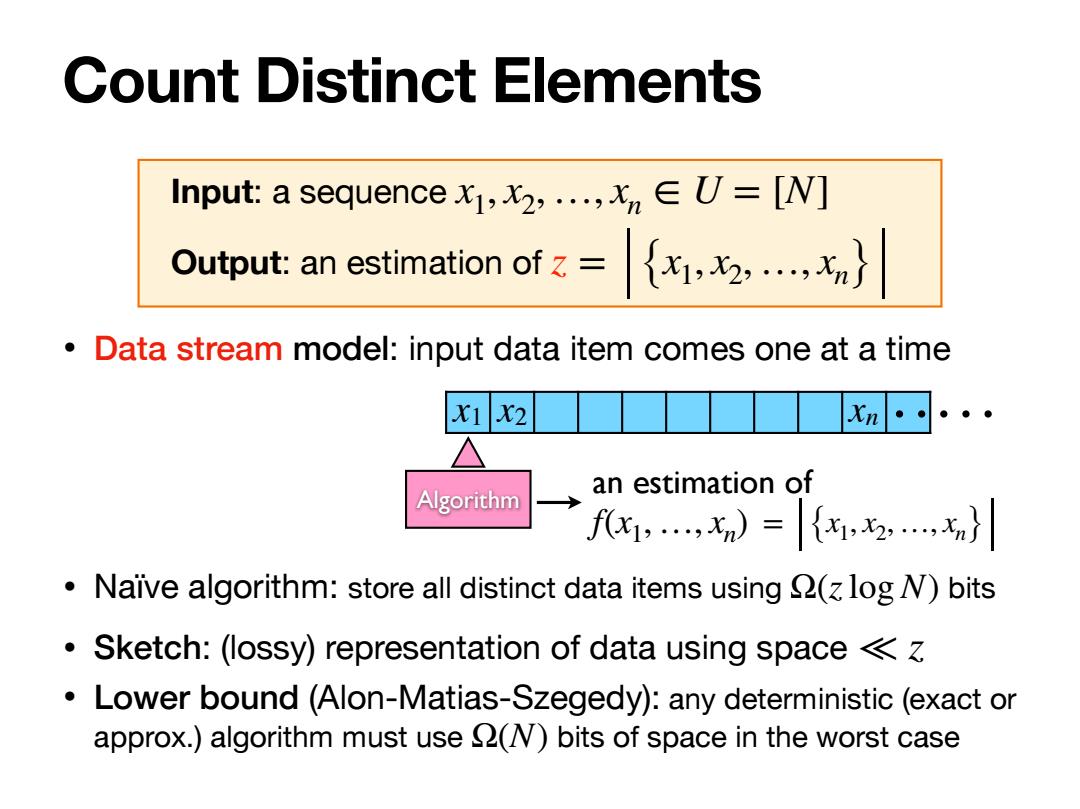

Count Distinct Elements Input:a sequence,,...,=[N] Output:an estimation of= {x,,} Data stream model:input data item comes one at a time x12 Xn Algorithm an estimation of f,X)={,,,x} Naive algorithm:store all distinct data items using (log N)bits Sketch:(lossy)representation of data using space Lower bound (Alon-Matias-Szegedy):any deterministic(exact or approx.)algorithm must use (N)bits of space in the worst case

Count Distinct Elements Input: a sequence Output: an estimation of x1, x2,…, xn ∈ U = [N] z = {x1, x2, …, xn} • Data stream model: input data item comes one at a time • Naïve algorithm: store all distinct data items using bits • Sketch: (lossy) representation of data using space • Lower bound (Alon-Matias-Szegedy): any deterministic (exact or approx.) algorithm must use bits of space in the worst case Ω(zlog N) ≪ z Ω(N) x1 x2 xn Algorithm an estimation of f(x1,…, xn) = {x1, x2,…, xn}

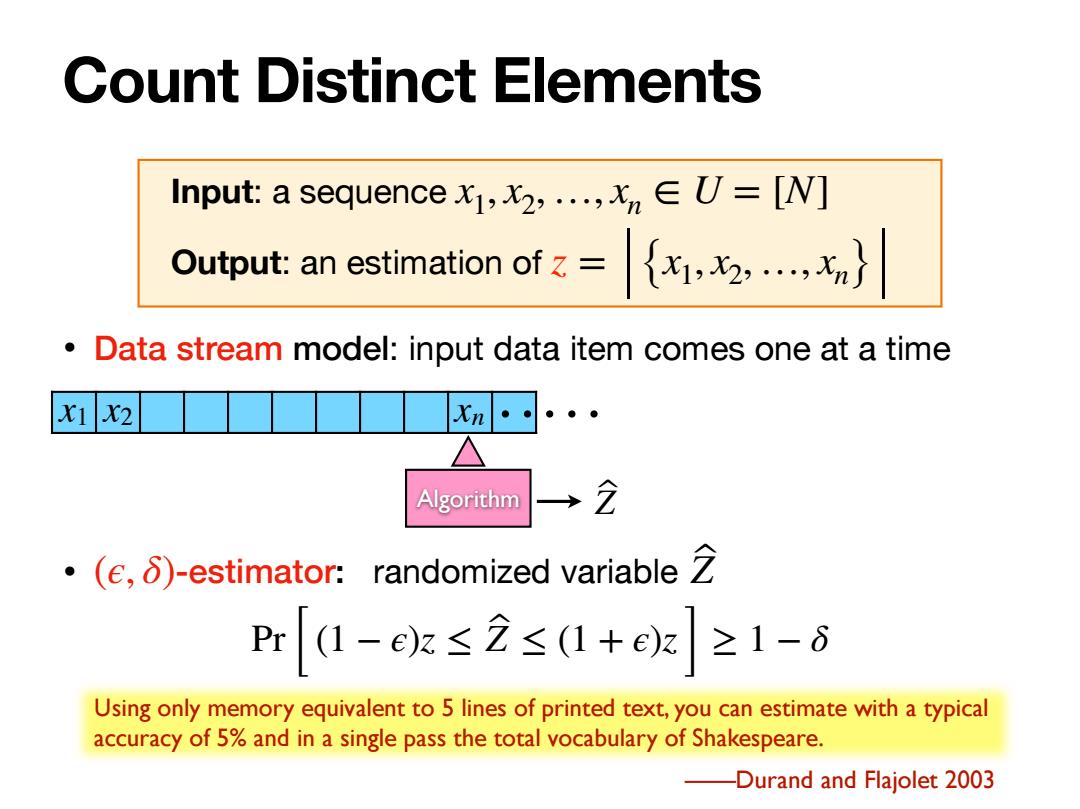

Count Distinct Elements Input:a sequence,,...,=[N] Output:an estimation of= {出,,} Data stream model:input data item comes one at a time X1 X2 Xn Algorithm 2 ·(e,δ)-estimator: randomized variable Pr1-ek≤2≤1+ez≥1-6 Using only memory equivalent to 5 lines of printed text,you can estimate with a typical accuracy of 5%and in a single pass the total vocabulary of Shakespeare. -Durand and Flajolet 2003

Count Distinct Elements • Data stream model: input data item comes one at a time • (ϵ, δ)-estimator: randomized variable Z ̂ Pr [ (1 − ϵ)z ≤ Z ̂ ≤ (1 + ϵ)z ] ≥ 1 − δ Using only memory equivalent to 5 lines of printed text, you can estimate with a typical accuracy of 5% and in a single pass the total vocabulary of Shakespeare. ——Durand and Flajolet 2003 x1 x2 xn Algorithm Z ̂ Input: a sequence Output: an estimation of x1, x2,…, xn ∈ U = [N] z = {x1, x2, …, xn}

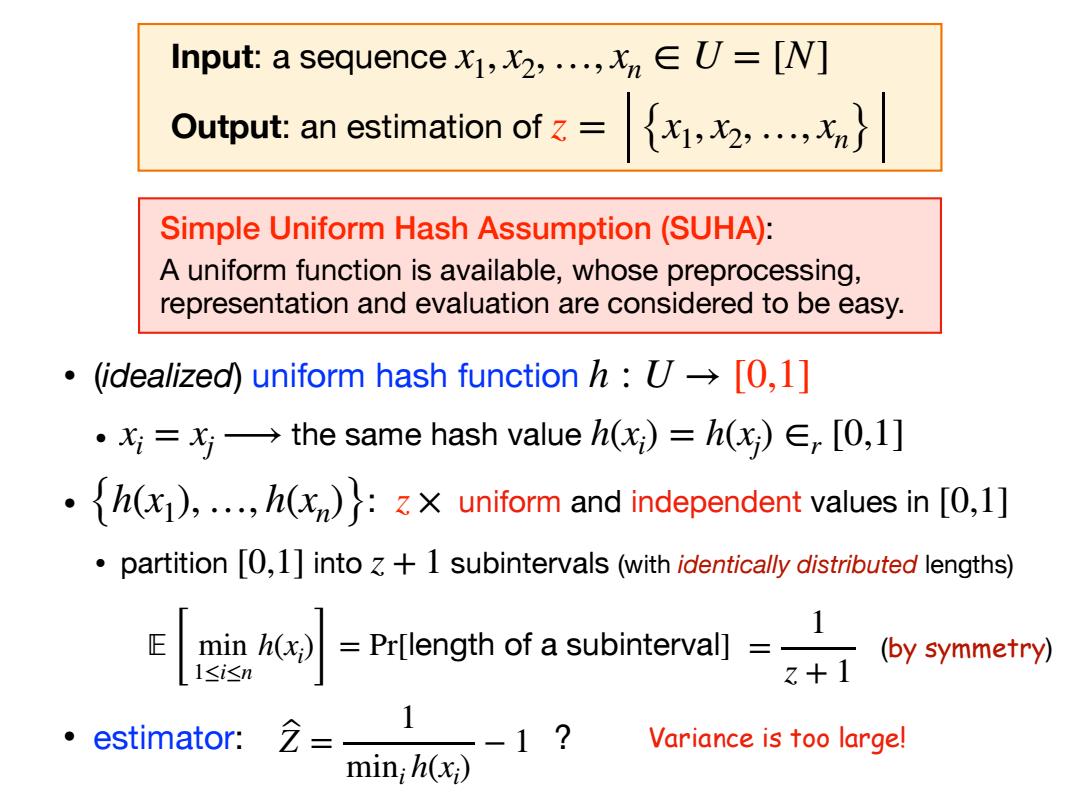

Input:a sequencex,,...,=[N] Output:an estimation of ={,2,,} Simple Uniform Hash Assumption(SUHA): A uniform function is available,whose preprocessing, representation and evaluation are considered to be easy. (idealized)uniform hash function h:U->[0,1] ·x:=x→the same hash value(x)=h(x)∈,[0,l] h),...,h:uniform and independent values in [,1] partition [0,1]into +1 subintervals (with identically distributed lengths) Prlongth of a subintervall 1 (by symmetry) 。estimator: 2= -1? Variance is too large! min;h(xi)

• (idealized) uniform hash function • the same hash value • : uniform and independent values in • partition into subintervals (with identically distributed lengths) h : U → [0,1] xi = xj⟶ h(xi ) = h(xj ) ∈r [0,1] {h(x1), …, h(xn)} z × [0,1] [0,1] z + 1 Simple Uniform Hash Assumption (SUHA): A uniform function is available, whose preprocessing, representation and evaluation are considered to be easy. 𝔼 [ min 1≤i≤n h(xi ) ] = Pr[length of a subinterval] = 1 z + 1 (by symmetry) • estimator: ? Z ̂ = 1 mini h(xi ) − 1 Variance is too large! Input: a sequence Output: an estimation of x1, x2,…, xn ∈ U = [N] z = {x1, x2, …, xn}

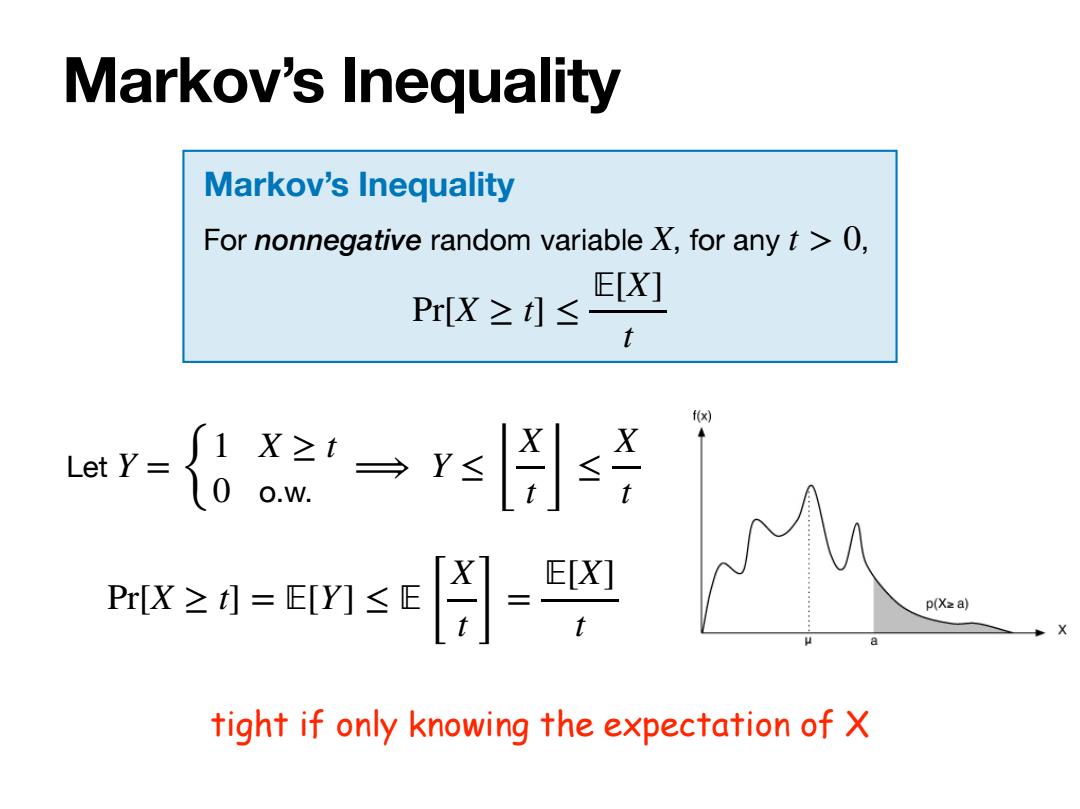

Markov's Inequality Markov's Inequality For nonnegative random variable X,for any t >0, E[X] Pr[X≥t1≤ t f(x) LetY= 1 X 0 PX≥0=n≤E月- E[X] p(Xza) tight if only knowing the expectation of X

Markov’s Inequality Markov’s Inequality For nonnegative random variable , for any , X t > 0 Pr[X ≥ t] ≤ 𝔼[X] t Let Y = { 1 X ≥ t 0 o.w. ⟹ Y ≤ ⌊ X t ⌋ ≤ X t Pr[X ≥ t] = 𝔼[Y] ≤ 𝔼 [ X t ] = 𝔼[X] t tight if only knowing the expectation of X