Gradient descent



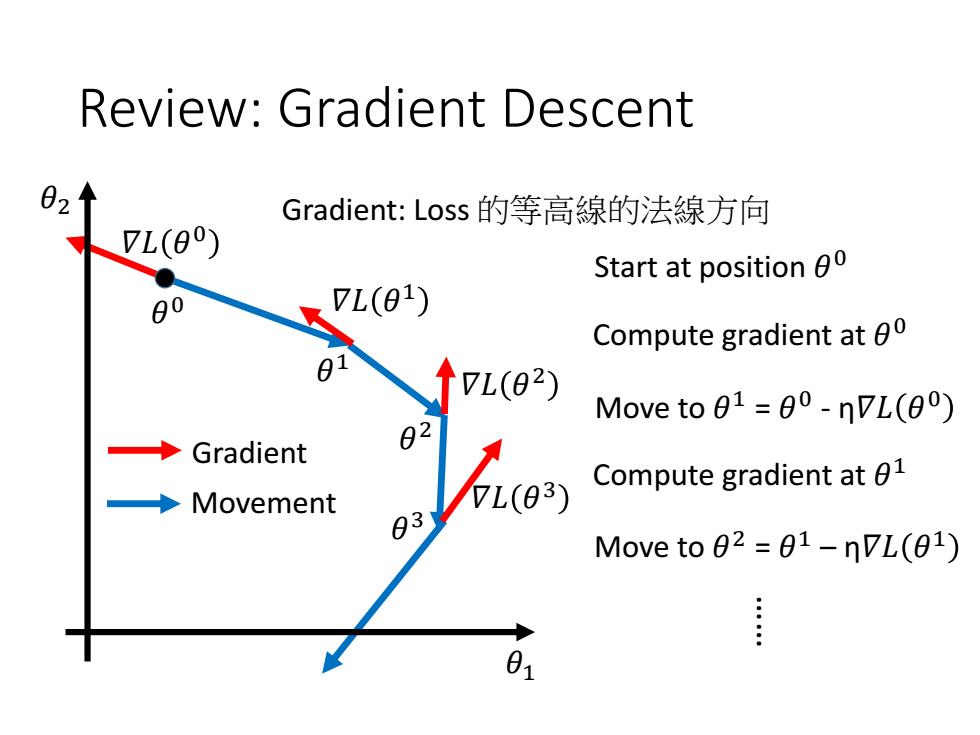

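Review: Gradient Descent

In step 3, we have to solve the following optimization problem:

$$\theta^* = \arg\min_{\theta} L(\theta)$$

where $L$ is the loss function and $\theta$ are the parameters.

Suppose that $\theta$ has two variables $\{\theta_1, \theta_2\}$. Randomly start at

$$\theta^0 = \begin{bmatrix} \theta_1^0 \\ \theta_2^0 \end{bmatrix}$$

The gradient of the loss is the vector of partial derivatives:

$$\nabla L(\theta) = \begin{bmatrix} \partial L(\theta) / \partial \theta_1 \\ \partial L(\theta) / \partial \theta_2 \end{bmatrix}$$

First update:

$$\begin{bmatrix} \theta_1^1 \\ \theta_2^1 \end{bmatrix} = \begin{bmatrix} \theta_1^0 \\ \theta_2^0 \end{bmatrix} - \eta \begin{bmatrix} \partial L(\theta^0) / \partial \theta_1 \\ \partial L(\theta^0) / \partial \theta_2 \end{bmatrix}, \qquad \text{i.e.} \quad \theta^1 = \theta^0 - \eta \nabla L(\theta^0)$$

Second update:

$$\begin{bmatrix} \theta_1^2 \\ \theta_2^2 \end{bmatrix} = \begin{bmatrix} \theta_1^1 \\ \theta_2^1 \end{bmatrix} - \eta \begin{bmatrix} \partial L(\theta^1) / \partial \theta_1 \\ \partial L(\theta^1) / \partial \theta_2 \end{bmatrix}, \qquad \text{i.e.} \quad \theta^2 = \theta^1 - \eta \nabla L(\theta^1)$$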


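Review: Gradient Descent

Gradient: the direction normal to the contour lines of the loss.

1. Start at position $\theta^0$.
2. Compute the gradient at $\theta^0$.
3. Move to $\theta^1 = \theta^0 - \eta \nabla L(\theta^0)$.
4. Compute the gradient at $\theta^1$.
5. Move to $\theta^2 = \theta^1 - \eta \nabla L(\theta^1)$.
6. Continue in the same way.

(Figure: contour plot of the loss over $\theta_1$ and $\theta_2$, showing the iterates $\theta^0, \theta^1, \theta^2, \theta^3$, the gradient $\nabla L(\theta^i)$ at each iterate, and the movement taken from it.)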
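The loop above (start somewhere, compute the gradient, move against it) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the quadratic loss, its minimum at (3, -1), the fixed starting point (the slide starts at a random point), the learning rate 0.1, and the names `loss`, `grad`, and `gradient_descent` are all hypothetical choices, not taken from the slides.

```python
def loss(theta):
    # Hypothetical two-parameter loss with its minimum at (3, -1).
    t1, t2 = theta
    return (t1 - 3.0) ** 2 + 2.0 * (t2 + 1.0) ** 2


def grad(theta):
    # Partial derivatives of the loss above with respect to theta_1 and theta_2.
    t1, t2 = theta
    return [2.0 * (t1 - 3.0), 4.0 * (t2 + 1.0)]


def gradient_descent(theta0, eta, num_updates):
    # Repeatedly apply theta^i = theta^(i-1) - eta * grad L(theta^(i-1)).
    theta = list(theta0)
    history = [list(theta)]
    for _ in range(num_updates):
        g = grad(theta)
        theta = [t - eta * gi for t, gi in zip(theta, g)]
        history.append(list(theta))
    return theta, history


# Fixed start for reproducibility (an assumption; the slide starts randomly).
theta_final, history = gradient_descent([0.0, 0.0], eta=0.1, num_updates=100)
print("final parameters:", theta_final)
print("final loss:", loss(theta_final))
```

Here `history[1]` corresponds to $\theta^1$ and `history[2]` to $\theta^2$ in the notation above, so the first few entries can be compared directly with a hand calculation.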


Gradient Descent Tip 1: Tuning your learning rates

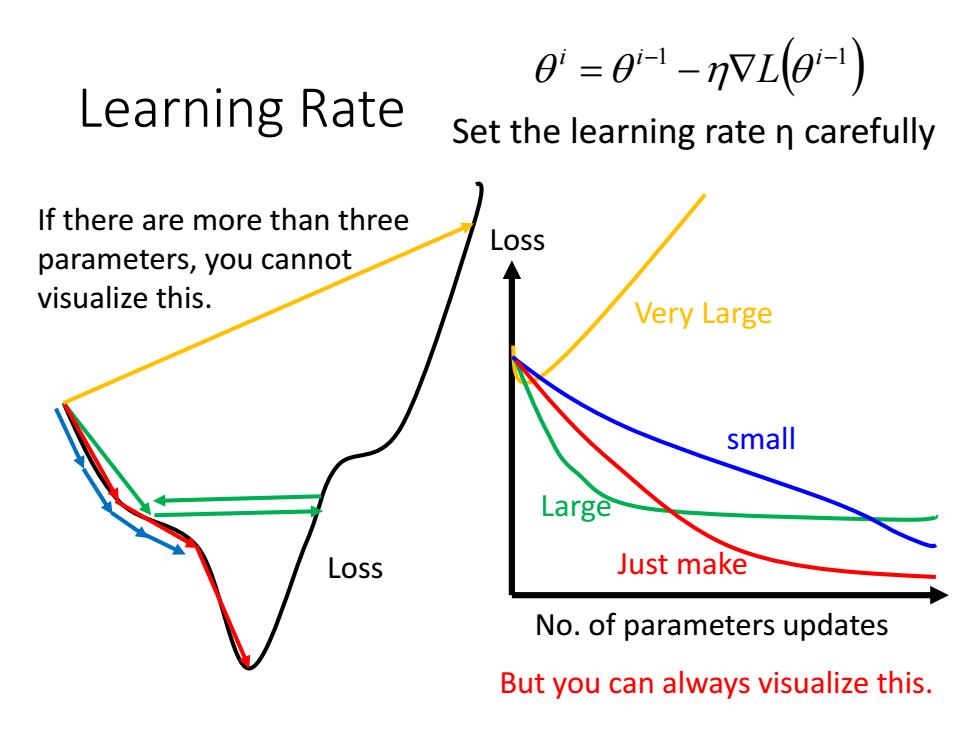


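Learning Rate

$$\theta^i = \theta^{i-1} - \eta \nabla L(\theta^{i-1})$$

Set the learning rate $\eta$ carefully.

If there are more than three parameters, you cannot visualize the loss as a function of the parameters. But you can always plot the loss against the number of parameter updates.

(Figure: loss versus number of parameter updates, with one curve for each of four learning rates: small, just right, large, and very large.)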
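To make the loss-versus-updates diagnostic concrete, here is a minimal sketch that records the loss after every update for several learning rates. The one-dimensional loss $L(\theta) = \theta^2$, the starting point, the four values of $\eta$, and the name `loss_curve` are assumptions chosen for illustration. On this particular loss, $\eta = 1.0$ is a knife-edge case in which the parameter flips sign on every update and the loss never changes.

```python
def loss_curve(eta, num_updates=20, theta0=1.0):
    # Run gradient descent on the hypothetical loss L(theta) = theta**2
    # and return the loss after each update (index 0 is the starting loss).
    theta = theta0
    losses = [theta ** 2]
    for _ in range(num_updates):
        gradient = 2.0 * theta          # dL/dtheta for L(theta) = theta**2
        theta = theta - eta * gradient  # theta^i = theta^(i-1) - eta * gradient
        losses.append(theta ** 2)
    return losses


# Assumed learning rates, one per regime named on the slide.
curves = {
    "small": loss_curve(0.01),
    "just right": loss_curve(0.3),
    "large": loss_curve(1.0),
    "very large": loss_curve(1.1),
}

for name, losses in curves.items():
    print(f"{name:>10}: start loss {losses[0]:.3g}, final loss {losses[-1]:.3g}")
```

Plotting each list in `curves` against its index gives the kind of picture the slide describes, and it works no matter how many parameters the model has, because only the scalar loss is recorded.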