MACHINE LEARNING BERKELEY Naive Solution (imageGPT) Another problem:time complexity o Recall:transformers are O(n^2)w.r.t.input length o AND input length is O(n^2)w.r.t.length of each side o 256x 256 image=>65536 pixels o For reference,BERT only has a max length of 512 tokens ● Solution:just use smaller images Imao o Max size of 64 x 64 Trained on a similar objective to language models(next pixel prediction instead of next token prediction)

Naive Solution (imageGPT) ● Another problem: time complexity :( ○ Recall: transformers are O(n^2) w.r.t. input length ○ AND input length is O(n^2) w.r.t. length of each side ○ 256 x 256 image => 65536 pixels ○ For reference, BERT only has a max length of 512 tokens ● Solution: just use smaller images lmao ○ Max size of 64 x 64 ● Trained on a similar objective to language models (next pixel prediction instead of next token prediction)

MACHINE LEARNING BERKELEY The good PRE-TRAINED ON ●' Nice image representations EVALUATION MODEL ACCURACY LARSLAE CIFAR-10 ResNet-15210 94.0 ● SOTA on semi-supervised classification Linear Probe SimCLR12 95.3 o Task:classification with limited labeled samples iGPT-L 32x32 96.3 CIFAR-100 ResNet-152 78.0 0 Model:linear classifer on iGPT representations Linear Probe SimCLR 80.2 o Competitive results with a naive method iGPT-L32x32 82.8 lots of compute ● Nice image generations o Effective at modeling visual information

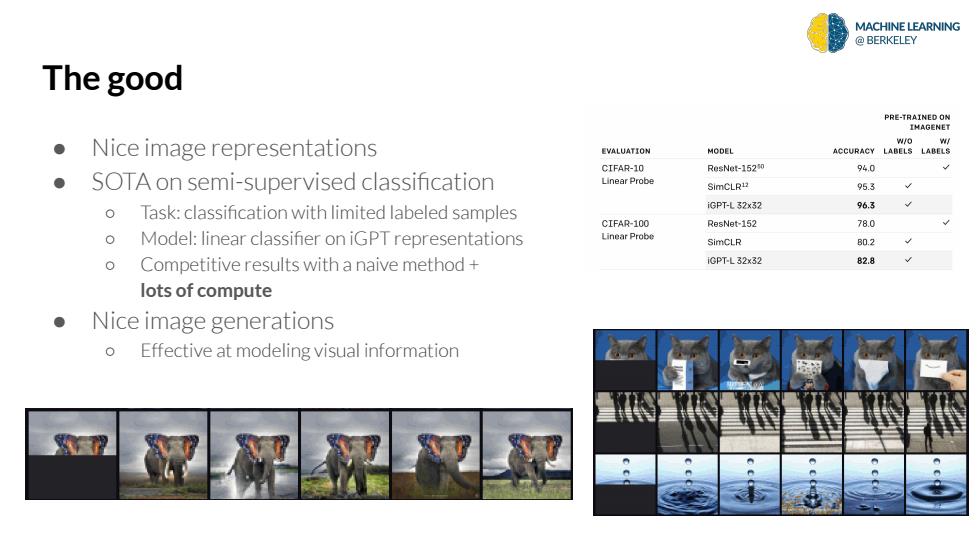

The good ● Nice image representations ● SOTA on semi-supervised classification ○ Task: classification with limited labeled samples ○ Model: linear classifier on iGPT representations ○ Competitive results with a naive method + lots of compute ● Nice image generations ○ Effective at modeling visual information

MACHINE LEARNING BERKELEY The bad lots of compute

The bad lots of compute

MACHINE LEARNING BERKELEY The bad ●' "We train iGPT-S,iGPT-M,and iGPT-L,transformers containing 76M,455M,and 1.4B parameters respectively,on ImageNet.We also train iGPT-XL,a 6.8 billion parameter transformer,on a mix of ImageNet and images from the web." ● "iGPT-L was trained for roughly 2500 V100-days while a similarly performing MoCo model can be trained in roughly 70 V100-days" o For reference,MoCo is another self-supervised model but it has a ResNet backbone that is capable of handling a 224 x 224 image resolution All that for only a 64x64 resolution!

The bad ● “We train iGPT-S, iGPT-M, and iGPT-L, transformers containing 76M, 455M, and 1.4B parameters respectively, on ImageNet. We also train iGPT-XL, a 6.8 billion parameter transformer, on a mix of ImageNet and images from the web.” ● “iGPT-L was trained for roughly 2500 V100-days while a similarly performing MoCo model can be trained in roughly 70 V100-days” ○ For reference, MoCo is another self-supervised model but it has a ResNet backbone that is capable of handling a 224 x 224 image resolution ● All that for only a 64x64 resolution!

MACHINE LEARNING BERKELEY So...why? ● Mostly a proof of concept Paradigm of transformers +m a ss i ve self-supervised pre-training but applied to a new domain o A general method for learning representations o Same method,new modes

So… why? ● Mostly a proof of concept ● Paradigm of transformers + m a s s i v e self-supervised pre-training but applied to a new domain ○ A general method for learning representations ○ Same method, new modes