2021级大数据专业机器学习 广工业大学 GUANGDONG UNNERSITY OF TECHNOLOGY BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding 授课:周郭许 庆工业大学

2021级大数据专业机器学习 授课: 周郭许

Outline Research context Main ideas BERT Experiments Conclusions 国产之小丝

Outline 2024/5/13 2 Research context Main ideas BERT Experiments Conclusions

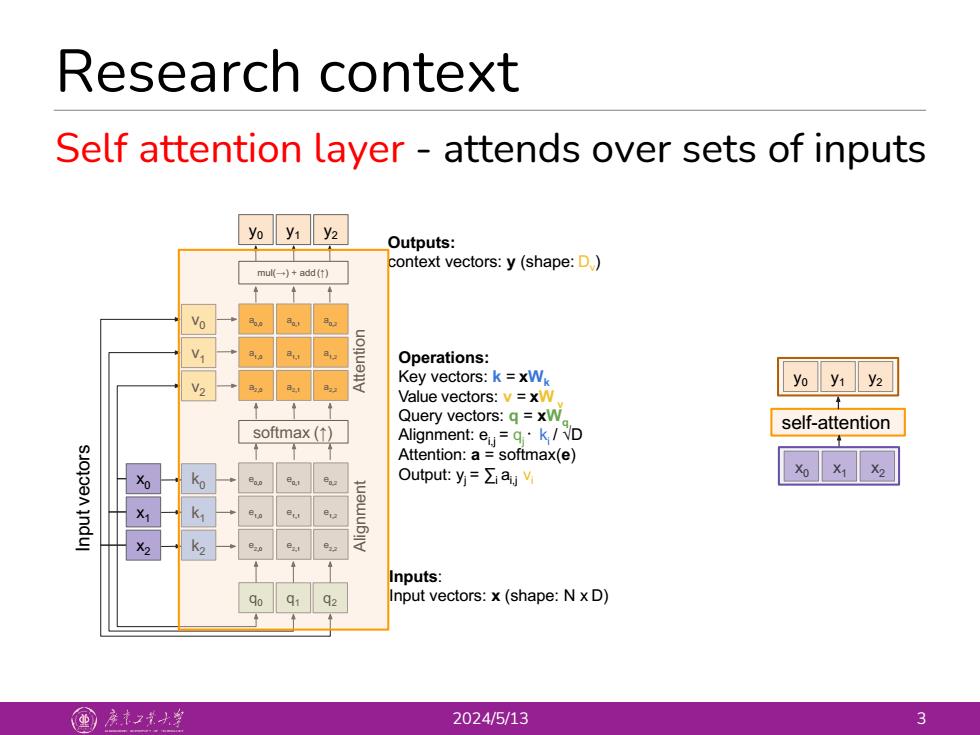

Research context Self attention layer-attends over sets of inputs yo y1 y2 Outputs: context vectors:y(shape:D) mu一)+add(t) Vo Operations: V2 Key vectors:k =xW yo y1 y2 Value vectors:v=xW Query vectors:q=xW self-attention softmax(↑) Alignment:e=g·k/D Attention:a softmax(e) sJojoen indul Ko Output::y=∑a, K> Inputs: Input vectors:x(shape:N x D) 国产之大丝 2024/5/13 3

Research context 2024/5/13 3 mul(→) + add (↑) Self attention layer - attends over sets of inputs Alignment q0 Attention Inputs: Input vectors: x (shape: N x D) softmax (↑) y1 Outputs: context vectors: y (shape: Dv) Operations: Key vectors: k = xWk Value vectors: v = xW v Query vectors: q = xWq Alignment: ei,j = qj ᐧ ki / √D Attention: a = softmax(e) Output: yj = ∑i ai,j vi x2 x1 x0 e2,0 e1,0 e0,0 a2,0 a1,0 a0,0 e2,1 e1,1 e0,1 e2,2 e1,2 e0,2 a2,1 a1,1 a0,1 a2,2 a1,2 a0,2 q1 q2 y0 y2 Input vectors k2 k1 k0 v2 v1 v0 self-attention x0 x1 x2 y0 y1 y2

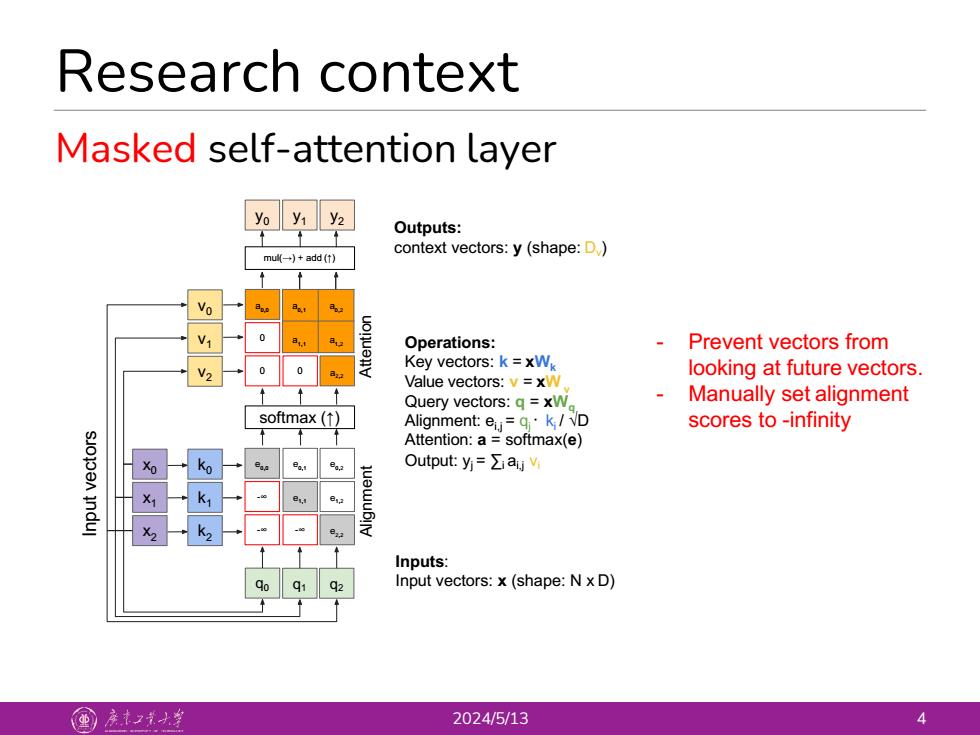

Research context Masked self-attention layer yo y1 y2 Outputs: mul(-)+add (t) context vectors:y (shape:D) Vo Operations: Prevent vectors from Key vectors:k=xW 2 looking at future vectors. Value vectors:v =xW Query vectors:g =xW Manually set alignment softmax (1) Alignment:e.=q·k/VD scores to-infinity Attention:a softmax(e) a. Output::y=∑ay Inputs: q1 Input vectors:x(shape:N x D) 重)亲大学 2024/5/13 4

Research context 2024/5/13 4 Masked self-attention layer mul(→) + add (↑) Alignment q0 Attention Inputs: Input vectors: x (shape: N x D) softmax (↑) y1 Outputs: context vectors: y (shape: Dv) Operations: Key vectors: k = xWk Value vectors: v = xW v Query vectors: q = xWq Alignment: ei,j = qj ᐧ ki / √D Attention: a = softmax(e) Output: yj = ∑i ai,j vi x2 x1 x0 -∞ -∞ 0 0 a0,0 -∞ e2,2 e1,1 e1,2 e0,0 e0,1 e0,2 0 a2,2 a0,1 a0,2 a1,1 a1,2 q1 q2 y2 y0 Input vectors k2 k1 k0 v2 v1 v0 - Prevent vectors from looking at future vectors. - Manually set alignment scores to -infinity

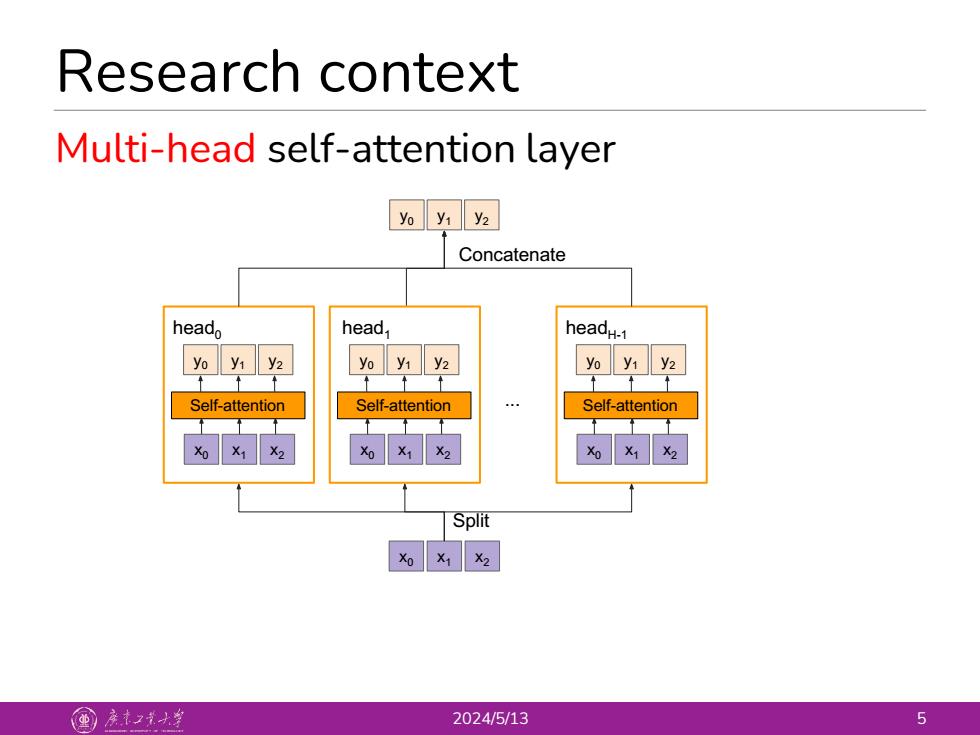

Research context Multi-head self-attention layer yo y:y2 Concatenate head head headH-1 yo y1 y2 yo y1 y2 yo y1 y2 ↑↑↑ Self-attention Self-attention Self-attention Xo x1 x2 Split X1X2 国产之大当 2024/5/13 5

Research context 2024/5/13 5 Multi-head self-attention layer x2 x1 x0 Self-attention y0 y1 y2 x2 x1 x0 Self-attention y0 y1 y2 x2 x1 x0 Self-attention y0 y1 y2 head0 head1 ... headH-1 x2 x1 x0 y0 y1 y2 Concatenate Split