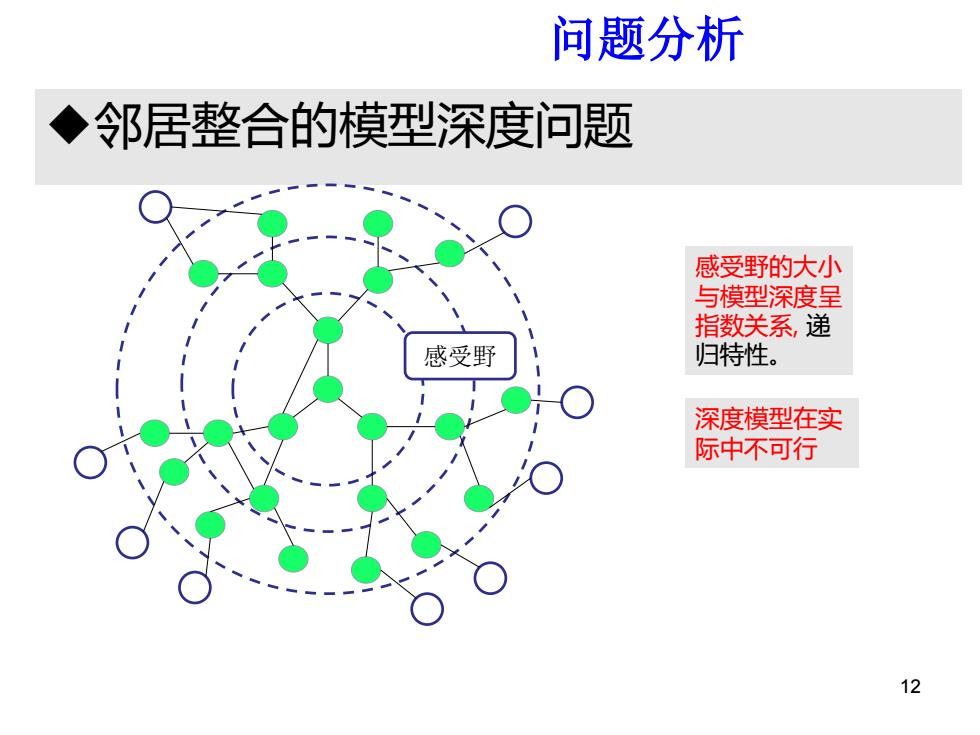

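Spatial-domain convolution: problem analysis

The model-depth problem of neighborhood aggregation: because aggregation is recursive, the receptive field grows exponentially with model depth, so deep models are infeasible in practice.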
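To make the exponential growth concrete, here is a minimal sketch that counts how many nodes fall inside the receptive field of a root node as the number of aggregation layers increases. The complete tree with branching factor 3 and the helper names `complete_tree` and `receptive_field_size` are illustrative assumptions, not taken from the slides.

```python
from collections import deque


def complete_tree(branching, height):
    """Adjacency lists of a complete tree; node 0 is the root (toy graph)."""
    adj = {0: []}
    level = [0]
    next_id = 1
    for _ in range(height):
        new_level = []
        for parent in level:
            for _ in range(branching):
                adj[parent].append(next_id)
                adj[next_id] = [parent]
                new_level.append(next_id)
                next_id += 1
        level = new_level
    return adj


def receptive_field_size(adj, root, depth):
    """Number of distinct nodes within `depth` hops of `root` (plain BFS)."""
    seen = {root}
    frontier = deque([(root, 0)])
    while frontier:
        node, d = frontier.popleft()
        if d == depth:
            continue  # nodes at the depth limit are counted but not expanded
        for nb in adj[node]:
            if nb not in seen:
                seen.add(nb)
                frontier.append((nb, d + 1))
    return len(seen)


adj = complete_tree(branching=3, height=4)
sizes = [receptive_field_size(adj, 0, k) for k in range(5)]
print(sizes)  # [1, 4, 13, 40, 121]
```

Each extra layer multiplies the newly reached nodes by the branching factor, which is why a k-layer model on a dense graph quickly touches a large part of the network.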

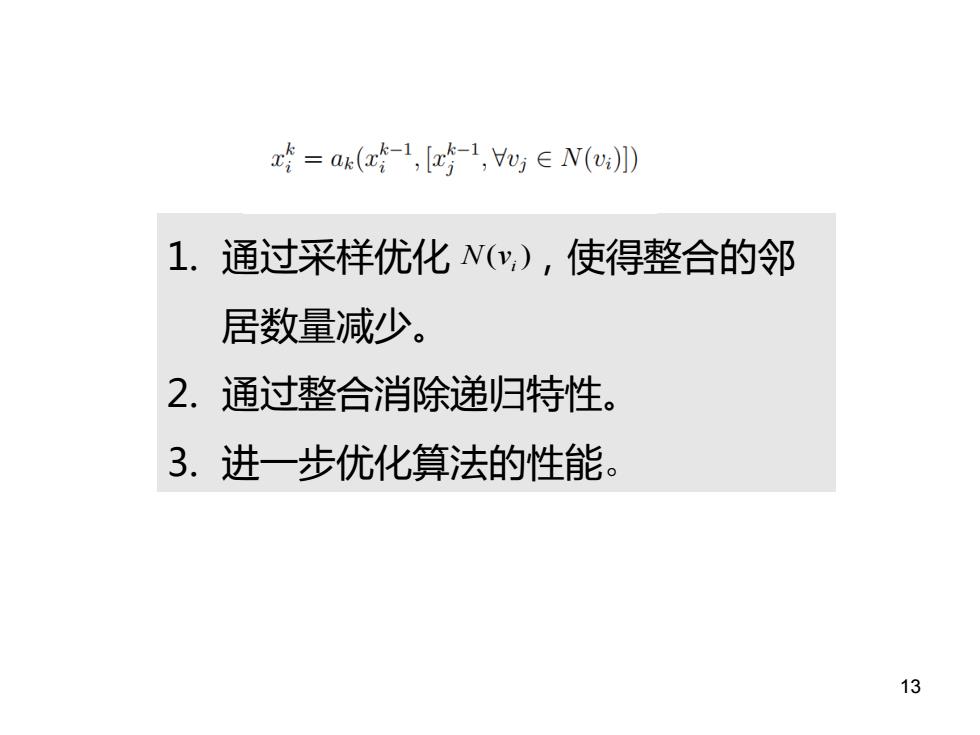

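Approach of the paper

$$x_i^{k} = \sigma\left(x_i^{k-1},\ \{x_j^{k-1},\ v_j \in N(v_i)\}\right)$$

1. Optimize the sampling of $N(v_i)$ so that fewer neighbors are aggregated.
2. Eliminate the recursive property through the aggregation step.
3. Further improve the performance of the algorithm.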
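The second point can be illustrated with a small sketch that contrasts direct recursion with a layer-by-layer computation that reuses the previous layer's embeddings. This is only an illustration under stated assumptions: the mean aggregator, the 6-node toy graph, and the function names `recursive_embed` and `layerwise_embed` are hypothetical and are not the aggregator or code of the paper.

```python
import numpy as np


def recursive_embed(adj, x0, i, k, counter):
    """k-layer embedding of node i by direct recursion (assumed mean aggregator)."""
    counter[0] += 1  # count every call to expose the recursive blow-up
    if k == 0:
        return x0[i]
    own = recursive_embed(adj, x0, i, k - 1, counter)
    neigh = [recursive_embed(adj, x0, j, k - 1, counter) for j in adj[i]]
    return 0.5 * (own + np.mean(neigh, axis=0))


def layerwise_embed(adj, x0, k):
    """Same embeddings for all nodes, computed one layer at a time.

    Layer k only reads the stored table of layer k-1, so nothing is recursed.
    """
    x = x0.copy()
    for _ in range(k):
        x = np.stack([0.5 * (x[i] + x[adj[i]].mean(axis=0))
                      for i in range(len(adj))])
    return x


n = 6
# Toy undirected graph: a ring with chords, every node has 3 neighbors.
adj = [[(i - 1) % n, (i + 1) % n, (i + 3) % n] for i in range(n)]
rng = np.random.default_rng(0)
x0 = rng.normal(size=(n, 4))

counter = [0]
rec = recursive_embed(adj, x0, 0, 3, counter)
lay = layerwise_embed(adj, x0, 3)

print(np.allclose(rec, lay[0]))  # True
print(counter[0], "recursive calls vs", n * 3, "layer-wise node updates")
```

Both routines return the same vector for node 0, but the recursive version needs 85 calls for a single node at depth 3, while the layer-wise version performs 18 node updates for all nodes together.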

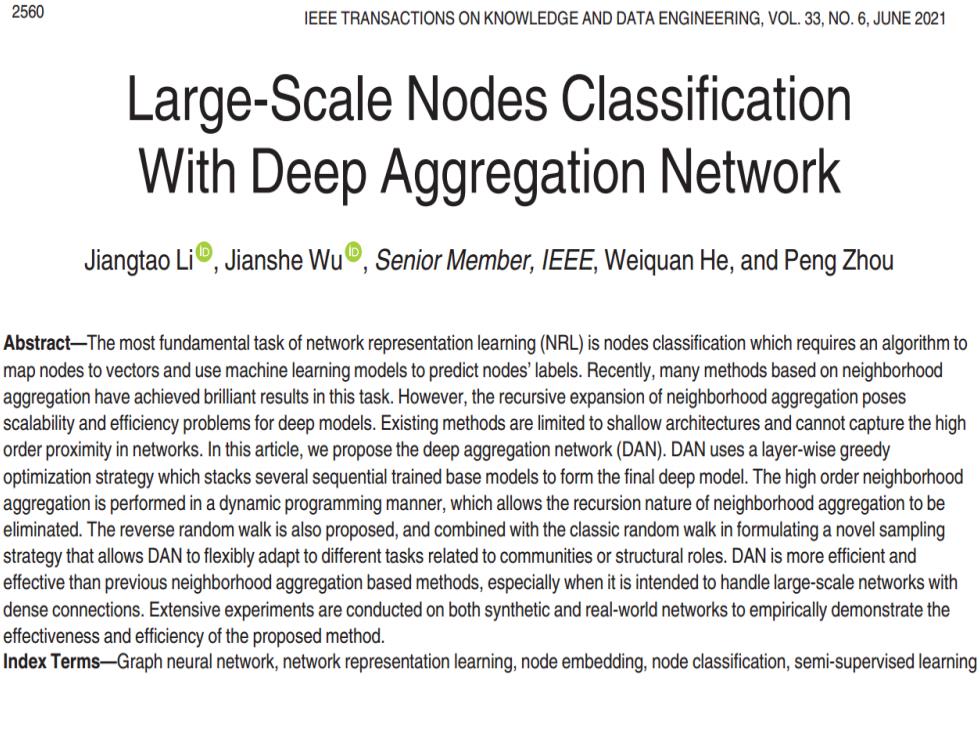

IEEE TRANSACTIONS ON KNOWLEDGE AND DATA ENGINEERING, VOL. 33, NO. 6, JUNE 2021, p. 2560

Large-Scale Nodes Classification With Deep Aggregation Network

Jiangtao Li, Jianshe Wu, Senior Member, IEEE, Weiquan He, and Peng Zhou

Abstract: The most fundamental task of network representation learning (NRL) is nodes classification which requires an algorithm to map nodes to vectors and use machine learning models to predict nodes' labels. Recently, many methods based on neighborhood aggregation have achieved brilliant results in this task. However, the recursive expansion of neighborhood aggregation poses scalability and efficiency problems for deep models. Existing methods are limited to shallow architectures and cannot capture the high order proximity in networks. In this article, we propose the deep aggregation network (DAN). DAN uses a layer-wise greedy optimization strategy which stacks several sequential trained base models to form the final deep model. The high order neighborhood aggregation is performed in a dynamic programming manner, which allows the recursion nature of neighborhood aggregation to be eliminated. The reverse random walk is also proposed, and combined with the classic random walk in formulating a novel sampling strategy that allows DAN to flexibly adapt to different tasks related to communities or structural roles. DAN is more efficient and effective than previous neighborhood aggregation based methods, especially when it is intended to handle large-scale networks with dense connections. Extensive experiments are conducted on both synthetic and real-world networks to empirically demonstrate the effectiveness and efficiency of the proposed method.

Index Terms: Graph neural network, network representation learning, node embedding, node classification, semi-supervised learning

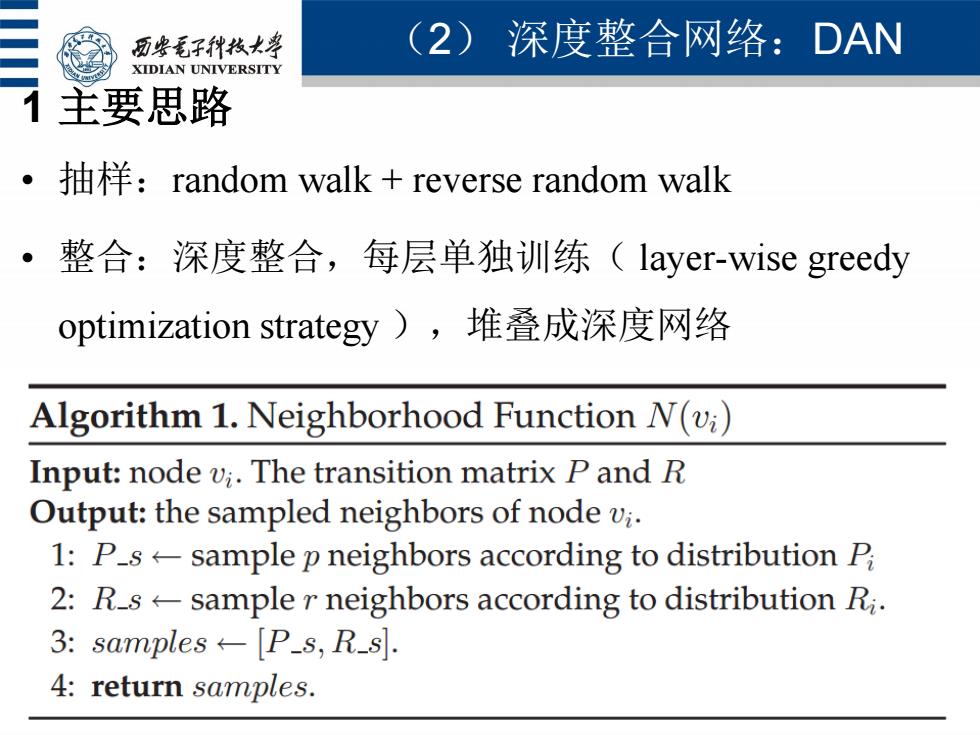

(2) Deep Aggregation Network: DAN

Main idea

- Sampling: random walk + reverse random walk
- Aggregation: deep aggregation; each layer is trained separately (layer-wise greedy optimization strategy), and the layers are stacked into a deep network

Algorithm 1. Neighborhood Function $N(v_i)$

Input: node $v_i$; the transition matrices $P$ and $R$.

Output: the sampled neighbors of node $v_i$.

1. $P_s \leftarrow$ sample $p$ neighbors according to distribution $P_i$
2. $R_s \leftarrow$ sample $r$ neighbors according to distribution $R_i$
3. samples $\leftarrow P_s, R_s$
4. return samples
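The neighborhood function can be sketched in a few lines of NumPy. Several details here are assumptions rather than facts from the slides: sampling is done with replacement, the two sample sets are combined by concatenation, and the matrix `R` is built as a random walk over reversed edges purely as a placeholder, since the slide does not define how the reverse random walk matrix is constructed. The function name `neighborhood` and the toy graph are likewise hypothetical.

```python
import numpy as np


def neighborhood(i, P, R, p, r, rng):
    """Sample p neighbors of node i from row P[i] and r neighbors from row R[i].

    Assumptions: sampling with replacement, results combined by concatenation.
    """
    n = P.shape[0]
    P_s = rng.choice(n, size=p, p=P[i])  # random-walk samples
    R_s = rng.choice(n, size=r, p=R[i])  # reverse-random-walk samples
    return np.concatenate([P_s, R_s])


# Toy directed graph (A[u, v] = 1 means an edge u -> v).
A = np.array([[0, 1, 1, 0],
              [0, 0, 1, 0],
              [1, 0, 0, 1],
              [0, 1, 0, 0]], dtype=float)

# Row-normalized adjacency: the classic random-walk transition matrix.
P = A / A.sum(axis=1, keepdims=True)
# Placeholder for R (assumption): a random walk on the reversed edges.
R = A.T / A.T.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
samples = neighborhood(0, P, R, p=3, r=2, rng=rng)
print(samples)
```

In this toy graph node 0 points to nodes 1 and 2 and is pointed to only by node 2, so the first three samples come from {1, 2} and the last two are always node 2. Changing the ratio of `p` to `r` shifts the sampled neighborhood between the two walk types.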