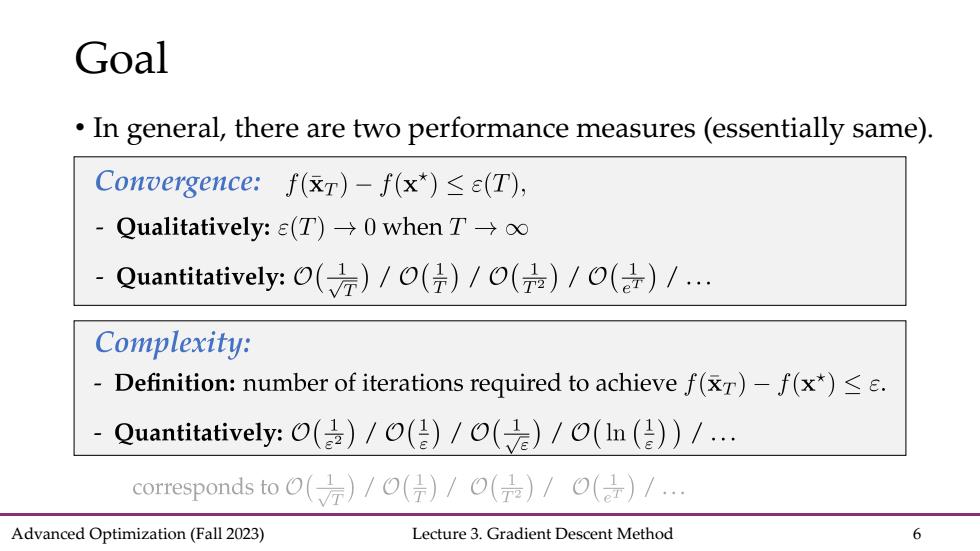

Goal In general,there are two performance measures (essentially same) Convergence:f(xr)-f(x*)<e(T), Qualitatively::e(T)→0 when T→o Quantitatively:O(元)/o()/O()/o()/.… Complexity: Definition:number of iterations required to achieve f(r)-f(x*)<s. Quantitatively:()/()/()/0(In())/... corresponds to(元)/O()/O(是)/O()/ Advanced Optimization(Fall 2023) Lecture 3.Gradient Descent Method 6

Advanced Optimization (Fall 2023) Lecture 3. Gradient Descent Method 6 • In general, there are two performance measures (essentially same). Goal

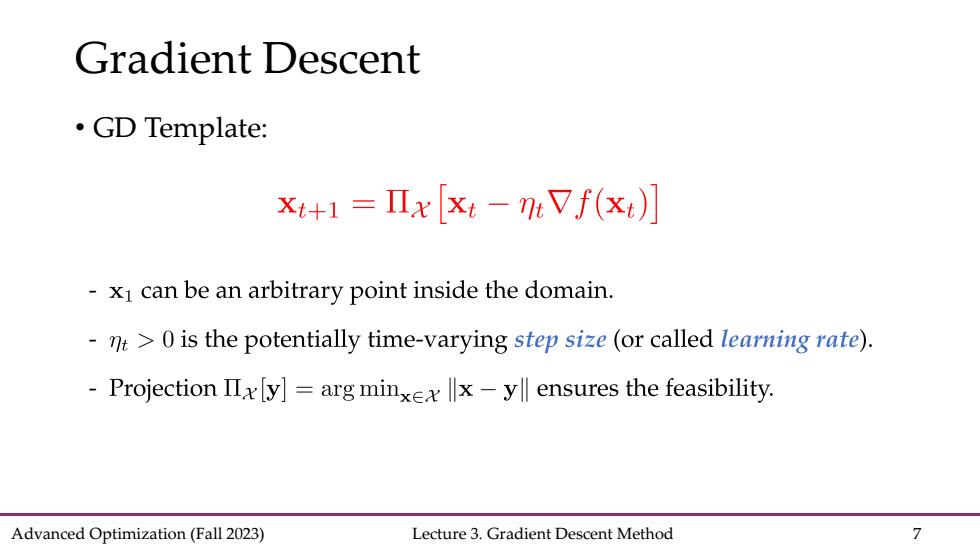

Gradient Descent ·GD Template: xt+1=Πxxt-EVf(xt)] -x1 can be an arbitrary point inside the domain. -n>0 is the potentially time-varying step size (or called learning rate). -ProjectionⅡxy]=arg minx∈x‖x-y ensures the feasibility. Advanced Optimization(Fall 2023) Lecture 3.Gradient Descent Method 7

Advanced Optimization (Fall 2023) Lecture 3. Gradient Descent Method 7 • GD Template: Gradient Descent

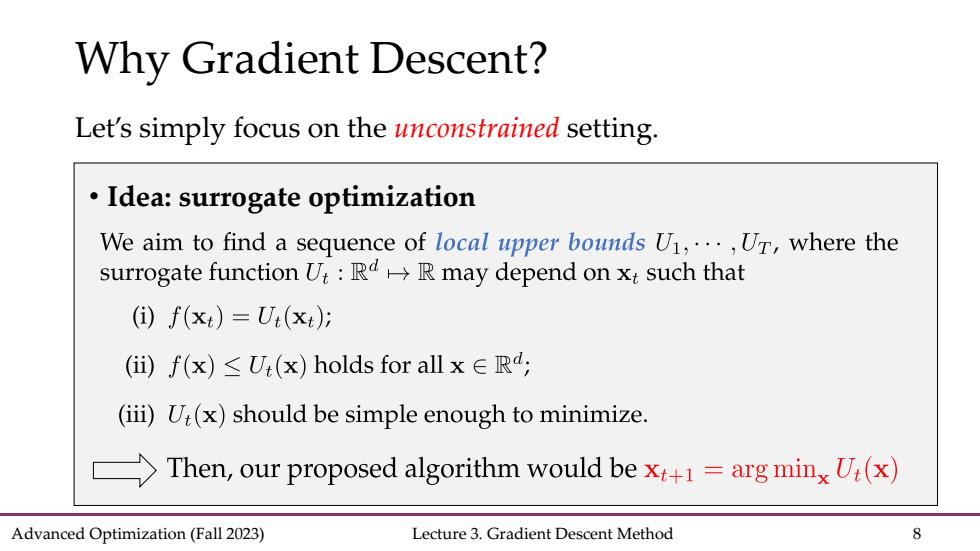

Why Gradient Descent? Let's simply focus on the unconstrained setting. Idea:surrogate optimization We aim to find a sequence of local upper bounds U1,...,Ur,where the surrogate function U::RdR may depend on xt such that ()f(x)=U(xt); (i)f(x)≤U(x)holds for all x∈Ra, (iii)U(x)should be simple enough to minimize. Then,our proposed algorithm would bex+1=arg minx U:(x) Advanced Optimization(Fall 2023) Lecture 3.Gradient Descent Method 8

Advanced Optimization (Fall 2023) Lecture 3. Gradient Descent Method 8 Why Gradient Descent? Let’s simply focus on the unconstrained setting. • Idea: surrogate optimization

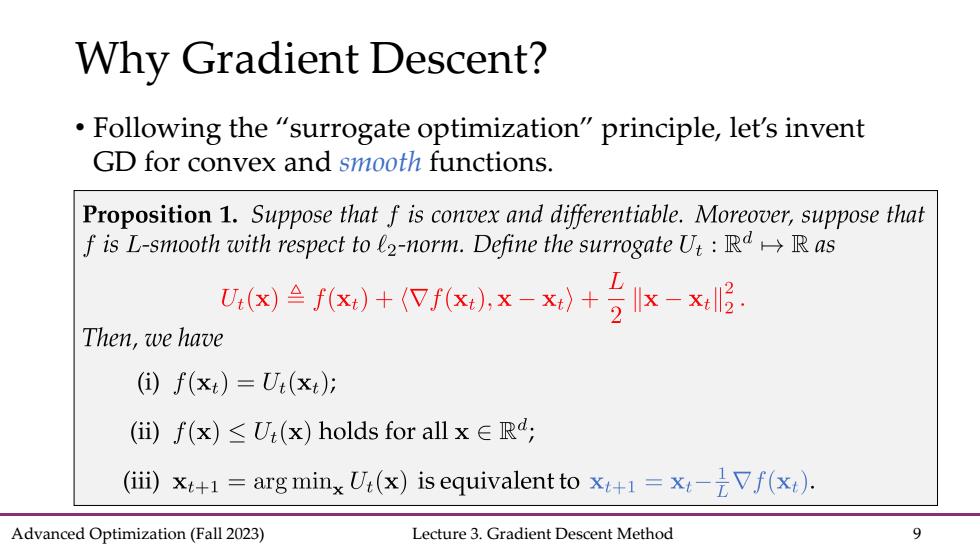

Why Gradient Descent? Following the "surrogate optimization"principle,let's invent GD for convex and smooth functions. Proposition 1.Suppose that f is convex and differentiable.Moreover,suppose that f is L-smooth with respect to l2-norm.Define the surrogate U:RRas 凶f)+fxx-x+51x-xg Then,we have (①)f(xt)=U(xt); ()f(x)≤U(x)holds for all x∈R, (iii)xt+1=arg minx Ui(x)is equivalent to x+=x-Vf(x). Advanced Optimization(Fall 2023) Lecture 3.Gradient Descent Method 9

Advanced Optimization (Fall 2023) Lecture 3. Gradient Descent Method 9 Why Gradient Descent? • Following the “surrogate optimization” principle, let’s invent GD for convex and smooth functions

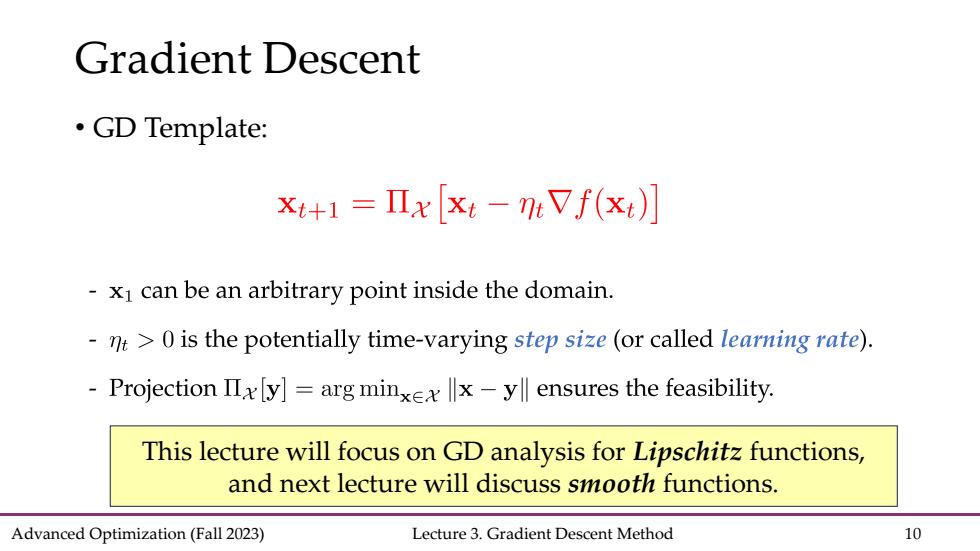

Gradient Descent ·GD Template: xt+1=Πx[xt-:Vf(xt)] -xI can be an arbitrary point inside the domain. nt>0 is the potentially time-varying step size(or called learning rate). -Projection IIy]=arg minxex-yll ensures the feasibility. This lecture will focus on GD analysis for Lipschitz functions, and next lecture will discuss smooth functions. Advanced Optimization(Fall 2023) Lecture 3.Gradient Descent Method 10

Advanced Optimization (Fall 2023) Lecture 3. Gradient Descent Method 10 • GD Template: Gradient Descent This lecture will focus on GD analysis for Lipschitz functions, and next lecture will discuss smooth functions