Lecture 9. Optimistic Online Mirror Descent
Advanced Optimization (Fall 2023)
Peng Zhao, zhaop@lamda.nju.edu.cn
School of Artificial Intelligence, Nanjing University

Beyond the Worst-Case Analysis

• All the above regret guarantees hold against the worst case
  • Matching the minimax optimality
  • The environment is fully adversarial
• However, in practice:
  • We are not always interested in the worst-case scenario
  • Environments can exhibit specific patterns: gradual change, periodicity…

[Figure: oblivious adversary vs. adaptive adversary; examination vs. interview]

We are after some more problem-dependent guarantees.

Beyond the Worst-Case Analysis

• Beyond the worst-case analysis, achieving more adaptive results.
  (1) adaptivity: achieving better guarantees in easy problem instances;
  (2) robustness: maintaining the same worst-case guarantee.

[Figure: optimists vs. pessimists; easy data, real world, worst-case data. Be cautiously optimistic.]

[Slides from Dylan Foster, Adaptive Online Learning @NIPS'15 workshop]

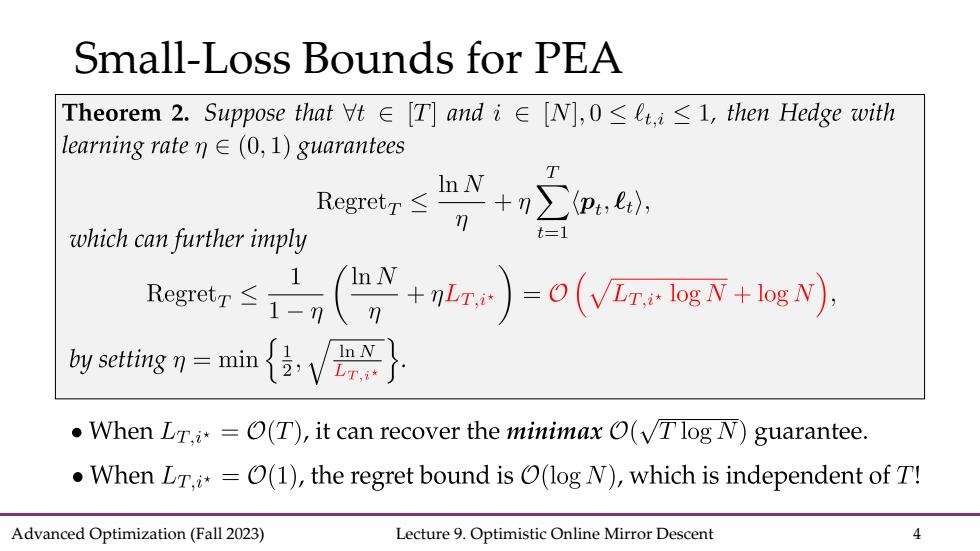

Small-Loss Bounds for PEA

Theorem 2. Suppose that $\forall t \in [T]$ and $i \in [N]$, $0 \le \ell_{t,i} \le 1$. Then Hedge with learning rate $\eta \in (0,1)$ guarantees

$$\mathrm{Regret}_T \le \frac{1}{1-\eta}\left(\frac{\ln N}{\eta} + \eta L_{T,i^\star}\right) = O\left(\sqrt{L_{T,i^\star}\log N} + \log N\right)$$

by setting $\eta = \min\left\{\frac{1}{2}, \sqrt{\frac{\ln N}{L_{T,i^\star}}}\right\}$, where $L_{T,i^\star}$ is the cumulative loss of the best expert.

• When $L_{T,i^\star} = O(T)$, it can recover the minimax $O(\sqrt{T\log N})$ guarantee.
• When $L_{T,i^\star} = O(1)$, the regret bound is $O(\log N)$, which is independent of $T$!

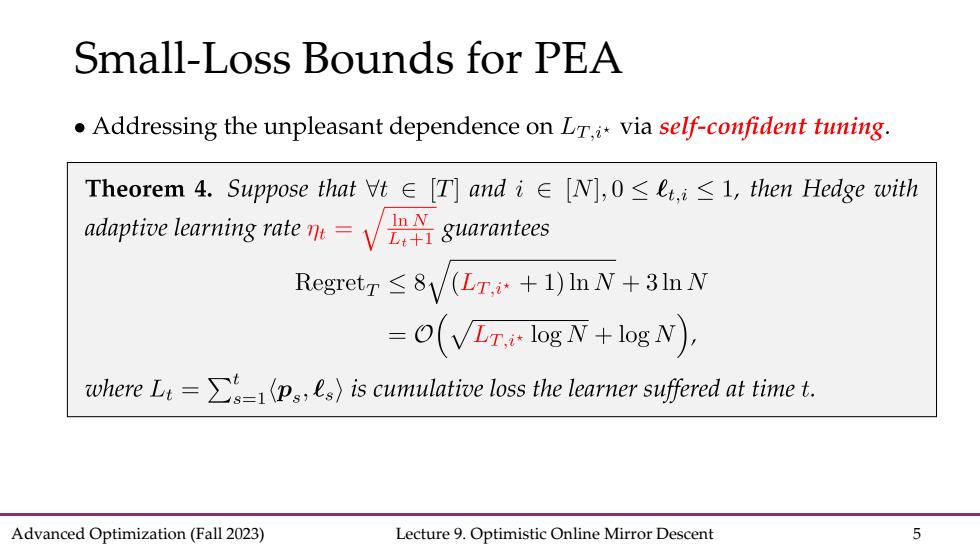
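The small-loss guarantee can be checked numerically. The following is a minimal sketch, not the lecture's own code: it assumes losses in $[0,1]$, the bound $\frac{1}{1-\eta}\left(\frac{\ln N}{\eta} + \eta L_{T,i^\star}\right)$, and the tuning $\eta = \min\{1/2, \sqrt{\ln N / L_{T,i^\star}}\}$. The function names (`hedge`, `small_loss_eta`, `small_loss_bound`, `easy_instance`) and the synthetic "easy" data are hypothetical, introduced only for illustration.

```python
import math
import random


def hedge(losses, eta):
    """Run Hedge with a fixed learning rate on a T x N list of losses in [0, 1].

    Returns (cumulative loss of the learner, cumulative loss of the best expert).
    """
    n = len(losses[0])
    cum = [0.0] * n      # cumulative loss of each expert so far
    learner = 0.0        # cumulative expected loss of the learner
    for loss in losses:
        # Weights proportional to exp(-eta * cumulative loss); subtracting the
        # minimum keeps the exponentials numerically stable.
        m = min(cum)
        w = [math.exp(-eta * (c - m)) for c in cum]
        z = sum(w)
        learner += sum(wi / z * li for wi, li in zip(w, loss))
        cum = [c + li for c, li in zip(cum, loss)]
    return learner, min(cum)


def small_loss_eta(best_loss, n):
    """Assumed tuning min{1/2, sqrt(ln N / L*)}; it needs L* in advance."""
    if best_loss <= 0:
        return 0.5
    return min(0.5, math.sqrt(math.log(n) / best_loss))


def small_loss_bound(best_loss, n, eta):
    """Right-hand side (ln N / eta + eta * L*) / (1 - eta)."""
    return (math.log(n) / eta + eta * best_loss) / (1.0 - eta)


def easy_instance(T, n, seed=0):
    """Hypothetical easy data: expert 0 rarely errs, the others err half the time."""
    rng = random.Random(seed)
    return [[float(rng.random() < (0.01 if i == 0 else 0.5)) for i in range(n)]
            for _ in range(T)]


T, N = 2000, 10
data = easy_instance(T, N)
_, best = hedge(data, 0.5)            # first pass only to read off L*
eta = small_loss_eta(best, N)
learner, best = hedge(data, eta)
print("regret:", learner - best)
print("small-loss bound:", small_loss_bound(best, N, eta))
print("worst-case scale sqrt(T ln N):", math.sqrt(T * math.log(N)))
```

Note that this tuning reads off $L_{T,i^\star}$ before running the algorithm, which is not available to an online learner; the sketch only illustrates how the bound scales with the best expert's loss rather than with $T$.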

Small-Loss Bounds for PEA

• Addressing the unpleasant dependence on $L_{T,i^\star}$ via self-confident tuning.

Theorem 4. Suppose that $\forall t \in [T]$ and $i \in [N]$, $0 \le \ell_{t,i} \le 1$. Then Hedge with an adaptive learning rate guarantees

$$\mathrm{Regret}_T \le 8\sqrt{(L_{T,i^\star}+1)\ln N} + 3\ln N = O\left(\sqrt{L_{T,i^\star}\log N} + \log N\right),$$

where the adaptive learning rate is tuned with $\widehat{L}_t$, the cumulative loss the learner has suffered up to time $t$.
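Self-confident tuning can be sketched as follows. The exact learning-rate formula is not recoverable from the slide text, so this sketch assumes one common form, $\eta_t = \sqrt{\ln N / (1 + \widehat{L}_{t-1})}$, where $\widehat{L}_{t-1}$ is the learner's own cumulative loss before round $t$. The function names and the synthetic data are hypothetical, and the comparison against $8\sqrt{(L_{T,i^\star}+1)\ln N} + 3\ln N$ is a numerical sanity check on one instance, not a proof.

```python
import math
import random


def self_confident_hedge(losses):
    """Hedge with a learning rate driven by the learner's own cumulative loss.

    Assumed form: eta_t = sqrt(ln N / (1 + learner's loss before round t)).
    Returns (cumulative loss of the learner, cumulative loss of the best expert).
    """
    n = len(losses[0])
    log_n = math.log(n)
    cum = [0.0] * n      # cumulative loss of each expert so far
    learner = 0.0        # cumulative expected loss of the learner
    for loss in losses:
        # The rate uses only quantities observed so far, so no knowledge of
        # the best expert's final loss is required.
        eta = math.sqrt(log_n / (1.0 + learner))
        m = min(cum)     # shift for numerical stability
        w = [math.exp(-eta * (c - m)) for c in cum]
        z = sum(w)
        learner += sum(wi / z * li for wi, li in zip(w, loss))
        cum = [c + li for c, li in zip(cum, loss)]
    return learner, min(cum)


def easy_instance(T, n, seed=0):
    """Hypothetical easy data: expert 0 rarely errs, the others err half the time."""
    rng = random.Random(seed)
    return [[float(rng.random() < (0.01 if i == 0 else 0.5)) for i in range(n)]
            for _ in range(T)]


T, N = 2000, 10
learner, best = self_confident_hedge(easy_instance(T, N))
print("regret:", learner - best)
print("stated bound:", 8 * math.sqrt((best + 1) * math.log(N)) + 3 * math.log(N))
```

The design point is that the learner's cumulative loss acts as an observable stand-in for the unknown $L_{T,i^\star}$: as long as the learner's loss stays small, the learning rate stays large.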