吸窖 NJUAT 南京大学 人工智能学院 SCHODL OF ARTIFICIAL INTELUGENCE,NANJING UNIVERSITY Lecture 7.Online Mirror Descent Advanced Optimization(Fall 2023) Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University

Lecture 7. Online Mirror Descent Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University Advanced Optimization (Fall 2023)

Outline Algorithmic Framework ·Regret Analysis Interpretation from Primal-Dual View Follow-the-Regularized Leader Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 2

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 2 Outline • Algorithmic Framework • Regret Analysis • Interpretation from Primal-Dual View • Follow-the-Regularized Leader

Recap:Reinvent Hedge Algorithm Proximal update rule for OGD: X1=竖{mx,c》+x-x卧 Proximal update rule for Hedge: x+1=arg minn(x Vfi(x))+KL(x) x∈X More possibility:changing the distance measure to a more general form using Bregman divergence =arg min n f()+D(xx) x∈X Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 3

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 3 Recap: Reinvent Hedge Algorithm • Proximal update rule for OGD: • Proximal update rule for Hedge: • More possibility: changing the distance measure to a more general form using Bregman divergence

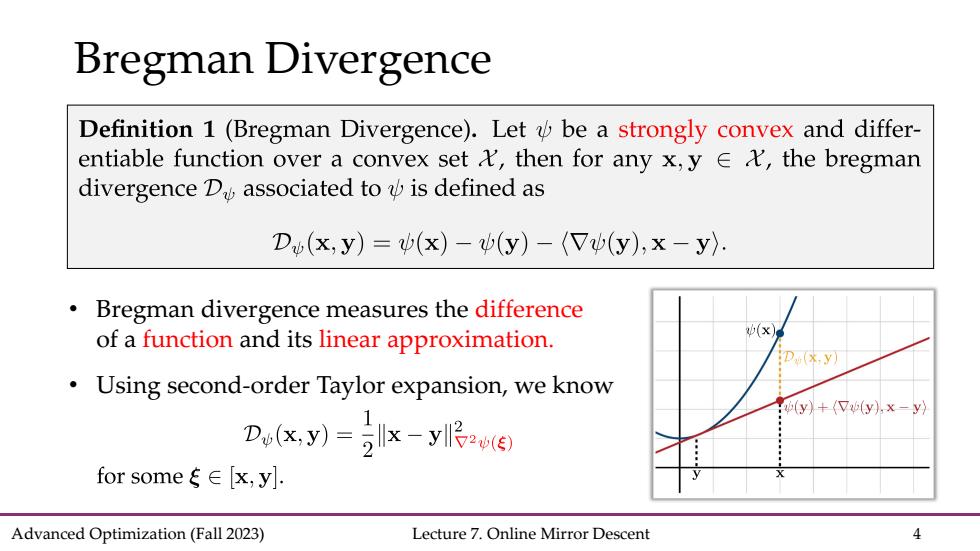

Bregman Divergence Definition 1(Bregman Divergence).Let be a strongly convex and differ- entiable function over a convex set t,then for any x,y e A,the bregman divergence D associated to is defined as D(x,y)=(x)-(y)-(V(y),x-y Bregman divergence measures the difference of a function and its linear approximation. D.x.y Using second-order Taylor expansion,we know 0(y)+(7y),x-y〉 Do(y)llx-yo for some∈[x,yl. Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 4

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 4 Bregman Divergence • Bregman divergence measures the difference of a function and its linear approximation. • Using second-order Taylor expansion, we know

Bregman Divergence Definition 1(Bregman Divergence).Let be a strongly convex and differ- entiable function over a convex set t,then for any x,y e A,the bregman divergence D associated to is defined as Db(x,y)=(x)-(y)-((y),x-y). Table 1:Choice of ()and the corresponding Bregman divergence. (x) D(x,y) Squared L2-distance Ilxl llx-yll Mahalanobis distance x☒ llx-yll Negative entropy ∑i log KL(xlly) Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 5

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 5 Bregman Divergence