版像 NJUAT 南京大学 人工智能学院 SCHODL OF ARTIFICIAL INTELUGENCE,NANJING UNIVERSITY Lecture 4.Gradient Descent Method Il Advanced Optimization(Fall 2023) Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University

Lecture 4. Gradient Descent Method II Peng Zhao zhaop@lamda.nju.edu.cn Nanjing University Advanced Optimization (Fall 2023)

Outline GD for Smooth Optimization Smooth and Convex Functions Smooth and Strongly Convex Functions Nesterov's Accelerated GD Extension to Composite Optimization Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 2

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 2 Outline • GD for Smooth Optimization • Smooth and Convex Functions • Smooth and Strongly Convex Functions • Nesterov’s Accelerated GD • Extension to Composite Optimization

Part 1.GD for Smooth Optimization ·Smooth and Convex Smooth and Strongly Convex Extension to Constrained Case Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 3

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 3 Part 1. GD for Smooth Optimization • Smooth and Convex • Smooth and Strongly Convex • Extension to Constrained Case

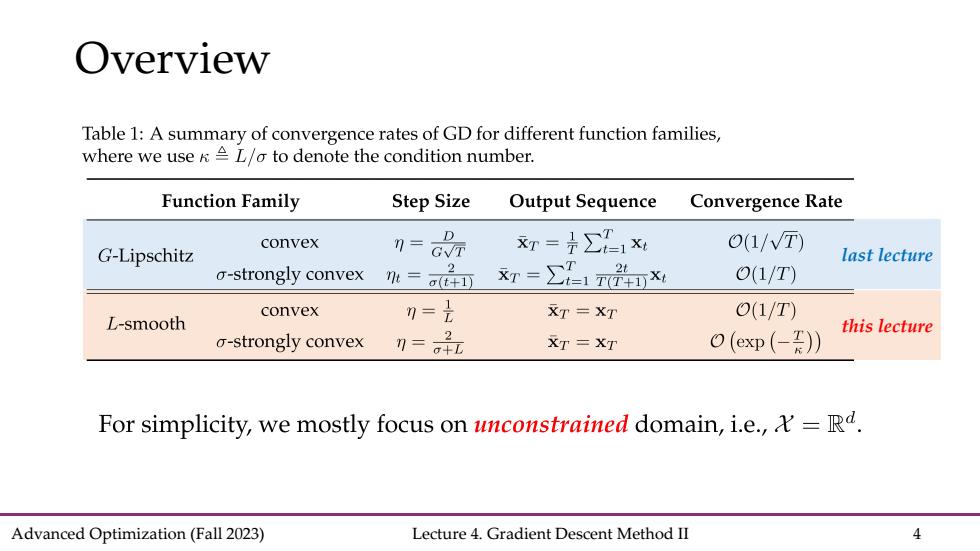

Overview Table 1:A summary of convergence rates of GD for different function families, where we use KL/o to denote the condition number. Function Family Step Size Output Sequence Convergence Rate convex 刀 xr=∑1x 0(1/T) G-Lipschitz last lecture a-strongly convex 2 m=a(t+1) xr=∑1nX O(1/T) convex 0=是 XT =XT O(1/T) L-smooth this lecture o-strongly convex 刀=品 XT =XT O(exp(-I)) For simplicity,we mostly focus on unconstrained domain,i.e.,=Rd. Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 4

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 4 Overview last lecture this lecture

Convex and Smooth Theorem 1.Suppose the function f:Ra>R is convex and differentiable,and also L-smooth.GD updates by x=xt-mVf(xt)with step size n=1,and then GD enjoys the following convergence guarantee: -s2-x-。 T-1 T Note:we are working on unconstrained setting and using a fixed step size tuning. Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 5

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 5 Convex and Smooth Note: we are working on unconstrained setting and using a fixed step size tuning