Lecture 8. Adaptive Online Convex Optimization
Advanced Optimization (Fall 2023)
Peng Zhao
zhaop@lamda.nju.edu.cn
School of Artificial Intelligence, Nanjing University


Outline

• Motivation
• Small-Loss Bounds
  • Small-Loss bound for PEA
  • Self-confident Tuning
  • Small-Loss bound for OCO


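General Regret Analysis for OMD

OMD update:

$$x_{t+1} = \arg\min_{x \in \mathcal{X}} \; \eta_t \langle x, \nabla f_t(x_t) \rangle + D_\psi(x, x_t)$$

Lemma 1 (Mirror Descent Lemma). Let $D_\psi$ be the Bregman divergence w.r.t. $\psi: \mathcal{X} \to \mathbb{R}$ and assume $\psi$ to be $\lambda$-strongly convex with respect to a norm $\|\cdot\|$. Then, $\forall u \in \mathcal{X}$, the following inequality holds:

$$f_t(x_t) - f_t(u) \le \underbrace{\frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1})\big)}_{\text{bias term (range term)}} + \underbrace{\frac{\eta_t}{\lambda}\|\nabla f_t(x_t)\|_*^2}_{\text{variance term (stability term)}} \underbrace{- \frac{1}{\eta_t} D_\psi(x_{t+1}, x_t)}_{\text{negative term}}$$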


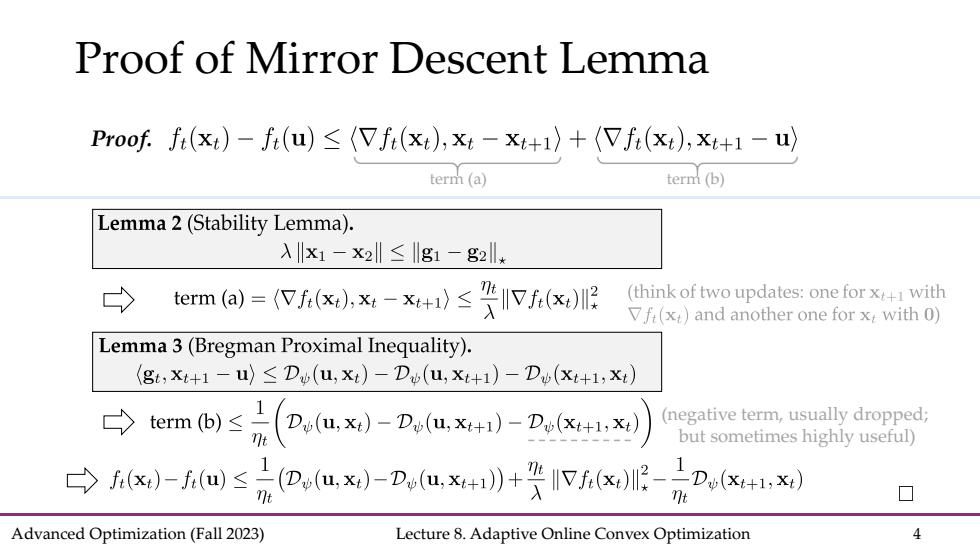
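To make the OMD update concrete, here is a minimal sketch of a single step for two standard regularizers. The choices are assumptions for illustration and do not come from the slides: $\psi(x) = \frac{1}{2}\|x\|_2^2$ on an $\ell_2$ ball, where the update becomes a projected gradient step, and negative entropy on the probability simplex, where it becomes a multiplicative update. The function names `omd_euclidean_step` and `omd_entropy_step` are hypothetical.

```python
import math


def omd_euclidean_step(x, grad, eta, radius=1.0):
    """One OMD step with psi(x) = 0.5 * ||x||_2^2 on the L2 ball (assumed domain).

    Here D_psi(x, y) = 0.5 * ||x - y||_2^2, so the argmin reduces to a
    gradient step followed by Euclidean projection onto the ball.
    """
    y = [xi - eta * gi for xi, gi in zip(x, grad)]
    norm = math.sqrt(sum(yi * yi for yi in y))
    if norm <= radius:
        return y
    return [yi * radius / norm for yi in y]


def omd_entropy_step(x, grad, eta):
    """One OMD step with psi = negative entropy on the simplex (assumed domain).

    Here D_psi is the KL divergence, and the argmin is the multiplicative
    rule x_i * exp(-eta * grad_i), renormalized to sum to one.
    """
    w = [xi * math.exp(-eta * gi) for xi, gi in zip(x, grad)]
    total = sum(w)
    return [wi / total for wi in w]
```

For instance, `omd_euclidean_step([0.0, 0.0], [3.0, 4.0], eta=1.0)` moves to $(-3, -4)$ and projects back onto the unit ball, while `omd_entropy_step` always returns a point on the simplex.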

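Proof of Mirror Descent Lemma

Proof. By convexity of $f_t$,

$$f_t(x_t) - f_t(u) \le \underbrace{\langle \nabla f_t(x_t), x_t - x_{t+1} \rangle}_{\text{term (a)}} + \underbrace{\langle \nabla f_t(x_t), x_{t+1} - u \rangle}_{\text{term (b)}}$$

Lemma 2 (Stability Lemma). For two updates from the same point with linear terms $g_1$ and $g_2$ and outputs $x_1$ and $x_2$,

$$\lambda \|x_1 - x_2\| \le \|g_1 - g_2\|_*$$

$$\text{term (a)} = \langle \nabla f_t(x_t), x_t - x_{t+1} \rangle \le \frac{\eta_t}{\lambda} \|\nabla f_t(x_t)\|_*^2$$

(think of two updates: one for $x_{t+1}$ with gradient $\nabla f_t(x_t)$ and another one for $x_t$ with gradient $0$)

Lemma 3 (Bregman Proximal Inequality).

$$\langle g_t, x_{t+1} - u \rangle \le D_\psi(u, x_t) - D_\psi(u, x_{t+1}) - D_\psi(x_{t+1}, x_t)$$

Applying Lemma 3 with $g_t = \eta_t \nabla f_t(x_t)$ gives

$$\text{term (b)} \le \frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1}) - D_\psi(x_{t+1}, x_t)\big)$$

(the last term is the negative term, usually dropped; but sometimes highly useful)

$$\Longrightarrow \; f_t(x_t) - f_t(u) \le \frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1})\big) + \frac{\eta_t}{\lambda}\|\nabla f_t(x_t)\|_*^2 - \frac{1}{\eta_t} D_\psi(x_{t+1}, x_t)$$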


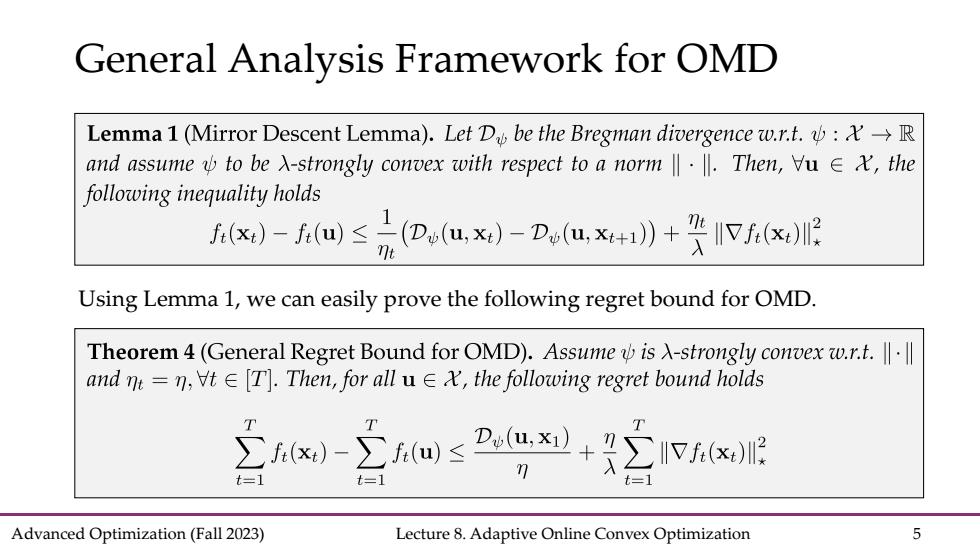
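The Mirror Descent Lemma can be sanity-checked numerically in the simplest setting. The sketch below assumes $\psi(x) = \frac{1}{2}\|x\|_2^2$ (so $\lambda = 1$ and the dual norm is again $\ell_2$), the unit ball as $\mathcal{X}$, and a hypothetical quadratic loss $f_t(x) = \frac{1}{2}\|x - z\|_2^2$. None of these choices come from the slides, and the helper names are illustrative only.

```python
import math
import random


def project_ball(y, radius=1.0):
    """Euclidean projection onto the L2 ball of the given radius."""
    norm = math.sqrt(sum(v * v for v in y))
    return list(y) if norm <= radius else [v * radius / norm for v in y]


def half_sq_dist(a, b):
    """0.5 * ||a - b||_2^2, which is D_psi(a, b) for psi(x) = 0.5 * ||x||_2^2."""
    return 0.5 * sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def lemma_gap(x_t, u, z, eta):
    """Right-hand side minus left-hand side of the lemma (should be >= 0).

    Assumes f_t(x) = 0.5 * ||x - z||^2, lambda = 1, and x_t, u in the unit ball.
    """
    grad = [xi - zi for xi, zi in zip(x_t, z)]
    x_next = project_ball([xi - eta * gi for xi, gi in zip(x_t, grad)])
    lhs = half_sq_dist(x_t, z) - half_sq_dist(u, z)            # f_t(x_t) - f_t(u)
    bias = (half_sq_dist(u, x_t) - half_sq_dist(u, x_next)) / eta
    variance = eta * sum(g * g for g in grad)                  # (eta / lambda) * ||grad||^2
    negative = -half_sq_dist(x_next, x_t) / eta
    return bias + variance + negative - lhs


if __name__ == "__main__":
    random.seed(0)
    x_t = project_ball([random.gauss(0, 1) for _ in range(3)])
    u = project_ball([random.gauss(0, 1) for _ in range(3)])
    z = [random.gauss(0, 2) for _ in range(3)]
    print(lemma_gap(x_t, u, z, eta=0.5) >= -1e-9)
```

A nonnegative gap for every sampled pair of points is what the lemma predicts; the check is only a sanity test under the stated assumptions, not a proof.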

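General Analysis Framework for OMD

Lemma 1 (Mirror Descent Lemma). Let $D_\psi$ be the Bregman divergence w.r.t. $\psi: \mathcal{X} \to \mathbb{R}$ and assume $\psi$ to be $\lambda$-strongly convex with respect to a norm $\|\cdot\|$. Then, $\forall u \in \mathcal{X}$, the following inequality holds (with the nonpositive term $-\frac{1}{\eta_t} D_\psi(x_{t+1}, x_t)$ dropped):

$$f_t(x_t) - f_t(u) \le \frac{1}{\eta_t}\big(D_\psi(u, x_t) - D_\psi(u, x_{t+1})\big) + \frac{\eta_t}{\lambda}\|\nabla f_t(x_t)\|_*^2$$

Using Lemma 1, we can easily prove the following regret bound for OMD.

Theorem 4 (General Regret Bound for OMD). Assume $\psi$ is $\lambda$-strongly convex w.r.t. $\|\cdot\|$ and $\eta_t = \eta$, $\forall t \in [T]$. Then, for all $u \in \mathcal{X}$, the following regret bound holds:

$$\sum_{t=1}^{T} f_t(x_t) - \sum_{t=1}^{T} f_t(u) \le \frac{D_\psi(u, x_1)}{\eta} + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2$$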

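The step from Lemma 1 to Theorem 4 is a telescoping argument. A brief sketch, assuming a fixed step size $\eta_t = \eta$ and dropping the nonpositive terms $-\frac{1}{\eta} D_\psi(x_{t+1}, x_t)$ and $-\frac{1}{\eta} D_\psi(u, x_{T+1})$:

```latex
\begin{align*}
\sum_{t=1}^{T} \big( f_t(x_t) - f_t(u) \big)
&\le \frac{1}{\eta} \sum_{t=1}^{T} \big( D_\psi(u, x_t) - D_\psi(u, x_{t+1}) \big)
   + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2 \\
&= \frac{1}{\eta} \big( D_\psi(u, x_1) - D_\psi(u, x_{T+1}) \big)
   + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2 \\
&\le \frac{D_\psi(u, x_1)}{\eta}
   + \frac{\eta}{\lambda} \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_*^2 .
\end{align*}
```

The first line sums Lemma 1 over $t = 1, \dots, T$, the second telescopes the Bregman terms, and the third uses $D_\psi(u, x_{T+1}) \ge 0$.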