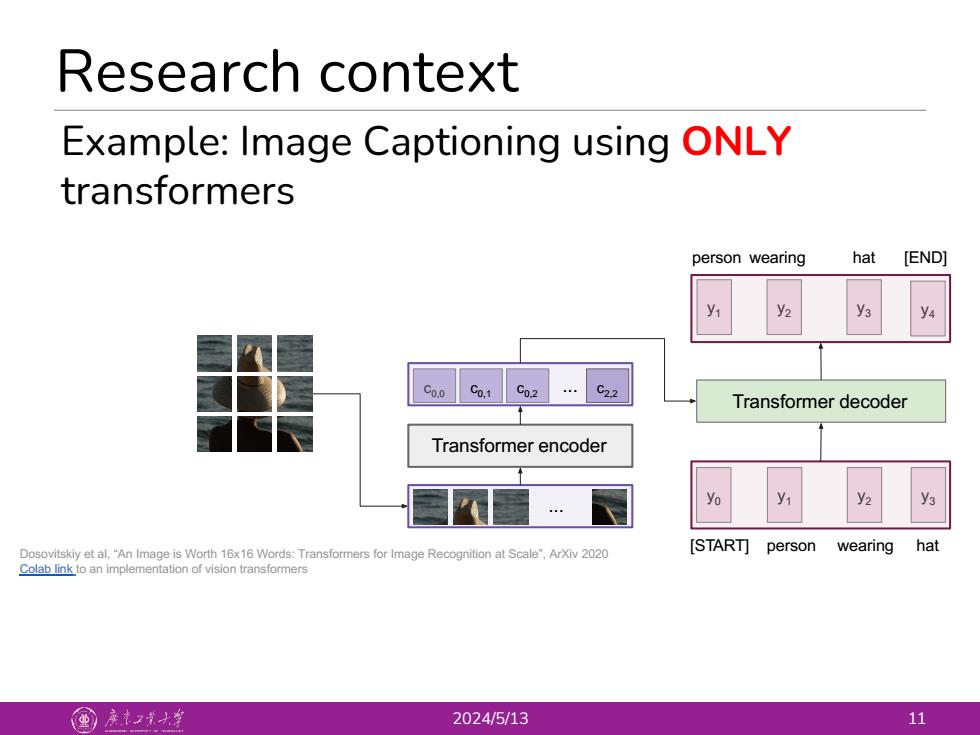

Research context Example:Image Captioning using ONLY transformers person wearing hat [END] y1 Coo Co.1 Co.2.C22 Transformer decoder Transformer encoder y3 Dosovitskiy et al,"An Image is Worth 16x16 Words:Transformers for Image Recognition at Scale",ArXiv 2020 [START]person wearing hat Colab link to an implementation of vision transformers 国产之大当 2024/5/13 11

Research context 2024/5/13 11 Example: Image Captioning using ONLY transformers ... Transformer encoder c0,0 c0,1 c0,2 c2,2 ... y0 [START] person wearing hat y1 y2 y1 y3 y2 y4 y3 person wearing hat [END] Transformer decoder Dosovitskiy et al, “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale”, ArXiv 2020 Colab link to an implementation of vision transformers

Research context Language model pre-training has been used to improve many NLP tasks .ELMo(Peters et al.,2018) OpenAl GPT (Radford et al.,2018) ULMFit(Howard and Rudder,2018) ·BERT 。Unidirectional JLM-FIT Feature-Based(ELMo) Fine-tuning(OpenAl GPT). 。Bidirectional 。BERT 国产之小连

Research context 2024/5/13 12 • Language model pre-training has been used to improve many NLP tasks • ELMo (Peters et al., 2018) • OpenAI GPT (Radford et al., 2018) • ULMFit (Howard and Rudder, 2018) • BERT ● Unidirectional ○ Feature-Based(ELMo) ○ Fine-tuning(OpenAI GPT). ● Bidirectional ○ BERT

Research context Two existing strategies for applying pre-trained language representations to downstream tasks Feature-based:include pre-trained representations as additional features (e.g., ELMo) Fine-tunning:introduce task-specific parameters and fine-tune the pre-trained parameters (e.g., OpenAl GPT,ULMFit) 国产之大丝

Research context 2024/5/13 13 • Two existing strategies for applying pre-trained language representations to downstream tasks • Feature-based: include pre-trained representations as additional features (e.g., ELMo) • Fine-tunning: introduce task-specific parameters and fine-tune the pre-trained parameters (e.g., OpenAI GPT, ULMFit)

Limitations of current techniques Language models in pre-training are unidirectional,they restrict the power of the pre-trained representations .OpenAl GPT used left-to-right architecture ELMo concatenates forward and backward language models 。Solution BERT:Bidirectional Encoder Representations from Transformers 国产之小丝

Limitations of current techniques 2024/5/13 14 •Language models in pre-training are unidirectional, they restrict the power of the pre-trained representations •OpenAI GPT used left-to-right architecture •ELMo concatenates forward and backward language models • Solution BERT: Bidirectional Encoder Representations from Transformers

Differences in pre-training model architectures: BERT,OpenAl GPT,and ELMo TN TN (Trm) (Trm) (rm… (Trm) LSTM(LSTM+·(LSTM LSTM(LSTM·LSTM m Trm Trm (Trm LSTM)(LSTM)·(LSTM LSTM(LSTM(LSTM Ea…w E… EN BERT OpenAl GPT ELMo 国产之大当 2024/5/13 15

Differences in pre-training model architectures: BERT, OpenAI GPT, and ELMo 2024/5/13 15 E1 E2 EN Trm Trm Trm Trm Trm Trm T1 T2 … TN … … … E1 E2 EN Trm Trm Trm Trm Trm Trm T1 T2 … TN … … … E1 E2 EN LSTM LSTM LSTM LSTM LSTM LSTM T1 T2 … TN … … … LSTM LSTM LSTM LSTM LSTM LSTM … … BERT OpenAI GPT ELMo