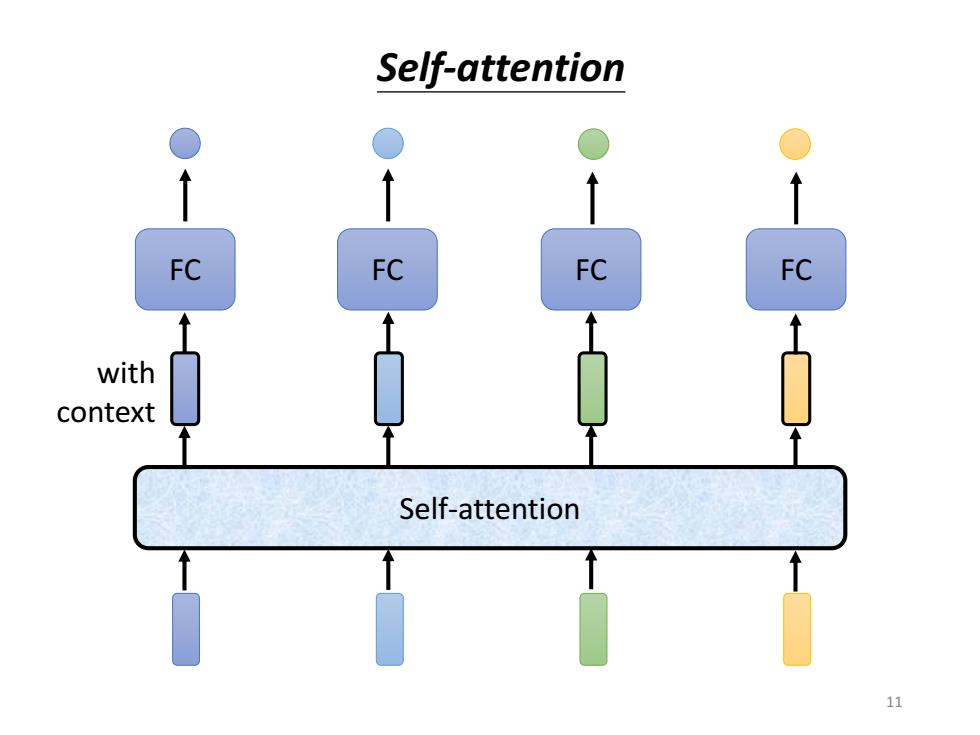

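Self-attention: a self-attention layer reads the whole input sequence and outputs one vector per input, each computed "with context" from the entire sequence. Every output vector is then passed through a fully connected (FC) layer.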
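Why the context matters: an FC layer applied to each vector on its own gives identical vectors identical outputs, no matter where they sit or what surrounds them. A minimal sketch in plain Python, with hypothetical weights `W`, `b` and a made-up four-vector sequence in which the first and third vectors are equal:

```python
def fc(x, W, b):
    """Fully connected layer applied to a single vector: W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

# Hypothetical weights and a sequence a1..a4 where a1 and a3 are identical
W = [[0.5, -1.0], [2.0, 0.3]]
b = [0.1, -0.2]
seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]

# The FC layer sees each vector in isolation, so neighbors and position are ignored
outputs = [fc(a, W, b) for a in seq]
print(outputs[0] == outputs[2])  # True: same input vector, same output
```

Putting self-attention before the FC layer is what lets the outputs for `a1` and `a3` differ according to their surroundings.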

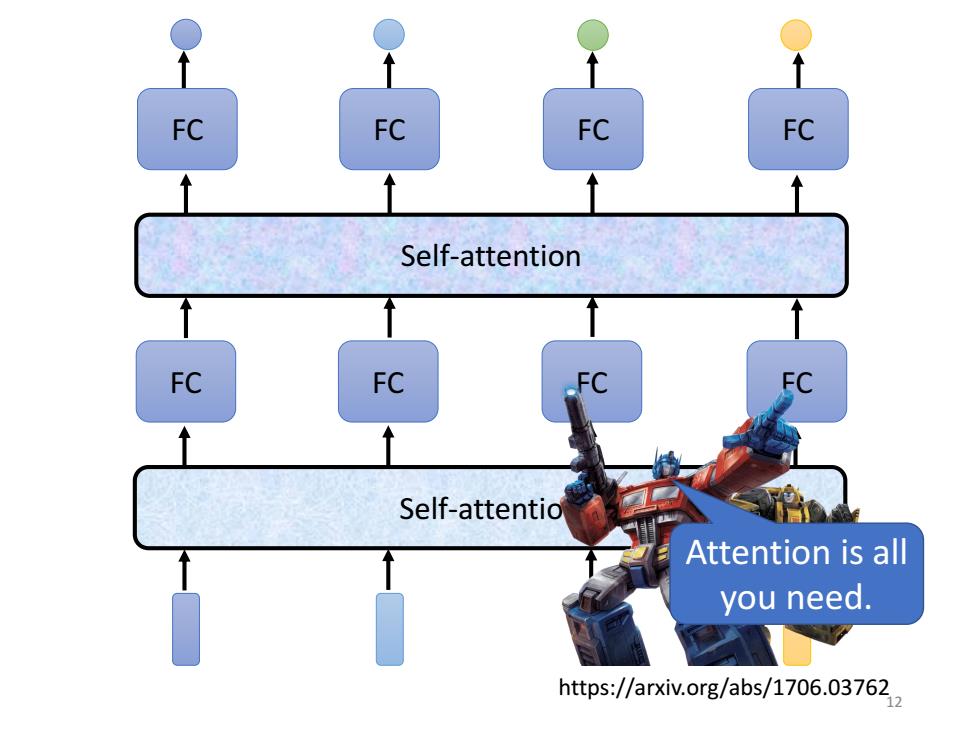

Self-attention and FC layers can be stacked alternately: self-attention → FC → self-attention → FC. Reference: Attention is all you need. https://arxiv.org/abs/1706.03762
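The alternating stack can be sketched in plain Python. This self-attention uses dot-product scores, a softmax over them, and a value projection `Wv`; the softmax and `Wv` follow the formulation in the cited paper and are assumptions here, since these slides do not show them. The ReLU in the FC layer and all weights (random, seeded) are likewise hypothetical.

```python
import math
import random

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

def self_attention(seq, Wq, Wk, Wv):
    """Dot-product self-attention with softmax weights (assumed formulation)."""
    qs = [matvec(Wq, a) for a in seq]
    ks = [matvec(Wk, a) for a in seq]
    vs = [matvec(Wv, a) for a in seq]
    out = []
    for q in qs:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) for k in ks]
        m = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alphas = [e / total for e in exps]
        # Weighted sum of the value vectors
        out.append([sum(al * v[d] for al, v in zip(alphas, vs)) for d in range(len(vs[0]))])
    return out

def fc_layer(seq, W):
    """Same FC weights applied to every position independently, ReLU assumed."""
    return [[max(0.0, y) for y in matvec(W, a)] for a in seq]

rng = random.Random(0)
d = 3
x = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(4)]  # a1..a4

# Alternate: self-attention -> FC -> self-attention -> FC
h = x
for _ in range(2):
    h = self_attention(h, rand_matrix(d, d, rng), rand_matrix(d, d, rng), rand_matrix(d, d, rng))
    h = fc_layer(h, rand_matrix(d, d, rng))

print(len(h), len(h[0]))  # 4 3: one output vector per input vector at every layer
```

Because both layer types map a sequence of N vectors to N vectors, either can take the other's output, which is what makes the alternation possible.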

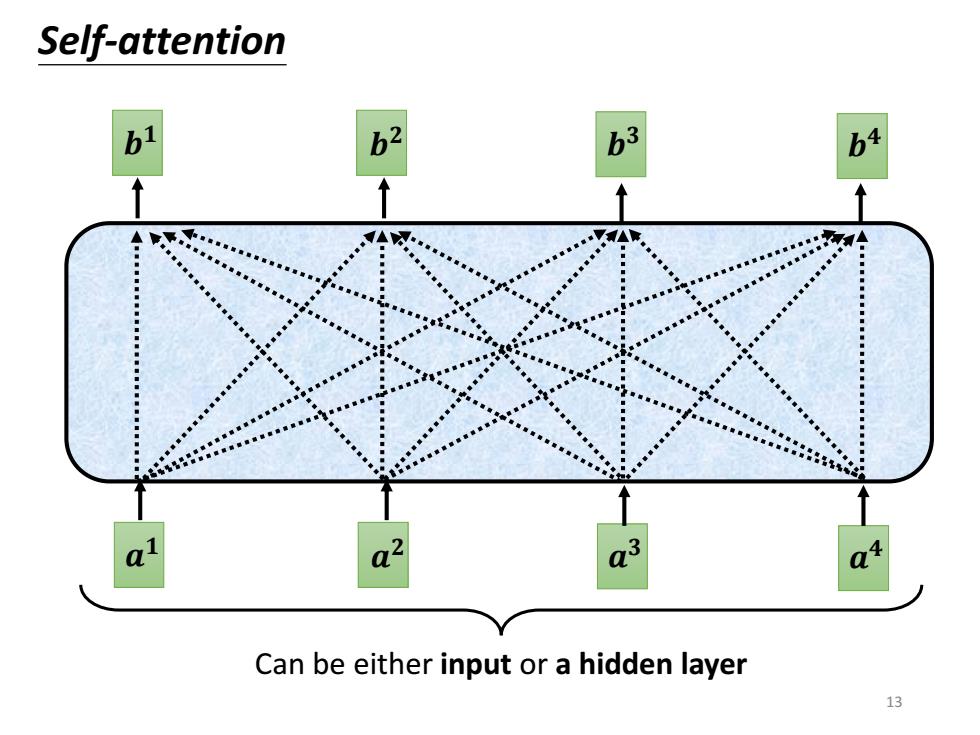

Self-attention takes a sequence of vectors $a^1, a^2, a^3, a^4$ and outputs a sequence of the same length, $b^1, b^2, b^3, b^4$. Each input $a^i$ can be either the network's input or the output of a hidden layer.
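To see that each $b^i$ is computed from the whole sequence, the sketch below uses a stripped-down self-attention in which query, key, and value are the input vector itself. Dropping $W^q$, $W^k$ and any value transform is an assumption made only for brevity; the weights are softmax-normalized dot products. Changing only $a^4$ changes $b^1$.

```python
import math

def self_attention(seq):
    """Self-attention with identity projections (q = k = v = a), assumed for illustration."""
    outputs = []
    for q in seq:
        scores = [sum(x * y for x, y in zip(q, k)) for k in seq]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(w * v[d] for w, v in zip(weights, seq)) for d in range(len(q))])
    return outputs

a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]  # hypothetical a1..a4
b = self_attention(a)

# Change only a4 and recompute: b1 changes too, because b1 depends on every input
a_changed = a[:3] + [[2.0, 0.0]]
b_changed = self_attention(a_changed)
print(len(b) == len(a))      # True
print(b[0] != b_changed[0])  # True
```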

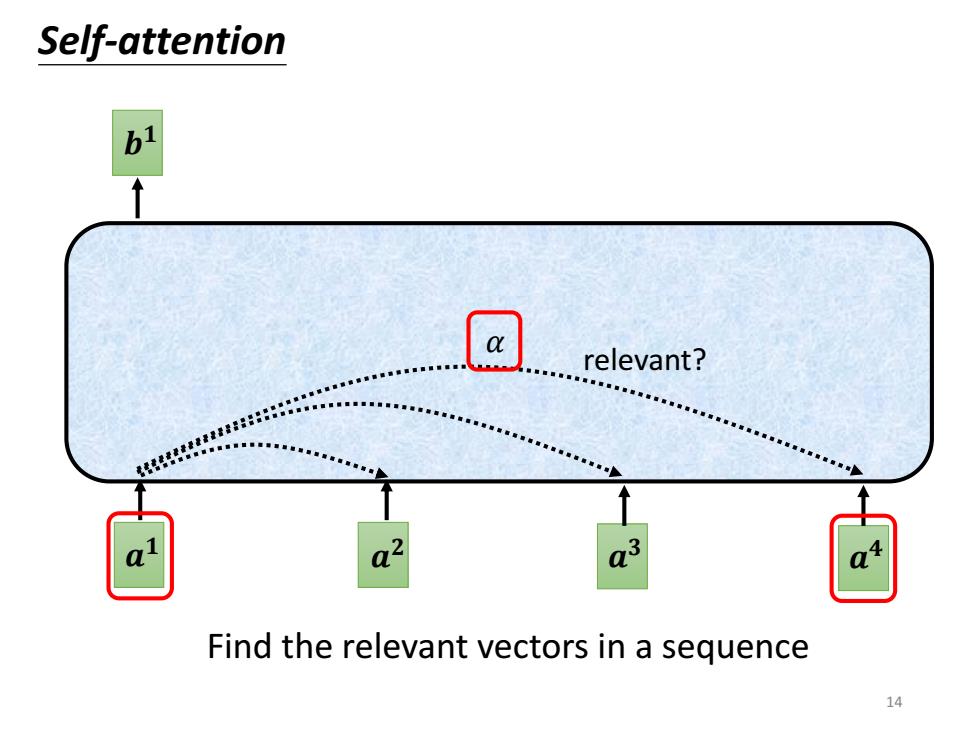

Self-attention: to compute $b^1$, find the vectors in the sequence that are relevant to $a^1$. The relevance between $a^1$ and each $a^i$ is expressed as an attention score $\alpha$.
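Finding the vectors relevant to $a^1$ can be sketched as computing a score $\alpha_{1,i}$ for every pair $(a^1, a^i)$. This sketch assumes the dot-product score with hypothetical 2×2 matrices `Wq` and `Wk` and made-up inputs; the highest-scoring $a^i$ other than $a^1$ itself is the most relevant.

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

# Hypothetical inputs a1..a4 and projection matrices
a = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
Wq = [[2.0, 0.0], [0.0, 1.0]]
Wk = [[1.0, 0.0], [0.0, 1.0]]

q1 = matvec(Wq, a[0])                            # query for a1
alpha = [dot(q1, matvec(Wk, a_i)) for a_i in a]  # alpha_{1,i} for i = 1..4

# Most relevant vector other than a1 itself (0-based index)
best = max(range(1, len(a)), key=lambda i: alpha[i])
print(alpha)  # [2.0, 0.0, 1.8, -2.0]
print(best)   # 2, i.e. a3
```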

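Self-attention: two ways to compute the attention score $\alpha$ between two input vectors.

Dot-product: multiply the two inputs by $W^q$ and $W^k$ to obtain $q$ and $k$, then take $\alpha = q \cdot k$.

Additive: obtain $q$ and $k$ the same way, add them, pass the sum through $\tanh$, and apply a transform $W$: $\alpha = W \tanh(q + k)$.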
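The two scoring methods side by side, as a minimal sketch. It assumes the final transform $W$ of the additive method is a vector `w` that maps the $\tanh$ output to a scalar; all weights are hypothetical.

```python
import math

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def dot_product_score(a_i, a_j, Wq, Wk):
    """alpha = q . k with q = Wq a_i and k = Wk a_j."""
    q, k = matvec(Wq, a_i), matvec(Wk, a_j)
    return sum(qi * ki for qi, ki in zip(q, k))

def additive_score(a_i, a_j, Wq, Wk, w):
    """alpha = w . tanh(q + k); w is assumed to be a vector producing a scalar."""
    q, k = matvec(Wq, a_i), matvec(Wk, a_j)
    hidden = [math.tanh(qi + ki) for qi, ki in zip(q, k)]
    return sum(wi * hi for wi, hi in zip(w, hidden))

I = [[1.0, 0.0], [0.0, 1.0]]  # identity weights, hypothetical
dp = dot_product_score([1.0, 2.0], [3.0, 4.0], I, I)
ad = additive_score([1.0, 2.0], [3.0, 4.0], I, I, [1.0, 1.0])
print(dp)  # 11.0
print(ad)  # tanh(4) + tanh(6)
```

One difference shows up directly: because $\tanh$ is bounded, the additive score can never exceed the sum of the absolute entries of `w`, whereas the dot-product score grows with the magnitudes of $q$ and $k$.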