Smooth and Strongly Convex f is o-strongly convex f is L-smooth f(x)+(Vf(x).y-+f(y)sf(*)+(Vf(x),y-+y f(x)+Vf(x),y-x)+x-yll f(y) f(x)+(Vf(x),y-x)+x-yl3 f(x)+Vf(x).y-x) Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 16

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 16 Smooth and Strongly Convex

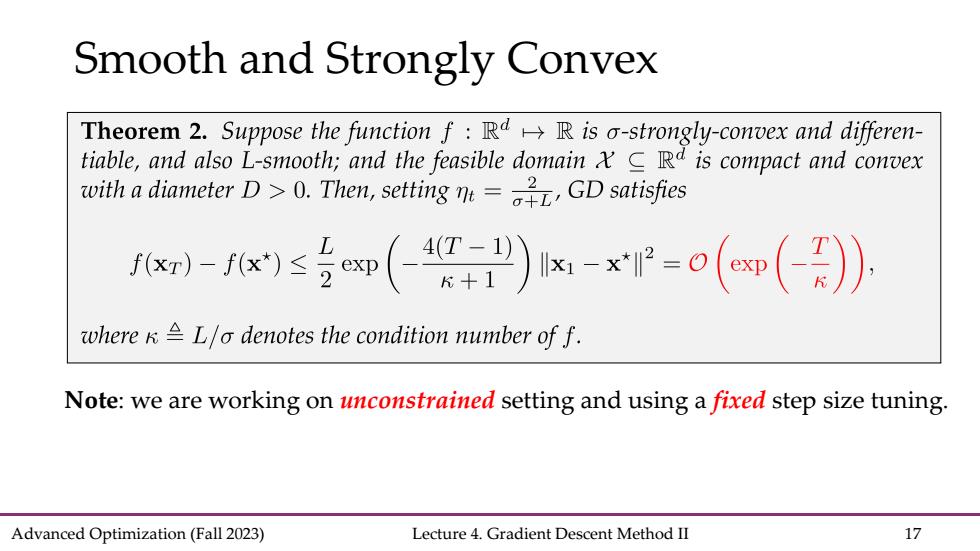

Smooth and Strongly Convex Theorem 2.Suppose the function f:RdR is o-strongly-convex and differen- tiable,and also L-smooth;and the feasible domain Rd is compact and convex with a diameter D.Then,settingGD satisfies x-1≤台(-g)x-x=o(@m(-) where kL/o denotes the condition number of f. Note:we are working on unconstrained setting and using a fixed step size tuning. Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 17

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 17 Smooth and Strongly Convex Note: we are working on unconstrained setting and using a fixed step size tuning

Smooth and Strongly Convex Poo听Ix+1-x*I2=-neVf(x】-x*2GD xt-nVf(xt)-x*2 (Pythagoras Theorem) =x4-x*2-2m了f(x),x:-x+m呢IVf(x)2 how to exploiting the strong convexity and smoothness simultaneously Lemma 4(co-coercivity of smooth and strongly convex function).Let f be L- smooth and o-strongly convex on Rd.Then for all x,y Rd,one has -0x-0≥2x-y+NW-wP Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 18

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 18 Smooth and Strongly Convex Proof: (Pythagoras Theorem) (GD) how to exploiting the strong convexity and smoothness simultaneously

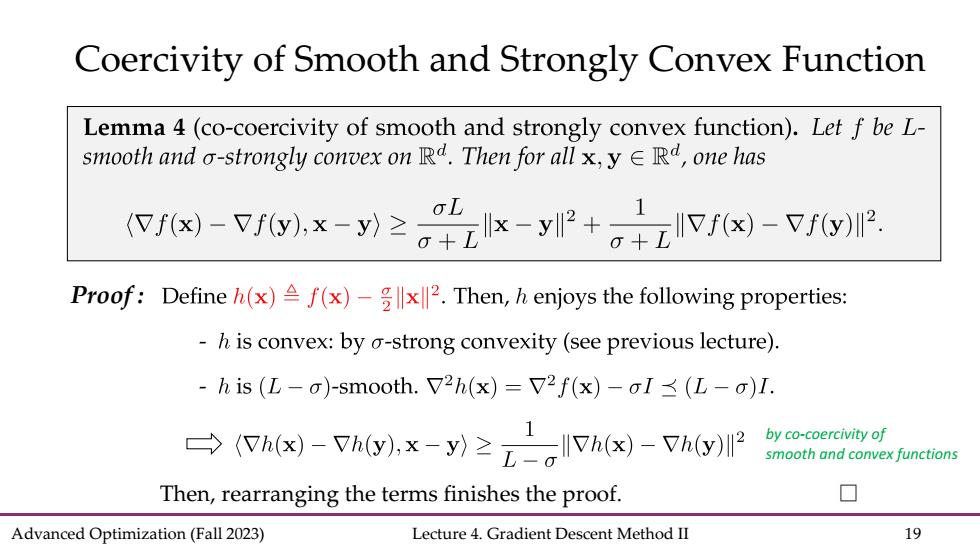

Coercivity of Smooth and Strongly Convex Function Lemma 4(co-coercivity of smooth and strongly convex function).Let f be L- smooth and o-strongly convex on Rd.Then for all x,y Rd,one has x)-fwx-y≥+zx-y2+,+五Vx)-P Proof:Define h(x)Af(x)x2.Then,h enjoys the following properties: h is convex:by o-strong convexity(see previous lecture). -his(L-σ)-smooth.V2h(x)=V2f(x)-oI≤(L-σ)I. →(Wx)-Tayx-y≥'IVax)-TayP by co-coercivity of smooth and convex functions Then,rearranging the terms finishes the proof. Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 19

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 19 Coercivity of Smooth and Strongly Convex Function Then, rearranging the terms finishes the proof. Proof : by co-coercivity of smooth and convex functions

Smooth and Strongly Convex Proof:x+1-x*2=IΠxxt-7Vf(x】-x*2(GD xt-n Vf(xt)-x*2 (Pythagoras Theorem) =x-x*2-2meVf(x),x:-x*)+7呢川Vf(x)2 s(-2)x-x2+(-)v exploiting co-coercivity of smooth and strongly convex function →x+1-x*2≤(1-2)Ix-x*2+(呢-2兴)Vfx serving as the "one-step improvement"in the analysis Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 20

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 20 Smooth and Strongly Convex Proof: (Pythagoras Theorem) (GD) exploiting co-coercivity of smooth and strongly convex function serving as the “one-step improvement” in the analysis