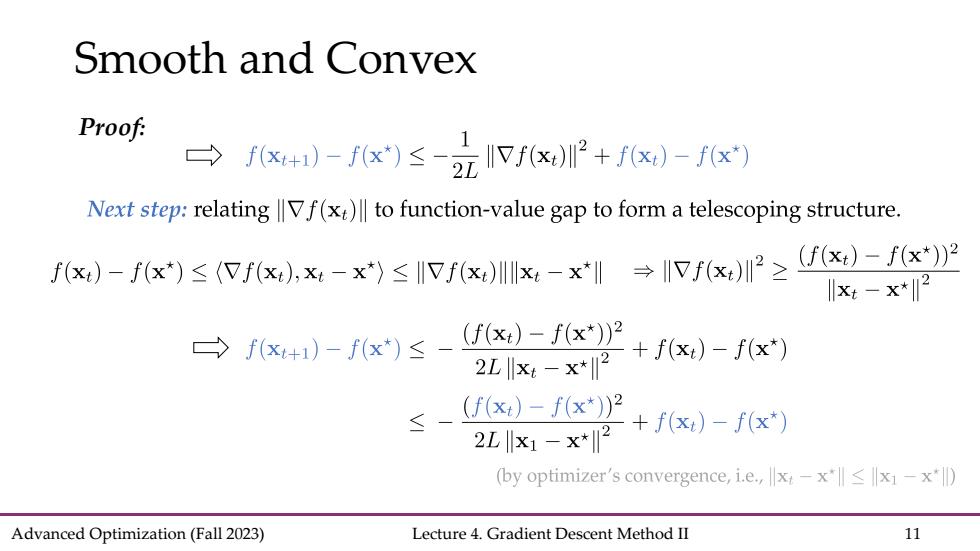

Smooth and Convex Proof: →4)-)≤-立xI2+f- Next step:relating Vf(xt)to function-value gap to form a telescoping structure. fx)-fx)≤fx,x-x)≤IVfx,)Ix-xl→x)P≥fx)-f6x2 川xt-x*2 →f+)-fx)≤-fx,)-fx》2 2L1x-x*2 +f(x)-f(x*) s、 (f(x)-f(x*)2 f(x)-f(x*) 2Lx1-x*2 (by optimizer's convergence,i.e.,x-x"lx1-x*) Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 11

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 11 Smooth and Convex Proof:

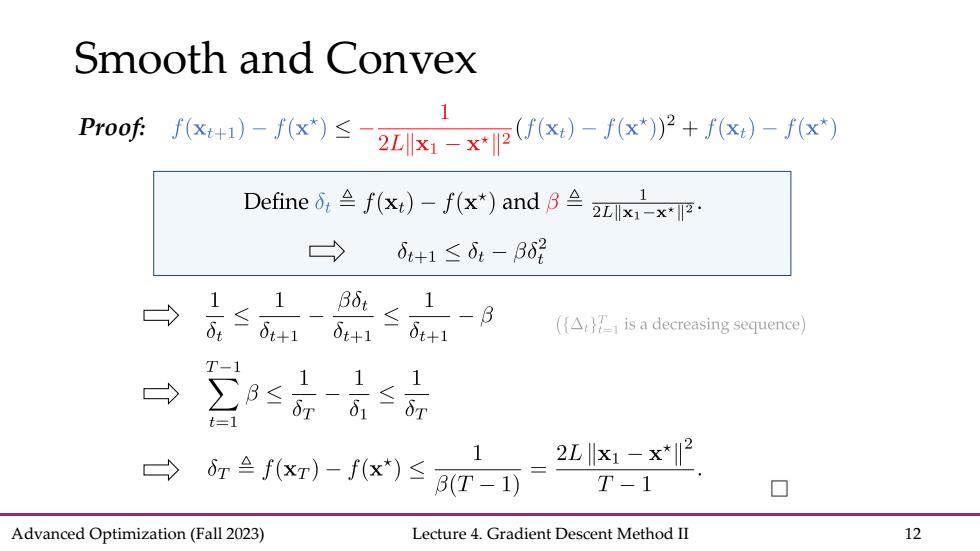

Smooth and Convex 1 Proof ()-f(x)(x))+fx)-f(x) Definef()-f(x")and > d+1≤6:-B02 1 长6个 --3 δt+1 (A is a decreasing sequence) T- 1 ∑B≤ 11 t= 61 ≤7 6ref(xr)-f(x*)≤aT- 2Lx1-x*2 T-1 口 Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 12

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 12 Smooth and Convex Proof:

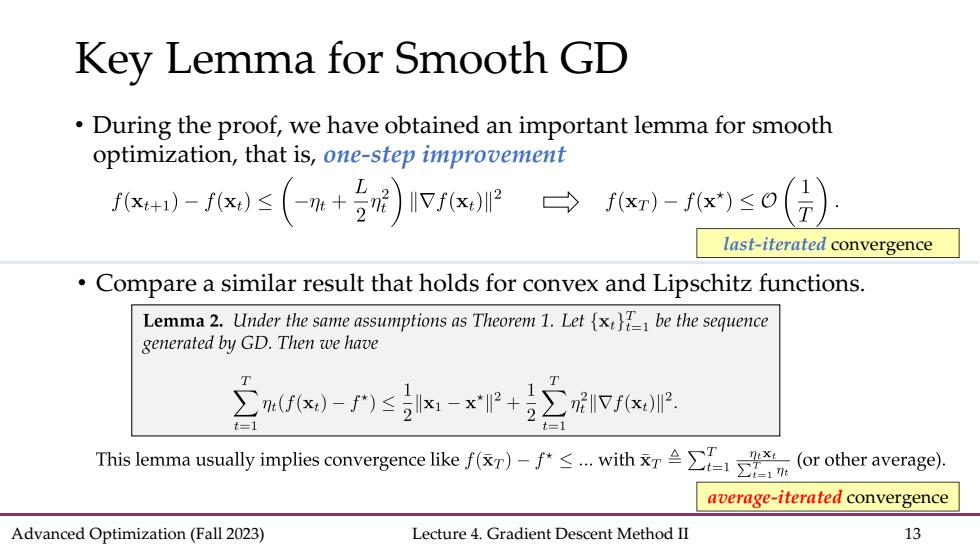

Key Lemma for Smooth GD During the proof,we have obtained an important lemma for smooth optimization,that is,one-step improvement 1x)-fs(m+5)IvxP→x)-x)≤o(份) last-iterated convergence Compare a similar result that holds for convex and Lipschitz functions. Lemma 2.Under the same assumptions as Theorem 1.Let {x be the sequence generated by GD.Then we have ∑x)-f≤x1-xP+号∑vfx This lemma uually implie convergence like)-产≤.withxr色∑I15}m x(or other average). average-iterated convergence Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 13

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 13 Key Lemma for Smooth GD • During the proof, we have obtained an important lemma for smooth optimization, that is, one-step improvement • Compare a similar result that holds for convex and Lipschitz functions. last-iterated convergence average-iterated convergence

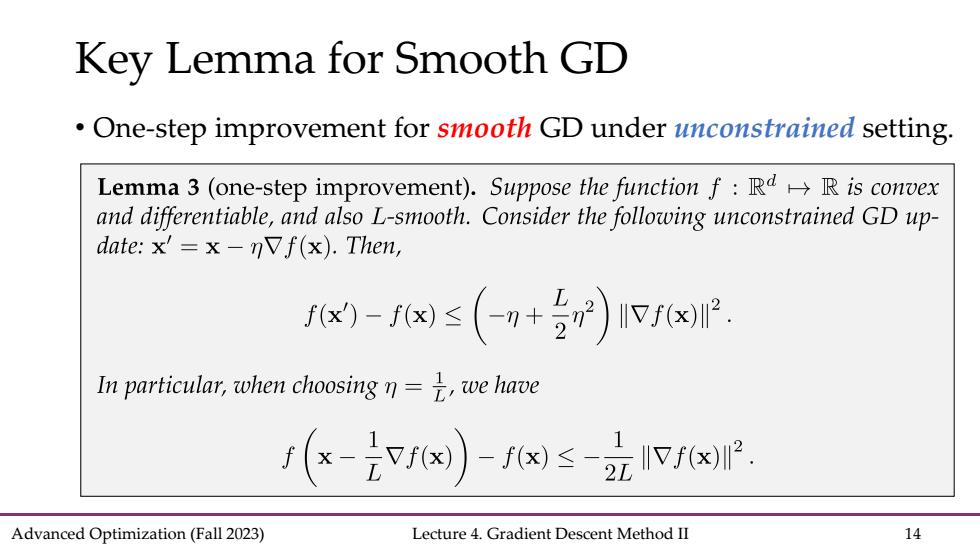

Key Lemma for Smooth GD One-step improvement for smooth GD under unconstrained setting. Lemma 3 (one-step improvement).Suppose the function f:RR is convex and differentiable,and also L-smooth.Consider the following unconstrained GD up- date:x'=x-nvf(x).Then, fx-1x≤(+)x2. In particular,when choosing)=是,we have f(x-2f)-f≤2时xP. Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 14

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 14 Key Lemma for Smooth GD • One-step improvement for smooth GD under unconstrained setting

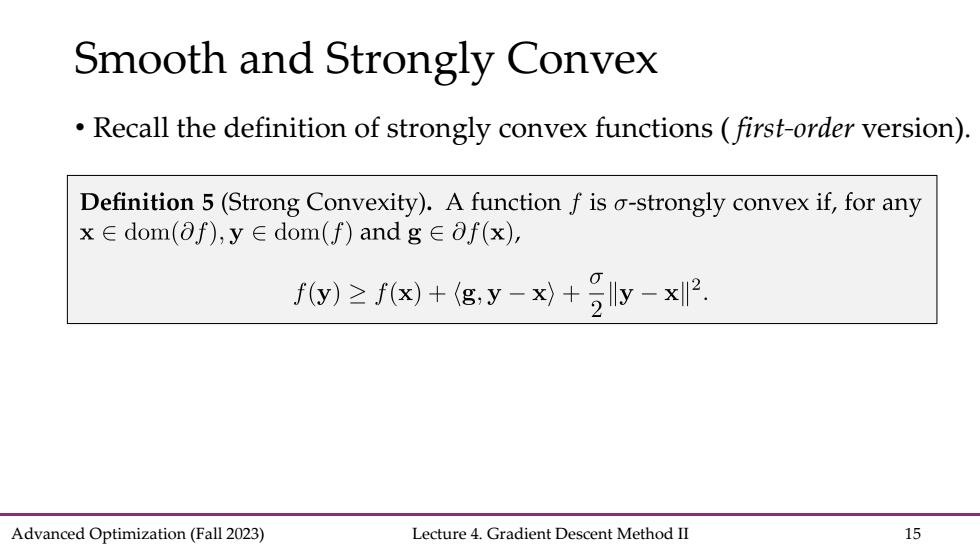

Smooth and Strongly Convex Recall the definition of strongly convex functions first-order version). Definition 5(Strong Convexity).A function f is o-strongly convex if,for any x∈dom(of),y∈dom(f)andg∈af(x), fy)≥fx)+gy-x)+2y-x2 Advanced Optimization(Fall 2023) Lecture 4.Gradient Descent Method II 15

Advanced Optimization (Fall 2023) Lecture 4. Gradient Descent Method II 15 Smooth and Strongly Convex • Recall the definition of strongly convex functions ( first-order version)