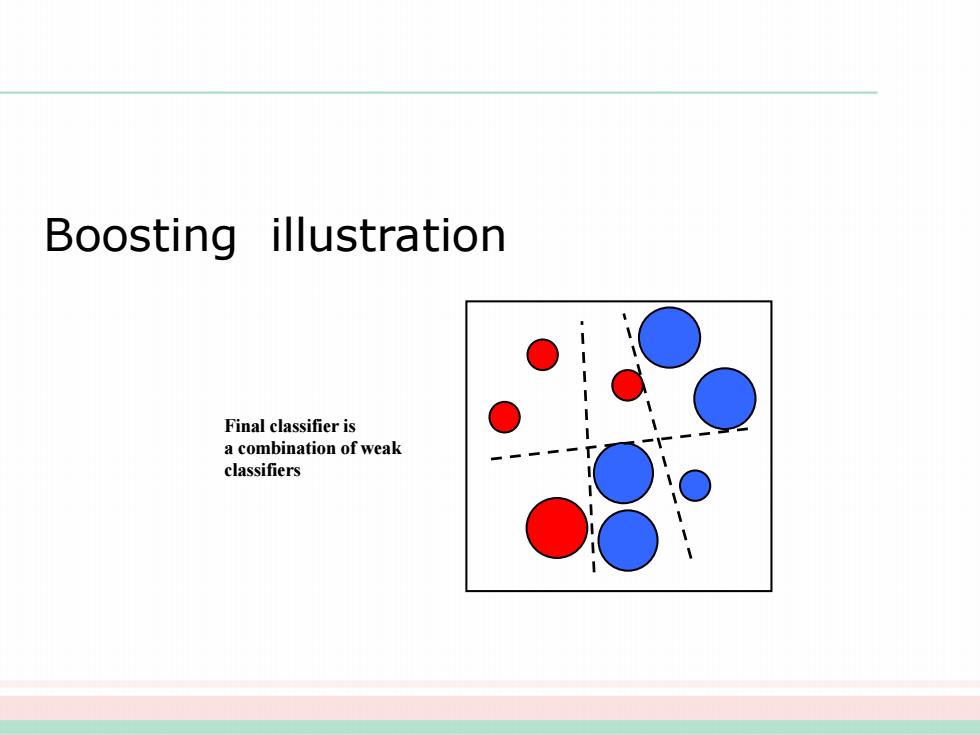

Boosting illustration: the final classifier is a combination of weak classifiers.


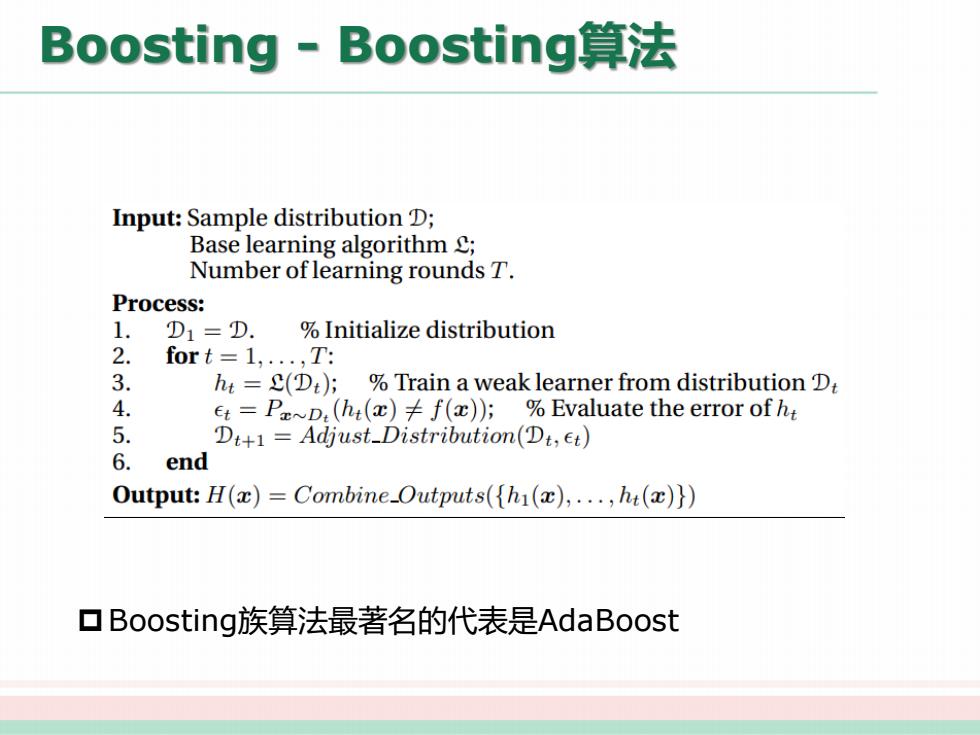

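Boosting: the general Boosting procedure

Input: sample distribution $\mathcal{D}$; base learning algorithm $\mathfrak{L}$; number of learning rounds $T$.

Process:

1. $\mathcal{D}_1 = \mathcal{D}$. (Initialize the distribution.)
2. for $t = 1, \ldots, T$:
3. $h_t = \mathfrak{L}(\mathcal{D}_t)$; (Train a weak learner from distribution $\mathcal{D}_t$.)
4. $\epsilon_t = P_{x \sim \mathcal{D}_t}(h_t(x) \neq f(x))$; (Evaluate the error of $h_t$.)
5. $\mathcal{D}_{t+1} = \text{Adjust\_Distribution}(\mathcal{D}_t, \epsilon_t)$
6. end

Output: $H(x) = \text{Combine\_Outputs}(\{h_1(x), \ldots, h_T(x)\})$

The best-known representative of the Boosting family of algorithms is AdaBoost.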


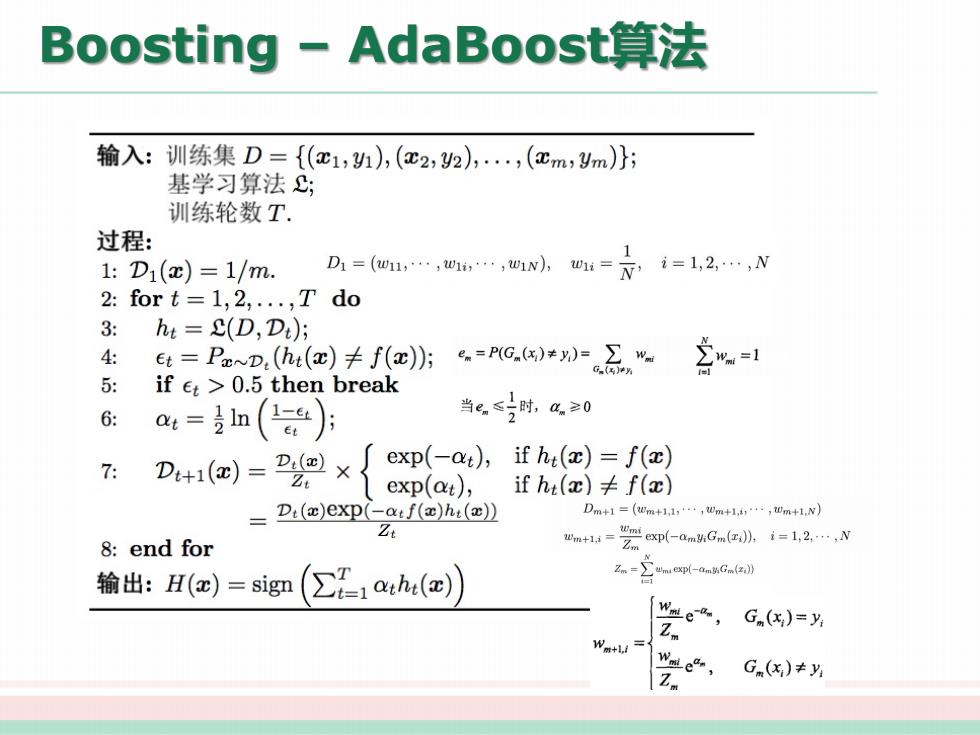

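Boosting: the AdaBoost algorithm

Input: training set $D = \{(x_1, y_1), (x_2, y_2), \ldots, (x_m, y_m)\}$; base learning algorithm $\mathfrak{L}$; number of training rounds $T$.

Process:

1. $\mathcal{D}_1(x) = 1/m$.
2. for $t = 1, 2, \ldots, T$ do
3. $h_t = \mathfrak{L}(D, \mathcal{D}_t)$;
4. $\epsilon_t = P_{x \sim \mathcal{D}_t}(h_t(x) \neq f(x))$;
5. if $\epsilon_t > 0.5$ then break
6. $\alpha_t = \frac{1}{2} \ln\left(\frac{1 - \epsilon_t}{\epsilon_t}\right)$;
7. $\mathcal{D}_{t+1}(x) = \frac{\mathcal{D}_t(x)}{Z_t} \times \begin{cases} \exp(-\alpha_t), & \text{if } h_t(x) = f(x) \\ \exp(\alpha_t), & \text{if } h_t(x) \neq f(x) \end{cases} = \frac{\mathcal{D}_t(x) \exp(-\alpha_t f(x) h_t(x))}{Z_t}$
8. end for

Output: $H(x) = \operatorname{sign}\left(\sum_{t=1}^{T} \alpha_t h_t(x)\right)$

Side notes on the slide restate the same steps in the notation with $N$ samples, rounds indexed by $m$, sample weights $w_{mi}$, and base classifiers $G_m$:

- Step 1: $D_1 = (w_{11}, \ldots, w_{1i}, \ldots, w_{1N})$, $w_{1i} = \frac{1}{N}$, $i = 1, 2, \ldots, N$.
- Step 6: when $e_m \le \frac{1}{2}$, $\alpha_m \ge 0$.
- Step 7: $D_{m+1} = (w_{m+1,1}, \ldots, w_{m+1,i}, \ldots, w_{m+1,N})$, where $w_{m+1,i} = \frac{w_{mi}}{Z_m} \exp(-\alpha_m y_i G_m(x_i))$, $i = 1, 2, \ldots, N$, that is,

$$w_{m+1,i} = \begin{cases} \frac{w_{mi}}{Z_m} e^{-\alpha_m}, & G_m(x_i) = y_i \\ \frac{w_{mi}}{Z_m} e^{\alpha_m}, & G_m(x_i) \neq y_i \end{cases}$$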
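The eight steps of the AdaBoost procedure can be sketched in Python as follows. This is a minimal illustration, not the slides' own implementation: it assumes one-dimensional inputs, labels in $\{-1, +1\}$, and a threshold decision stump as the base learner. The names `fit_stump`, `adaboost`, and `predict` are hypothetical, and the clipping of the error away from zero is an added guard that does not appear in the pseudocode.

```python
import numpy as np

def fit_stump(x, y, w):
    """Return (weighted error, threshold, polarity) of the best stump.

    A stump predicts `polarity` where x < threshold and `-polarity` elsewhere.
    """
    xs = np.sort(x)
    thresholds = (xs[:-1] + xs[1:]) / 2.0   # midpoints between neighbours
    best = None
    for v in thresholds:
        for s in (1, -1):
            pred = np.where(x < v, s, -s)
            err = float(np.sum(w[pred != y]))
            if best is None or err < best[0]:
                best = (err, float(v), s)
    return best

def adaboost(x, y, T):
    """Run up to T rounds and return a list of (alpha, threshold, polarity)."""
    x, y = np.asarray(x), np.asarray(y)
    m = len(x)
    D = np.full(m, 1.0 / m)                     # step 1: uniform weights
    ensemble = []
    for _ in range(T):                          # step 2
        eps, v, s = fit_stump(x, y, D)          # steps 3-4: train, get error
        if eps > 0.5:                           # step 5
            break
        eps = max(eps, 1e-12)                   # assumption: avoid log(1/0)
        alpha = 0.5 * np.log((1 - eps) / eps)   # step 6
        pred = np.where(x < v, s, -s)
        D = D * np.exp(-alpha * y * pred)       # step 7: reweight samples
        D = D / D.sum()                         # divide by Z_t
        ensemble.append((float(alpha), v, s))
    return ensemble

def predict(ensemble, x):
    """H(x) = sign(sum_t alpha_t * h_t(x))."""
    x = np.asarray(x)
    score = sum(a * np.where(x < v, s, -s) for a, v, s in ensemble)
    return np.sign(score)
```

Calling `adaboost(x, y, T=3)` on ten points `x = 0, ..., 9` with labels `1, 1, 1, -1, -1, -1, 1, 1, 1, -1` returns three weighted stumps, and `predict` applies their weighted vote.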


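Tsinghua University

Example:

| Index | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| $x$ | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
| $y$ | 1 | 1 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | -1 |

- Initialization: $D_1 = (w_{11}, w_{12}, \ldots, w_{1,10})$, $w_{1i} = 0.1$, $i = 1, 2, \ldots, 10$.
- For $m = 1$:
- a. On the training data with weight distribution $D_1$, the threshold 2.5 gives the lowest classification error rate, so the base weak classifier is

$$G_1(x) = \begin{cases} 1, & x < 2.5 \\ -1, & x > 2.5 \end{cases}$$

- b. Error rate of $G_1(x)$: $e_1 = P(G_1(x_i) \neq y_i) = 0.3$
- c. Coefficient of $G_1(x)$: $\alpha_1 = \frac{1}{2} \log \frac{1 - e_1}{e_1} = 0.4236$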
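The arithmetic of steps b and c can be checked with a few lines of Python. This sketch assumes the ten training points are $x = 0, \ldots, 9$ with labels $1, 1, 1, -1, -1, -1, 1, 1, 1, -1$, and that $G_1$ predicts $+1$ below the threshold 2.5 and $-1$ above it; the function name `G1` is illustrative only.

```python
import math

# Assumed training data for the example: x = 0..9 and labels y.
x = list(range(10))
y = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
w1 = [0.1] * 10                      # initial weights D_1

def G1(v):
    """Base classifier with threshold 2.5."""
    return 1 if v < 2.5 else -1

# Step b: weighted error = total weight of the misclassified points.
e1 = sum(wi for xi, yi, wi in zip(x, y, w1) if G1(xi) != yi)

# Step c: coefficient alpha_1 = 0.5 * log((1 - e1) / e1).
alpha1 = 0.5 * math.log((1 - e1) / e1)

print(round(e1, 4), round(alpha1, 4))   # prints: 0.3 0.4236
```

The three misclassified points are $x = 6, 7, 8$, each carrying weight 0.1, which is where $e_1 = 0.3$ comes from.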


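Example (continued):

- d. Update the weight distribution of the training data:

$$D_2 = (w_{21}, \ldots, w_{2i}, \ldots, w_{2,10}), \qquad w_{2i} = \frac{w_{1i}}{Z_1} \exp(-\alpha_1 y_i G_1(x_i)), \quad i = 1, 2, \ldots, 10$$

$$D_2 = (0.0715, 0.0715, 0.0715, 0.0715, 0.0715, 0.0715, 0.1666, 0.1666, 0.1666, 0.0715)$$

$$f_1(x) = 0.4236\, G_1(x)$$

- The weak base classifier $G_1(x) = \operatorname{sign}[f_1(x)]$ has 3 misclassified points on the updated data set.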
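The weight update in step d can be reproduced numerically. The sketch below assumes the same ten points ($x = 0, \ldots, 9$, labels $1, 1, 1, -1, -1, -1, 1, 1, 1, -1$), the stump with threshold 2.5, and the rounded coefficient $\alpha_1 = 0.4236$; variable names are illustrative. The computed weights agree with the values printed on the slide to about three decimal places, with the small difference due to rounding.

```python
import math

# Assumed inputs carried over from round m = 1 of the example.
x = list(range(10))
y = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
w1 = [0.1] * 10
alpha1 = 0.4236
g1 = [1 if xi < 2.5 else -1 for xi in x]      # predictions of G_1

# Unnormalised weights: w_1i * exp(-alpha_1 * y_i * G_1(x_i)).
# Correct points shrink by exp(-alpha_1); mistakes grow by exp(alpha_1).
unnorm = [wi * math.exp(-alpha1 * yi * gi) for wi, yi, gi in zip(w1, y, g1)]

Z1 = sum(unnorm)                              # normalisation factor Z_1
w2 = [u / Z1 for u in unnorm]                 # new distribution D_2

# sign[f_1(x)] with f_1 = alpha_1 * G_1 has the same sign as G_1.
f1 = [alpha1 * gi for gi in g1]
n_errors = sum(1 for fi, yi in zip(f1, y) if math.copysign(1, fi) != yi)

print([round(v, 4) for v in w2], n_errors)
```

The three misclassified points ($x = 6, 7, 8$) end up with more than twice the weight of the others, so the next weak classifier is pushed to get them right.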
