Advanced Optimization (Fall 2023), Lecture 9: Optimistic Online Mirror Descent

Several Remarks
• Remark 1 (non-negativity assumption): When the online functions are non-negative, the small-loss quantity can be redefined by incorporating the minimal function value of each round.
• Remark 2 (smoothness assumption): Smoothness is necessary for a first-order method to obtain a small-loss regret bound. This can be proved via the online-to-batch conversion together with existing lower bounds for deterministic optimization.
• Remark 3 (handling the variance term): Take care with how the variance term is handled. For OGD here we use Lemma 1, while for Hedge in the PEA setting we use Lemma 2.


Towards a Unified Framework
• Previous small-loss bounds seem to be designed in an ad hoc manner.
• Is there a unified framework for obtaining problem-dependent bounds?
• A reflection: such bounds adapt to the niceness of the environment. What does a “nice” environment actually mean? The environment is “predictable”.


Outline
• Optimistic Online Mirror Descent
• A Unified Framework
• Small-Loss bound
• Gradient-Variance bound
• Gradient-Variation bound


Optimistic Online Learning
• Intuition: what if the environment is “predictable”? We can, to some extent, “guess” the next move.
• Example (Mon., Tues., Wed., Thurs., Fri.): if we are within the same season and there is no extreme weather, a reasonable guess is that it will still rain on Friday.

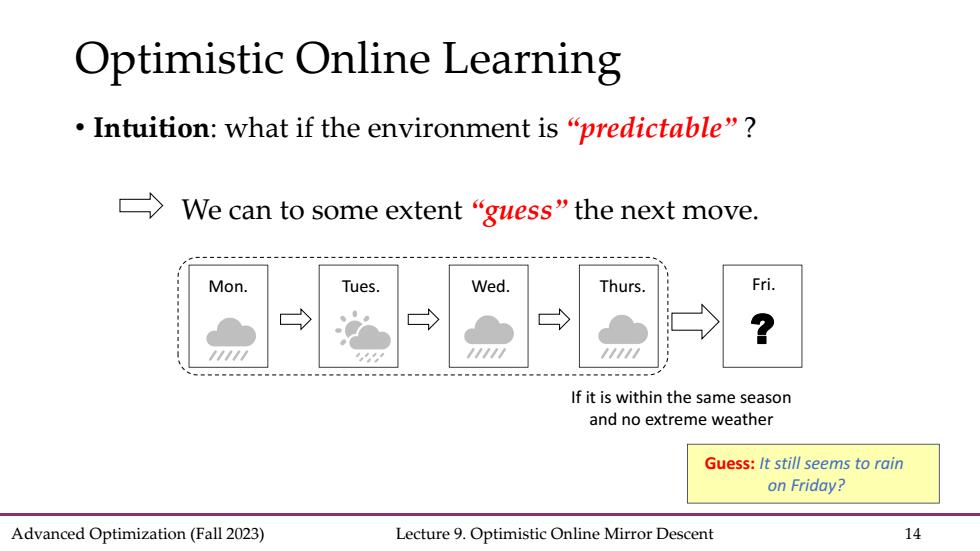


Our Previous Efforts
• Review of the OMD update:
$$x_{t+1} = \arg\min_{x \in \mathcal{X}} \; \eta_t \langle \nabla f_t(x_t), x \rangle + \mathcal{D}_\psi(x, x_t)$$
• OMD provides a unified framework for algorithmic design and regret analysis in worst-case scenarios.
• We aim to encode “predictable” information in the update so that the overall algorithm can adapt to the niceness of the environment.

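To make the reviewed OMD update concrete, here is a minimal sketch of a single step for two common regularizers. The function names, the choice of an L2 ball as the feasible domain, and the parameter `radius` are assumptions made for illustration and do not come from the lecture. With the squared Euclidean norm as regularizer, the update reduces to a projected gradient step (OGD). With negative entropy over the probability simplex, it reduces to a multiplicative-weights (Hedge) step.

```python
import math


def omd_step_euclidean(x, grad, eta, radius=1.0):
    """One OMD step with psi(x) = 0.5 * ||x||^2 over an L2 ball (assumed domain).

    The Bregman divergence is 0.5 * ||x - x_t||^2, so the arg min is the
    Euclidean projection of x_t - eta * grad onto the ball.
    """
    y = [xi - eta * gi for xi, gi in zip(x, grad)]
    norm = math.sqrt(sum(yi * yi for yi in y))
    if norm > radius:
        # Project back onto the ball by rescaling.
        y = [yi * radius / norm for yi in y]
    return y


def omd_step_entropy(p, grad, eta):
    """One OMD step with the negative-entropy regularizer over the simplex.

    The Bregman divergence is the KL divergence, so the arg min has the
    closed form p_i * exp(-eta * grad_i), renormalized to sum to one.
    """
    w = [pi * math.exp(-eta * gi) for pi, gi in zip(p, grad)]
    total = sum(w)
    return [wi / total for wi in w]


if __name__ == "__main__":
    # Gradient step that stays inside the unit ball.
    print(omd_step_euclidean([0.0, 0.0], [1.0, 0.0], eta=0.5))
    # Hedge-style step: the coordinate with the larger loss loses weight.
    print(omd_step_entropy([0.5, 0.5], [1.0, 0.0], eta=1.0))
```

Both functions use only the current gradient, which is the worst-case design described above. Any "predictable" information about the next round would have to enter as an additional input.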