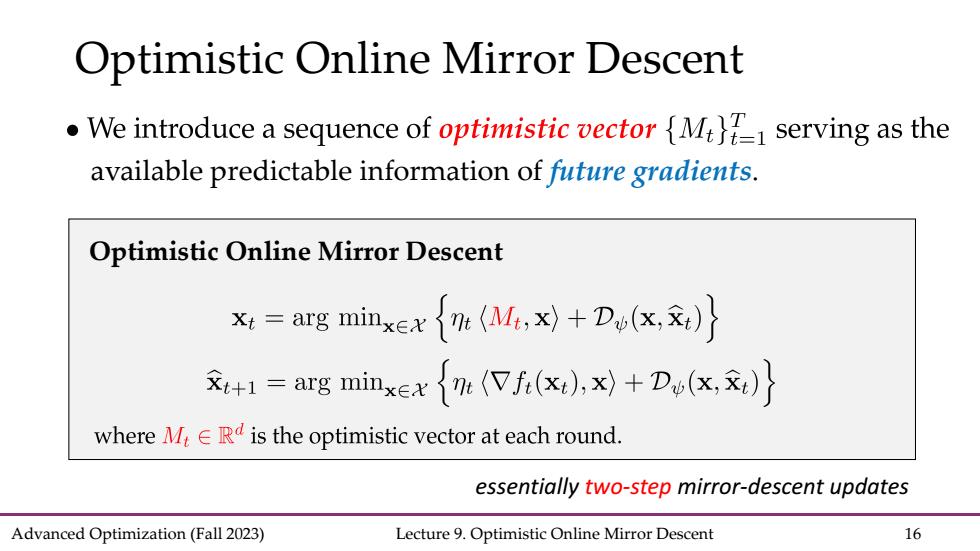

Optimistic Online Mirror Descent We introduce a sequence of optimistic vector {M serving as the available predictable information of future gradients. Optimistic Online Mirror Descent x=arg minxex+D(x) =arg minxexim(Vfi(x:),)+Do(x) where M E Ra is the optimistic vector at each round. essentially two-step mirror-descent updates Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 16

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 16 Optimistic Online Mirror Descent available predictable information of future gradients. Optimistic Online Mirror Descent essentially two-step mirror-descent updates

Understand Optimistic OMD x=arg minxexM)+Du(x,) 文+1=arg minx∈x{e(Vfx),x)+Dw(x,x)} t+2 Vf(x:) t+1 Vj+1(X+1) M+z X:+1 Xt+2 Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 17

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 17 Understand Optimistic OMD ……

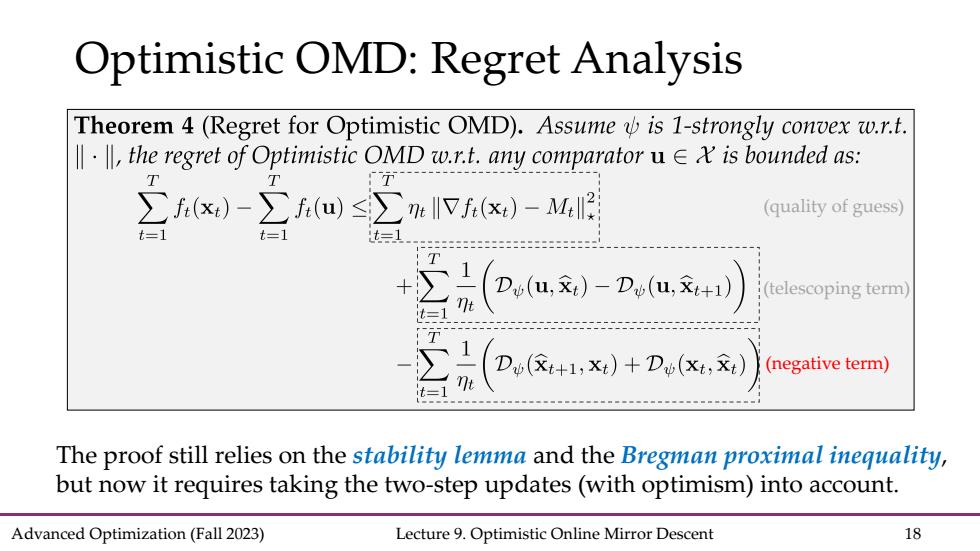

Optimistic OMD:Regret Analysis Theorem 4 (Regret for Optimistic OMD).Assume is 1-strongly convex w.r.t. the regret of Optimistic OMD w.r.t.any comparatoru is bounded as: ∑x)-m∑mIx)-Me (quality of guess) (u刻-Du文 (telescoping term) t=1 (negative term) The proof still relies on the stability lemma and the Bregman proximal inequality, but now it requires taking the two-step updates(with optimism)into account. Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 18

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 18 Optimistic OMD: Regret Analysis The proof still relies on the stability lemma and the Bregman proximal inequality, but now it requires taking the two-step updates (with optimism) into account. (negative term) (telescoping term) (quality of guess)

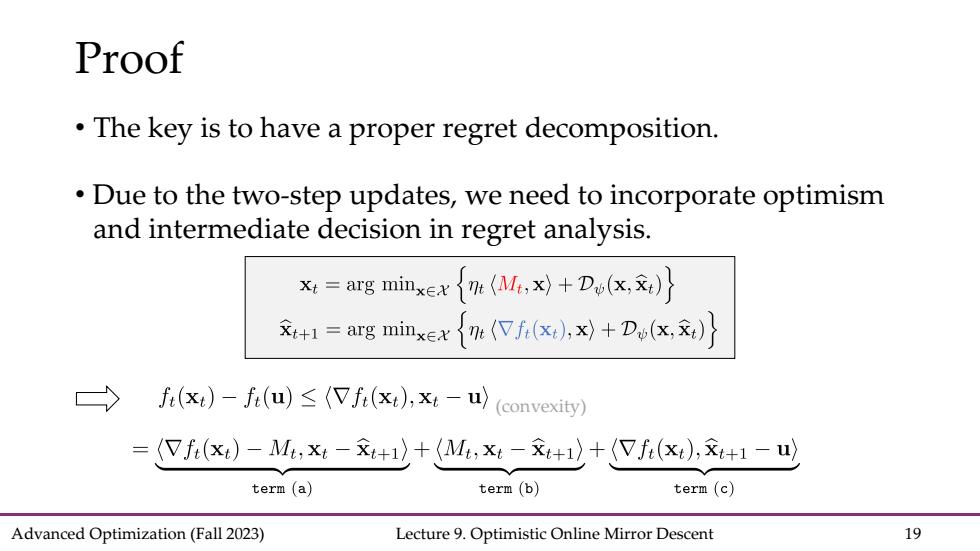

Proof The key is to have a proper regret decomposition. Due to the two-step updates,we need to incorporate optimism and intermediate decision in regret analysis. x:=arg minxexn(Mx)+D(x) =arg minxex m(fi(x:),x)+Du(x,) f(x)-f(u)≤(fi(xt),xt-u)(convexity) (V fi(x)-Mi;x-x+1)+(Mi,x:-+1)+(Vfi(x),x+1-u) term (a) term(b) term (c) Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 19

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 19 Proof • The key is to have a proper regret decomposition. • Due to the two-step updates, we need to incorporate optimism and intermediate decision in regret analysis. (convexity)

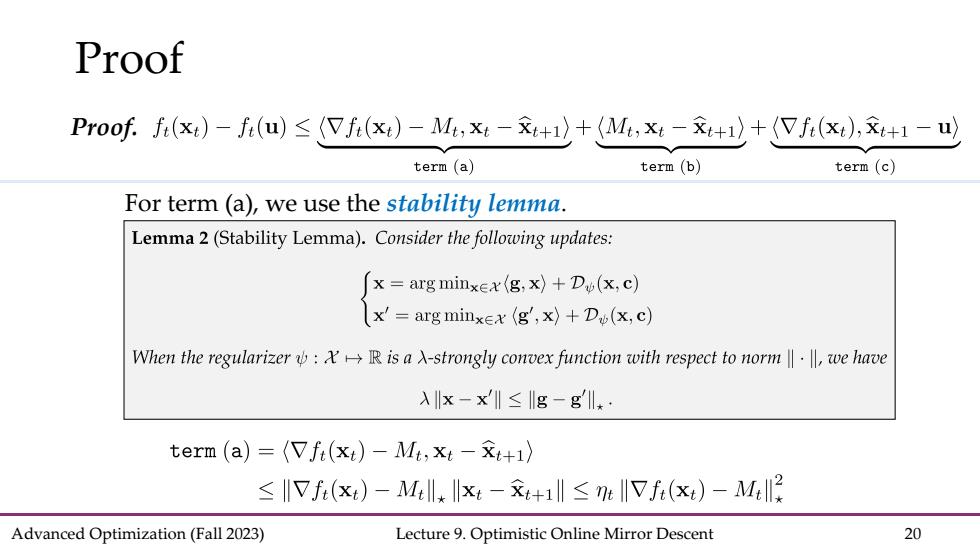

Proof Proof.fu(xt)-f(u)≤(Vf(x)-M,xt-x+1〉+(M,xt-xt+1)+(Tf(xt),+1-u) term(a) term (b) term(c) For term(a),we use the stability lemma. Lemma 2(Stability Lemma).Consider the following updates: x=arg minxex(g,x)+D(x,c) x'=arg minxex (g',x)+D(x,c) When the regularizer:X→R is a-strongly co1 ex function with respect to norm‖·‖l,we have λx-x'‖≤g-gl* term(a)=(Vfi(x)-Mi,x:-+) ≤I1Vf(x)-M4*小xE-x+1l≤形IVf(x)-M Advanced Optimization(Fall 2023) Lecture 9.Optimistic Online Mirror Descent 20

Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 20 Proof Proof.For term (a), we use the stability lemma