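Advanced Optimization (Fall 2023) Lecture 9. Optimistic Online Mirror Descent 6

Key Analysis in Self-confident Tuning

Proof. From the potential-based proof, we already know that

$$
\begin{aligned}
\sum_{t=1}^{T} \langle p_t, \ell_t \rangle - L_{T,i}
&\le \frac{\ln N}{\eta_T} + \sum_{t=1}^{T} \eta_t \langle p_t, \ell_t \rangle \\
&\le \sqrt{(\hat{L}_{T-1} + 1)\ln N} + \sum_{t=1}^{T} \frac{\sqrt{\ln N}\,\langle p_t, \ell_t \rangle}{\sqrt{\sum_{s=1}^{t-1} \langle p_s, \ell_s \rangle + 1}}
\end{aligned}
$$

where $\hat{L}_{t-1} = \sum_{s=1}^{t-1} \langle p_s, \ell_s \rangle$ and the second step plugs in the self-confident step size $\eta_t = \sqrt{\ln N / (1 + \hat{L}_{t-1})}$.

How do we bound the last sum? This is actually a common structure to handle.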
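
The inequality above can be observed on a small simulation. The sketch below is a minimal illustration, not the lecture's code, assuming Hedge with weights $p_{t,i} \propto \exp(-\eta_t L_{t-1,i})$, the self-confident step size $\eta_t = \sqrt{\ln N/(1+\hat L_{t-1})}$, and losses in $[0,1]^N$; the function name `hedge_self_confident` is hypothetical. It returns $\hat L_T$, $\min_i L_{T,i}$, and the right-hand side of the second inequality so the two sides can be compared.

```python
import math
import random


def hedge_self_confident(losses):
    """Hedge with the (assumed) step size eta_t = sqrt(ln N / (1 + hat_L_{t-1})).

    losses: list of T loss vectors in [0, 1]^N.
    Returns (hat_L_T, min_i L_{T,i}, bound), where bound is
    sqrt((hat_L_{T-1} + 1) ln N) + sqrt(ln N) * sum_t <p_t, l_t> / sqrt(1 + hat_L_{t-1}).
    """
    n = len(losses[0])
    log_n = math.log(n)
    cum = [0.0] * n        # L_{t-1,i}: cumulative loss of expert i
    hat_l = 0.0            # hat_L_{t-1}: learner's cumulative loss so far
    hat_l_prev = 0.0       # holds hat_L_{T-1} once the loop finishes
    weighted = 0.0         # sum_t <p_t, l_t> / sqrt(1 + hat_L_{t-1})
    for loss in losses:
        eta = math.sqrt(log_n / (1.0 + hat_l))
        shift = min(cum)   # subtract the minimum so exp() does not underflow
        w = [math.exp(-eta * (c - shift)) for c in cum]
        z = sum(w)
        inner = sum(wi / z * li for wi, li in zip(w, loss))  # <p_t, l_t>
        weighted += inner / math.sqrt(1.0 + hat_l)
        hat_l_prev = hat_l
        hat_l += inner
        cum = [c + li for c, li in zip(cum, loss)]
    bound = math.sqrt((hat_l_prev + 1.0) * log_n) + math.sqrt(log_n) * weighted
    return hat_l, min(cum), bound


random.seed(0)
T, N = 2000, 10
losses = [[random.random() for _ in range(N)] for _ in range(T)]
hat_L, best, bound = hedge_self_confident(losses)
print(f"regret = {hat_L - best:.2f}, bound = {bound:.2f}")
```

Since the displayed bound holds against every expert $i$, comparing against $\min_i L_{T,i}$ is enough.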


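Small-Loss Bound for PEA: Proof

Proof. From the previous potential-based proof, we already know that

$$
\sum_{t=1}^{T} \langle p_t, \ell_t \rangle - L_{T,i} \le \sqrt{(\hat{L}_{T-1} + 1)\ln N} + \sqrt{\ln N} \sum_{t=1}^{T} \frac{\langle p_t, \ell_t \rangle}{\sqrt{1 + \sum_{s=1}^{t-1} \langle p_s, \ell_s \rangle}}.
$$

Lemma 2. Let $a_1, a_2, \ldots, a_T$ be non-negative real numbers. Then

$$
\sum_{t=1}^{T} \frac{a_t}{\sqrt{1 + \sum_{s=1}^{t-1} a_s}} \le 4\sqrt{1 + \sum_{t=1}^{T} a_t} + \max_{t \in [T]} a_t.
$$

Applying Lemma 2 with $a_t = \langle p_t, \ell_t \rangle \in [0, 1]$ and writing $\hat{L}_T = \sum_{t=1}^{T} \langle p_t, \ell_t \rangle$ gives

$$
\begin{aligned}
\hat{L}_T - L_{T,i} &\le \sqrt{(\hat{L}_{T-1} + 1)\ln N} + \sqrt{\ln N}\left(4\sqrt{1 + \hat{L}_T} + 1\right) \qquad (\langle p_t, \ell_t \rangle \le 1) \\
&\le \sqrt{(\hat{L}_T + 1)\ln N} + \sqrt{\ln N}\left(4\sqrt{1 + \hat{L}_T} + 1\right) \qquad (\hat{L}_{T-1} \le \hat{L}_T)
\end{aligned}
$$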
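
A quick numerical sanity check of Lemma 2 (not a proof) on random non-negative sequences, including occasional large jumps, is sketched below; the helper `lemma2_sides` and the sampling scheme are hypothetical choices for illustration. The $\max_{t} a_t$ term cannot be dropped in general: with $T = 1$ and $a_1 = M$, the left side equals $M$, which exceeds $4\sqrt{1+M}$ once $M$ is large.

```python
import math
import random


def lemma2_sides(a):
    """Return (LHS, RHS) of Lemma 2 for a non-negative sequence a_1, ..., a_T."""
    lhs, prefix = 0.0, 0.0           # prefix = a_1 + ... + a_{t-1}
    for a_t in a:
        lhs += a_t / math.sqrt(1.0 + prefix)
        prefix += a_t
    rhs = 4.0 * math.sqrt(1.0 + prefix) + max(a)
    return lhs, rhs


random.seed(0)
worst = 0.0
for _ in range(2000):
    T = random.randint(1, 60)
    # mostly values in [0, 1], with occasional jumps of size up to 1e4
    a = [random.random() * (1e4 if random.random() < 0.05 else 1.0) for _ in range(T)]
    lhs, rhs = lemma2_sides(a)
    worst = max(worst, lhs / rhs)
print(f"largest LHS/RHS ratio observed: {worst:.3f}")
```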


Small-Loss Bounds for OCO

Definition 4 (Small Loss). The small-loss quantity of the OCO problem (online functions $f_t : \mathbb{R}^d \to \mathbb{R}$) is defined as

$$
F_T = \min_{x \in \mathcal{X}} \sum_{t=1}^{T} f_t(x).
$$

One essential property for small-loss bounds in OCO is the self-bounding property.

Corollary 1. For an $L$-smooth and non-negative function $f : \mathbb{R}^d \to \mathbb{R}$, we have that

$$
\|\nabla f(x)\|_2 \le \sqrt{2 L f(x)}, \quad \forall x \in \mathcal{X}.
$$


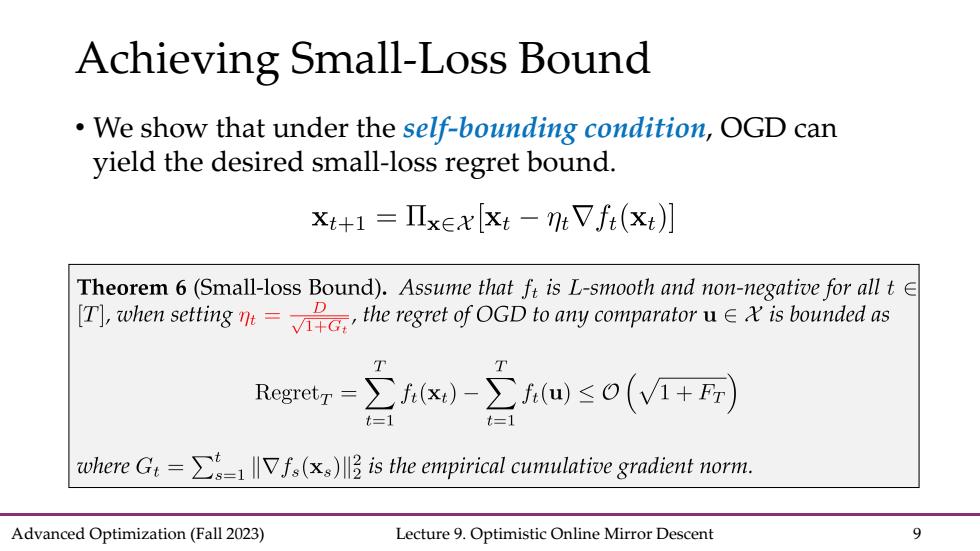
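
Corollary 1 can be checked numerically on two standard non-negative smooth losses, assumed here purely for illustration: the squared loss $\frac{1}{2}(a^\top x - b)^2$, which is $\|a\|_2^2$-smooth and meets the bound with equality, and the logistic loss $\ln(1 + \exp(-y\,a^\top x))$, which is $\|a\|_2^2/4$-smooth. The helper names are hypothetical; the script reports the smallest normalized gap $\sqrt{2Lf(x)} - \|\nabla f(x)\|_2$ over random instances, which should be non-negative up to rounding.

```python
import math
import random


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def norm(u):
    return math.sqrt(dot(u, u))


def squared_loss(a, b, x):
    """f(x) = 0.5 (a^T x - b)^2; returns (f, grad f, smoothness L = ||a||^2)."""
    r = dot(a, x) - b
    return 0.5 * r * r, [r * ai for ai in a], dot(a, a)


def logistic_loss(a, y, x):
    """f(x) = ln(1 + exp(-y a^T x)); returns (f, grad f, smoothness L = ||a||^2 / 4)."""
    m = y * dot(a, x)
    f = math.log1p(math.exp(-m))
    s = 1.0 / (1.0 + math.exp(m))  # sigma(-m), the derivative factor
    return f, [-y * s * ai for ai in a], dot(a, a) / 4.0


random.seed(0)
worst = float("inf")
for _ in range(5000):
    a = [random.gauss(0, 1) for _ in range(5)]
    x = [random.gauss(0, 1) for _ in range(5)]
    b = random.gauss(0, 1)
    y = random.choice([-1.0, 1.0])
    for f, g, L in (squared_loss(a, b, x), logistic_loss(a, y, x)):
        gap = math.sqrt(2.0 * L * f) - norm(g)   # Corollary 1 says gap >= 0
        worst = min(worst, gap / (1.0 + norm(g)))
print(f"smallest normalized gap: {worst:.2e}")
```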

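Achieving Small-Loss Bound

We show that under the self-bounding condition, OGD can yield the desired small-loss regret bound. The OGD update is

$$
x_{t+1} = \Pi_{\mathcal{X}}\left[x_t - \eta_t \nabla f_t(x_t)\right].
$$

Theorem 6 (Small-loss Bound). Assume that $f_t$ is $L$-smooth and non-negative for all $t \in [T]$. When setting $\eta_t = \frac{D}{\sqrt{1 + G_t}}$, the regret of OGD to any comparator $u \in \mathcal{X}$ is bounded as

$$
\mathrm{Regret}_T = \sum_{t=1}^{T} f_t(x_t) - \sum_{t=1}^{T} f_t(u) \le O\left(\sqrt{1 + F_T}\right),
$$

where $G_t = \sum_{s=1}^{t} \|\nabla f_s(x_s)\|_2^2$ is the empirical cumulative gradient norm and $D$ is the diameter of $\mathcal{X}$.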


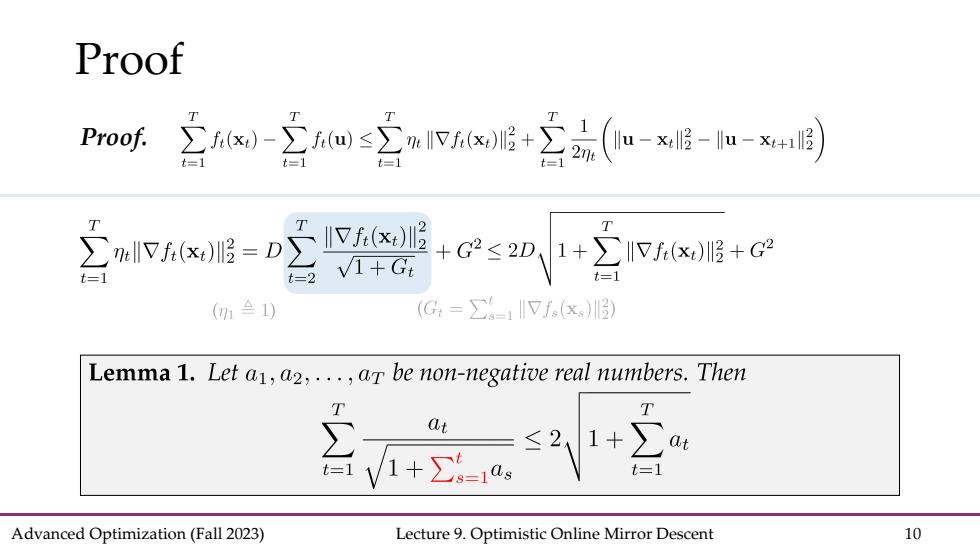
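
A minimal sketch of projected OGD with an empirical-gradient step size follows. It assumes $\eta_t = D/\sqrt{1+G_t}$ with $G_t = \sum_{s \le t}\|\nabla f_s(x_s)\|_2^2$, a Euclidean ball of radius $R$ as $\mathcal{X}$ (so $D = 2R$), and squared losses $f_t(x) = \frac{1}{2}(a_t^\top x - b_t)^2$ generated around a fixed comparator $u$ with small noise; all of these choices and the helper names are hypothetical. It compares the regret against $u$ with the explicit constant $\frac{3D}{2}\sqrt{1+G_T}$, which follows from the standard OGD bound $\frac{D^2}{2\eta_T} + \sum_t \frac{\eta_t}{2}\|\nabla f_t(x_t)\|_2^2$ together with Lemma 1.

```python
import math
import random


def project_ball(x, radius):
    """Euclidean projection onto the ball {x : ||x||_2 <= radius}."""
    nrm = math.sqrt(sum(xi * xi for xi in x))
    return list(x) if nrm <= radius else [xi * radius / nrm for xi in x]


def ogd_small_loss(rounds, radius):
    """x_{t+1} = Proj[x_t - eta_t grad f_t(x_t)] with eta_t = D / sqrt(1 + G_t).

    rounds: list of (a_t, b_t) with f_t(x) = 0.5 * (a_t^T x - b_t)^2.
    Returns (sum_t f_t(x_t), G_T).
    """
    diam = 2.0 * radius                 # D: diameter of the ball
    x = [0.0] * len(rounds[0][0])
    G = 0.0                             # G_t = sum_{s <= t} ||grad f_s(x_s)||^2
    total = 0.0
    for a, b in rounds:
        r = sum(ai * xi for ai, xi in zip(a, x)) - b
        total += 0.5 * r * r
        g = [r * ai for ai in a]        # gradient of f_t at x_t
        G += sum(gi * gi for gi in g)
        eta = diam / math.sqrt(1.0 + G)
        x = project_ball([xi - eta * gi for xi, gi in zip(x, g)], radius)
    return total, G


random.seed(0)
d, T, R = 5, 3000, 1.0
u = project_ball([random.gauss(0, 1) for _ in range(d)], 0.8 * R)  # comparator in X
rounds = []
for _ in range(T):
    a = [random.gauss(0, 1) for _ in range(d)]
    b = sum(ai * ui for ai, ui in zip(a, u)) + random.gauss(0, 0.1)  # small noise
    rounds.append((a, b))
loss_alg, G_T = ogd_small_loss(rounds, R)
loss_u = sum(0.5 * (sum(ai * ui for ai, ui in zip(a, u)) - b) ** 2 for a, b in rounds)
regret = loss_alg - loss_u
print(f"regret vs u = {regret:.2f}, 1.5*D*sqrt(1+G_T) = {1.5 * 2 * R * math.sqrt(1 + G_T):.2f}")
```

The small noise keeps $\sum_t f_t(u)$ small, which is the regime a small-loss bound targets; the self-bounding property is what turns the $G_T$-dependent bound into one in terms of the losses.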

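Proof

Proof.

$$
\begin{aligned}
\sum_{t=1}^{T} f_t(x_t) - \sum_{t=1}^{T} f_t(u)
&\le \sum_{t=1}^{T} \langle \nabla f_t(x_t), x_t - u \rangle \\
&\le \sum_{t=1}^{T} \frac{1}{2\eta_t}\left(\|x_t - u\|_2^2 - \|x_{t+1} - u\|_2^2\right) + \sum_{t=1}^{T} \frac{\eta_t}{2}\|\nabla f_t(x_t)\|_2^2 \\
&\le \frac{D^2}{2\eta_T} + \sum_{t=1}^{T} \frac{\eta_t}{2}\|\nabla f_t(x_t)\|_2^2 \\
&\le O\left(\sqrt{1 + G_T}\right) \qquad \left(G_T = \sum_{t=1}^{T} \|\nabla f_t(x_t)\|_2^2\right)
\end{aligned}
$$

Lemma 1. Let $a_1, a_2, \ldots, a_T$ be non-negative real numbers. Then

$$
\sum_{t=1}^{T} \frac{a_t}{\sqrt{1 + \sum_{s=1}^{t} a_s}} \le 2\sqrt{1 + \sum_{t=1}^{T} a_t}.
$$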
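
Lemma 1 follows by telescoping: with $S_t = \sum_{s \le t} a_s$, each term satisfies $\frac{a_t}{\sqrt{1+S_t}} \le 2\left(\sqrt{1+S_t} - \sqrt{1+S_{t-1}}\right)$. Because the denominator already contains $a_t$, no extra $\max_t a_t$ term is needed, unlike Lemma 2. The sketch below is a numerical sanity check on random heavy-tailed sequences (a hypothetical sampling scheme), not a proof.

```python
import math
import random


def lemma1_sides(a):
    """Return (LHS, RHS) of Lemma 1; the denominator includes a_t itself."""
    lhs, prefix = 0.0, 0.0
    for a_t in a:
        prefix += a_t                          # S_t = a_1 + ... + a_t
        lhs += a_t / math.sqrt(1.0 + prefix)
    return lhs, 2.0 * math.sqrt(1.0 + prefix)


random.seed(0)
worst = 0.0
for _ in range(2000):
    T = random.randint(1, 60)
    # exponential draws, occasionally scaled up by 1e4 to create large jumps
    a = [random.expovariate(1.0) * (1e4 if random.random() < 0.05 else 1.0) for _ in range(T)]
    lhs, rhs = lemma1_sides(a)
    worst = max(worst, lhs / rhs)
print(f"largest LHS/RHS ratio observed: {worst:.3f}")
```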
