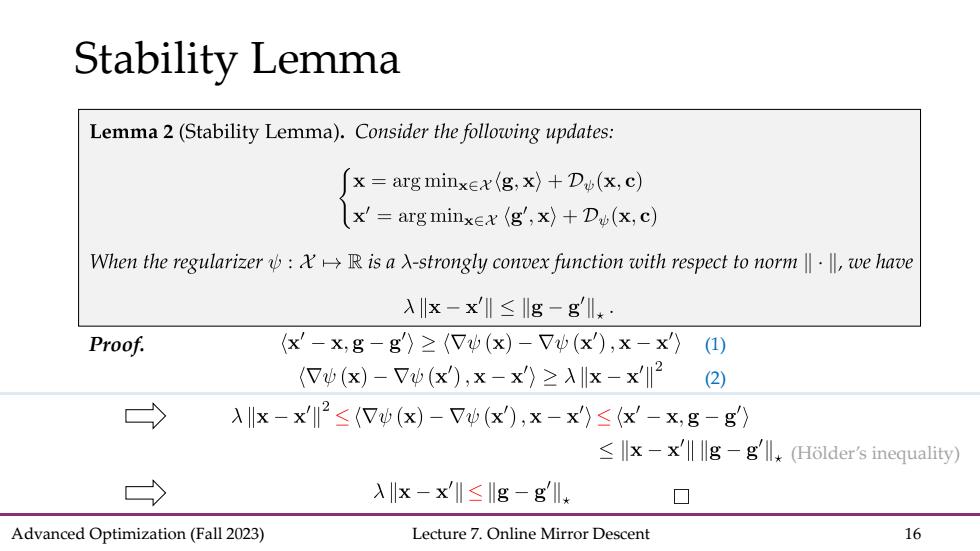

Stability Lemma Lemma 2(Stability Lemma).Consider the following updates: x=arg minxex(g:x)+D (x,c) x'=arg minxex (g',x)+D (x,c) When the regularizer:XR is a X-strongly convex function with respect to norm,we have 入x-x'I≤g-gl*: Proof. x'-x,g-g〉≥(7(x)-7(x),x-x')(1) Vψ(x)-Vb(x),x-x)≥Ax-x12 (2) > λx-x≤(7b(x)-7b(x),x-x)≤x-x,g-g) <lx-x'll lg-g'll,(Holder's inequality) > λx-x'l≤g-gl 口 Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 16

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 16 Stability Lemma (2) (1) (Hölder’s inequality) Proof

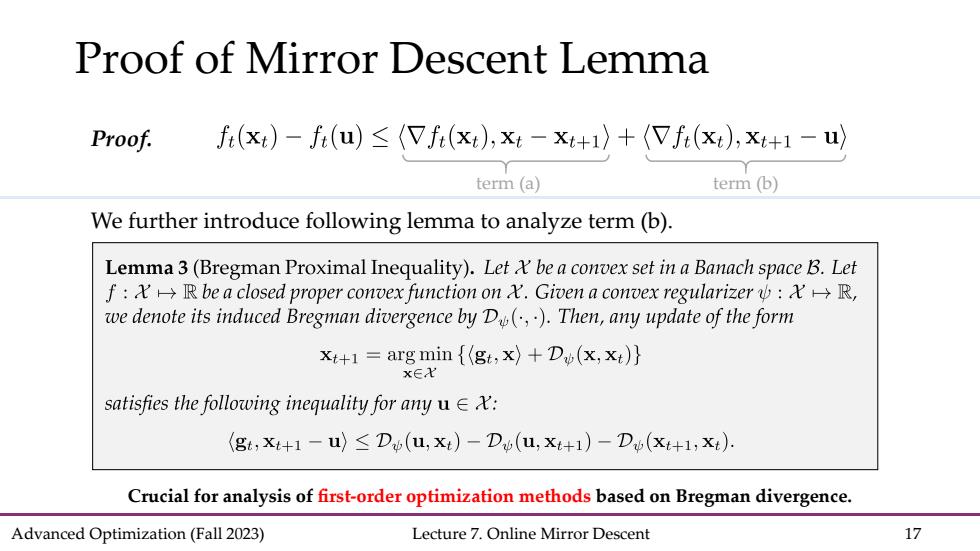

Proof of Mirror Descent Lemma Proof. fi(x:)-fi(u)<(Vfi(x:),xi-x+1)+Vfi(x:),x+1-u) term (a) term (b) We further introduce following lemma to analyze term(b). Lemma 3(Bregman Proximal Inequality).Let Y be a convex set in a Banach space B.Let f:X→R be a closed proper convex function onX.Given a convex regularizerψ:X→R, we denote its induced Bregman divergence by D(,).Then,any update of the form x+1 arg min {(gt,x)+D(x,x:)} x∈X satisfies the following inequality for any uX: (gt;x+1-u)<D(u,xt)-Do(u,xt+1)-D(xi+1;xi). Crucial for analysis of first-order optimization methods based on Bregman divergence. Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 17

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 17 Proof of Mirror Descent Lemma Proof. We further introduce following lemma to analyze term (b). term (a) term (b) Crucial for analysis of first-order optimization methods based on Bregman divergence

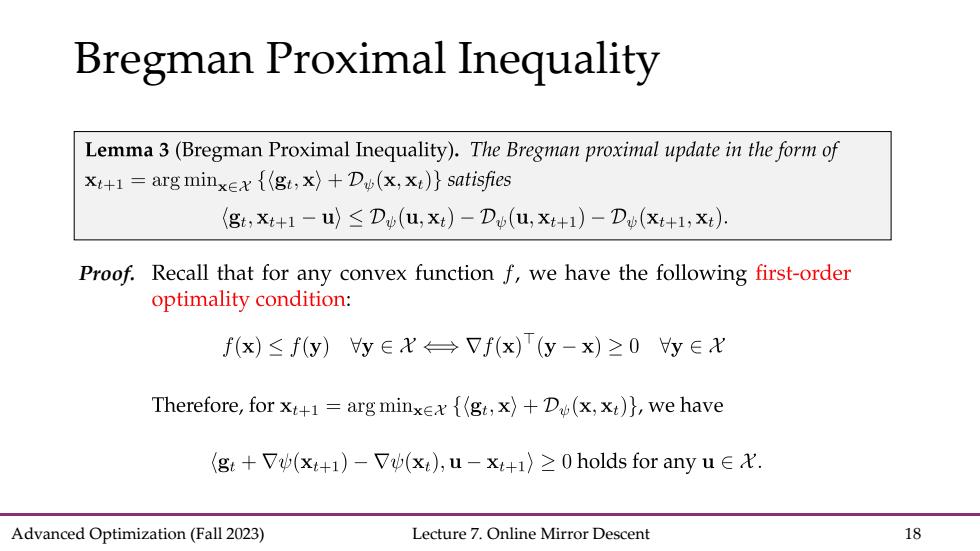

Bregman Proximal Inequality Lemma 3(Bregman Proximal Inequality).The Bregman proximal update in the form of Xt+1=arg minxex {(gt,x)+Du(x,xt)}satisfies (gt,xt+1-u)≤Db(u,xt)-Db(u,xt+1)-Db(xt+1,xt). Proof.Recall that for any convex function f,we have the following first-order optimality condition: f(x)≤f(y)y∈X→Vf(x)T(y-x)≥0y∈X Therefore,for x+1=arg minxex {(gt,x)+D(x,x)},we have (gt+7(x+1)-7(xt),u-x+i〉≥0 holds for any u∈X. Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 18

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 18 Bregman Proximal Inequality Proof

Bregman Proximal Inequality Lemma 3(Bregman Proximal Inequality).The Bregman proximal update in the form of x+1=arg minxex {(gt,x)+Du(x,x)}satisfies (gt,X:+1-u)<Du(u,xi)-Do(u,xt+1)-Du(xt+1;xi). Proof. (gt +Vu(x+1)-Vu(x),u-x+1)>0holds for any uE On the other hand,the right side of Lemma 3 is: Dv(u,xt)-Dv(u,xi+1)-Dv(Xt+i;xi) =-xt)-(V(xt),u)-)+(41)+(7(xt+1),u-xt+1〉》 -(x+1)+)+((xt),xt+1一x) =(V(xt+1)-7(xt),u-xt+1). Rearranging the terms can finish the proof. 口 Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 19

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 19 Bregman Proximal Inequality Proof

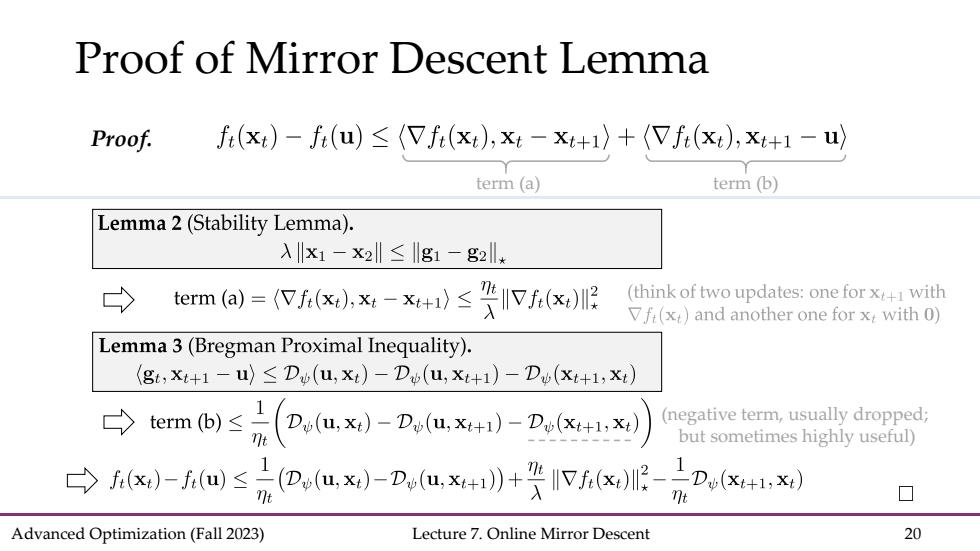

Proof of Mirror Descent Lemma Proof. f(x)-f(u)≤(Tf(xt),xt-xt+1)+(f(x),xt+1-u term (a) term (b) Lemma 2(Stability Lemma). 入x1-X2‖≤g1-g2l* tema)=W(x),x-x+)≤IVfx,服 (think of two updates:one for x with Vfi(x)and another one for x,with 0) Lemma 3(Bregman Proximal Inequality). (gi,Xi+1-u)<Du(u,xi)-Du(u,Xi+1)-Du(xi+1;xi) temb)≤L(Dw(u,x-Do(u,x+i)-Du(x+1,x (negative term,usually dropped; nt but sometimes highly useful) →ix)-i@≤D,(ux)-D,(ux+》+张IVx训2-D(x+,x刘 t Advanced Optimization(Fall 2023) Lecture 7.Online Mirror Descent 20

Advanced Optimization (Fall 2023) Lecture 7. Online Mirror Descent 20 Proof of Mirror Descent Lemma Proof. term (a) term (b) (negative term, usually dropped; but sometimes highly useful)