7 Analysis of neural networks: a random matrix approach

The inherent intractability of neural network performance mainly originates from the nonlinearity of the neural activations (as well as from learning by back-propagation of the error). With this observation in mind, we propose here a theoretical study of the performance of large-dimensional neural networks (large in the sense of both the dataset size and the number of neurons).


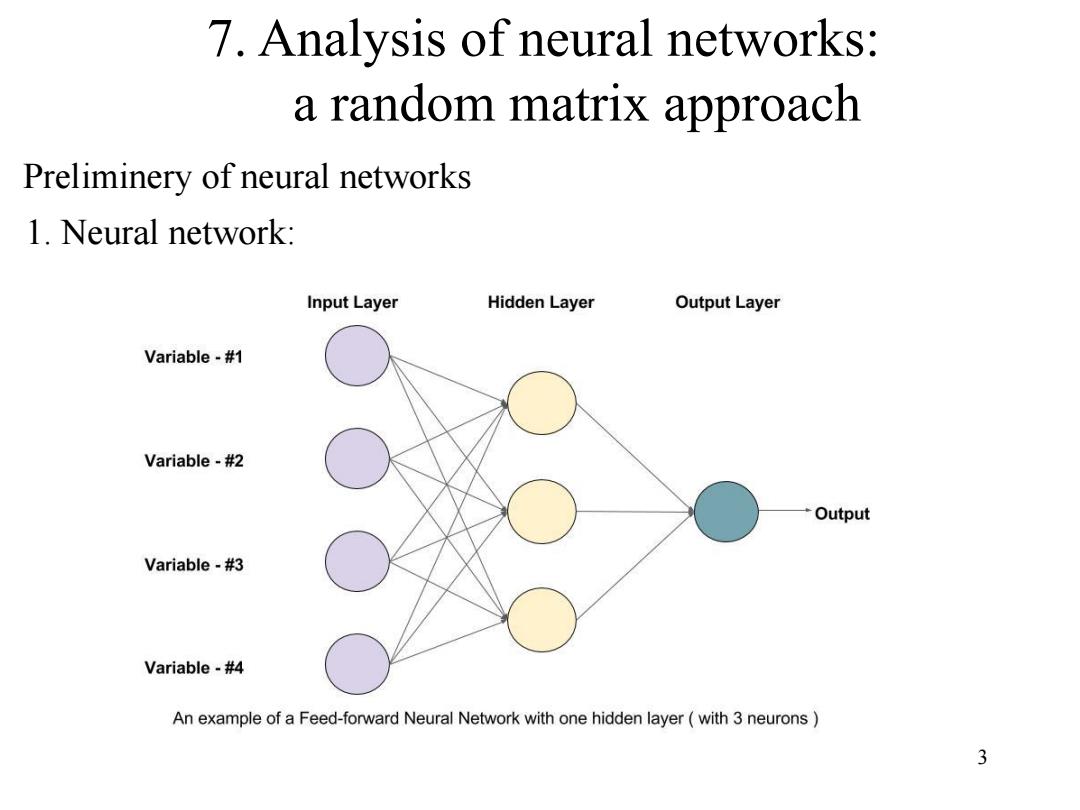

Preliminaries of neural networks

1. Neural network

[Figure: An example of a feed-forward neural network with one hidden layer (with 3 neurons). Input layer: Variable #1 to Variable #4; hidden layer; output layer: Output.]


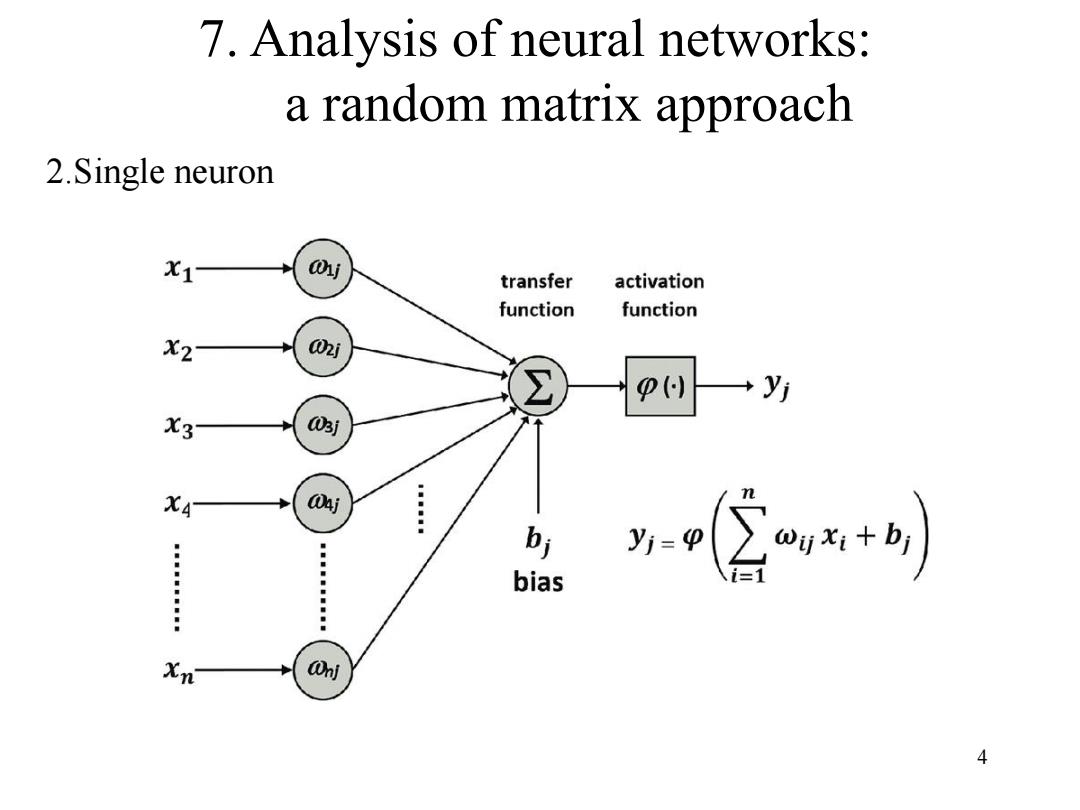
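To make the figure concrete, here is a minimal NumPy sketch of the forward pass through the pictured network, which has 4 inputs, one hidden layer of 3 neurons, and 1 output. The sigmoid hidden activation, the linear output neuron, the random weights, and the function names `sigmoid` and `forward` are all assumptions for illustration. The slide does not specify them.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation, applied element-wise (assumed choice)."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, W1, b1, W2, b2):
    """Forward pass of a one-hidden-layer feed-forward network.

    x  : (4,)   input vector (Variable #1 to Variable #4)
    W1 : (3, 4) input-to-hidden weights, b1 : (3,) hidden biases
    W2 : (1, 3) hidden-to-output weights, b2 : (1,) output bias
    """
    h = sigmoid(W1 @ x + b1)   # hidden layer: 3 neurons
    return W2 @ h + b2         # linear output layer: 1 neuron

# Hypothetical random weights, just to run the pass once
rng = np.random.default_rng(0)
W1, b1 = rng.standard_normal((3, 4)), np.zeros(3)
W2, b2 = rng.standard_normal((1, 3)), np.zeros(1)
x = np.array([0.5, -1.0, 2.0, 0.0])
y = forward(x, W1, b1, W2, b2)
print(y.shape)  # (1,)
```

Training such a network (e.g. by back-propagation) would adjust `W1`, `b1`, `W2`, `b2`. Only the forward computation is shown here.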

2. Single neuron

[Figure: a single neuron j. Inputs x_1, ..., x_n with weights w_{1j}, ..., w_{nj} and a bias b_j feed a transfer function, followed by an activation function φ(·) that produces the neuron's output.]


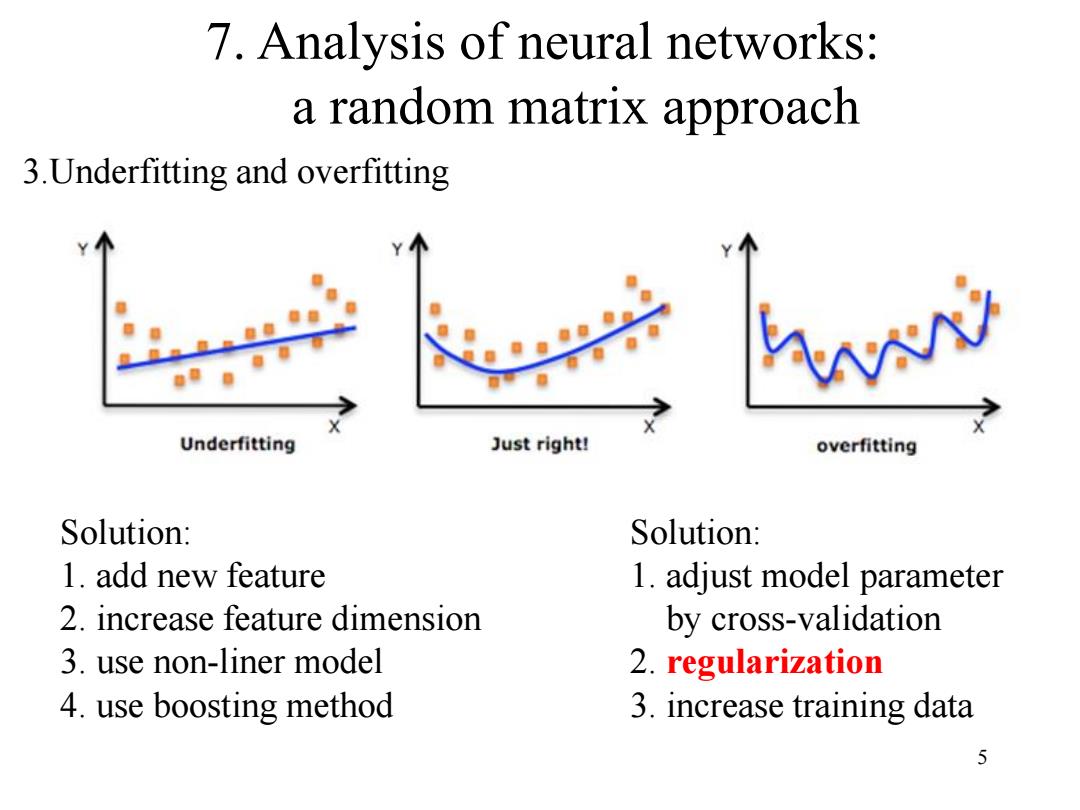
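Reading the figure in the usual way, the neuron computes $y_j = \varphi\big(\sum_{i=1}^{n} w_{ij} x_i + b_j\big)$. The transfer function forms the weighted sum of the inputs plus the bias, and the activation function $\varphi$ is applied to the result. The following is a minimal sketch, assuming $\varphi = \tanh$ by default (the slide does not fix a particular activation) and using a hypothetical helper `neuron`.

```python
import numpy as np

def neuron(x, w, b, phi=np.tanh):
    """Output of a single neuron j.

    Transfer function:   net_j = sum_i w_ij * x_i + b_j  (weighted sum plus bias)
    Activation function: y_j = phi(net_j)
    """
    net = np.dot(w, x) + b
    return phi(net)

x = np.array([1.0, 2.0, 3.0])    # inputs x_1..x_3
w = np.array([0.5, -0.25, 0.1])  # weights w_1j..w_3j
b = 0.2                          # bias b_j
print(neuron(x, w, b))           # net_j = 0.5, so tanh(0.5) ≈ 0.4621
```

Passing a different `phi` (e.g. a sigmoid or ReLU) changes only the activation step. The transfer step stays the same.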

3. Underfitting and overfitting

[Figure: three panels titled "Underfitting", "Just right!" and "Overfitting".]

Solutions for underfitting:
1. Add new features.
2. Increase the feature dimension.
3. Use a nonlinear model.
4. Use a boosting method.

Solutions for overfitting:
1. Adjust the model parameters by cross-validation.
2. Apply regularization.
3. Increase the amount of training data.

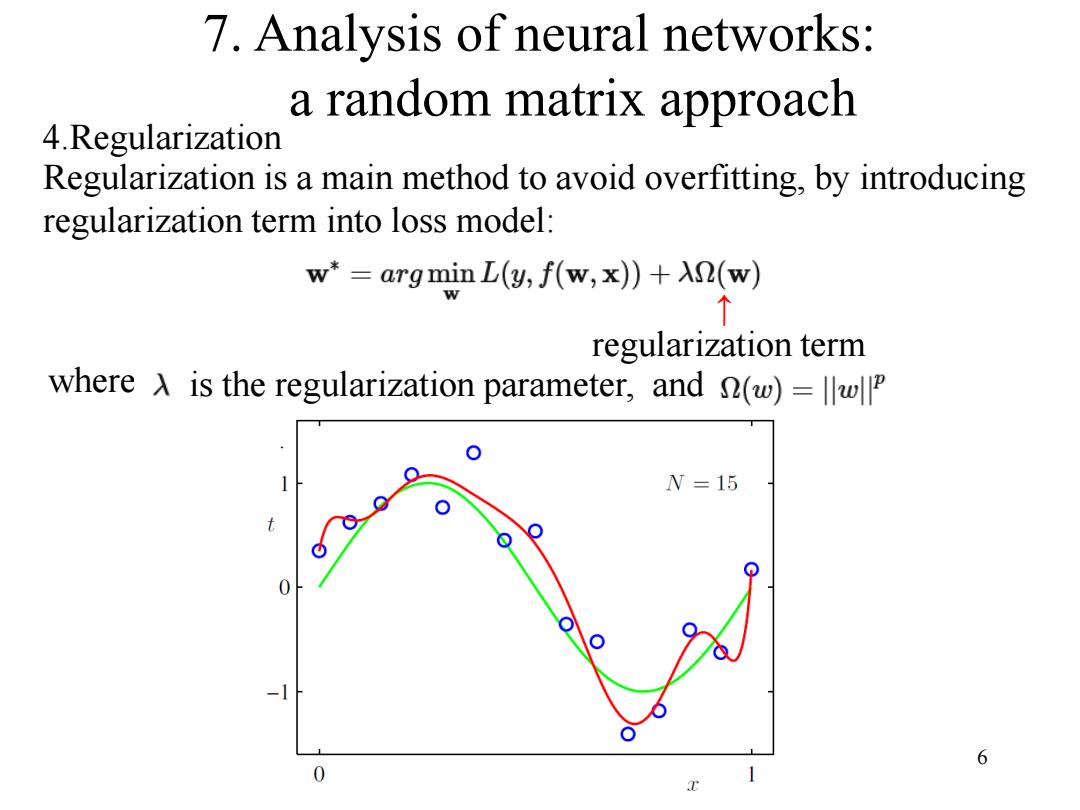
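One of the remedies listed for overfitting is adjusting a model parameter by cross-validation. This can be sketched with a polynomial fit whose degree plays the role of that parameter: too low a degree underfits, and too high a degree tends to overfit. The synthetic data (noisy samples of $\sin(2\pi x)$), the candidate degrees, and the helper `kfold_cv_error` are assumptions for illustration. The sketch uses only NumPy's `polyfit` and `polyval`.

```python
import numpy as np

def kfold_cv_error(x, y, degree, k=5, seed=0):
    """Mean squared validation error of a degree-`degree` polynomial fit,
    estimated by k-fold cross-validation."""
    idx = np.random.default_rng(seed).permutation(len(x))
    folds = np.array_split(idx, k)
    errors = []
    for i in range(k):
        val = folds[i]                                    # held-out fold
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        coeffs = np.polyfit(x[train], y[train], degree)   # fit on the other folds
        pred = np.polyval(coeffs, x[val])
        errors.append(np.mean((pred - y[val]) ** 2))
    return float(np.mean(errors))

# Synthetic data (assumed): noisy samples of sin(2*pi*x) on [0, 1]
rng = np.random.default_rng(1)
x = rng.uniform(0.0, 1.0, 30)
y = np.sin(2 * np.pi * x) + 0.2 * rng.standard_normal(30)

for degree in (1, 3, 9):
    print(degree, kfold_cv_error(x, y, degree))

# Keep the degree with the smallest cross-validated error
best = min(range(1, 10), key=lambda d: kfold_cv_error(x, y, d))
print("degree chosen by CV:", best)
```

On data like this, a straight line (degree 1) cannot follow the sine curve and underfits. Degree 9, fitted on only 24 training points per fold, is prone to overfitting.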

4. Regularization

Regularization is one of the main methods for avoiding overfitting. It works by adding a regularization term to the loss:

$$w^* = \arg\min_{w} \; L\big(y, f(w, x)\big) + \lambda R(w),$$

where $\lambda R(w)$ is the regularization term, $\lambda$ is the regularization parameter, and $R(w) = \|w\|_2^2$.

[Figure: plot labeled N = 15.]

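As a concrete instance of the regularized objective, assume a linear model $f(w, x) = x^\top w$, the squared loss $\|y - Xw\|_2^2$, and the squared $\ell_2$ penalty $R(w) = \|w\|_2^2$ (ridge regression). Setting the gradient to zero gives the closed form $w^* = (X^\top X + \lambda I)^{-1} X^\top y$. The sketch below uses a hypothetical helper `ridge` and synthetic data to show that increasing $\lambda$ shrinks the norm of $w^*$.

```python
import numpy as np

def ridge(X, y, lam):
    """Minimize ||y - X w||^2 + lam * ||w||^2 (squared loss + L2 penalty).

    Setting the gradient -2 X^T (y - X w) + 2 lam w to zero
    gives the linear system (X^T X + lam I) w = X^T y.
    """
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

# Synthetic data (assumed): 15 samples, 10 features, small noise
rng = np.random.default_rng(0)
X = rng.standard_normal((15, 10))
w_true = rng.standard_normal(10)
y = X @ w_true + 0.1 * rng.standard_normal(15)

for lam in (0.0, 1.0, 10.0):
    w = ridge(X, y, lam)
    print(lam, np.linalg.norm(w))  # larger lam -> smaller ||w||
```

Solving the linear system with `np.linalg.solve` rather than forming the inverse explicitly is the usual, numerically safer choice. With $\lambda = 0$ it reduces to ordinary least squares.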