Prediction with Expert Advice Hedge algorithm:taking the "softmax"operation At each round t=l,2,· (I)compute p:∈△such that p,.x exp(-7Lt-1,i)fori∈[N] (2)the player submits p,suffers loss (pe),and observes loss eRN (3)update Lt =Lt-1+et Another greedy update:P+1 pt,iexp(-mt.)for i[N] where we set the uniform initialization as po.:=1/N,Vi [N]. The two updates are significantly different when learning rate is changing. Advanced Optimization(Fall 2023) Lecture 8.Adaptive Online Convex Optimization 11

Advanced Optimization (Fall 2023) Lecture 8. Adaptive Online Convex Optimization 11 Prediction with Expert Advice • Hedge algorithm: taking the “softmax” operation • Another greedy update: • The two updates are significantly different when learning rate is changing

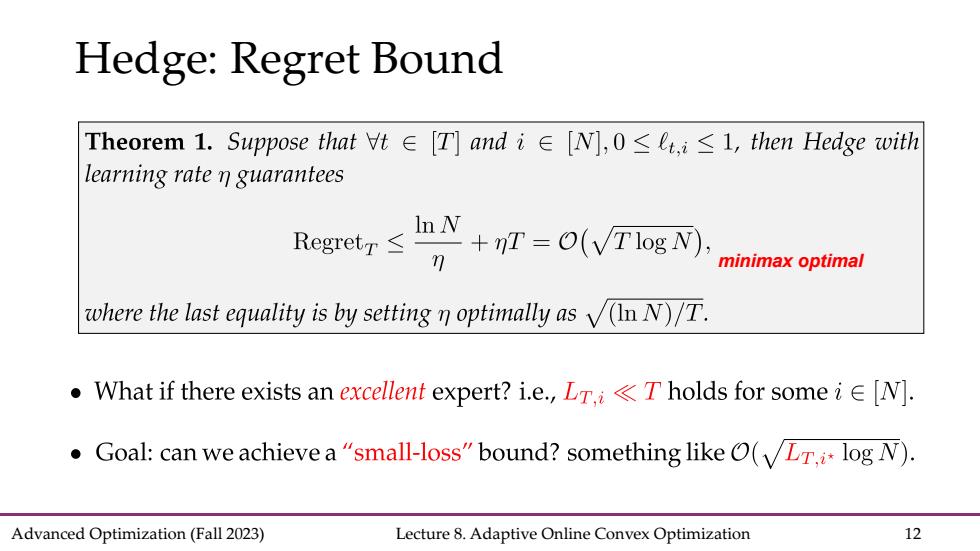

Hedge:Regret Bound Theorem1.Suppose that∀t∈[T]andi∈[N],0≤Lt,i≤l,then Hedge with learning rate n guarantees Rge≤+T=0ovTa网 minimax optimal where the last equality is by settingnoptimally asN)T. .What if there exists an excellent expert?i.e.,Tholds for someiN]. Goal:can we achieve a"small-loss"bound?something like (Lr.log N). Advanced Optimization(Fall 2023) Lecture 8.Adaptive Online Convex Optimization 12

Advanced Optimization (Fall 2023) Lecture 8. Adaptive Online Convex Optimization 12 Hedge: Regret Bound minimax optimal

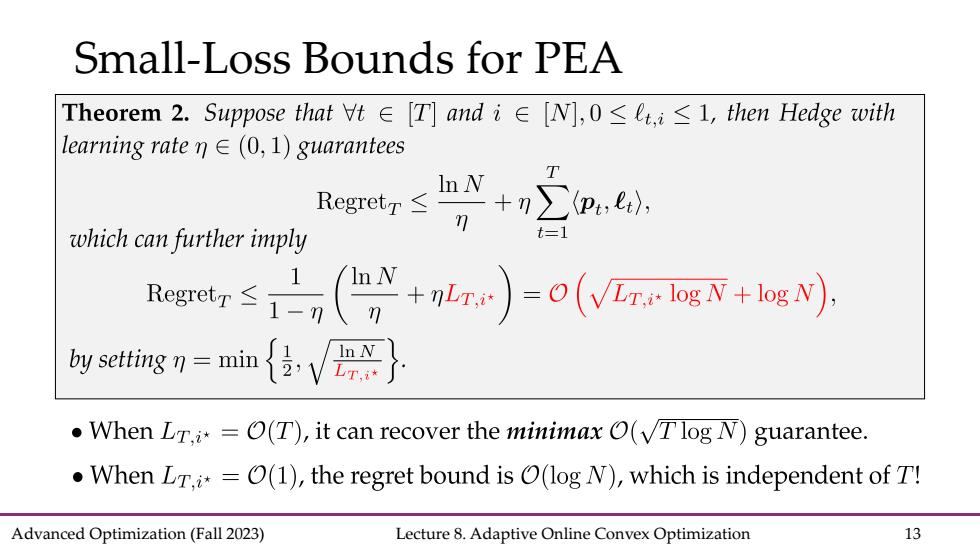

Small-Loss Bounds for PEA Theorem2.Suppose that∀t∈[T]andi∈[N],0≤lt,i≤l,then Hedge with learning rate nE(0,1)guarantees +n.6 T Regretr≤ which can further imply t=1 kg7≤亡,(+r)=o(rg+g .When LT.=O(T),it can recover the minimax (VTlog N)guarantee. When Lr.=(1),the regret bound is (log N),which is independent of T! Advanced Optimization(Fall 2023) Lecture 8.Adaptive Online Convex Optimization 13

Advanced Optimization (Fall 2023) Lecture 8. Adaptive Online Convex Optimization 13 Small-Loss Bounds for PEA

Small-Loss Bounds for PEA Theorem2.Suppose that∀t∈[T]andi∈[N],0≤Lt,i≤l,then Hedge with learning rate nE (0,1)guarantees ns+m6 T which can further imply t=1 Rger≤',(n+re)=o(vnsN-s by setting7=min{位,V} When LT.=O(T),it can recover the minimax (VTlog N)guarantee. When LT.=(1),the regret bound is O(log N),which is independent of T! Advanced Optimization(Fall 2023) Lecture 8.Adaptive Online Convex Optimization 14

Advanced Optimization (Fall 2023) Lecture 8. Adaptive Online Convex Optimization 14 Small-Loss Bounds for PEA

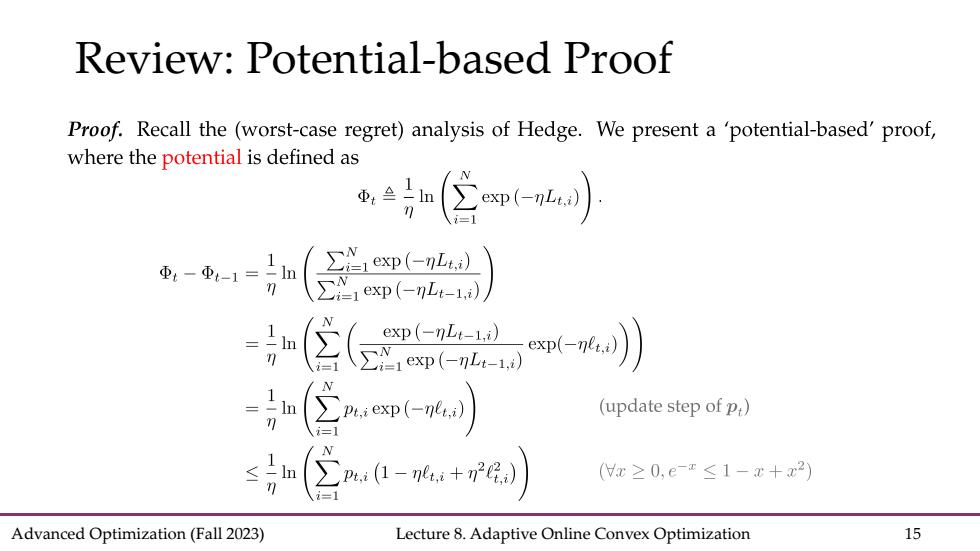

Review:Potential-based Proof Proof.Recall the (worst-case regret)analysis of Hedge.We present a 'potential-based'proof, where the potential is defined as ④-④-1=1n =!h exp(-nLt-1.i) (update step of p,) ∑a1-+n) (x≥0,e-x≤1-x+x2) Advanced Optimization(Fall 2023) Lecture 8.Adaptive Online Convex Optimization 15

Advanced Optimization (Fall 2023) Lecture 8. Adaptive Online Convex Optimization 15 Review: Potential-based Proof