Chebyshev inequality Theorem (Chebyshev Inequality).If X is a random variable with mean u and variance o2, then 02 P(IX-≥C)≤ c2 for all c 0 If a random variable has small variance,then the probability that it takes a value far from its mean is also small. Note:does not require X to be nonnegative

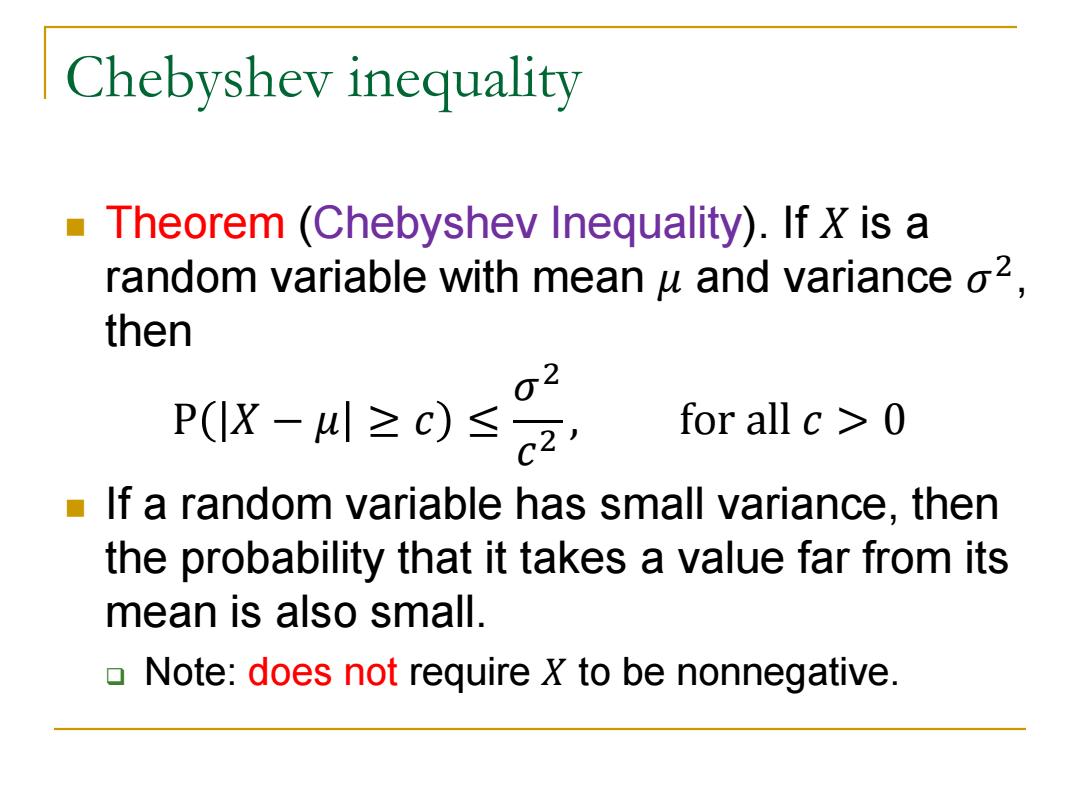

Chebyshev inequality Theorem (Chebyshev Inequality). If 𝑋 is a random variable with mean 𝜇 and variance 𝜎 2 , then P 𝑋 − 𝜇 ≥ 𝑐 ≤ 𝜎 2 𝑐 2 , for all 𝑐 > 0 If a random variable has small variance, then the probability that it takes a value far from its mean is also small. Note: does not require 𝑋 to be nonnegative

Chebyshev inequality (Proof) Let's prove the Chebyshev inequality P(IX-u≥c)≤σ2/c2 Consider the nonnegative random variable (X-u)2 and apply the Markov inequality: P(X-W)2≥c2)≤ E[(X-)2]σ2 c2 c2 o Finally note that the event (X-u)2 =c2 is identical to the event X-u=c.Thus P(IX-川≥c)=P(X-4)2≥c2)≤o2/c2

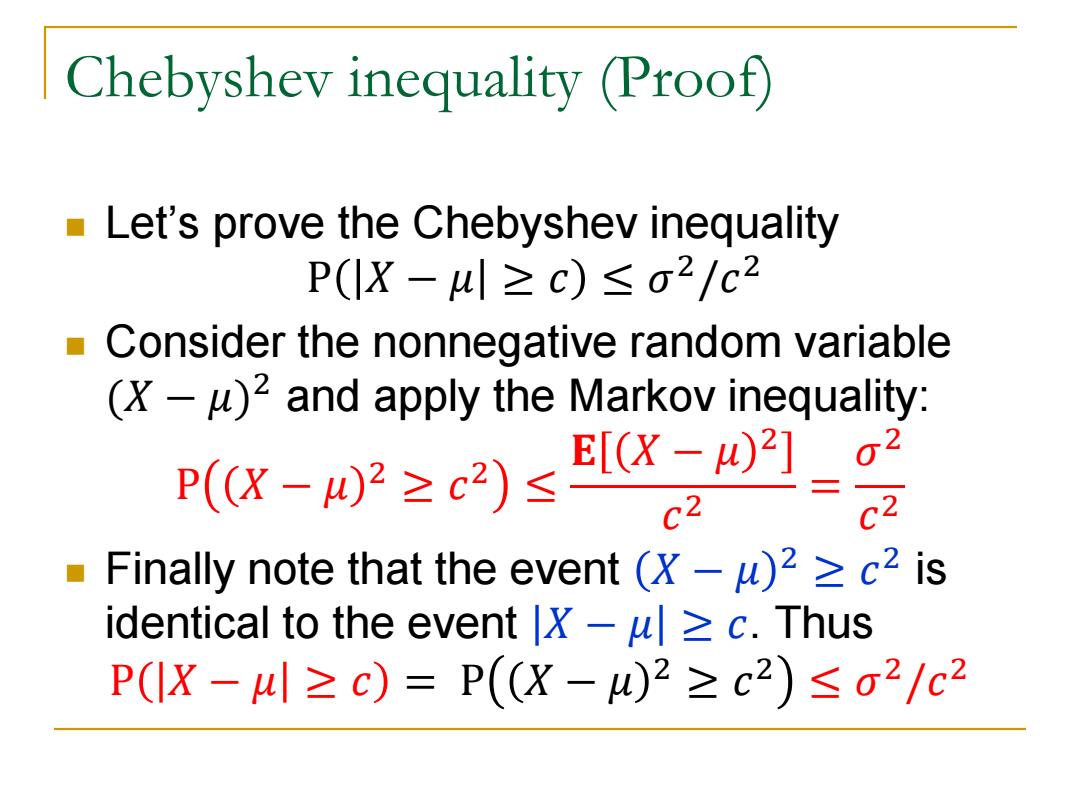

Chebyshev inequality (Proof) Let’s prove the Chebyshev inequality P 𝑋 − 𝜇 ≥ 𝑐 ≤ 𝜎 2 /𝑐 2 Consider the nonnegative random variable (𝑋 − 𝜇) 2 and apply the Markov inequality: P 𝑋 − 𝜇 2 ≥ 𝑐 2 ≤ 𝐄 𝑋 − 𝜇 2 𝑐 2 = 𝜎 2 𝑐 2 Finally note that the event 𝑋 − 𝜇 2 ≥ 𝑐 2 is identical to the event 𝑋 − 𝜇 ≥ 𝑐. Thus P 𝑋 − 𝜇 ≥ 𝑐 = P 𝑋 − 𝜇 2 ≥ 𝑐 2 ≤ 𝜎 2 /𝑐 2

An alternative form An alternative form is obtained by letting c ko,where k is positive,which yields σ2 1 P(X-4川≥ko)≤k2o2=K2 The probability that a random variable that is more than k standard deviations away from its mean is at most径

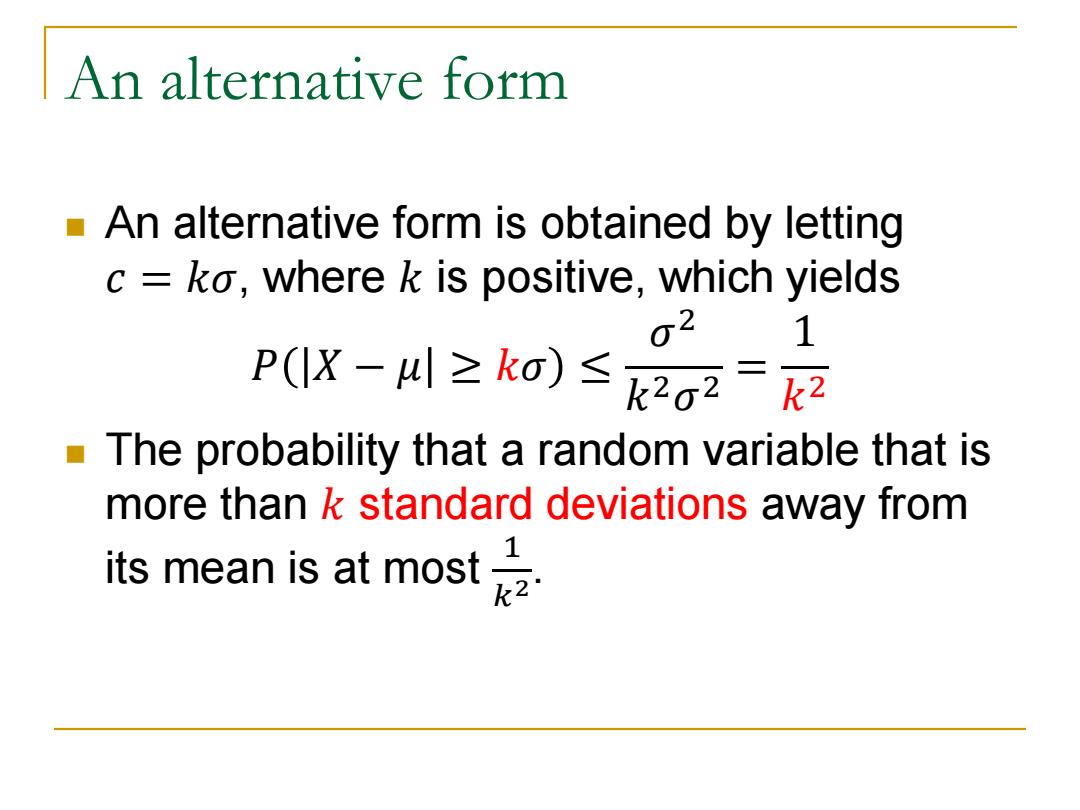

An alternative form An alternative form is obtained by letting 𝑐 = 𝑘𝜎, where 𝑘 is positive, which yields 𝑃 𝑋 − 𝜇 ≥ 𝑘𝜎 ≤ 𝜎 2 𝑘 2𝜎 2 = 1 𝑘 2 The probability that a random variable that is more than 𝑘 standard deviations away from its mean is at most 1 𝑘2

Comparisons The Chebyshev inequality tends to be more powerful than the Markov inequality o the bounds are more accurate,because it also uses information on the variance of X The mean and the variance of a random variable are only a rough summary of its properties we cannot expect the bounds to be close approximations of the exact probabilities

Comparisons The Chebyshev inequality tends to be more powerful than the Markov inequality the bounds are more accurate, because it also uses information on the variance of 𝑋 The mean and the variance of a random variable are only a rough summary of its properties we cannot expect the bounds to be close approximations of the exact probabilities

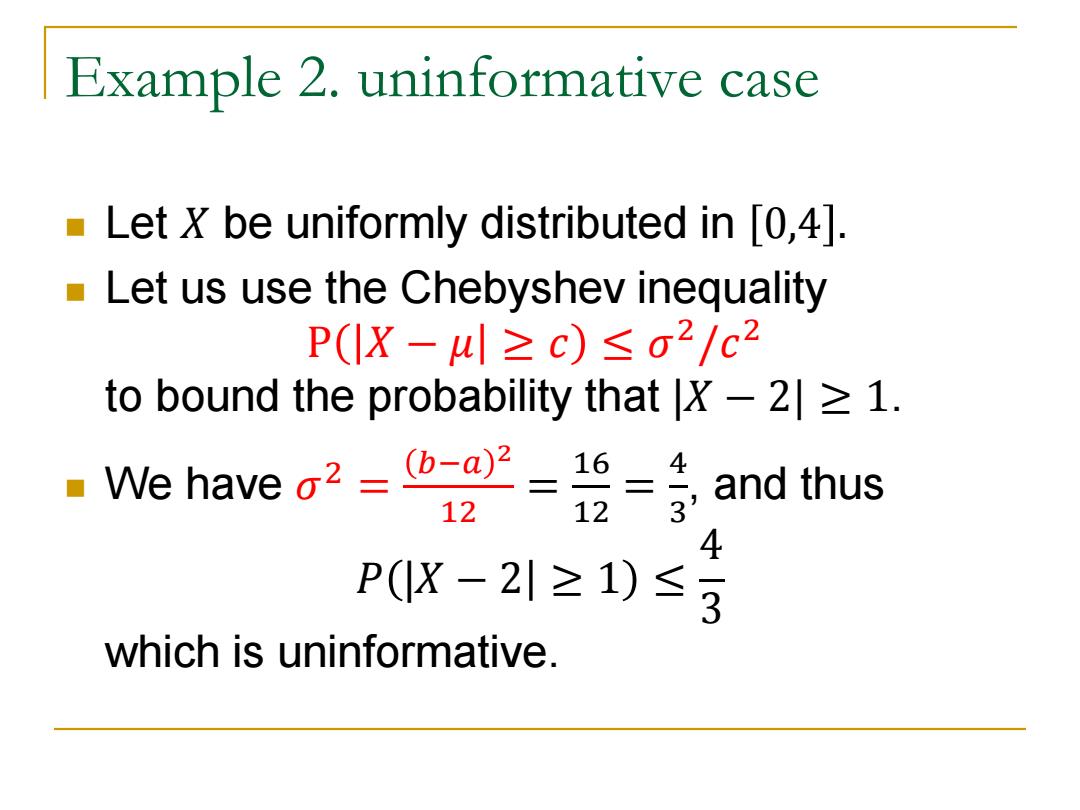

Example 2.uninformative case Let X be uniformly distributed in 0,4. Let us use the Chebyshev inequality P(IX-W≥c)≤σ2/c2 to bound the probability that X-2 1. We have o2 (b-a)2 16 = 4 and thus 12 12 4 P(X-2|≥1)≤ 3 which is uninformative

Example 2. uninformative case Let 𝑋 be uniformly distributed in 0,4 . Let us use the Chebyshev inequality P 𝑋 − 𝜇 ≥ 𝑐 ≤ 𝜎 2 /𝑐 2 to bound the probability that |𝑋 − 2| ≥ 1. We have 𝜎 2 = 𝑏−𝑎 2 12 = 16 12 = 4 3 , and thus 𝑃 |𝑋 − 2 ≥ 1 ≤ 4 3 which is uninformative