Works if …
- The base classifiers should be independent.
- The base classifiers should do better than a classifier that guesses at random (error < 0.5).
- In practice, it is hard to make base classifiers perfectly independent. Nevertheless, ensemble methods have been observed to improve results even when the base classifiers are slightly correlated.
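The two conditions can be checked numerically. The sketch below is not from the slides: it assumes binary classification, an odd number of base classifiers combined by majority vote, a shared error rate, and fully independent errors. The function name `majority_vote_error` is made up for illustration. Under those assumptions the ensemble errs only when more than half of the base classifiers err, which is a binomial tail probability.

```python
from math import comb


def majority_vote_error(n_classifiers: int, base_error: float) -> float:
    """Probability that a majority of the base classifiers are wrong.

    Assumptions (illustrative only): binary labels, odd n_classifiers so
    there are no ties, identical error rate, independent errors.
    """
    # Smallest number of wrong votes that forms a majority.
    k_min = n_classifiers // 2 + 1
    return sum(
        comb(n_classifiers, k)
        * base_error**k
        * (1 - base_error) ** (n_classifiers - k)
        for k in range(k_min, n_classifiers + 1)
    )


if __name__ == "__main__":
    # Below 0.5 the ensemble helps; at 0.5 it does not; above 0.5 it hurts.
    for eps in (0.35, 0.5, 0.6):
        print(f"base error {eps:.2f} -> ensemble error "
              f"{majority_vote_error(25, eps):.4f}")
```

With 25 voters, a base error below 0.5 gives an ensemble error well below the base error, a base error of exactly 0.5 gives no gain, and a base error above 0.5 makes the ensemble worse than a single classifier.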

Rationale
- One important note: when we generate multiple base learners, we want them to be reasonably accurate, but we do not require each one to be very accurate on its own. They are therefore not, and need not be, optimized separately for best accuracy.
- The base learners are chosen for their simplicity, not for their accuracy.

7.2. Combining Multiple Classifiers
- Average the results from different models.
- Why?
  - Better classification performance than individual classifiers
  - More resilience to noise
- Why not?
  - Time consuming
  - Overfitting
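A minimal sketch of averaging results from different models, assuming each model outputs one probability per class for a sample. The slides do not specify the averaging scheme; the unweighted mean, the helper names, and the example numbers here are all illustrative.

```python
def average_probabilities(model_outputs):
    """Unweighted mean of per-class probabilities across models."""
    n_models = len(model_outputs)
    n_classes = len(model_outputs[0])
    return [
        sum(output[c] for output in model_outputs) / n_models
        for c in range(n_classes)
    ]


def predict(model_outputs):
    """Index of the class with the highest averaged probability."""
    averaged = average_probabilities(model_outputs)
    return max(range(len(averaged)), key=averaged.__getitem__)


# Hypothetical outputs of three models for one sample and two classes.
outputs = [[0.6, 0.4], [0.3, 0.7], [0.2, 0.8]]

if __name__ == "__main__":
    print(average_probabilities(outputs), predict(outputs))
```

In this made-up example the first model alone would pick class 0, but the averaged probabilities favour class 1, so a single model's mistake is outvoted by the others.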

Why
- Better classification performance than individual classifiers
- More resilience to noise
- Besides avoiding the selection of the worst classifier under a particular hypothesis, fusing multiple classifiers can improve on the performance of the best individual classifier.
- This is possible if the individual classifiers make "different" errors.
- For linear combiners, Turner and Ghosh (1996) showed that averaging the outputs of individual classifiers with unbiased and uncorrelated errors can improve on the performance of the best individual classifier.
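The intuition behind the averaging result can be illustrated with a small simulation. This is not Turner and Ghosh's derivation. It is a sketch that assumes each classifier's output equals the true value plus zero-mean Gaussian noise drawn independently per classifier, so the errors are unbiased and uncorrelated. The function name `simulate` and its parameters are made up for illustration.

```python
import random
import statistics


def simulate(n_classifiers, noise_std=1.0, n_trials=5000, seed=0):
    """Return (mean squared error of one classifier, MSE of the average)."""
    rng = random.Random(seed)
    true_value = 0.0
    single_sq_errors = []
    averaged_sq_errors = []
    for _ in range(n_trials):
        # Independent, zero-mean noise for every classifier (assumption).
        outputs = [
            true_value + rng.gauss(0.0, noise_std)
            for _ in range(n_classifiers)
        ]
        single_sq_errors.append((outputs[0] - true_value) ** 2)
        averaged = statistics.fmean(outputs)
        averaged_sq_errors.append((averaged - true_value) ** 2)
    return (
        statistics.fmean(single_sq_errors),
        statistics.fmean(averaged_sq_errors),
    )


if __name__ == "__main__":
    single_mse, averaged_mse = simulate(n_classifiers=10)
    print(f"single: {single_mse:.3f}  averaged: {averaged_mse:.3f}")
```

Under these assumptions the squared error of the average shrinks roughly in proportion to the number of classifiers. If the noise terms were correlated, the reduction would be smaller.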

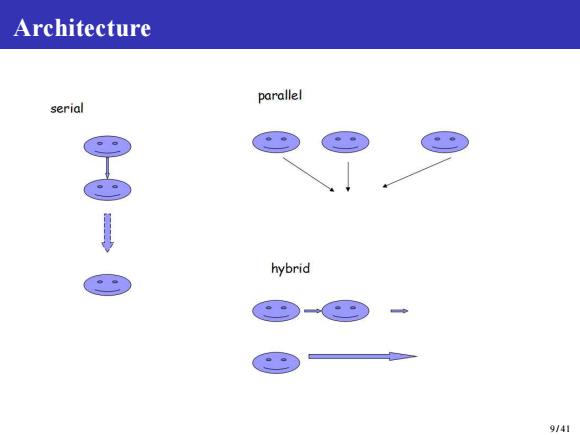

Architecture
- Parallel
- Serial
- Hybrid