Chapter 6 Architecture and Equilibria

6.2 Global Equilibria: Convergence and Stability

A neural network consists of synapses and neurons, which define three dynamical systems: the synaptic dynamical system $\dot{M}$, the neuronal dynamical system $\dot{X}$, and the joint synaptic-neuronal dynamical system $(\dot{X}, \dot{M})$.

Historically, neural engineers have studied the first or the second dynamical system in isolation. They usually study learning in feedforward neural networks and neural stability in nonadaptive feedback neural networks. RABAM and ART networks depend on joint equilibration of the synaptic and neuronal dynamical systems.

2004.11.10

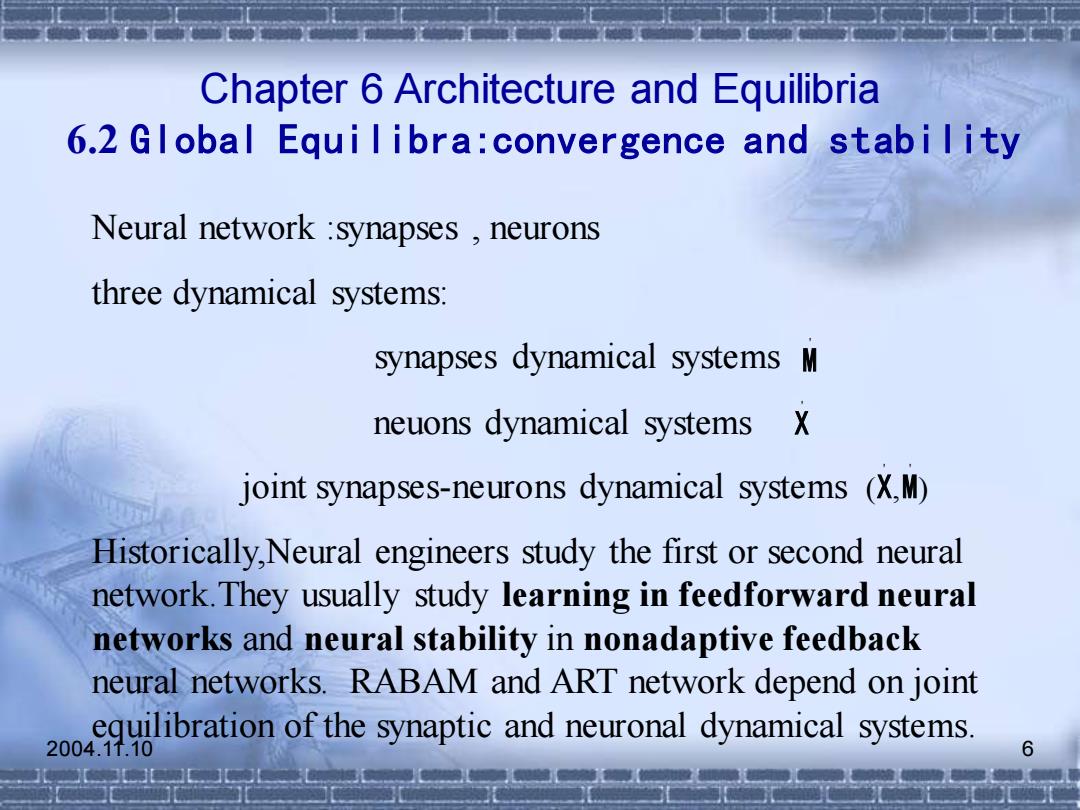

Equilibrium is steady state.

Convergence is synaptic equilibrium:

$$\dot{M} = 0 \tag{6-1}$$

Stability is neuronal equilibrium:

$$\dot{X} = 0 \tag{6-2}$$

More generally, neural signals reach steady state even though the activations still change. We denote steady state in the neuronal field $F_X$ by

$$\dot{F}_X = 0 \tag{6-3}$$

Neurons fluctuate faster than synapses fluctuate.

Stability-convergence dilemma: the synapses slowly encode the neural patterns being learned, but when the synapses change, this tends to undo the stable neuronal patterns.

6.3 Synaptic Convergence to Centroids: AVQ Algorithms

Competitive learning adaptively quantizes the input pattern space $R^n$. The probability density $p(x)$ characterizes the continuous distribution of patterns in $R^n$.

$$X \xrightarrow{\text{AVQ}} \hat{X} \quad \text{(centroid)}$$

We shall prove that competitive AVQ synaptic vectors $m_j$ converge to pattern-class centroids. They vibrate about the centroid in a Brownian motion.

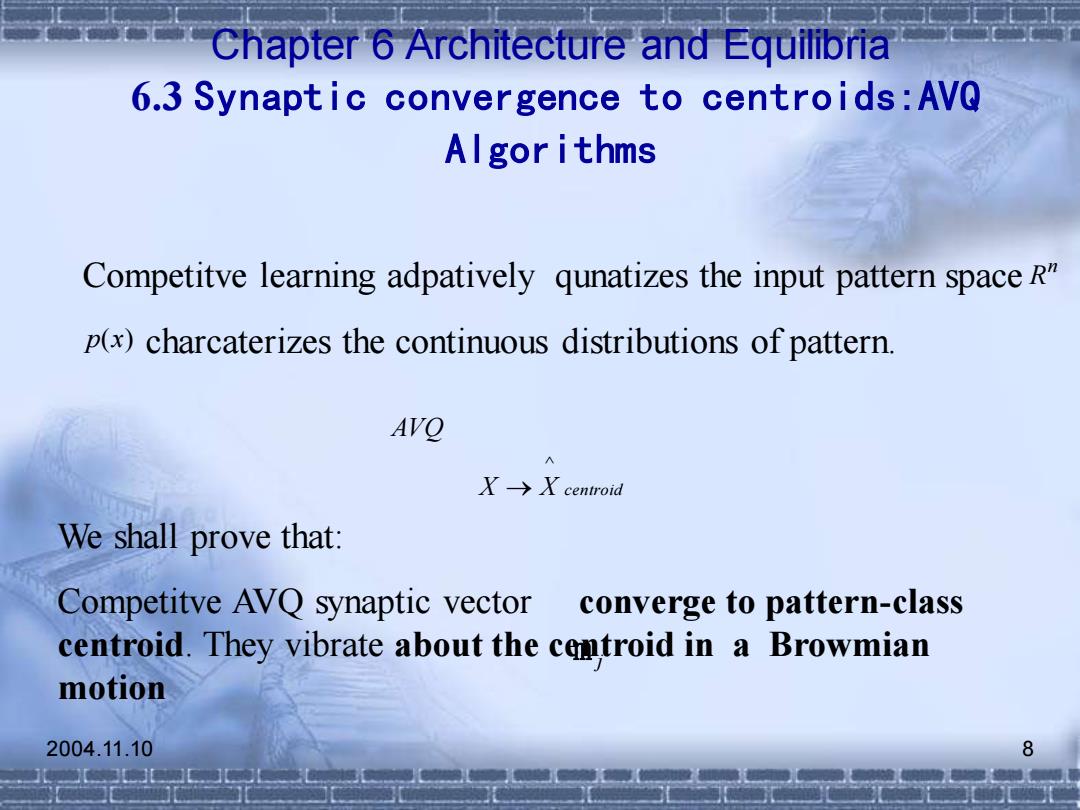

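Competitive AVQ Stochastic Differential Equations

The pattern space $R^n$ is partitioned into $K$ disjoint classes:

$$R^n = D_1 \cup D_2 \cup D_3 \cup \cdots \cup D_K \tag{6-6}$$

$$D_i \cap D_j = \emptyset \quad \text{if } i \neq j \tag{6-7}$$

The random indicator functions $I_{D_1}, I_{D_2}, I_{D_3}, \ldots, I_{D_K}$:

$$I_{D_j}(x) = \begin{cases} 1 & \text{if } x \in D_j \\ 0 & \text{if } x \notin D_j \end{cases} \tag{6-8}$$

Supervised learning algorithms depend explicitly on the indicator functions. Unsupervised learning algorithms don't require this pattern-class information.

Centroid of class $D_j$:

$$\hat{x}_j = \frac{\int_{D_j} x \, p(x) \, dx}{\int_{D_j} p(x) \, dx} \tag{6-9}$$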
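As a minimal numerical sketch of the indicator function (6-8) and the centroid (6-9), assume a one-dimensional pattern space with a standard normal density $p(x)$, split into two hypothetical classes $D_1 = (-\infty, 0)$ and $D_2 = [0, \infty)$. The function names `indicator` and `centroid`, the class boundaries, and the sample size are illustrative assumptions, not part of the slides. Averaging $x$ over the samples that fall in $D_j$ estimates the ratio of integrals in (6-9).

```python
import math
import random

def indicator(x, lower, upper):
    """Indicator I_D(x) of the interval D = [lower, upper): 1 inside, 0 outside."""
    return 1 if lower <= x < upper else 0

def centroid(samples, lower, upper):
    """Sample estimate of (6-9): sum of I_D(x) * x divided by sum of I_D(x)."""
    weights = [indicator(x, lower, upper) for x in samples]
    return sum(w * x for w, x in zip(weights, samples)) / sum(weights)

rng = random.Random(0)
samples = [rng.gauss(0.0, 1.0) for _ in range(100_000)]  # draws from p(x) = N(0, 1)

# Hypothetical two-class partition of R: D_1 = (-inf, 0), D_2 = [0, inf)
D1 = (-math.inf, 0.0)
D2 = (0.0, math.inf)
c1 = centroid(samples, *D1)
c2 = centroid(samples, *D2)
print(f"centroid of D_1 ~ {c1:.3f}, centroid of D_2 ~ {c2:.3f}")
```

For this assumed density the exact centroids are $\pm\sqrt{2/\pi} \approx \pm 0.798$, so the two estimates should land close to those values.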

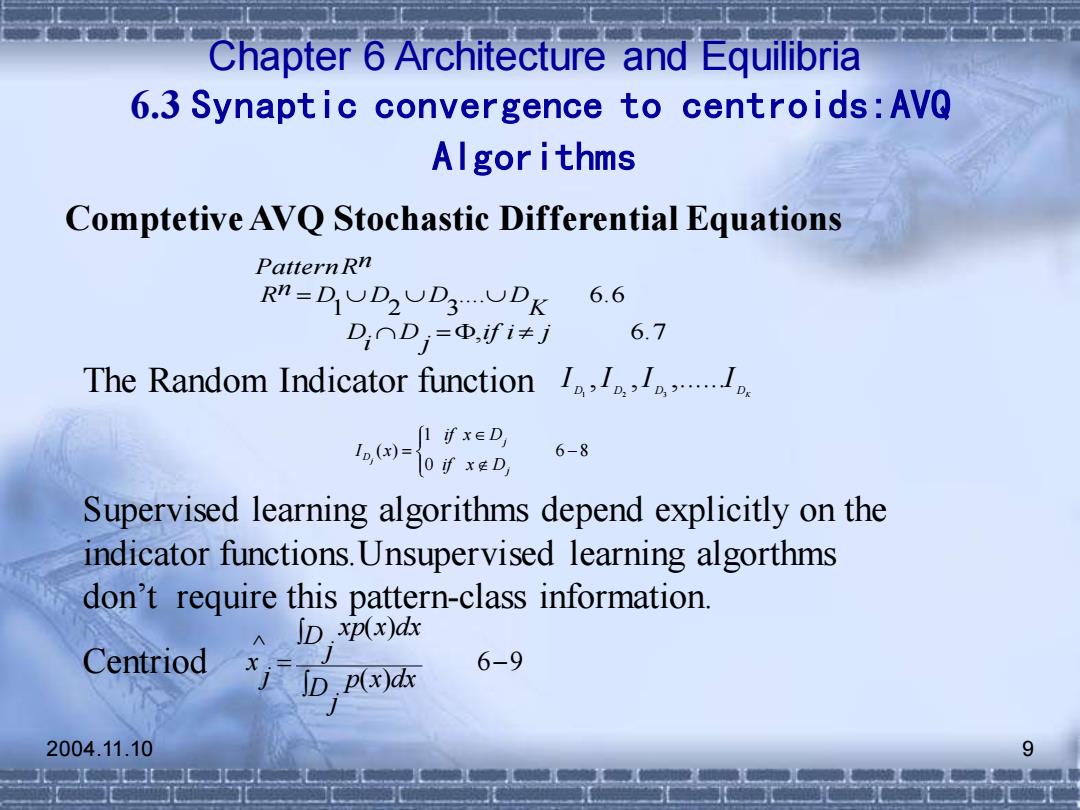

The stochastic unsupervised competitive learning law:

$$\dot{m}_j = S_j(y_j)\,[x - m_j] + n_j \tag{6-10}$$

We want to show that at equilibrium $m_j = \hat{x}_j$.

We assume

$$S_j \approx I_{D_j}(x) \tag{6-11}$$

The equilibrium and convergence depend on approximation (6-11), so (6-10) reduces to

$$\dot{m}_j = I_{D_j}(x)\,[x - m_j] + n_j \tag{6-12}$$
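The reduced law (6-12) can be explored numerically. If the noise $n_j$ has zero mean, averaging (6-12) at equilibrium leaves $\int_{D_j} (x - m_j)\, p(x)\, dx = 0$, which is solved by the centroid of $D_j$. The sketch below is one possible Euler-Maruyama discretization of (6-12), not the method of the slides. It assumes a one-dimensional pattern space, $p(x) = N(0, 1)$, two hypothetical classes $D_1 = (-\infty, 0)$ and $D_2 = [0, \infty)$, and Gaussian white noise for $n_j$. The step size `dt`, the noise strength `sigma`, and the function name `simulate` are illustrative choices.

```python
import math
import random

def simulate(steps=100_000, dt=0.01, sigma=0.05, seed=1):
    """Euler-Maruyama sketch of (6-12) for two scalar synaptic vectors.

    Assumed setup (not from the slides): p(x) = N(0, 1), D_1 = (-inf, 0),
    D_2 = [0, inf), and n_j is zero-mean Gaussian white noise of strength sigma.
    Returns the time average of each m_j over the second half of the run.
    """
    rng = random.Random(seed)
    m = [-2.0, 2.0]                 # arbitrary initial synaptic values
    tail_sum = [0.0, 0.0]
    tail_start = steps // 2
    for t in range(steps):
        x = rng.gauss(0.0, 1.0)     # sample pattern drawn from p(x)
        for j in range(2):
            in_class = (x < 0.0) if j == 0 else (x >= 0.0)  # I_{D_j}(x)
            drift = (x - m[j]) if in_class else 0.0
            noise = sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
            m[j] += dt * drift + noise
        if t >= tail_start:         # accumulate only after the transient
            tail_sum[0] += m[0]
            tail_sum[1] += m[1]
    n_tail = steps - tail_start
    return [s / n_tail for s in tail_sum]

m_avg = simulate()
print(m_avg)
```

Under these assumptions each $m_j$ keeps fluctuating from step to step, while its time average should settle near the class centroid $\pm\sqrt{2/\pi} \approx \pm 0.798$.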

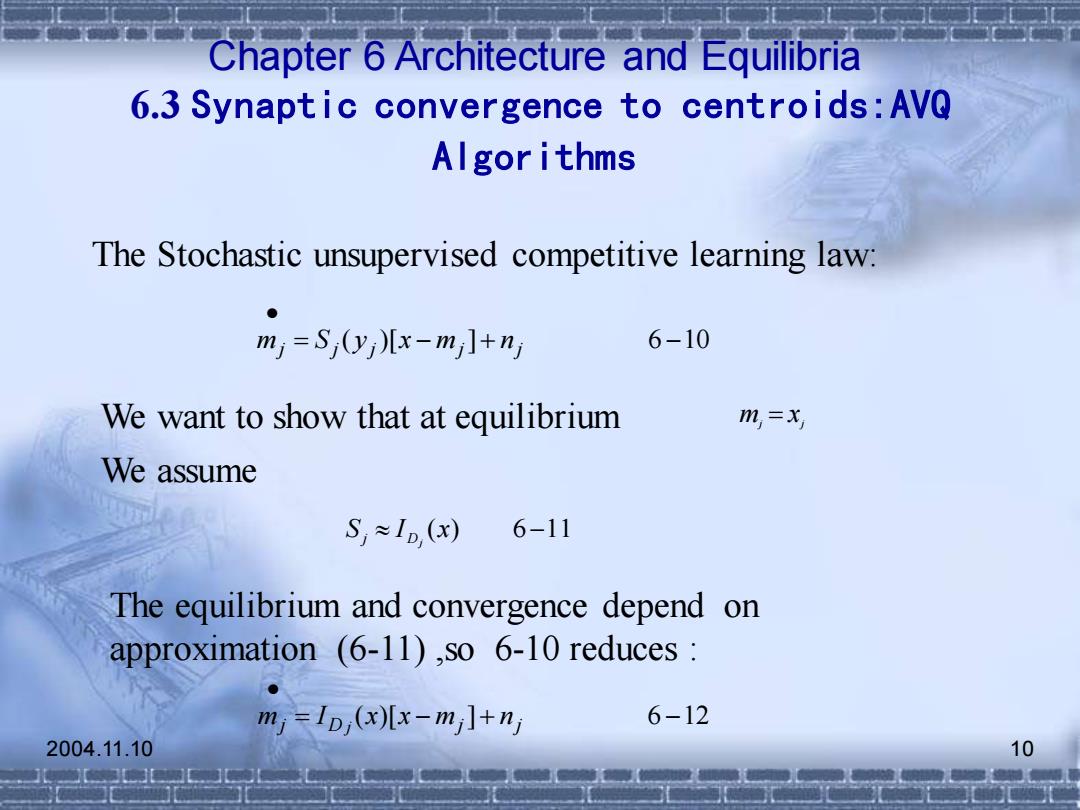
