Performance Measure Recall in the statistical learning:risk R(h)≌E(x,p[(h(x),y. In online learning:sequential risk T ({w})≌∑f(w)=∑(wx,): t-1 t=1 meaning:cumulative loss of models trained on growing data stream St={(x1,y1),...,(x,)} Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 11

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 11 Performance Measure • Recall in the statistical learning: risk • In online learning: sequential risk

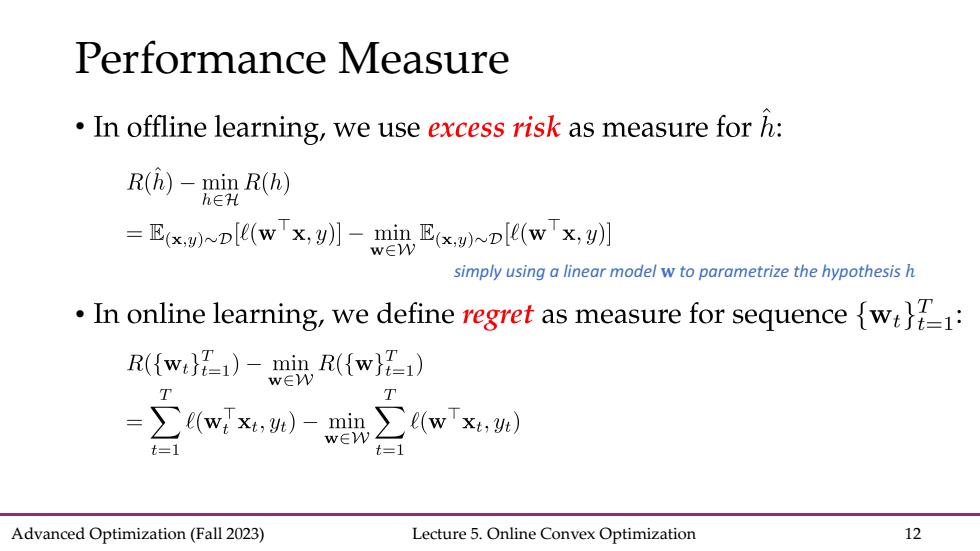

Performance Measure In offline learning,we use excess risk as measure for h: R(h)-min R(h) h∈TH =E(x)D[e(wx,y)]-min E(x.)D[e(w x,y)] simply using a linear model w to parametrize the hypothesis h In online learning,we define regret as measure for sequence {w1: R({w)-R(fw) 7 ∑wx,w)-∑ewTx,】 Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 12

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 12 Performance Measure • simply using a linear model � to parametrize the hypothesis ℎ •

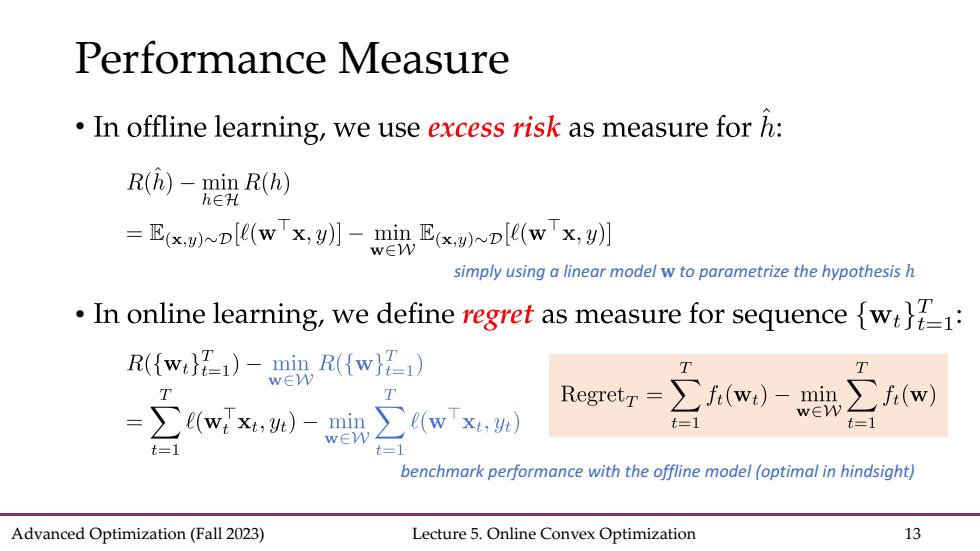

Performance Measure In offline learning,we use excess risk as measure for h: R(h)-min R(h) h∈TH =xDl(wTx,y】-典xD(wTx,w小 simply using a linear model w to parametrize the hypothesis h In online learning,we define regret as measure for sequence {w R(fwi}1)-min,R({w)1) w∈ Regretr- ∑(wx,)- (wx,) iw,)- ∑w) t=1 t= t= wEW =1 benchmark performance with the offline model (optimal in hindsight) Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 13

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 13 Performance Measure • simply using a linear model � to parametrize the hypothesis ℎ benchmark performance with the offline model (optimal in hindsight) •

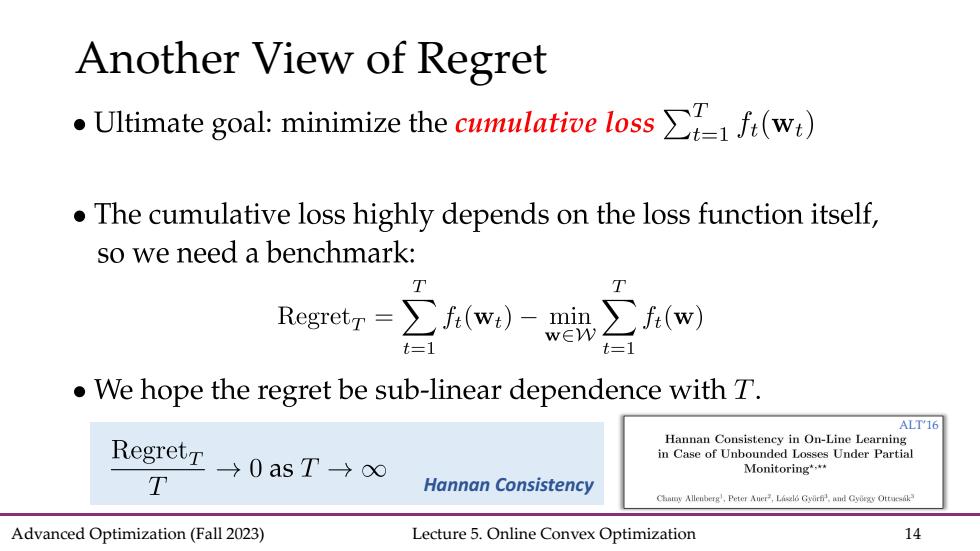

Another View of Regret .Ultimate goal:minimize the cumulative lossf(w) The cumulative loss highly depends on the loss function itself, so we need a benchmark: T T Regretr=∑fiw,)-∑fiw t=1 t=1 We hope the regret be sub-linear dependence with T. Hannan Consistency in On-Line Learning Regretr→0asT→o in Case of Unbounded Losses Under Partial Monitoring'. T Hannan Consistency Chamy Allenberg,Peter Aur Lsdo Gvr,and Gyogy Ottuesik Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 14

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 14 Another View of Regret ALT’16 Hannan Consistency

Compared with Statistical Offline Learning ·Memory efficient Do not need i.i.d.assumption the environment can be even adversarial typically,the regret analysis does not need concentration Strictly harder than statistical learning under non-i.i.d.assumption online to batch conversion Advanced Optimization(Fall 2023) Lecture 5.Online Convex Optimization 15

Advanced Optimization (Fall 2023) Lecture 5. Online Convex Optimization 15 Compared with Statistical Offline Learning • Memory efficient • Do not need i.i.d. assumption • the environment can be even adversarial • typically, the regret analysis does not need concentration • Strictly harder than statistical learning • under non-i.i.d. assumption • online to batch conversion