GPU Teaching Kit: Accelerated Computing

LECTURE 1 - INTRODUCTION TO CUDA C

CUDA C vs. Thrust vs. CUDA Libraries

University of Electronic Science and Technology of China

▪ Introduction to Heterogeneous Parallel Computing
▪ CUDA C vs. CUDA Libs vs. OpenACC
▪ Memory Allocation and Data Movement API Functions
▪ Data Parallelism and Threads


OBJECTIVES
▪ To learn the major differences between latency devices (CPU cores) and throughput devices (GPU cores)
▪ To understand why winning applications increasingly use both types of devices


CPU AND GPU ARE DESIGNED VERY DIFFERENTLY
▪ CPU: Latency Oriented Cores
  ▪ Diagram labels: Chip, Core, Local Cache, Registers, SIMD Unit, Control
▪ GPU: Throughput Oriented Cores
  ▪ Diagram labels: Chip, Compute Unit, Cache/Local Mem, Registers, SIMD Unit, Threading

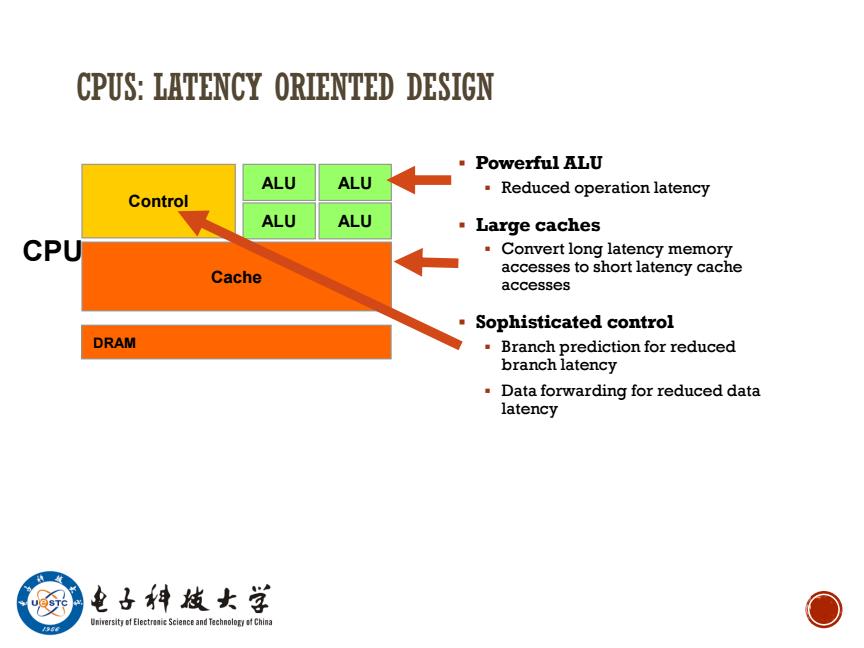


CPUS: LATENCY ORIENTED DESIGN
▪ Powerful ALU
  ▪ Reduced operation latency
▪ Large caches
  ▪ Convert long latency memory accesses to short latency cache accesses
▪ Sophisticated control
  ▪ Branch prediction for reduced branch latency
  ▪ Data forwarding for reduced data latency

[Figure: CPU block diagram with labels Control, ALU (x4), Cache, DRAM]
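
The "large caches" point can be illustrated with a small C sketch. This is not from the slides: the function names `sum_row_order` and `sum_column_order` are hypothetical, and the matrix is assumed to be stored in row-major order. Both functions compute the same sum, but the first walks memory contiguously, so most loads are likely to be served from cache, while the second jumps by a full row between loads and tends to go to DRAM more often once the matrix is larger than the cache.

```c
#include <stddef.h>

/* Sum a rows x cols matrix stored in row-major order, walking along rows.
   Consecutive iterations read adjacent addresses, so a cache line fetched
   for one element usually also serves the next few elements. */
long long sum_row_order(const int *m, size_t rows, size_t cols) {
    long long total = 0;
    for (size_t r = 0; r < rows; ++r) {
        for (size_t c = 0; c < cols; ++c) {
            total += m[r * cols + c];
        }
    }
    return total;
}

/* Same sum, walking down columns. Each iteration jumps cols elements
   ahead, so for large matrices successive reads land on different
   cache lines and are more likely to miss. */
long long sum_column_order(const int *m, size_t rows, size_t cols) {
    long long total = 0;
    for (size_t c = 0; c < cols; ++c) {
        for (size_t r = 0; r < rows; ++r) {
            total += m[r * cols + c];
        }
    }
    return total;
}
```

Calling both functions on the same large matrix and timing them is one way to observe the effect. The size of the gap depends on the cache sizes and the compiler, so no specific speedup is claimed here.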