LECTURE 7: JOINT CUDA-MPI PROGRAMMING

© David Kirk/NVIDIA and Wen-mei W. Hwu, 2007-2012 ECE408/CS483, University of Illinois, Urbana-Champaign

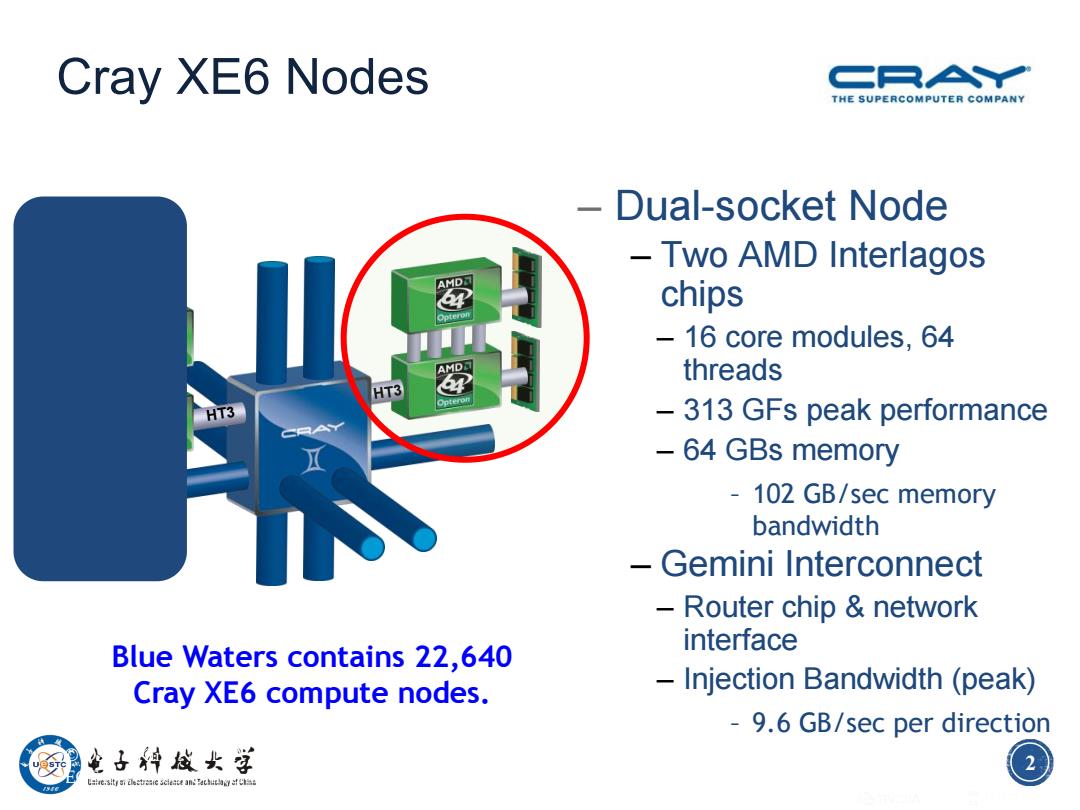

Cray XE6 Nodes

- Dual-socket node
  - Two AMD Interlagos chips
    - 16 core modules, 64 threads
    - 313 GFLOPS peak performance
    - 64 GB memory
    - 102 GB/s memory bandwidth
  - Gemini interconnect
    - Router chip and network interface
    - Injection bandwidth (peak): 9.6 GB/s per direction

Blue Waters contains 22,640 Cray XE6 compute nodes. (Diagram labels: HT3, HT3.)

Cray XK7 Nodes

- Dual-socket node
  - One AMD Interlagos chip
    - 8 core modules, 32 threads
    - 156.5 GFLOPS peak performance
    - 32 GB memory
    - 51 GB/s memory bandwidth
  - One NVIDIA Kepler chip
    - 1.3 TFLOPS peak performance
    - 6 GB GDDR5 memory
    - 250 GB/s memory bandwidth
  - Gemini interconnect
    - Same as XE6 nodes

Blue Waters contains 3,072 Cray XK7 compute nodes. (Diagram labels: PCIe Gen2, HT3, HT3.)
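The peak figures on the two node slides can be turned into a rough "bytes per flop" balance, which hints at how memory-bound a kernel is likely to be on each processor. The sketch below only divides the quoted peak bandwidth by the quoted peak throughput; the names `PEAKS`, `bytes_per_flop`, and `balance_table` are made up for illustration, and real sustained numbers will differ from these peaks.

```python
# Per-processor peak figures copied from the XE6 and XK7 slides.
# Each entry: (peak GFLOPS, peak memory bandwidth in GB/s).
PEAKS = {
    "XE6 node (2x Interlagos)": (313.0, 102.0),
    "XK7 CPU (1x Interlagos)": (156.5, 51.0),
    "XK7 GPU (1x Kepler)": (1300.0, 250.0),
}


def bytes_per_flop(gflops, gb_per_s):
    """Peak memory bytes available per peak floating-point operation."""
    return gb_per_s / gflops


def balance_table(peaks=PEAKS):
    """Map each processor name to its peak bytes-per-flop ratio."""
    return {name: bytes_per_flop(*spec) for name, spec in peaks.items()}


if __name__ == "__main__":
    for name, ratio in balance_table().items():
        print(f"{name:26s} {ratio:.3f} bytes/flop")
```

On these peak numbers the Kepler GPU has several times the memory bandwidth of the host CPU but fewer bytes per flop, so a kernel that is bandwidth-bound on the CPU will generally remain bandwidth-bound on the GPU.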

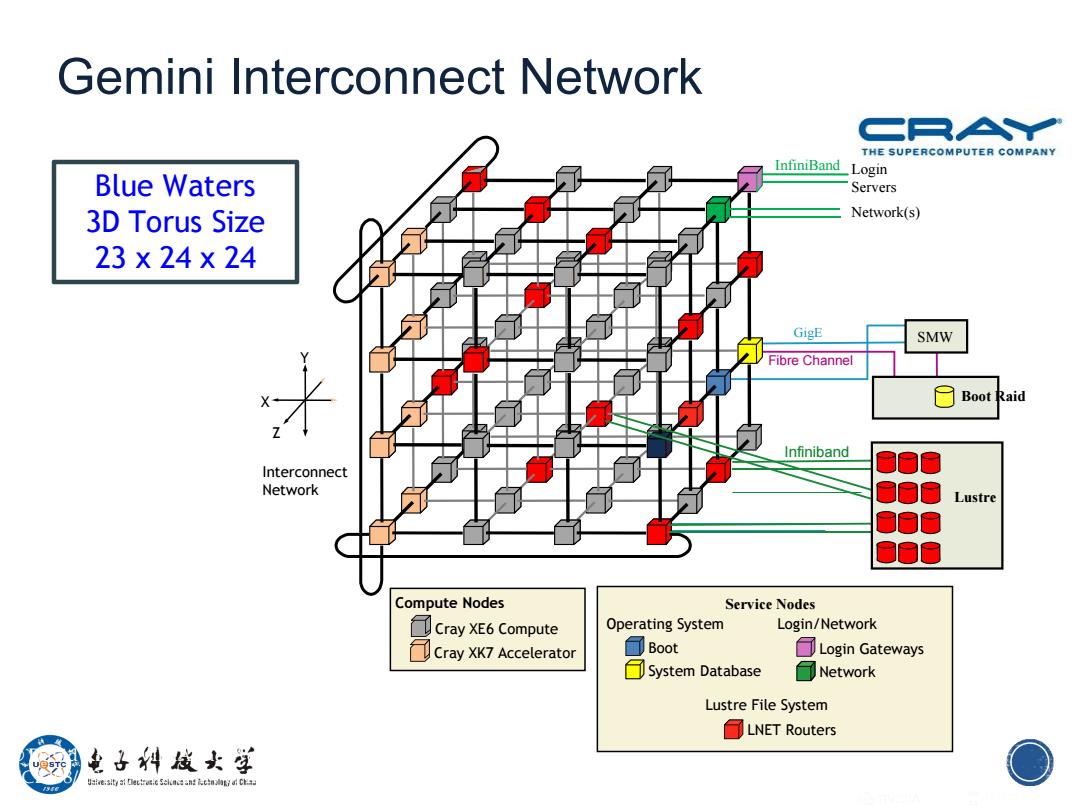

Gemini Interconnect Network

- Blue Waters 3D torus size: 23 x 24 x 24 (axes X, Y, Z)
- Compute nodes: Cray XE6 Compute, Cray XK7 Accelerator
- Service nodes: Operating System, Boot, System Database, Login Gateways, Network, Login/Network, Lustre File System, LNET Routers
- External connections shown in the diagram: InfiniBand (login servers, networks), GigE (SMW), Fibre Channel (boot RAID), InfiniBand (Lustre)
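To make the 3D torus concrete, here is a minimal sketch of wraparound neighbors and minimal hop counts on a 23 x 24 x 24 torus. It is a generic torus model, not Cray's actual node placement or routing: the `(x, y, z)` coordinate labeling and the function names are assumptions for illustration only.

```python
DIMS = (23, 24, 24)  # Blue Waters torus size (X, Y, Z) from the slide


def torus_positions(dims=DIMS):
    """Number of positions in the torus."""
    return dims[0] * dims[1] * dims[2]


def neighbors(coord, dims=DIMS):
    """Six nearest neighbors of a torus position, with wraparound."""
    result = []
    for axis in range(3):
        for step in (-1, 1):
            n = list(coord)
            n[axis] = (n[axis] + step) % dims[axis]
            result.append(tuple(n))
    return result


def hop_distance(a, b, dims=DIMS):
    """Minimal hop count between two positions, going either way per axis."""
    total = 0
    for x, y, size in zip(a, b, dims):
        d = abs(x - y)
        total += min(d, size - d)  # the wraparound link may be shorter
    return total


if __name__ == "__main__":
    print(torus_positions())                       # 23 * 24 * 24 positions
    print(neighbors((0, 0, 0)))                    # includes (22, 0, 0)
    print(hop_distance((0, 0, 0), (22, 23, 23)))   # opposite corner is close
```

Because of the wraparound links, the "far corner" `(22, 23, 23)` is only three hops from the origin. This is why MPI codes that exchange halos with periodic boundaries map naturally onto a torus.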

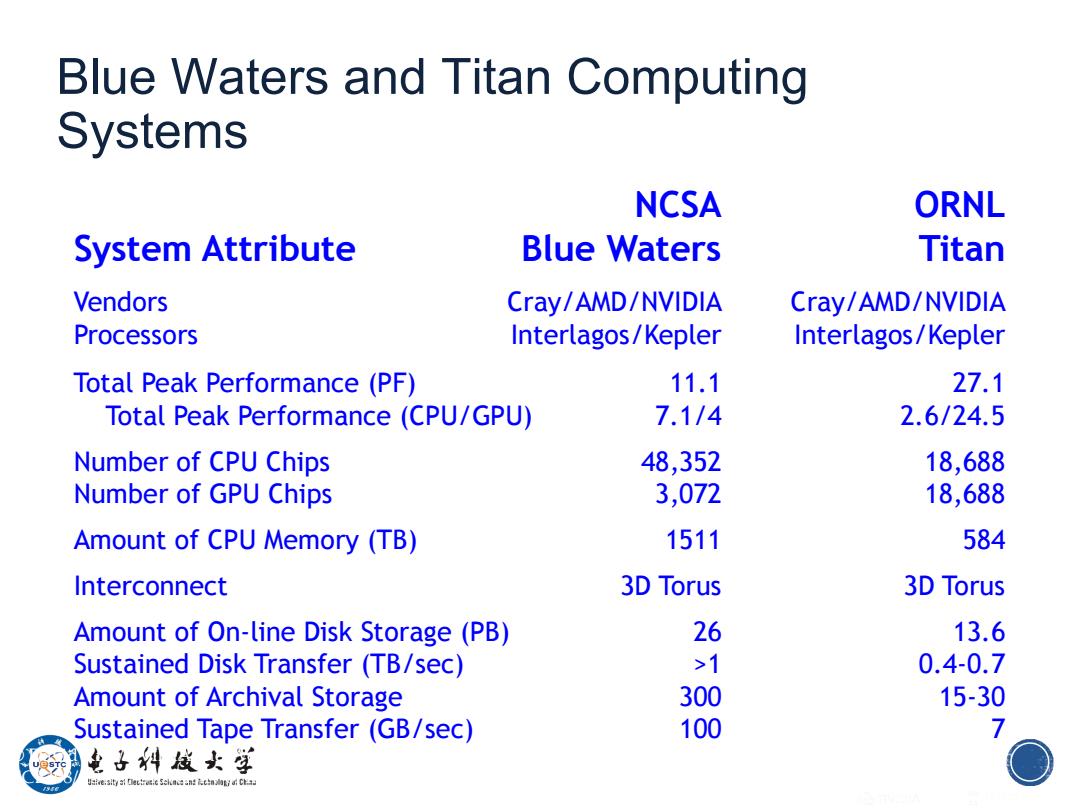

Blue Waters and Titan Computing Systems

| System Attribute | Blue Waters (NCSA) | Titan (ORNL) |
|---|---|---|
| Vendors | Cray/AMD/NVIDIA | Cray/AMD/NVIDIA |
| Processors | Interlagos/Kepler | Interlagos/Kepler |
| Total Peak Performance (PF) | 11.1 | 27.1 |
| Total Peak Performance, CPU/GPU (PF) | 7.1/4 | 2.6/24.5 |
| Number of CPU Chips | 48,352 | 18,688 |
| Number of GPU Chips | 3,072 | 18,688 |
| Amount of CPU Memory (TB) | 1511 | 584 |
| Interconnect | 3D Torus | 3D Torus |
| Amount of On-line Disk Storage (PB) | 26 | 13.6 |
| Sustained Disk Transfer (TB/sec) | >1 | 0.4-0.7 |
| Amount of Archival Storage | 300 | 15-30 |
| Sustained Tape Transfer (GB/sec) | 100 | 7 |
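Several Blue Waters entries in this table can be cross-checked against the per-node figures and node counts on the XE6 and XK7 slides. The sketch below is plain arithmetic on those slide numbers. The function names are illustrative, and dividing by 1024 to convert GB to TB is an assumption that happens to reproduce the table's memory figure.

```python
# Node counts and per-node figures copied from the XE6 and XK7 slides.
XE6_NODES, XK7_NODES = 22_640, 3_072
XE6_CPUS, XK7_CPUS = 2, 1                 # Interlagos chips per node
XE6_MEM_GB, XK7_MEM_GB = 64, 32           # CPU memory per node
XE6_GFLOPS, XK7_CPU_GFLOPS, XK7_GPU_GFLOPS = 313.0, 156.5, 1300.0


def cpu_chips():
    """Total Interlagos chips across both node types."""
    return XE6_NODES * XE6_CPUS + XK7_NODES * XK7_CPUS


def gpu_chips():
    """One Kepler chip per XK7 node."""
    return XK7_NODES


def cpu_memory_gb():
    """Total CPU (host) memory in GB; GPU GDDR5 is not counted."""
    return XE6_NODES * XE6_MEM_GB + XK7_NODES * XK7_MEM_GB


def peak_pflops():
    """Peak PFLOPS split into XE6 CPU, XK7 CPU, and XK7 GPU parts."""
    to_pf = 1e-6  # GFLOPS -> PFLOPS
    return {
        "xe6_cpu": XE6_NODES * XE6_GFLOPS * to_pf,
        "xk7_cpu": XK7_NODES * XK7_CPU_GFLOPS * to_pf,
        "xk7_gpu": XK7_NODES * XK7_GPU_GFLOPS * to_pf,
    }


if __name__ == "__main__":
    print("CPU chips:", cpu_chips())
    print("GPU chips:", gpu_chips())
    print("CPU memory (GB / 1024):", cpu_memory_gb() / 1024)
    for part, pf in peak_pflops().items():
        print(f"{part}: {pf:.2f} PF")
```

The chip counts and the memory total reproduce the table directly. For peak performance, the XE6 and GPU products round to the table's 7.1/4 split, while the XK7 host CPUs add roughly another 0.5 PF by this arithmetic, so the table's 11.1 PF total appears to count them differently.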