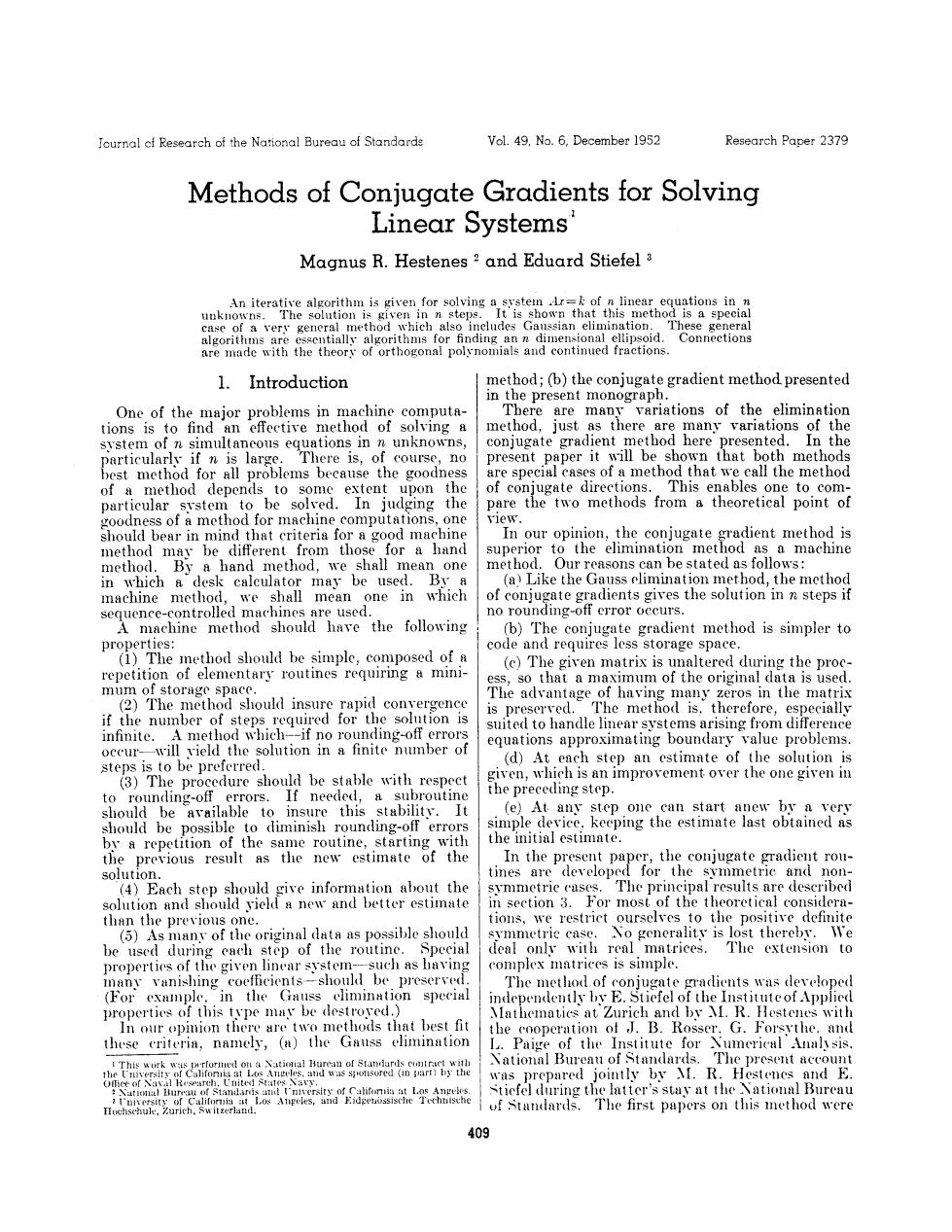

Journal of Research of the National Bureau of Standarde Vol.49.No.6.December 1952 Research Paper 2379 Methods of Conjugate Gradients for Solving Linear Systems Magnus R.Hestenes 2 and Eduard Stiefel 3 An iterative algorithm is given for solving a system r=k of n linear equations in n The solution is given in n steps. It is shown that this method is a special case of a very general method which also includes Gaussian elimination These general algorithms are essentially algorithms for finding an n dimensional ellipsoid. onnect1oǖs are made with the theory of orthogonal polynomials and continued fractions. 1.Introduction method;(b)the conjugate gradient method presented in the present monograph One of the major problems in machine computa- Tbere are many variations of the elimination tions is to find an effective method of solving a method,just as there are many variations of the svstem of n simultaneous equations in n unknowns, conjugate gradient method here presented. In the particularly if n is large. There is,of course,no present paper it will be shown that both methods best method for all problems because the goodness are special cases of a method that we call the method of a method depends to some extent upon the of conjugate directions.This enables one to com- particular system to be solved.In judging the pare the two methods from a theoretical point of goodness of a method for machine computations,one vlew. should bear in mind that criteria for a good machine In our opinion,the conjugate gradient method is method may be different from those for a hand superior to the elimination method as a machine method.By a hand method,we shall mean one method.Our reasons can be stated as follows: in which a desk calculator may be used.By a (a)Like the Gauss elimination method,the method machine method,we shall mean one in which of conjugate gradients gives the solution in n steps if sequence-controlled machines are used. no rounding-off error occurs. A machine method should have the following (b)The conjugate gradient method is simpler to properties: code and requires less storage space (1)The method should be simple,composed of a (c)The given matrix is unaltered during the proc- repetition of elementary routines requiring a mini- ess,so that a maximum of the original data is used mum of storage space. (2)The method should insure rapid convergence The advantage of having many zeros in the matrix is preserved. The method is.therefore,especially if the number of steps required for the solution is suited to handle linear systems arising from differeuce infinite.A method which-if no rounding-off errors equations approximating boundary value problems. occur-will vield the solution in a finite number of steps is to be preferred. (d)At each step an estimate of the solution is (3)The procedure should be stable with respect given,which is an improvement over the one given in to rounding-off errors.If needed,a subroutine the preceding step. should be available to insure this stability.It (e)At any step one can start anew by a very should be possible to diminish rounding-off errors simple device,keeping the estimate last obtained as by a repetition of the same routine,starting with the initial estimate. the previous result as the new estimate of the In the present paper,the conjugate gradient rou- solution. tines are developed for the symmetric and non- (4)Each step should give information about the symmetrie cases.The principal results are described solution and should yield a new and better estimate in section 3.For most of the theoretical considera- than the previous one. tions,we restrict ourselves to the positive definite (5)As many of the original data as possible should symmetric case.No generality is lost therebv.We be used during each step of the routine.Special deal only with real matrices.The extension to properties of the given linear system-such as having complex matrices is simple. many vanishing coefficients-should be preserved. The method of conjugate gradients was developed (For example.in the Gauss elimination special independently by E.Stiefel of the Institute of Applied properties of this type may be destroyed.) Mathematics at Zurich and by M.R.Hestenes with In our opinion there are two methods that best fit the cooperation of J.B.Rosser.G.Forsythe.and these criteria,namely,(a)the Gauss elimination L.Paige of the Institute for Xumerical Analy sis orfornud ont a xatioul Hurean of statrlards cuIract wilh Xational Bureau of Standards.The present account rsity of Califoruia at Los Angeles.and was sponsored (in part)by the was prepared jointly by A.R.Hestenes and E. National Bureau of Stiefel during the latter's stay at the National Bureau of Standards. The first papers on this inethod were 409

given by E.Stiefel and by X.R.Hestenes.5 Reports nll了,1n.11s1il10i4t4tir,If.1issy1i1- on this method were given by E.Stiefel and J.B. metrie,then two veetors and y are said to be con Rosser at a Symposium s on August 23-25,1951. jugate or .-orthogonal if the relation (r..Iy Recently,C.Lanczos developed a closely related (.=0 holds.This is an extension of the ortho- routine based on his carlier paper on eigenvalue gonality relation (.y)=0. problem.Examples and numerical tests of the By an eigenralue of a matrix .I is meant a number method have been by R.Hayes,U'.Hochstrasser, 入such that dy=入has a solution y≠,and y is and AI.Stein called a corresponding eigenrector. Inless otherwise expressly stated the matrix .1. 2.Notations and Terminology with which we are coneerned,will be assumed fo be symmetric and positire definite.Clearly no loss of Throughout the following pages we shall be con- generality is caused thereby from a theoretical point cerned with the problem of solving a system of linear of view,because the system dr=k is equivalent to equations the system Br=l,where B=4*.4,l=.1*k.From a 011十01:十·,,十01mtn= numerical point of view,the two systems are differ- ent,because of rounding-off errors that occur in a1X:十02dg十··.十Q2mt=k2 joining the product 4*.Our applications to the (2:1) nonsymmetric case do not involve the computation 。4 of1*.1 In the sequel we shall not have oceasion to refer to aud1十uw2十·,·+0na这n=ka a particular coordinate of a vector.Accordinglv we may use subseripts to distinguish vectors instead These equations will be written in the vector form of components.Thus zo will denote the vector 1x=k. Here d is the matrix of coefficients (a:), Io01,· .ro)and r;the vector (u,....).In case r and k are the vectors (n,...,n)and (t,...). a symbol is to be interpreted as a component,we shall It is assumed that d is nonsingular.Its ingerse A-1 call attention to this fact unless the interpretation is therefore exists.We denote the transpose of by evident from the context. 1* The solution of the system Ar=k will be denoted by Given two vectors r=(n,...,r)and y= h;that is,Ah=k.If x is an estimate of h,the differ- (yi,..),their sum +y is the vector ence r=k-r will be called the residual of x as an (+yi,.:.,In+yn),and az is the vector (ar,...,azn), estimate of h.The quantityr will be called the where a is a scalar.The sum squared residual.The vector h-z will be called the error rector of r,as an estimate of h. (x,)=11十12y十.·.十xmym 3. Method of Conjugate Gradients((cg- is their scalar product.The length of r will be denoted Method) b x=(+...+=(x,x) The present section will be devoted to a description The Cauchy-Schwarz inequality states that for all of a method of solving a system of linear equations Az=k.This method will be called the conjugate 工, (c,)2≤(x,x)(y,y)or(c,y)i≤xy.(2:2) gradient method or,more briefly,the cg-method,for reasons which will unfold from the theory developed The matrix A and its transpose d*satisfy the in later sections.For the moment,we shall limit ourselves to collecting in one place the basic formulas relation upon which the method is based and to deseribing (任,A0=之ax=(A*z,20. briefly how these formulas are used. =1 The cg-method is an iterative method which terminates in at most n steps if no rounding-off If a=a,that is,if A=A*,then d is said to be errors are encountered.Starting with an initia symmetric.A matrix A is said to be positive definite estimate ro of the solution h,one determines succes- in case(x,Ax)>0 whenever z≠0.If(x,A)≥0for sively new estimates ro,rr2,...of h,the estimate x being closer to h than z+.At each step the residual r:=k-Ar is computed. Normally this E.Stiefel,Tehereinige Methoden der Relaxationsrechnung,Z.angew.Xath, vector can be used as a measure of the "goodness" hods of relara of the estimate z.However,this measure is not a reliable one because,as will be seen in section 18 Proceedings of the symposium (see footnote s). J.B.Rosser,Rapidly converging iterative methods for solving linear equa. it is possible to construet cases in which the squared tions.to appear in the heTPo residual ri increases at each step (except for the last)while the length of the error vector h-z the campus of the University of California at Los Angeles (August 23-25.1951). Lancsos.Solution of systems of linear equations by minimised iterations, decreases monotonically.If no rounding-off error N L Report 52-13, is encountered,one will reach an estimate rm(msn) ymposium'on Machinery,Cambridge,\iuss 191,pages 164-200. -Seale Digitai Calculating at which rm=0.This estimate is the desired solu- tion h.Normally,m=n.However,since rounding- 410

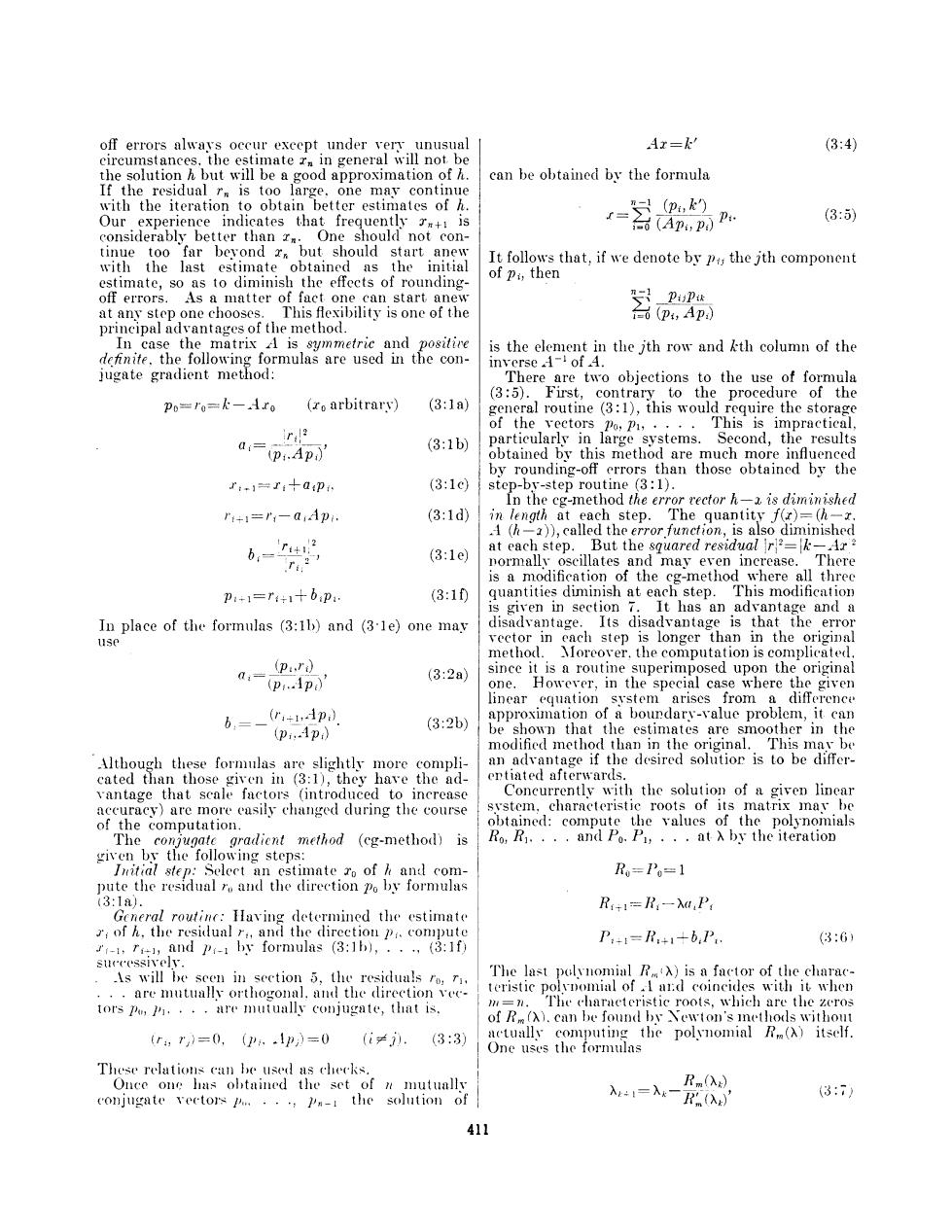

off errors always occur except under very unusual Ar=k (3:4) circumstances.the estimate r in general will not be the solution h but will be a good approximation of h can be obtained by the formula If the residual r is too large,one may continue with the iteration to obtain better estimates of h (pik) Our experience indicates that frequently+is 1- (3:5) considerably better than r.One should not con- 白(An,pD. tinue too far bevond r but should start anew with the last estimate obtained as the initial It follows that,if we denote by pi the jth component estimate,so as to diminish the effects of rounding- of pi,then off errors.As a matter of fact one can start anew 罗子卫D at any step one chooses.This fexibility is one of the =0(p,Ap:) principal advantages of the method. In case the matrix A is symmetric and positire is the element in the jth row and kth column of the definite.the following formulas are used in the con- inverse of A. Jugate gradient method: There are two objections to the use of formula (3:5).First,contrary to the procedure of the Po=ro=k-Hro (o arbitrarv) (3:1a) general routine (3:1),this would require the storage of the veetors po,p,....This is impractical. irde apAp (3:1b) particularly in large systems.Second,the results obtained by this method are much more influenced by rounding-off crrors than those obtained by the :+1=I:十ap (3:1c step-by-step routine (3:1). In the cg-method the error rector h-z is diminished "+1="一01p (3:1d) in length at each step.The quantity f(r)=(h-r A (h-)),called the error function,is also diminished b,=42 at each step.But the squared residualr2=k-Ar 2 .r2 (3:1e normally oscillates and may even increase.There is a modification of the cg-method where all threc p1+1=T1十bp (3:1fD quantities diminish at each step.This modification is given in section 7.It has an advantage and a In place of the formulas (3:1b)and (3le)one may disadvantage.Its disadvantage is that the error use vector in each step is longer than in the original method. Xoreover.the computation is complicated (p:,r) -pp (3:2a since it is a routine superimposed upon the original one. However,in the special case where the given linear equation system arises from a difference 6,=- (r+,1p approximation of a boundarv-value problem,it can (3:2b) (p.1p》 be shown that the estimates are smoother in the modified method than in the original.This mav be Although these formulas are slightly more compli- an advantage if the desired solutior is to be differ- cated than those given in (3:1),they have the ad- entiated afterwards. vantage that scale factors (introduced to increase Concurrently with the solution of a given linear accuracy)are more casily changed during the course svstem,characteristic roots of its matrix mnav be of the computation. obtained:compute the values of the polynomials The conjugate gradient method (cg-method)is Ro,R1....and Po.Pi,...at A by the iteration given by the following steps: Initial step:Select an estimate ro of h and com- R=1P0=1 pute the residual ro and the direction po by formulas (3:1a). R+1=R-λa,P General routinc:Having determined the estimate r;of h,the residual r.,and the direction pi compute P:+1=R:+1+6.Pr (8:6) 1,r1,and pi-by formulas (3:1b),...(3:1f) successively. As will be seen in section 5,the residuals ro,r. The last polvnomial R)is a factor of the charac- ..are mutually orthogonal,and the direction vee- teristic polynomial of.I ard coincides with it when )=7. tors pu,h.···re mutually conjugate,that is, The characteristic roots,which are the zeros of R().can be found by Newton's methods without r,r》=0,(P,.1p)=0(i≠.(3:3) actually computing the polynomial R()itself. One uses the formulas These relations can be used as checks. Once one las obtained the set of n mutuallv Rm(入) (3:7) conjugate vectors p...,p-i the solution of 入1=入k一 Rn入) 411

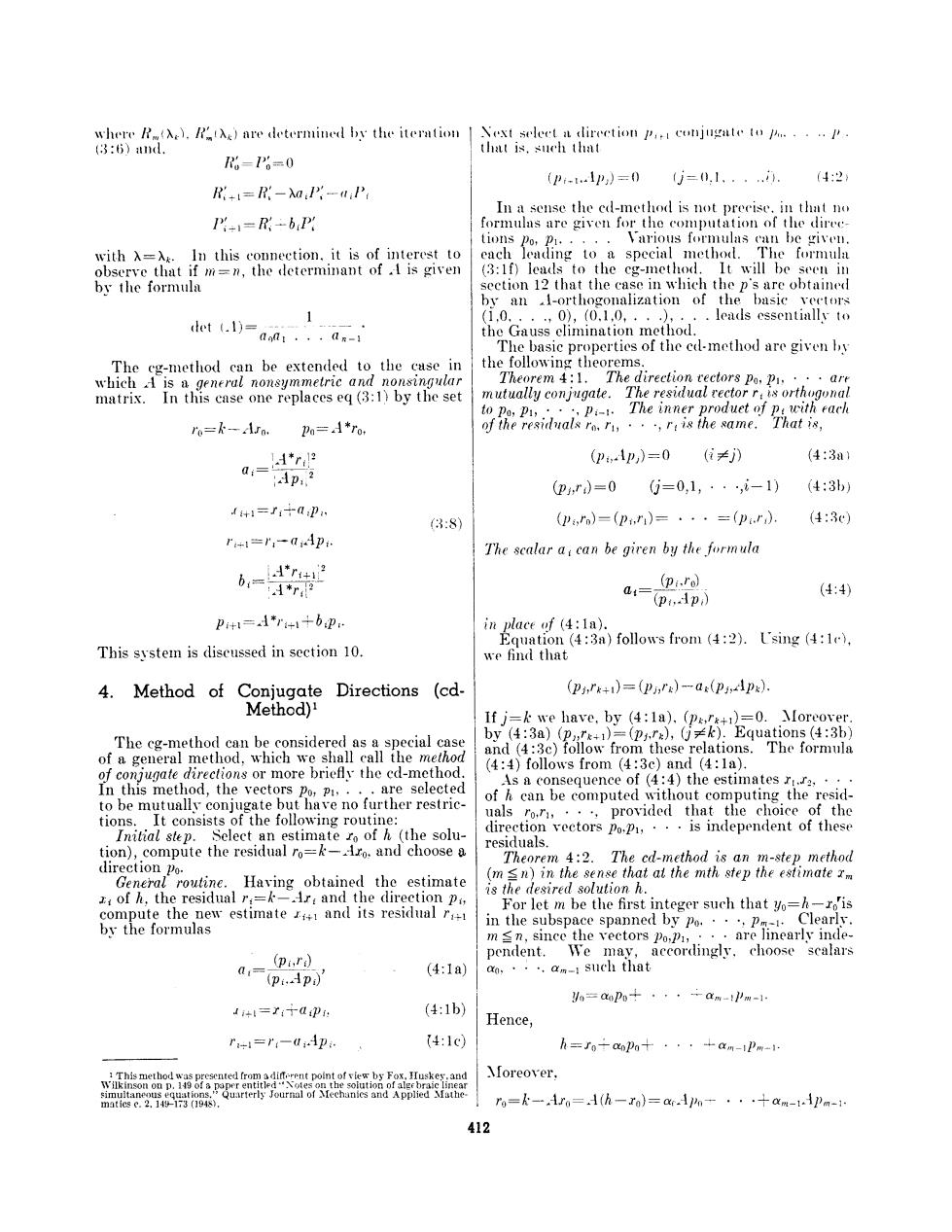

where R).B)are determined by the iteration xt seleet a direction p,conjugate top.·…P (3:5)11. that is.such that =1P6=0 (P-t1p)=0 j=0.1、... (4:2 R:+1=R-λa:-WP In a sense the cd-method is not precise.in that no P'-1=R,-bP formulas are given for the computation of the diree- tions2o,p.··.·Various formulas can be giveu. with =A.In this connection.it is of interest to each leading to a special method.The formula observe that if m=n,the determinant of is given (3:1f)leads to the cg-method.It will be seen in by the formula section 12 that the case in which the p's are obtained by an .1-orthogonalization of the basic vectors 1 (1,0,..,0),(0,1,0,...),.·.leads essentially to let(.1)= a0:.,·aR-1 the Gauss elimination method. The basic properties of the cd-method are given by The cg-method can be extended to the case in the following theorems. which A is a general nonsymmetric and nonsingular Theorem 4:1.The direction tectors po,P,. matrix.In this case one replaces eq (3:1)by the set mutually conjugate.The residual rector r:is orthogonal to po,pi,..pi.The inner produet of pwith each ro=k-ro. 卫n=1*ro, of the residuals ro.r,..,r is the same.That is, 1*r2 (p,1p)=0 (≠》 (4:3a1 d=Ap:? ,r)=0 (j=0,1,··,i-1) (4:3b) +1=:十0p 3:8】 (2,rn)=(p,r1)=···=(pr). (4:3e) r+1=r,-0p The sealar a:can be giren by the formula 6=1r+ Ar a:- (pi.rol (4:4) (p,1p,】 卫+1=1*r+1+bP in place of (4:1a). Equation (4:3a)follows from (4:2).Using (4:1e), This svstem is discussed in section 10. we find that 4.Method of Conjugate Directions (cd- (pn广k+i)=(P,rx)-a(pdpx). Method)' If j=k we have.by (4:1a).(perx+1)=0.Moreover. The cg-method can be considered as a special case by (4:3a)(p,r+1)=(ps.r:),(jk).Equations (4:3b) of a general method,which we shall call the method and (4:3c)follow from these relations.The formula of conjugate directions or more briefly the cd-method. (4:4)follows from (4:3c)and (4:1a). In this method,the vectors po,p,...are selected As a consequence of (4:4)the estimates ri.re,... of h can be computed without computing the resid- to be mutually conjugate but have no further restric- tions.It consists of the following routine: uals ro,r,··,provided that the choice of the Initial step.Select an estimate ro of h (the solu- direction vectors po,p,···is independent of these tion),compute the residual ro=k-ro:and choose a residuals. Theorem 4:2.The cd-method is an m-step method direction Po. General routine.Having obtained the estimate (m sn)in the sense that at the mth step the estimate rm of h,the residual r:=k-Ar and the direction p is the desired solution h. compute the new estimate ri and its residual r. For let m be the first integer such that yo=k-rois by the formulas in the subspace spanned by po...p.Clearly. m sn,since the vectors Po,pi, ··are lnearly1n(de- pendent.We may,accordingly,choose scalars (pird 0:= (pip) (4:1a) ca,··.am-1 such that /n=C0P0于···-mm-'w-1 1i+l=了:T0P (4:1b) Hence, r+1=一U+卫 (4:1c) h=了0÷anP+···+am-iPm- \oreover, simultaneous equat ions m3 tics e.2.14-173(194s, r%=k一.1r0=1(h-Ia》=ar1n+···十am-11Pm- 412

Computing the inner product (piro)we find by (4:3a) Geometrically,the equation f(r)=const.defines and (4:4)that a:=a:,and hence that h=rm,as was an ellipsoid of dimension n-1.The point at which to be proved. f()has a minimum is the center of the ellipsoid and The cd-method can be looked upon as a relaxation is the solution of Ar=k.The i-dimensional plane method.In order to establish this result,we intro- P:,described in the last theorem.cuts the ellipsoid duce the function f(2)=f(ro)in an ellipsoid E of dimension i-1. unless Ei is the point ro itself.(In the cg-method, f(x)=(h-r,A(h-r)=(,Ar)-2(,k)+(hk).(4:5) E:is never degenerate,unless ro=h.)The point t is the center of E:.Hence we have the corollary: Clearly,f(r)20 and f(r)=0 if,and only if,r=h. Corollary 1.The point x is the center of the The function f(r)can be used as a measure of the (i-1)-dimensional ellipsoid in ahich the i-dimensional "goodness"of x as an estimate of h.Since it plays plane P:intersects the (n-1)-dimensional ellipsoid an important role in our considerations,it will be f(x)=f(). referred to as the error function.If p is a direction Although the function f()is the fundamental vector,we have the useful relation error function which decreases at each step of the relaxation.one is unable to compute f(z)without f(r+ap)=f(r)-2a(p,r)+a2(p.Ap), (4:6) knowing the solution h we are seeking.In order to obtain an estimate of the magnitude of f(x)we may where r=k-AI=A(h-z),as one readily verifics use the following: by substitution.Considered as a function of a. Theorem 4:4. The error rector y=h-x,the residual the function f(r-ap)has a minimum value at r=k-AI,and the error function f(r)satisfy the a=0,where relations (P,r) (4:7) a何s)s ri a=(p:Ap) 4:11) (y) This minimum value differs from f(r)by the quantitv where u(z)is the Rayleigh quotient fx)-fr+a川=a(p,Ap)=· (4:8) u(e)=3,d 2 4:12) Comparing (4:7)with (4:1a),we obtain the first two The Rayleigh quotrent of the error rector y does not sentences of the following result: eaceed that of the residual r.that is, Theorem 4:3.The point ri minimizes f(r)on the line r=r1+api-1.At the i-th step the error f() u()≤u(r). 1:13) is rc'ared by the amount Aorcorer, ir (( (4:14) 小- (4:9) The proof of this result is based on the Schwarzian In fact.the point r:minimizes f(r)on the i-dimensional quotients plane P:of points (a.1)(1.1)(12,A22) (,a)≤(a,4)≤(A2,A2) (4:15 x=r0a0Pn十.+a-P-1, (4:10) The first of these follows from the inequality of Schwarz where ao,..ai are parameters. This plane con- tains the points ro......i (p.q)2≤(p,p)(q,q) (4:16) In view of this result the cd-method is a method by choosing p=2,g=42.The second is obtained of relaxation of the error furction f).An iteration by selecting p=Ba,g=B3z,where B2=A. of the routine may accordingly be referred to as In order to prove theorem 4:4 recall that if we a relaxation. set y=h-r,then In order to prove the third sentenee of the theorem observe that at the point (4:10) r=k-1=1(h-了)=y f(r)=f(ro)- 会2apo-p4nl fr)=(y.1y) by (4:5).I'sing the inequalities (4:15)with =4. At.the minimum point we have we see that (p,.ro) a-(piAp 40w0=3.1ws1.10》=2s40 .10=≤(.4.10 and hence a,=a).by (4:4).The minimum point is =, ccordingly the point r.as was to be proved. (,ur. 413