complexity of the algorithm Example 4.8.4:[Johnson] 4.8.3 Decoding of LDPC codes In his Ph.D.thesis of 1961,Gallager also presented two iterative decoding algorithms for LDPC codes.One is for hard-decision decoding.and another for soft-decision decoding Since that time,other researchers have independently discovered that algorithm and relate algorithms,albeit sometimes for different applications.Now,there are various methods fo decoding LDPC codes,ranging from low to high decoding complexities and from reasonably good to very good error performance.These methods include One-step majority logic(OSML)decoding. ■Bit.fni :(BF)dec Iterative decoding based on message passing algorithm (IDMPA).(The term "message passing algorithm"usually refers to the sum-product algorithm,the belief propagation algorithm and their approximations.) these me nods.OSML and BF decodings are hard-decision decodings.Weighted liability-ba ed decoding method.IDMPA is a soft-in soft out(SISO) decoding method,which is actually the sum-product algorithm working on the code's Tanner graph,as discussed in Section 4.7.The OSML decoding is the simplest in terms of decoding complexity.IDMPA provides the best performance but requires higher computational complexity.In the following.we will first outline two hard-decis on decoding algorithms. 4.8.3.1 OSML Decoding of regular LDPC codes Consider a (dd)-regular LDPC code C of length n given by the null space of a qxn parity-check matrix H with column and row weights d,and d,respectively.Let h,h2.,h be the rowsof H.For each code bit position,due to the RC-constraint,there are a set A={h”,h,h} of d,rows in H such that:(1)each row in A,has a 1-component at position /and (2)no two rows in A have a common 1-component at any other position.See the figure below. the /th column 「.001107 0011 A1= 1010. Clearly,the rows in A are orthogonal on the bit position/. Suppose a codeword e=(coC)in Cis transmitted and

11 complexity of the algorithm. Example 4.8.4: [Johnson] 4.8.3 Decoding of LDPC codes In his Ph.D. thesis of 1961, Gallager also presented two iterative decoding algorithms for LDPC codes. One is for hard-decision decoding, and another for soft-decision decoding. Since that time, other researchers have independently discovered that algorithm and related algorithms, albeit sometimes for different applications. Now, there are various methods for decoding LDPC codes, ranging from low to high decoding complexities and from reasonably good to very good error performance. These methods include: One-step majority logic (OSML) decoding; Bit-flipping (BF) decoding; Weighted BF decoding; Iterative decoding based on message passing algorithm (IDMPA). (The term “message passing algorithm” usually refers to the sum-product algorithm, the belief propagation algorithm and their approximations.) Among these methods, OSML and BF decodings are hard-decision decodings. Weighted BF decoding is a reliability-based decoding method. IDMPA is a soft-in soft-out (SISO) decoding method, which is actually the sum-product algorithm working on the code’s Tanner graph, as discussed in Section 4.7. The OSML decoding is the simplest in terms of decoding complexity. IDMPA provides the best performance but requires higher computational complexity. In the following, we will first outline two hard-decision decoding algorithms. 4.8.3.1 OSML Decoding of regular LDPC codes Consider a (dv, dc)-regular LDPC code C of length n given by the null space of a q × n parity-check matrix H with column and row weights dv and dc, respectively. Let h1, h2, ., hq be the rows of H. For each code bit position l, due to the RC-constraint, there are a set { } () () () 1 2 , ,., v ll l A hh h l d = of dv rows in H such that: (1) each row in Al has a 1-component at position l; and (2) no two rows in Al have a common 1-component at any other position. See the figure below. 00110 0011 1010 l ⎡ ⎤ ⎢ ⎥ = ⎢ ⎥ ⎢ ⎥ ⎢ ⎥ ⎣ ⎦ A " " # ### " " Clearly, the rows in Al are orthogonal on the bit position l. Suppose a codeword 01 1 ( , ,., ) n cc c = − c in C is transmitted and the lth column

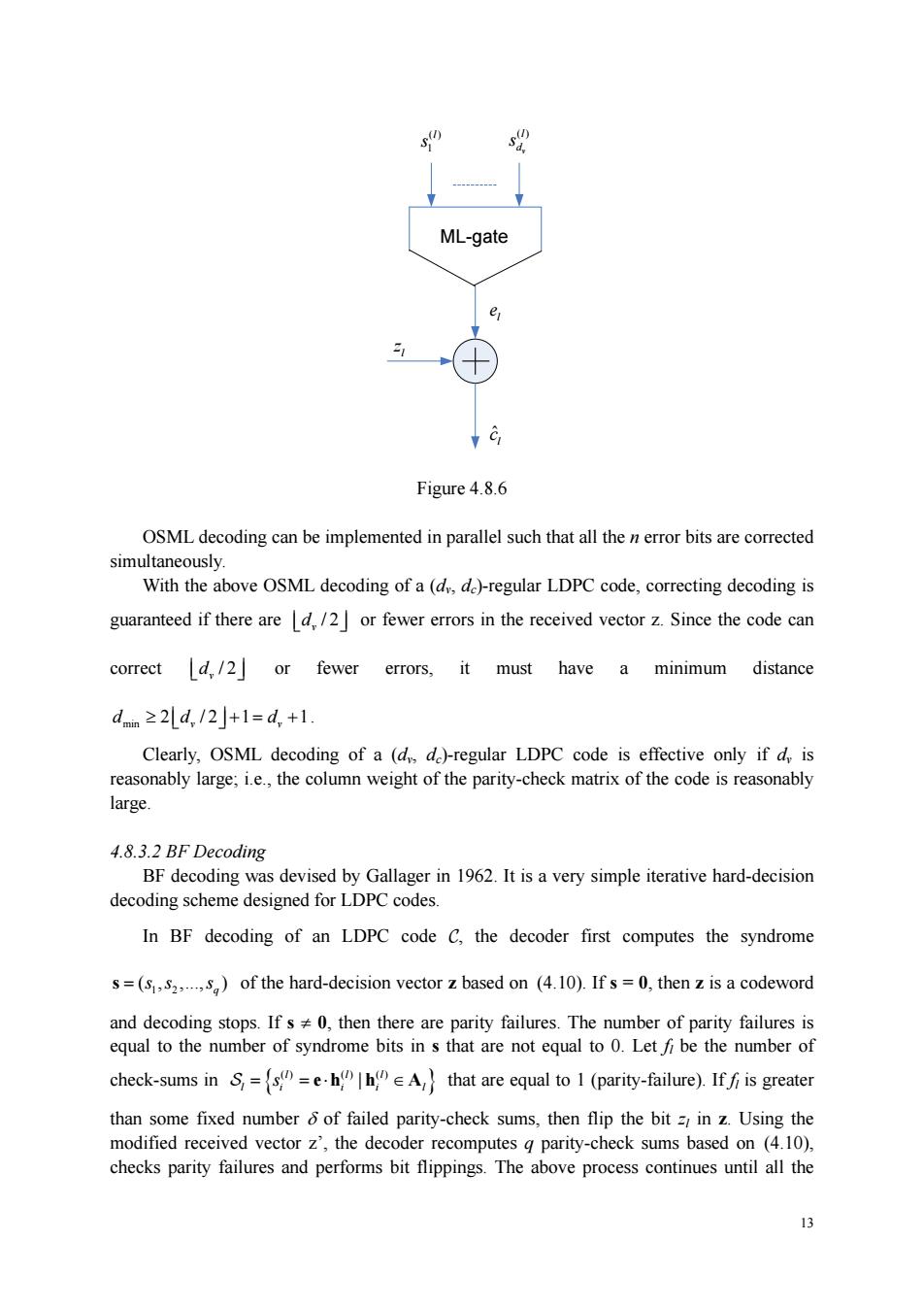

z=(50,51,20-i) is the hard-decision received vector.Thenz=c+e,where e=(eoes.e) is the error pattern in z.The syndrome of z is given by s=(S,52,3,)=zH=(c+e)H=eH where s=eh,=eho+eht.+enhn-l,for Isi≤g (4.10) Eq.(4.10)gives a set of g equations that relate the error digits to the g computed syndrome bits. Remark:=1 indicates that the code bit c is checked bys Consider the following set of d,syndrome bits computed based on the rows of Ar. S=sh =e.h(h eA (4.11) where s =e.h =eoh +eh +.+n,for Is i s dy (4.12) It follows from the structural properties of Ar that every syndrome in Schecks (or contains) the error bit eand no other error bit in e is checked by more than one syndrome in S.Thus. the syndromes in S,are said to be orthogonal on the error bit er.From the discussion in Chapter on majority logic decoding of linear block codes,er can be corrected by using one-step majority logic decoding method.Furthermore,it can be show that if the number of errors ine isd,/2]or fewer,the errors ine can be correctly determined. The decoding algorithm,called one-step majority-logic (OSML)decoding,is stated as follows.The error digit er is decoded as I if a clear majority of the syndromes orthogonal on er is otherwise,eis decoded as .Mathematically,it can be formulated below. OSML decoding:For 0sI<n, ·Decode e1=1if∑ges(2s9-l>l ·Decode1=0if∑.s2s"-l)s0 The sum(2s-1)is called the OSML decision function.Fig.4.8.6 is an illustration of OSML decoding

12 01 1 ( , ,., ) n zz z = − z is the hard-decision received vector. Then z = c + e, where 01 1 ( , ,., ) n ee e = − e is the error pattern in z. The syndrome of z is given by 1 2 ( , ,., ) ( ) T TT q s zH c e H eH = = =+ = ss s where i i i i n in 0 ,0 1 ,1 1 , 1 s eh eh e h =⋅ = + + + − − e h " , for 1≤ i ≤ q (4.10) Eq. (4.10) gives a set of q equations that relate the error digits to the q computed syndrome bits. Remark: , 1 i l h = indicates that the code bit is checked by l i c s . Consider the following set of dv syndrome bits computed based on the rows of Al: { } () () () | l ll l i ii l S = =⋅ ∈ s eh h A (4.11) where () () () () () 0 ,0 1 ,1 1 , 1 l l ll l i i i i n in s eh eh e h =⋅ = + + + − − e h " , for 1≤ i ≤ dv (4.12) It follows from the structural properties of Al that every syndrome in Sl checks (or contains) the error bit el and no other error bit in e is checked by more than one syndrome in Sl. Thus, the syndromes in Sl are said to be orthogonal on the error bit el. From the discussion in Chapter ? on majority logic decoding of linear block codes, el can be corrected by using one-step majority logic decoding method. Furthermore, it can be show that if the number of errors in e is / 2 v ⎢ ⎥ d⎣ ⎦ or fewer, the errors in e can be correctly determined. The decoding algorithm, called one-step majority-logic (OSML) decoding, is stated as follows. The error digit el is decoded as 1 if a clear majority of the syndromes orthogonal on el is 1; otherwise, el is decoded as 0. Mathematically, it can be formulated below. The sum ( ) ( ) ( ) l 2 1 j l l j s s ∈ ∑ − S is called the OSML decision function. Fig. 4.8.6 is an illustration of OSML decoding. OSML decoding: For 0 ≤ <l n , Decode el = 1 if ( ) ( ) ( ) l 2 11 j l l j s s ∈ ∑ − > S ; Decode el = 0 if ( ) ( ) ( ) l 2 10 j l l j s s ∈ ∑ − ≤ S

ML-gate Figure 4.8.6 OSML decoding can be implemented in parallel such that all bits are corrected simultaneously. With the above OSML decoding of a(dd)-regular LDPC code,correcting decoding is guaranteed if there ared,/2 or fewer errors in the received vector z.Since the code can correct d,/2]or fewer errors,it must have a minimum distance d≥2Ld,/2j+1=d+1 Clearly,OSML decoding of a (d de)-regular LDPC code is effective only if d,is reasonably large;i.e.,the column weight of the parity-check matrix of the code is reasonably large. 4.8.3.2 BF Decoding BF decoding was devised by Gallager in 1962.It is a very simple iterative hard-decision decoding scheme designed for LDPC codes. In BF decoding of an LDPC code C.the decoder first computes the syndrome s=(s.)of the hard-decision vector z based on (4.10).Ifs=0,then z is a codeword and decoding stops.If s0,then there are parity failures.The number of parity failures is equal to the number of syndrome bits in s that are not equal to 0.Letfi be the number of check-sums in==eA that are equal to 1(parity-failure).If is greater than some fixed number of failed parity-check sums,then flip the bit=in z.Using the modified received vector z',the decoder recomputes g parity-check sums based on (4.10), checks parity failures and performs bit flippings.The above process continues until all the 3

13 ( ) 1 l s ( ) v l d s ML-gate l z ˆ l c l e Figure 4.8.6 OSML decoding can be implemented in parallel such that all the n error bits are corrected simultaneously. With the above OSML decoding of a (dv, dc)-regular LDPC code, correcting decoding is guaranteed if there are / 2 v ⎢ ⎥ d⎣ ⎦ or fewer errors in the received vector z. Since the code can correct / 2 v ⎢ ⎥ d⎣ ⎦ or fewer errors, it must have a minimum distance min 2 /2 1 1 v v dd d ≥ += + ⎢ ⎥ ⎣ ⎦ . Clearly, OSML decoding of a (dv, dc)-regular LDPC code is effective only if dv is reasonably large; i.e., the column weight of the parity-check matrix of the code is reasonably large. 4.8.3.2 BF Decoding BF decoding was devised by Gallager in 1962. It is a very simple iterative hard-decision decoding scheme designed for LDPC codes. In BF decoding of an LDPC code C, the decoder first computes the syndrome 1 2 ( , ,., ) q s = ss s of the hard-decision vector z based on (4.10). If s = 0, then z is a codeword and decoding stops. If s ≠ 0, then there are parity failures. The number of parity failures is equal to the number of syndrome bits in s that are not equal to 0. Let fl be the number of check-sums in { } () () () | l ll l i ii l S = =⋅ ∈ s eh h A that are equal to 1 (parity-failure). If fl is greater than some fixed number δ of failed parity-check sums, then flip the bit zl in z. Using the modified received vector z’, the decoder recomputes q parity-check sums based on (4.10), checks parity failures and performs bit flippings. The above process continues until all the

parity-check sums(syndrome bits)are equal to0,i.e.,no parity failure. gthreshold,and should be chosen to optimize the The simplest version of BF decoding is the one without threshold o.which is described as follows. 1)Compute the g parity-check sums based on (4.10).If all the parity-check sums are zero.stop the decoding 2) Find the number of failed parity-check sums for each received bit,denoted by f人,0≤i<n 3)Identify the set Fof received bits for which f is the largest. Flip the bits in 5) Repeat steps 1)to 4)until all the parity-check sums are zero or a preset maximum number of iterations is reached. 4.8.3.3 Hard-Decoding Based on Messa e-Passing Algorithm The above BF decoding can also be described code's Tanner graph,resulting in th hard-decision version of message-passing algorithm(MPA).Todoso,we first introduce some notation.Denote by the message sent from a variable node c,toa check nodes and by the message sent from a check node s to a variable node c Both and o take values in the message alphabet M.For hard-decision decoding.M=(0,1).For soft-decision decoding.M=-,)(in the log domain);and M=(0.1)(in the probability domain). Besides,we use the following notation.Let N,()={hn≠0,1sj≤q表示与variable nodec相连的check节点索引集合 N.U)={hn≠0,lsi≤n表示与check node.号相连的variable节点索引集合. 并且,为记号简单起见,我们将使用N,()八j来表示N,()八{U},即排除check node.之 外,与variable node c,相连的check节点索引集合。(the set of check nodes that connect to variable node c excluding check node s)Similarly,let ()\i denote the set of variable nodes that connect to check node s.excluding variable node c. For a variable nodec(a check nodes)we useE()(E))to denote the set of edges that connect c(s)to nodes in()(N()).Clearly,=().(similarly,=) With these notations,we now present the hard-decision MPA.Consider a binary-input 14

14 parity-check sums (syndrome bits) are equal to 0, i.e., no parity failure. The parameter δ is called the flipping threshold, and should be chosen to optimize the error performance while minimizes the number of decoding iterations. The simplest version of BF decoding is the one without threshold δ, which is described as follows. 1) Compute the q parity-check sums based on (4.10). If all the parity-check sums are zero, stop the decoding. 2) Find the number of failed parity-check sums for each received bit, denoted by , 0 i f ≤ <i n . 3) Identify the set F of received bits for which fi is the largest. 4) Flip the bits in F. 5) Repeat steps 1) to 4) until all the parity-check sums are zero or a preset maximum number of iterations is reached. 4.8.3.3 Hard-Decoding Based on Message-Passing Algorithm The above BF decoding can also be described using code’s Tanner graph, resulting in the hard-decision version of message-passing algorithm (MPA). To do so, we first introduce some notation. Denote by i j , q the message sent from a variable node ci to a check node sj, and by σ j i, the message sent from a check node sj to a variable node ci. Both i j , q and σ j i, take values in the message alphabet M. For hard-decision decoding, M={0, 1}. For soft-decision decoding, M=(-∞, ∞) (in the log domain); and M=(0, 1) (in the probability domain). Besides, we use the following notation. Let Nv ji ( ) | 0,1 i jh j q = ≠ ≤≤ { }表示与 variable node ci相连的 check 节点索引集合, Nc ji ( ) | 0,1 j ih i n = ≠ ≤≤ { }表示与 check node sj相连的 variable 节点索引集合. 并且,为记号简单起见,我们将使用 ()\ v N i j 来表示 ( )\{ } v N i j ,即排除 check node sj 之 外,与 variable node ci相连的 check 节点索引集合。(the set of check nodes that connect to variable node ci, excluding check node sj.)Similarly, let ( ) \ c N j i denote the set of variable nodes that connect to check node sj, excluding variable node ci. For a variable node ci (a check node sj), we use Ev(i) (Ec(j)) to denote the set of edges that connect ci (sj) to nodes in Nv(i) (Nc(j)). Clearly, |Nv(i)| = |Ev(i)|. (similarly, |Nc(j)| = |Ec(j)|.) With these notations, we now present the hard-decision MPA. Consider a binary-input

memoryless channel.Lety=0,1)be the received value corresponding to the ith variable node.Gallager's hard-decoding algorithm may be deseribed as follows Gallager's hard-decoding [also called Gallager's algorithm B]: Do the following alternatively: l)Update for q.,:For all edges e=(c,s,),l≤i≤n,l≤j≤q,do the following in parallel: If this is the zeroth round,then set=y.Otherwise compute as follows. If more than (-1)of incoming messages along edges in E(e)were equal to the same value be,1)in the previous round.then set=b. Otherwise set q.=y 2)Update forFor all edges e=(c.s).Isisn,Isjsq,do the following in es nodedheete XoR o round along edges in E)eie= 9,(mod2). Note that the message passed contain only extrinsic information;ie.,the value of depends only on the valuewhereruns over all check nodes incident oncother thans (Similarly,for) Decoding is stopped after a preset number of rounds is reached,or before that,provided zH=0.(Each variable node can determine its most likely value based on its neighbors.)If zH0after a preset number of rounds is reached,then we say that the decoding has failed. Note:All message-passing algorithms must respect the which was introduced with turbo codes Rule [Extrinsic information principle:A message sent from a node along an edge e canot depend on any message previously received on edge e. This message passing is to prevent correlation between consecutive decoding iterations. 4.8.3.4 Weighted BF Decoding The simple BF decoding given in Section 4.8.3.2 can be improved by including reliability information of the received symbols in their decoding decisions. Consider the soft-decision received sequence y=()at the output of the 15

15 memoryless channel. Let yi∈M={0, 1} be the received value corresponding to the ith variable node. Gallager’s hard-decoding algorithm may be described as follows. Gallager’s hard-decoding [also called Gallager’s algorithm B]: Do the following alternatively: 1) Update for i j , q : For all edges ( , ), 1 , 1 i j e cs i n j q = ≤≤ ≤ ≤ , do the following in parallel: If this is the zeroth round, then set ij i , q y = . Otherwise compute i j , q as follows. - If more than δ(|Ev(i)|-1) of incoming messages along edges in Ev(i)\{e} were equal to the same value b∈{0, 1} in the previous round, then set i j , q b = . - Otherwise set ij i , q y = . 2) Update for σ j i, : For all edges ( , ), 1 , 1 i j e cs i n j q = ≤≤ ≤ ≤ , do the following in parallel: The check node sj sends the variable node ci the XOR of the values it received in this round along edges in Ec(j)\{e}; i.e., , ', ' ( )\{ } c ji i j i ji σ q ∈ = ∑ N (mod 2). Note that the message passed contain only extrinsic information; i.e., the value of i j , q depends only on the value σ j i', , where j’ runs over all check nodes incident on ci other than sj. (Similarly, for σ j i, .) Decoding is stopped after a preset number of rounds is reached, or before that, provided T zH 0 = . (Each variable node can determine its most likely value based on its neighbors.) If T zH 0 ≠ after a preset number of rounds is reached, then we say that the decoding has failed. Note: All message-passing algorithms must respect the following rule, which was introduced with turbo codes. Rule [Extrinsic information principle]: A message sent from a node along an edge e cannot depend on any message previously received on edge e. This message passing is to prevent correlation between consecutive decoding iterations. 4.8.3.4 Weighted BF Decoding The simple BF decoding given in Section 4.8.3.2 can be improved by including reliability information of the received symbols in their decoding decisions. Consider the soft-decision received sequence 01 1 ( , ,., ) n y y y = − y at the output of the