G.5.Compute Capability 5.x..... 229 G.5.1.Architecture......... 229 G.5.2.Global Memory.............. 230 G.5.3.Shared Memory................ 230 G.6.Compute Capability 6.x...... …234 G.6.1.Architecture...................... 0.234 G.6.2.Global Memory.................... .234 G.6.3.Shared Memory............... 234 Appendix H.Driver API................ ,235 H.1.C0 ntext.… ….238 H.2.Module.… .239 H.3.Kernel Execution.......................... ...240 H.4.Interoperability between Runtime and Driver APls. .242 Appendix I.CUDA Environment Variables......... .243 Appendix J.Unified Memory Programming................ .246 J.1.Unified Memory Introduction............. ..246 J.1.1.Simplifying GPU Programming................... .247 J.1.2.Data Migration and Coherency...... 248 J.1.3.GPU Memory Oversubscription........249 J.1.4.Multi-GPU Support.............. .249 J.1.5.System Requirements..................... 250 J.2.Programming Model................ ...250 J.2.1.Managed Memory Opt In....................... ….250 J.2.1.1.Explicit Allocation Using cudaMallocManaged()..........................250 J.2.1.2.Global-Scope Managed Variables Usingmanaged_.................................251 J.2.2.Coherency and concurrency.................252 J.2.2.1.GPU Exclusive Access To Managed Memory............252 J.2.2.2.Explicit Synchronization and Logical GPU Activity..........................253 J.2.2.3.Managing Data Visibility and Concurrent CPU GPU Access with Streams.........254 J.2.2.4.Stream Association Examples.................255 J.2.2.5.Stream Attach With Multithreaded Host Programs..56 J.2.2.6.Advanced Topic:Modular Programs and Data Access Constraints....................257 J.2.2.7.Memcpy()/Memset()Behavior With Managed Memory................ 258 J.2.3.Language Integration.............258 J.2.3.1.Host Program Errors withmanaged_Variables.............................. 259 J.2.4.Querying Unified Memory Support....260 J.2.4.1.Device Properties............. ,260 .2.4.2.Pointer Attributes...260 J.2.5.Advanced Topics...................... ...260 J.2.5.1.Managed Memory with Multi-GPU Programs on pre-6.x Architectures...............260 J.2.5.2.Using fork()with Managed Memory.................. 261 J.3.Performance Tuning..................... .261 J.3.1.Data Prefetching................. ..262 www.nvidia.com CUDA C Programming Guide PG-02829-001_v8.01xi

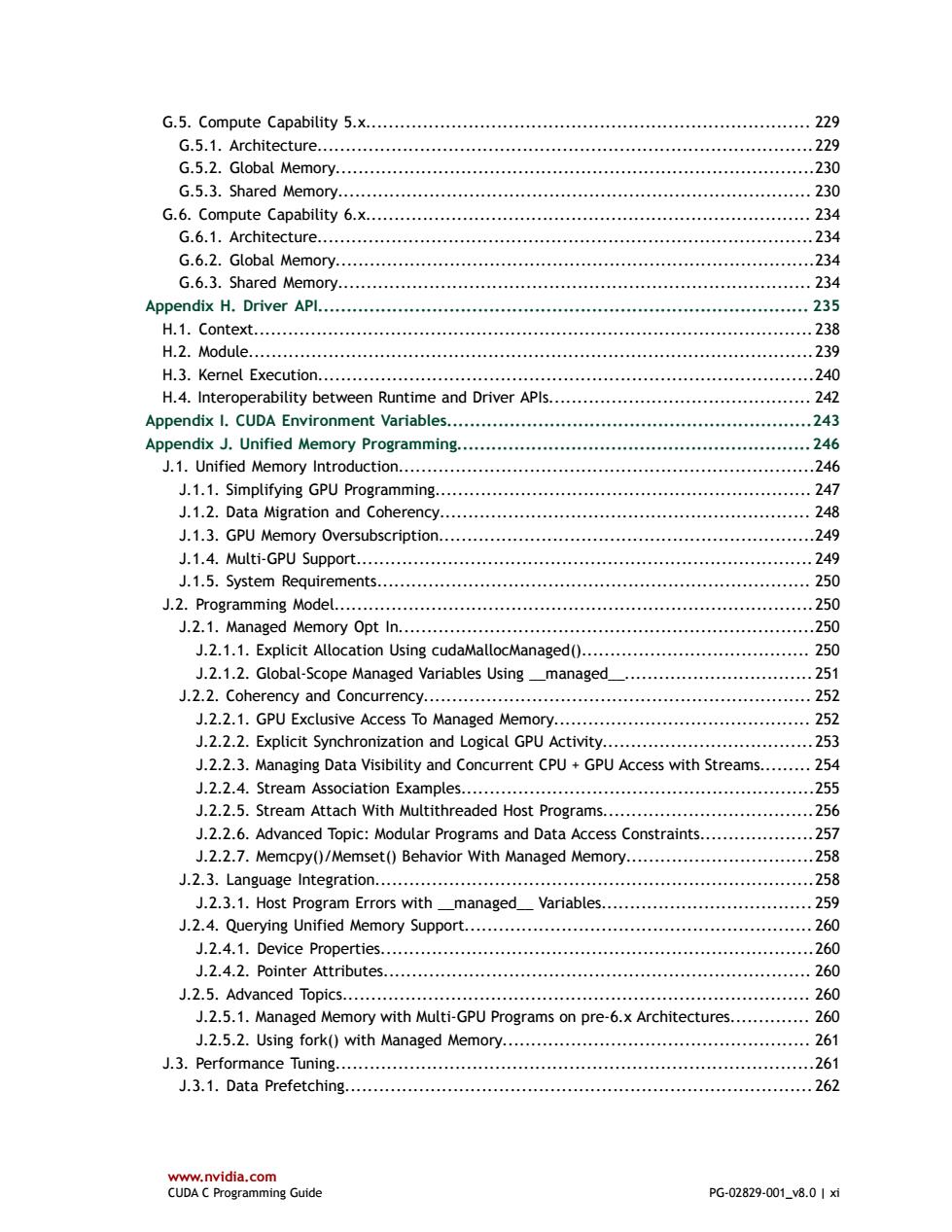

www.nvidia.com CUDA C Programming Guide PG-02829-001_v8.0 | xi G.5. Compute Capability 5.x.............................................................................. 229 G.5.1. Architecture.......................................................................................229 G.5.2. Global Memory....................................................................................230 G.5.3. Shared Memory................................................................................... 230 G.6. Compute Capability 6.x.............................................................................. 234 G.6.1. Architecture.......................................................................................234 G.6.2. Global Memory....................................................................................234 G.6.3. Shared Memory................................................................................... 234 Appendix H. Driver API...................................................................................... 235 H.1. Context.................................................................................................. 238 H.2. Module...................................................................................................239 H.3. Kernel Execution.......................................................................................240 H.4. Interoperability between Runtime and Driver APIs.............................................. 242 Appendix I. CUDA Environment Variables................................................................243 Appendix J. Unified Memory Programming..............................................................246 J.1. Unified Memory Introduction.........................................................................246 J.1.1. Simplifying GPU Programming.................................................................. 247 J.1.2. Data Migration and Coherency................................................................. 248 J.1.3. GPU Memory Oversubscription..................................................................249 J.1.4. Multi-GPU Support................................................................................ 249 J.1.5. System Requirements............................................................................ 250 J.2. Programming Model....................................................................................250 J.2.1. Managed Memory Opt In.........................................................................250 J.2.1.1. Explicit Allocation Using cudaMallocManaged()........................................ 250 J.2.1.2. Global-Scope Managed Variables Using __managed__................................. 251 J.2.2. Coherency and Concurrency.................................................................... 252 J.2.2.1. GPU Exclusive Access To Managed Memory............................................. 252 J.2.2.2. Explicit Synchronization and Logical GPU Activity.....................................253 J.2.2.3. Managing Data Visibility and Concurrent CPU + GPU Access with Streams......... 254 J.2.2.4. Stream Association Examples..............................................................255 J.2.2.5. Stream Attach With Multithreaded Host Programs.....................................256 J.2.2.6. Advanced Topic: Modular Programs and Data Access Constraints....................257 J.2.2.7. Memcpy()/Memset() Behavior With Managed Memory.................................258 J.2.3. Language Integration.............................................................................258 J.2.3.1. Host Program Errors with __managed__ Variables..................................... 259 J.2.4. Querying Unified Memory Support............................................................. 260 J.2.4.1. Device Properties............................................................................260 J.2.4.2. Pointer Attributes........................................................................... 260 J.2.5. Advanced Topics.................................................................................. 260 J.2.5.1. Managed Memory with Multi-GPU Programs on pre-6.x Architectures.............. 260 J.2.5.2. Using fork() with Managed Memory...................................................... 261 J.3. Performance Tuning....................................................................................261 J.3.1. Data Prefetching.................................................................................. 262

J.3.2.Data Usage Hints...... 263 J.3.3.Querying Usage Attributes......264 www.nvidia.com CUDA C Programming Guide PG-02829-001_v8.0|xi

www.nvidia.com CUDA C Programming Guide PG-02829-001_v8.0 | xii J.3.2. Data Usage Hints................................................................................. 263 J.3.3. Querying Usage Attributes...................................................................... 264

LIST OF FIGURES Figure 1 Floating-Point Operations per Second for the CPU and GPU...................................1 Figure 2 Memory Bandwidth for the cpu and GPU.....................................2 Figure 3 The GPU Devotes More Transistors to Data Processing....................................2 Figure 4 GPU Computing Applications........................ 4 Figure 5 Automatic Scalability............ ..6 Figure 6 Grid of Thread Blocks..................... 10 Figure 7 Memory Hierarchy............ .12 Figure 8 Heterogeneous Programming............. .14 Figure 9 Matrix Multiplication without Shared Memory... …25 Figure 10 Matrix Multiplication with Shared Memory..................................... ..28 Figure 11 The Driver API Is Backward but Not Forward Compatible....... .66 Figure 12 Parent-Child Launch Nesting......................... 141 Figure 13 Nearest-Point Sampling Filtering Mode................ .214 Figure 14 Linear Filtering Mode................... 215 Figure 15 One-Dimensional Table Lookup Using Linear Filtering..... 216 Figure 16 Examples of Global Memory Accesses............. 228 Figure 17 Strided Shared Memory Accesses........... 232 Figure 18 Irregular Shared Memory Accesses............. 233 Figure 19 Library Context Management....... 239 www.nvidia.com CUDA C Programming Guide PG-02829-001_v8.01xi

www.nvidia.com CUDA C Programming Guide PG-02829-001_v8.0 | xiii LIST OF FIGURES Figure 1 Floating-Point Operations per Second for the CPU and GPU ...................................1 Figure 2 Memory Bandwidth for the CPU and GPU .........................................................2 Figure 3 The GPU Devotes More Transistors to Data Processing ......................................... 2 Figure 4 GPU Computing Applications ........................................................................ 4 Figure 5 Automatic Scalability ................................................................................. 6 Figure 6 Grid of Thread Blocks ...............................................................................10 Figure 7 Memory Hierarchy ................................................................................... 12 Figure 8 Heterogeneous Programming ...................................................................... 14 Figure 9 Matrix Multiplication without Shared Memory .................................................. 25 Figure 10 Matrix Multiplication with Shared Memory .....................................................28 Figure 11 The Driver API Is Backward but Not Forward Compatible ................................... 66 Figure 12 Parent-Child Launch Nesting .................................................................... 141 Figure 13 Nearest-Point Sampling Filtering Mode ........................................................214 Figure 14 Linear Filtering Mode ............................................................................ 215 Figure 15 One-Dimensional Table Lookup Using Linear Filtering ...................................... 216 Figure 16 Examples of Global Memory Accesses ......................................................... 228 Figure 17 Strided Shared Memory Accesses ...............................................................232 Figure 18 Irregular Shared Memory Accesses .............................................................233 Figure 19 Library Context Management ................................................................... 239

LIST OF TABLES Table 1 Cubemap Fetch................ .50 Table 2 Throughput of Native Arithmetic Instructions..................................... 82 Table 3 Alignment Requirements in Device Code..94 Table 4 New Device-only Launch Implementation Functions........................................ 150 Table 5 Supported API Functions.................. 150 Table 6 Single-Precision Mathematical Standard Library Functions with Maximum ULP Error....159 Table 7 Double-Precision Mathematical Standard Library Functions with Maximum ULP Error...163 Table 8 Functions Affected by -use_fast_math.. 167 Table 9 Single-Precision Floating-Point Intrinsic Functions........ ..168 Table 10 Double-Precision Floating-Point Intrinsic Functions........................ 169 Table 11 C++11 Language Features........................ 170 Table 12 C++14 Language Features.......................... 173 Table 13 Feature Support per Compute Capability........ 217 Table 14 Technical Specifications per Compute Capability...... 218 Table 15 Objects Available in the CUDA Driver APl..... 235 Table 16 CUDA Environment Variables.................. ,243 www.nvidia.com CUDA C Programming Guide PG-02829-001_v8.0|xiv

www.nvidia.com CUDA C Programming Guide PG-02829-001_v8.0 | xiv LIST OF TABLES Table 1 Cubemap Fetch ........................................................................................50 Table 2 Throughput of Native Arithmetic Instructions ................................................... 82 Table 3 Alignment Requirements in Device Code ......................................................... 94 Table 4 New Device-only Launch Implementation Functions .......................................... 150 Table 5 Supported API Functions ........................................................................... 150 Table 6 Single-Precision Mathematical Standard Library Functions with Maximum ULP Error .... 159 Table 7 Double-Precision Mathematical Standard Library Functions with Maximum ULP Error... 163 Table 8 Functions Affected by -use_fast_math .......................................................... 167 Table 9 Single-Precision Floating-Point Intrinsic Functions .............................................168 Table 10 Double-Precision Floating-Point Intrinsic Functions .......................................... 169 Table 11 C++11 Language Features ........................................................................ 170 Table 12 C++14 Language Features ........................................................................ 173 Table 13 Feature Support per Compute Capability ......................................................217 Table 14 Technical Specifications per Compute Capability ............................................ 218 Table 15 Objects Available in the CUDA Driver API ..................................................... 235 Table 16 CUDA Environment Variables .....................................................................243

Chapter 1. INTRODUCTION 1.1.From Graphics Processing to General Purpose Parallel Computing Driven by the insatiable market demand for realtime,high-definition 3D graphics, the programmable Graphic Processor Unit or GPU has evolved into a highly parallel, multithreaded,manycore processor with tremendous computational horsepower and very high memory bandwidth,as illustrated by Figure 1 and Figure 2. Theoretical GFLOP/s at base clock 11000 10500 ◆-MDLA GPU Single Precision 10000 9500 9000 8500 8000 750 7000 6500 6000 500 350 2500 200 1500 500 0 2005 2007 2011 2013 2015 Figure 1 Floating-Point Operations per Second for the CPU and GPU www.nvidia.com CUDA C Programming Guide PG-02829-001_v8.011

www.nvidia.com CUDA C Programming Guide PG-02829-001_v8.0 | 1 Chapter 1. INTRODUCTION 1.1. From Graphics Processing to General Purpose Parallel Computing Driven by the insatiable market demand for realtime, high-definition 3D graphics, the programmable Graphic Processor Unit or GPU has evolved into a highly parallel, multithreaded, manycore processor with tremendous computational horsepower and very high memory bandwidth, as illustrated by Figure 1 and Figure 2. Figure 1 Floating-Point Operations per Second for the CPU and GPU