Markov Property "The future is independent of the past given the present" Definition A state St is Markov if and only if P[S+1|S]=P[S+1|Si,,S] The state captures all relevant information from the history Once the state is known,the history may be thrown away i.e.The state is a sufficient statistic of the future 4口◆464三1=,¥9QC

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Markov Property

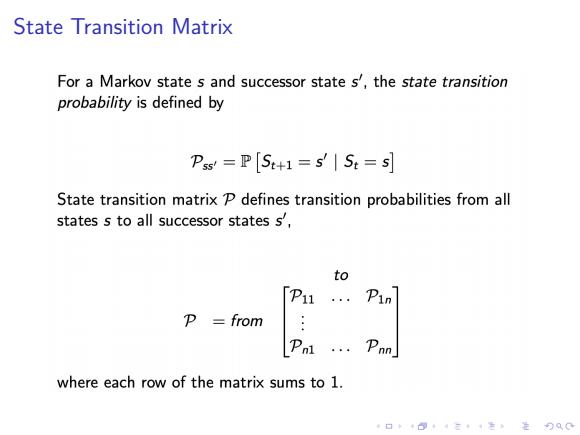

State Transition Matrix For a Markov state s and successor state s',the state transition probability is defined by Pss=P St+1=s St=s State transition matrix P defines transition probabilities from all states s to all successor states s', to [P11 Pin P =from [Pm where each row of the matrix sums to 1. 口卡回·三4色,是分Q0

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . State Transition Matrix

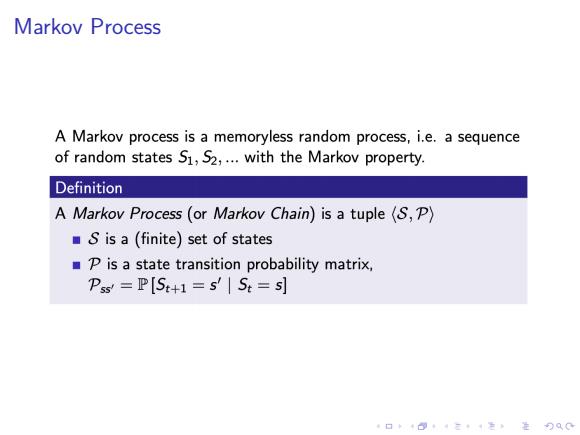

Markov Process A Markov process is a memoryless random process,i.e.a sequence of random states S1,S2,...with the Markov property. Definition A Markov Process (or Markov Chain)is a tuple (S,P) S is a (finite)set of states p is a state transition probability matrix, Pss =P[St+1=s'St=5] 4口◆4⊙t1三1=,¥9QC

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Markov Process

Example:Student Markov Chain 0.9 Facebook Sleep 0.1 0.5 10.2 1.0 08 0.6 Class 1 0.5 Pass 0.4 0.4 0.2 0.4 Pub 口卡B·三4色进分双0

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Example: Student Markov Chain

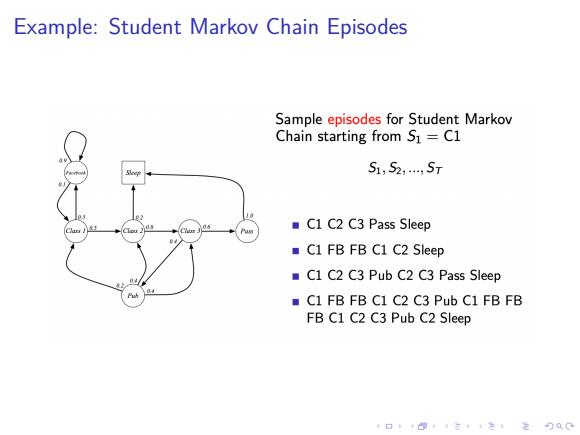

Example:Student Markov Chain Episodes Sample episodes for Student Markov Chain starting from S1=C1 S1;S2....ST C1 C2 C3 Pass Sleep C1 FBFB C1 C2 Sleep C1 C2 C3 Pub C2 C3 Pass Sleep C1 FBFB C1 C2 C3 Pub C1 FBFB FB C1 C2 C3 Pub C2 Sleep 4口◆4⊙t1三1=,¥9QC

. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Example: Student Markov Chain Episodes