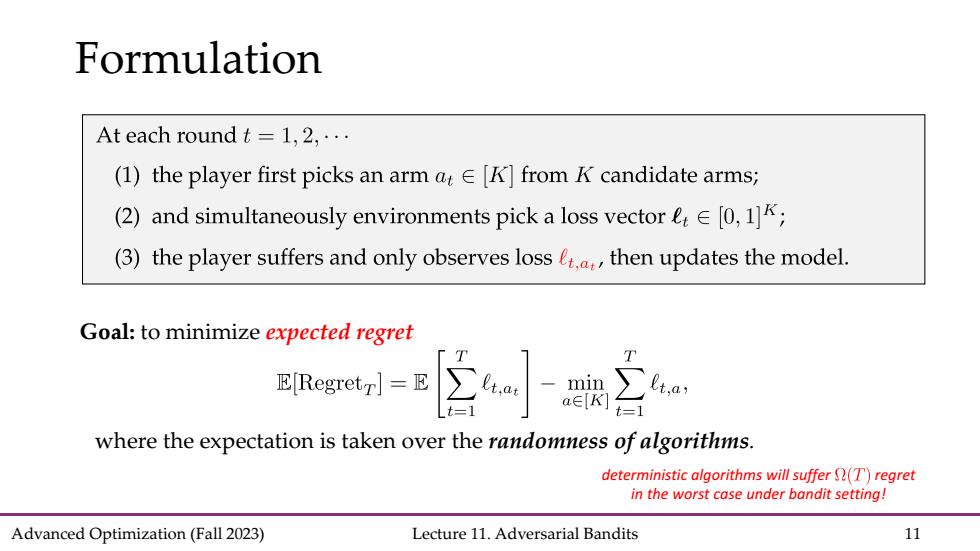

Formulation At each round t=1,2,... (1)the player first picks an arm at E [K]from K candidate arms; (2)and simultaneously environments pick a loss vector eE[0,1]K; (3)the player suffers and only observes loss (.then updates the model. Goal:to minimize expected regret T EfRegretrE REIK -min where the expectation is taken over the randomness of algorithms. deterministic algorithms will suffer (T)regret in the worst case under bandit setting! Advanced Optimization(Fall 2023) Lecture 11.Adversarial Bandits 11

Advanced Optimization (Fall 2023) Lecture 11. Adversarial Bandits 11 Formulation Goal: to minimize expected regret where the expectation is taken over the randomness of algorithms. deterministic algorithms will suffer regret in the worst case under bandit setting!

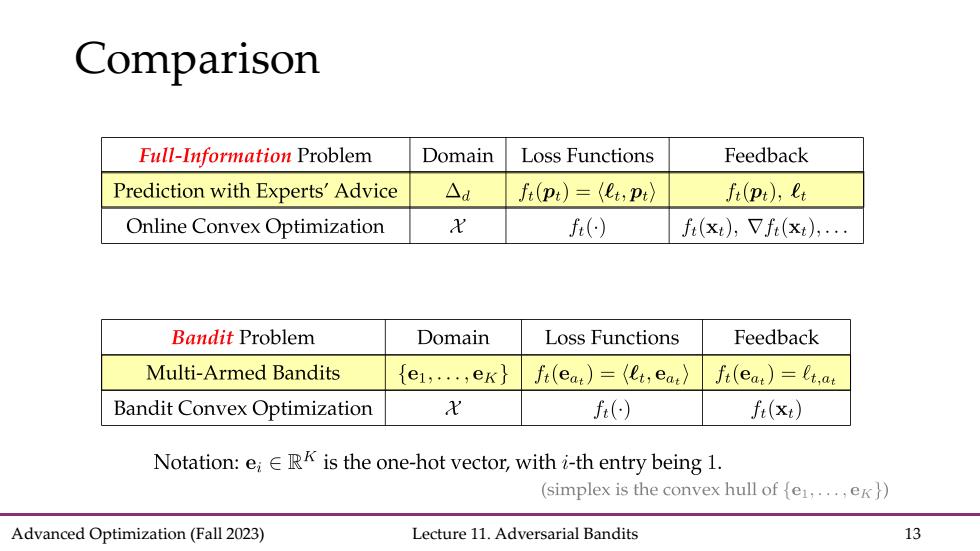

Comparison Full-Information Problem Domain Loss Functions Feedback Prediction with Experts'Advice △d f(pt)=(t,pt〉 fi(pi),et Online Convex Optimization X f() fi(x),Vfi(xt).... Bandit Problem Domain Loss Functions Feedback Multi-Armed Bandits {e1,.,eK} ft(eat)=(et,eat) ft(eat)=lt,at Bandit Convex Optimization X f() fi(xt) Notation:e;ERK is the one-hot vector,with i-th entry being 1. (simplex is the convex hull of [e1,...,ex)) Advanced Optimization(Fall 2023) Lecture 11.Adversarial Bandits 12

Advanced Optimization (Fall 2023) Lecture 11. Adversarial Bandits 12 Comparison

Comparison Full-Information Problem Domain Loss Functions Feedback Prediction with Experts'Advice △d fi(pt)=(et,p) fi(Pt),et Online Convex Optimization X f() fi(x);Vfi(xt);... Bandit Problem Domain Loss Functions Feedback Multi-Armed Bandits {e1,,er} ft(eat)=(et,eat》 ft(eat)=t,at Bandit Convex Optimization X f() fi(x) Notation:e;ERK is the one-hot vector,with i-th entry being 1. (simplex is the convex hull of {e1,...ex}) Advanced Optimization(Fall 2023) Lecture 11.Adversarial Bandits 13

Advanced Optimization (Fall 2023) Lecture 11. Adversarial Bandits 13 Comparison

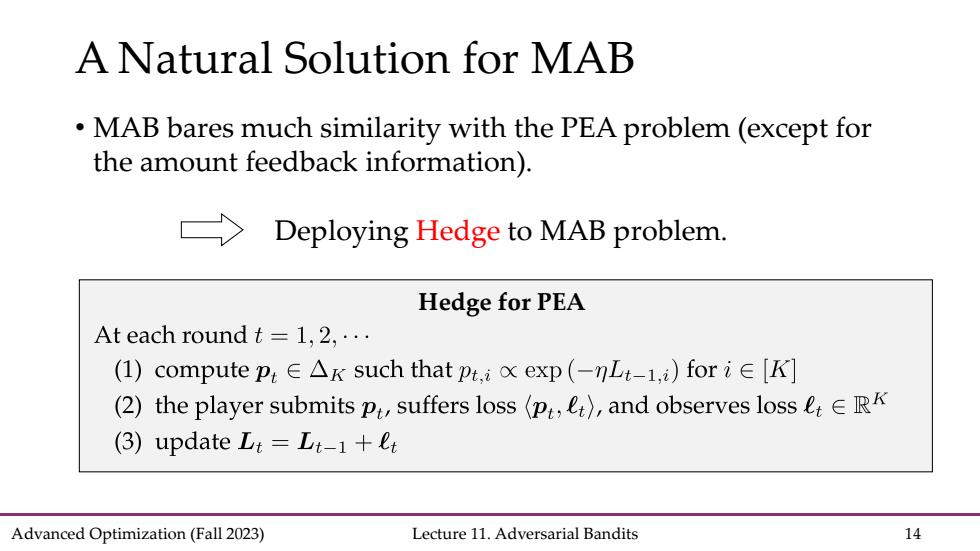

A Natural Solution for MAB MAB bares much similarity with the PEA problem(except for the amount feedback information). Deploying Hedge to MAB problem. Hedge for PEA At each round t=1,2,... (I)compute p:∈△<such that pt,x exp(-nLt-l,i)fori∈[K] (2)the player submits p,suffers loss (p,e),and observes loss eERK (3)update Lt=Lt-1+e Advanced Optimization(Fall 2023) Lecture 11.Adversarial Bandits 14

Advanced Optimization (Fall 2023) Lecture 11. Adversarial Bandits 14 A Natural Solution for MAB • MAB bares much similarity with the PEA problem (except for the amount feedback information). Hedge for PEA Deploying Hedge to MAB problem

A Natural Solution for MAB However,Hedge does not fit for MAB setting due to limited feedback Hedge requires t for all i [K],but only t is available in MAB. Hedge for PEA At each round t=l,2,… (I)compute p:∈△such that pt,.:x exp(-Lt-1,i)fori∈[K] (2)the player submits p,suffers loss(p,e),and observes losseERK (3)update Lt=Lt-1+et Advanced Optimization(Fall 2023) Lecture 11.Adversarial Bandits 15

Advanced Optimization (Fall 2023) Lecture 11. Adversarial Bandits 15 A Natural Solution for MAB • However, Hedge does not fit for MAB setting due to limited feedback. Hedge for PEA