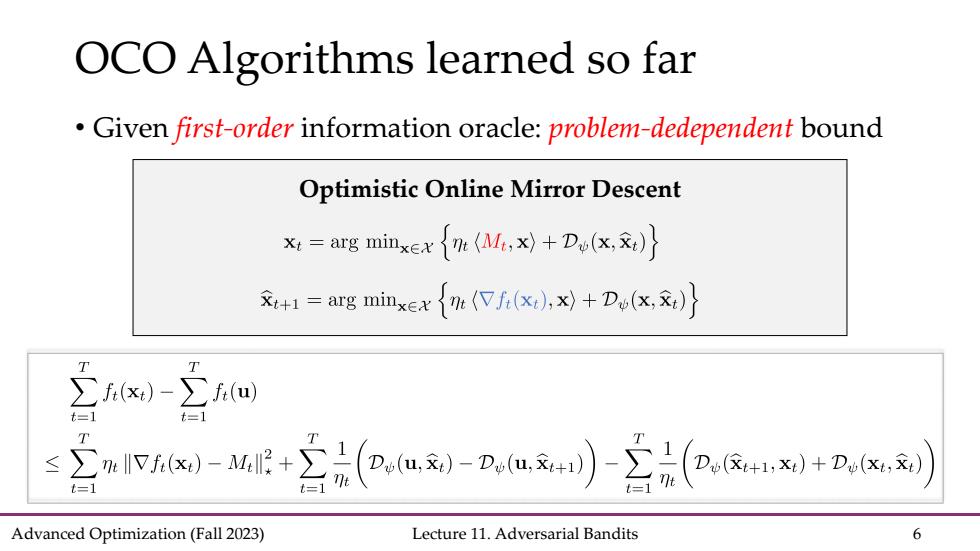

OCO Algorithms learned so far Given first-order information oracle:problem-dedependent bound Optimistic Online Mirror Descent x=arg minxex m(M+Du(x) =arg minxex in (f(x),x)+Du(x) T T -w aN-E+(e,a刻-nu)-(aR心+n,K刘 T Advanced Optimization(Fall 2023) Lecture 11.Adversarial Bandits 6

Advanced Optimization (Fall 2023) Lecture 11. Adversarial Bandits 6 Optimistic Online Mirror Descent OCO Algorithms learned so far • Given first-order information oracle: problem-dedependent bound

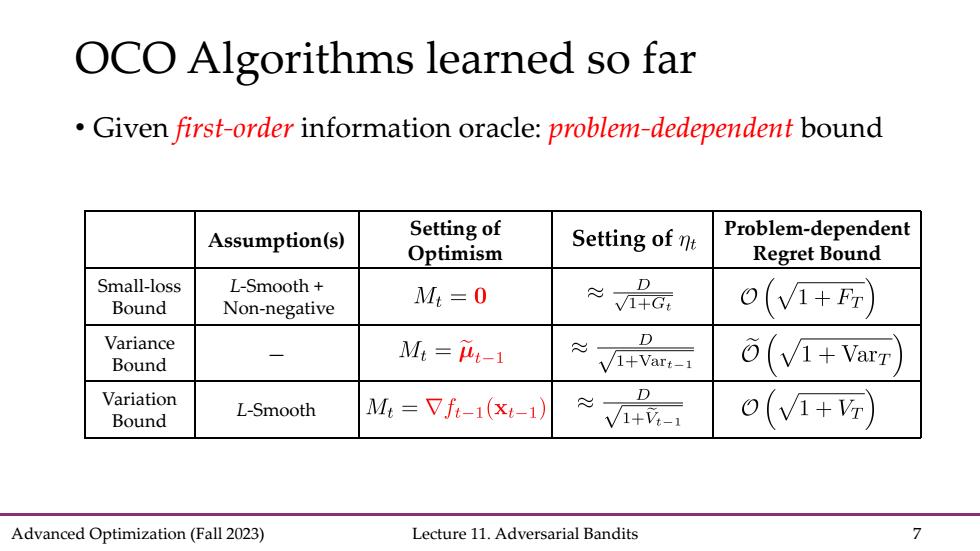

OCO Algorithms learned so far Given first-order information oracle:problem-dedependent bound Assumption(s) Setting of Setting of nt Problem-dependent Optimism Regret Bound Small-loss L-Smooth+ Bound Non-negative M:=0 ≈鼎a (v1+F) Variance D 一 Bound M=t-1 V1+Var:-1 (1+Var Variation D L-Smooth Bound M:=Vf-1(xt-1) V1+V- O(1+) Advanced Optimization(Fall 2023) Lecture 11.Adversarial Bandits 7

Advanced Optimization (Fall 2023) Lecture 11. Adversarial Bandits 7 OCO Algorithms learned so far • Given first-order information oracle: problem-dedependent bound Assumption(s) Setting of Optimism Problem-dependent Regret Bound Small-loss Bound L-Smooth + Non-negative Variance Bound — Variation Bound L-Smooth

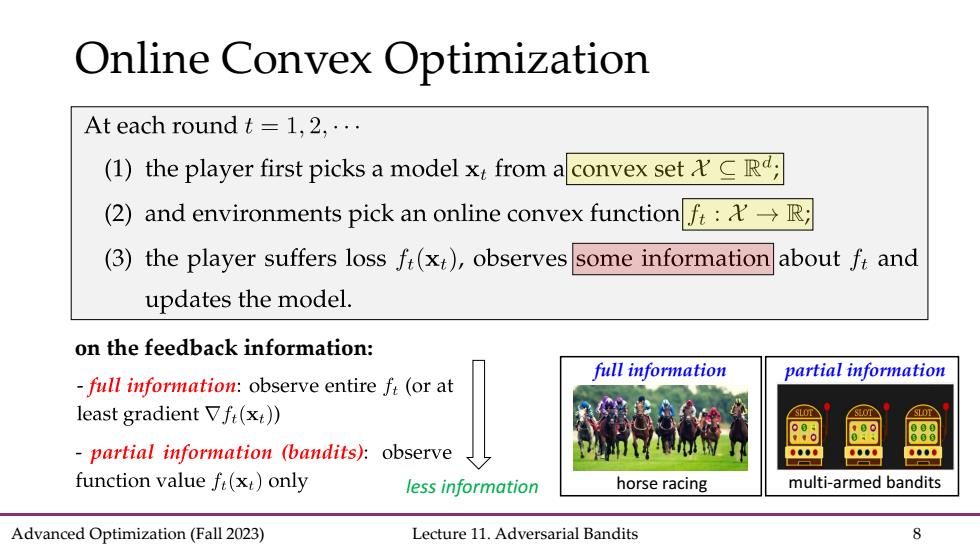

Online Convex Optimization At each round t=1,2,... (1)the player first picks a model x from aconvex set (2)and environments pick an online convex functionf:-R; (3)the player suffers loss fi(xt),observes some information about fr and updates the model. on the feedback information: full information partial information -full information:observe entire f(or at least gradient Vf(x)) 8B88 partial information (bandits):observe function value fi(xt)only less information horse racing multi-armed bandits Advanced Optimization(Fall 2023) Lecture 11.Adversarial Bandits 8

Advanced Optimization (Fall 2023) Lecture 11. Adversarial Bandits 8 Online Convex Optimization less information full information horse racing partial information multi-armed bandits on the feedback information:

Multi-Armed Bandit Trial 1 Trial 2 Trial3 Loss:0.3 Loss:0.2 Loss:0.5 Arms 日日 chosen arm unobserved Advanced Optimization(Fall 2023) Lecture 11.Adversarial Bandits 9

Advanced Optimization (Fall 2023) Lecture 11. Adversarial Bandits 9 Multi-Armed Bandit Trial 1 Trial 2 Trial 3 Loss: 0.3 * Loss: 0.2 * Loss: 0.5 * * * * * * * Arms : chosen arm : unobserved

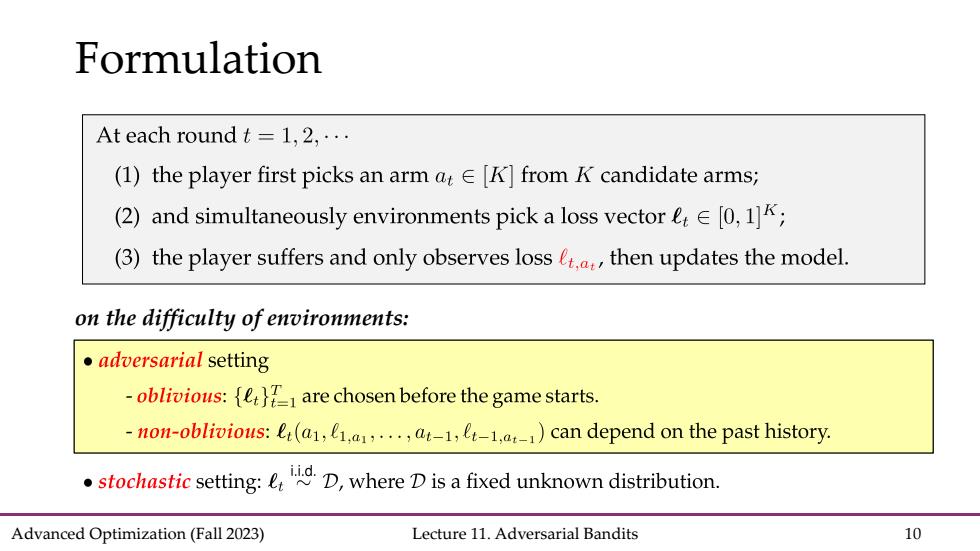

Formulation At each round t=1,2,... (1)the player first picks an arm atE[K]from K candidate arms; (2)and simultaneously environments pick a loss vector eE[0,1]K; (3)the player suffers and only observes loss et.a,,then updates the model. on the difficulty of environments: ●adversarial setting -oblivious:{e are chosen before the game starts. -non-oblivious:(a,.1,1.)can depend on the past history. .stochastic settingD,where Dis a fixed unknown distribution. Advanced Optimization(Fall 2023) Lecture 11.Adversarial Bandits 10

Advanced Optimization (Fall 2023) Lecture 11. Adversarial Bandits 10 Formulation on the difficulty of environments: