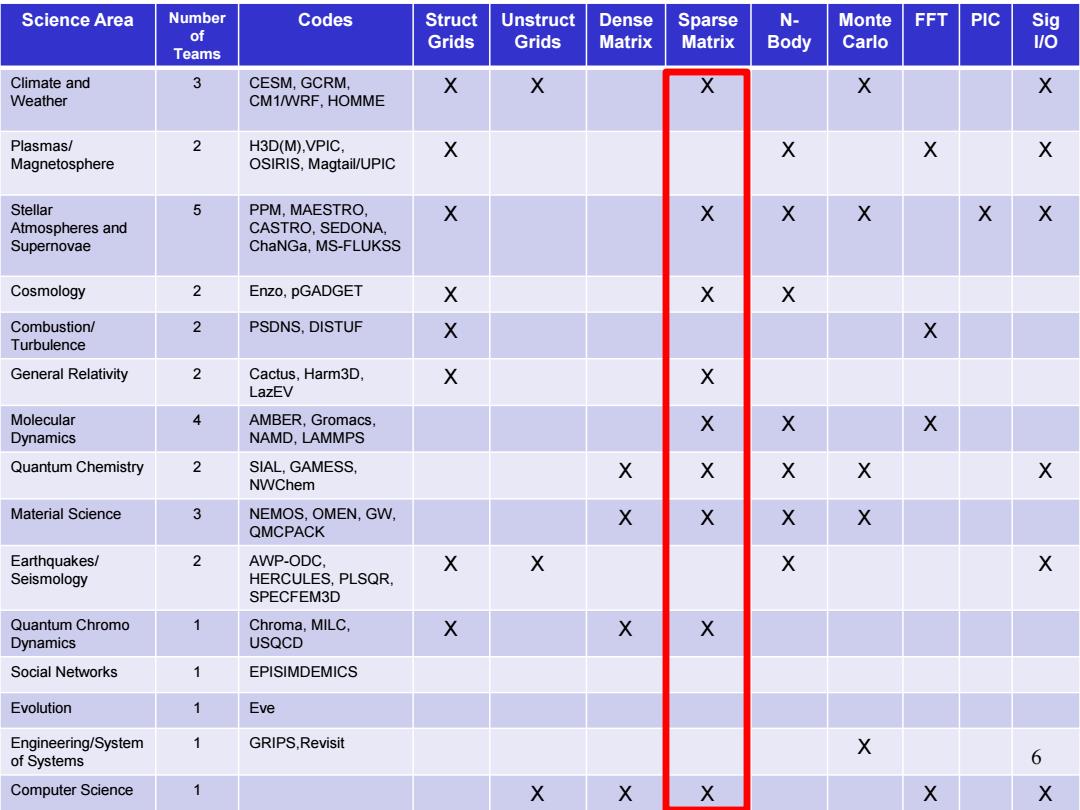

Science Area Number Codes Struct Unstruct Dense Sparse N- Monte FFT PIC Sig of Grids Grids Matrix Matrix Body Carlo 1/o Teams Climate and 3 CESM,GCRM, X X X Weather CM1/WRF.HOMME Plasmas/ 2 H3D(M),VPIC X X X X Magnetosphere OSIRIS,Magtail/UPIC Stellar 5 PPM,MAESTRO X X X X X X Atmospheres and CASTRO,SEDONA, Supernovae ChaNGa,MS-FLUKSS Cosmology 2 Enzo,pGADGET X Combustion/ 2 PSDNS,DISTUF X Turbulence General Relativity 2 Cactus,Harm3D. LazEV Molecular AMBER,Gromacs, X Dynamics NAMD,LAMMPS Quantum Chemistry 2 SIAL,GAMESS, NWChem Material Science 3 NEMOS,OMEN,GW, X QMCPACK Earthquakes/ 2 AWP-ODC X Seismology HERCULES,PLSQR, SPECFEM3D Quantum Chromo 1 Chroma,MILC, X X Dynamics USQCD Social Networks EPISIMDEMICS Evolution Eve Engineering/System GRIPS,Revisit X of Systems 6 Computer Science X X X X ×

Science Area Number of Teams Codes Struct Grids Unstruct Grids Dense Matrix Sparse Matrix N- Body Monte Carlo FFT PIC Sig I/O Climate and Weather 3 CESM, GCRM, CM1/WRF, HOMME X X X X X Plasmas/ Magnetosphere 2 H3D(M),VPIC, OSIRIS, Magtail/UPIC X X X X Stellar Atmospheres and Supernovae 5 PPM, MAESTRO, CASTRO, SEDONA, ChaNGa, MS-FLUKSS X X X X X X Cosmology 2 Enzo, pGADGET X X X Combustion/ Turbulence 2 PSDNS, DISTUF X X General Relativity 2 Cactus, Harm3D, LazEV X X Molecular Dynamics 4 AMBER, Gromacs, NAMD, LAMMPS X X X Quantum Chemistry 2 SIAL, GAMESS, NWChem X X X X X Material Science 3 NEMOS, OMEN, GW, QMCPACK X X X X Earthquakes/ Seismology 2 AWP-ODC, HERCULES, PLSQR, SPECFEM3D X X X X Quantum Chromo Dynamics 1 Chroma, MILC, USQCD X X X Social Networks 1 EPISIMDEMICS Evolution 1 Eve Engineering/System of Systems 1 GRIPS,Revisit X Computer Science 1 X X X X X 6

Sparse Matrix-Vector Multiplication (SpMV) X 十 A X Y Y 7

× A X + Y Y Sparse Matrix-Vector Multiplication (SpMV) 7

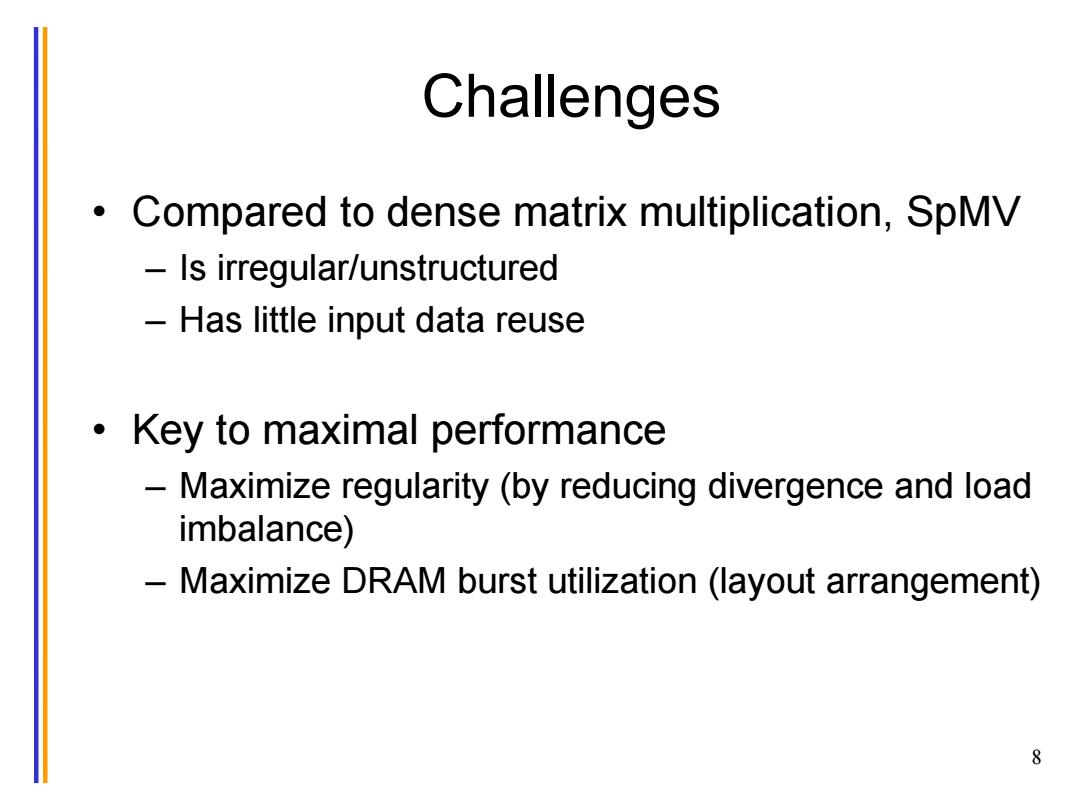

Challenges Compared to dense matrix multiplication,SpMV Is irregular/unstructured Has little input data reuse Key to maximal performance Maximize regularity(by reducing divergence and load imbalance) Maximize DRAM burst utilization (layout arrangement) 8

Challenges • Compared to dense matrix multiplication, SpMV – Is irregular/unstructured – Has little input data reuse • Key to maximal performance – Maximize regularity (by reducing divergence and load imbalance) – Maximize DRAM burst utilization (layout arrangement) 8

A Simple Parallel SpMV Row 0 3 0 1 0 Thread 0 Row 1 0 0 0 0 Thread 1 Row 2 0 2 4 1 Thread 2 Row 3 0 0 1 Thread 3 ·Each thread processes one row 9

Row 0 3 0 1 0 Thread 0 Row 1 0 0 0 0 Thread 1 Row 2 0 2 4 1 Thread 2 Row 3 1 0 0 1 Thread 3 A Simple Parallel SpMV • Each thread processes one row 9

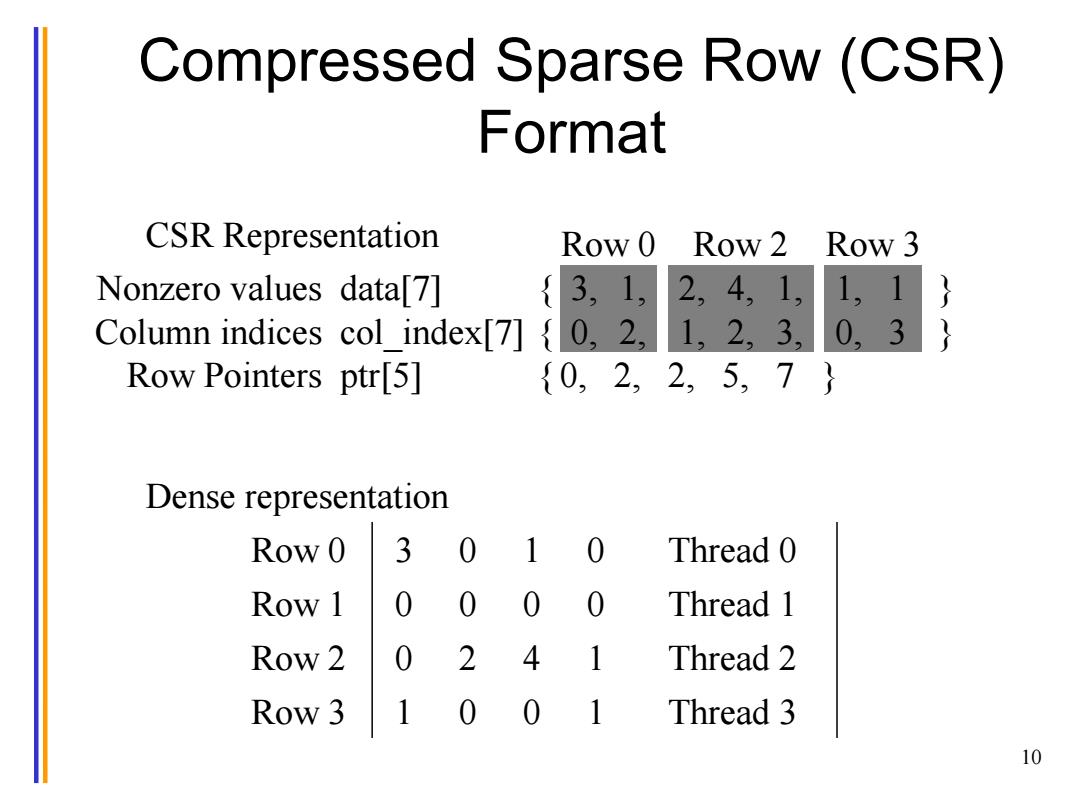

Compressed Sparse Row(CSR) Format CSR Representation Row 0 Row 2 Row 3 Nonzero values data[7] {3,1, 2,4,1, 1,1} Column indices col _index[7]{0,2,1,2,3, 0,3 Row Pointers ptr[5] {0,2,2,5,7} Dense representation Row 0 3 0 Thread 0 Row 1 0 0 0 0 Thread 1 Row 2 0 2 4 1 Thread 2 Row 3 Thread 3 10

Row 0 Row 2 Row 3 Nonzero values data[7] { 3, 1, 2, 4, 1, 1, 1 } Column indices col_index[7] { 0, 2, 1, 2, 3, 0, 3 } Row Pointers ptr[5] { 0, 2, 2, 5, 7 } Compressed Sparse Row (CSR) Format 10 Row 0 3 0 1 0 Thread 0 Row 1 0 0 0 0 Thread 1 Row 2 0 2 4 1 Thread 2 Row 3 1 0 0 1 Thread 3 Dense representation CSR Representation