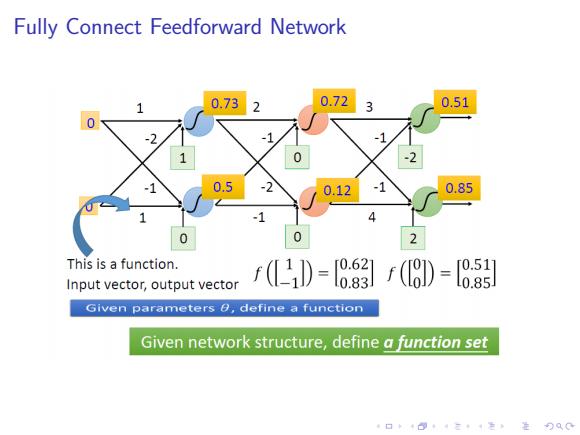

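Fully Connected Feedforward Network

A network is a function: it takes an input vector and returns an output vector. The figure traces example values through the network (0.73 and 0.5, then 0.72 and 0.12, then 0.51 and 0.85) and reports output vectors such as [0.62, 0.83] and [0.51, 0.85].

Given parameters θ, the network defines a function. Given only a network structure, it defines a function set.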
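
The idea that fixed parameters turn a network structure into one concrete function can be sketched in a few lines of Python. The weights, biases, sigmoid activation, and the two input vectors below are assumptions, not values stated in the text; they were chosen so that the outputs round to the vectors the figure reports.

```python
import math

def sigmoid(z):
    """Logistic activation, applied to one pre-activation value."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(weights, biases, x):
    """One fully connected layer: every neuron sees every input."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

def feedforward(theta, x):
    """Apply the layers in order; theta is a list of (weights, biases)."""
    for weights, biases in theta:
        x = dense(weights, biases, x)
    return x

# Assumed parameters for a network with two neurons per layer
# (hypothetical values, not given in the text).
theta = [
    ([[1.0, -2.0], [-1.0, 1.0]], [1.0, 0.0]),
    ([[2.0, -1.0], [-2.0, -1.0]], [0.0, 0.0]),
    ([[3.0, -1.0], [-1.0, 4.0]], [-2.0, 2.0]),
]

# Assumed example inputs.
out_a = [round(v, 2) for v in feedforward(theta, [1.0, -1.0])]
out_b = [round(v, 2) for v in feedforward(theta, [0.0, 0.0])]
print(out_a)  # [0.62, 0.83]
print(out_b)  # [0.51, 0.85]
```

Keeping the structure and swapping in a different `theta` gives a different function from the same function set.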

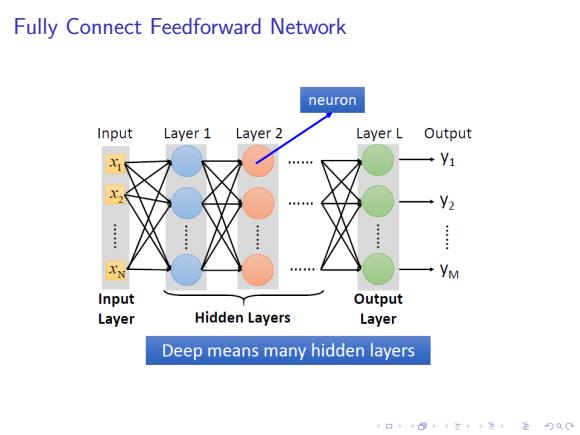

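Fully Connected Feedforward Network

Neurons are arranged in layers. The input layer feeds Layer 1, which feeds Layer 2, and so on up to Layer L; the output layer then produces y1, y2, ..., yM. The layers between the input layer and the output layer are the hidden layers.

"Deep" means many hidden layers.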
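
The layered structure can also be written compactly in matrix form. The symbols here are assumptions introduced for illustration and do not appear in the text: $a^{0} = x$ is the input vector, $W^{l}$ and $b^{l}$ are the weights and biases of layer $l$, and $\sigma$ is an elementwise activation function (the output layer may use a different one in practice).

$$
a^{0} = x, \qquad
a^{l} = \sigma\left(W^{l} a^{l-1} + b^{l}\right) \quad (l = 1, \dots, L), \qquad
y = \sigma\left(W^{L+1} a^{L} + b^{L+1}\right)
$$

Under this notation, the depth of the network is the number $L$ of hidden layers.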

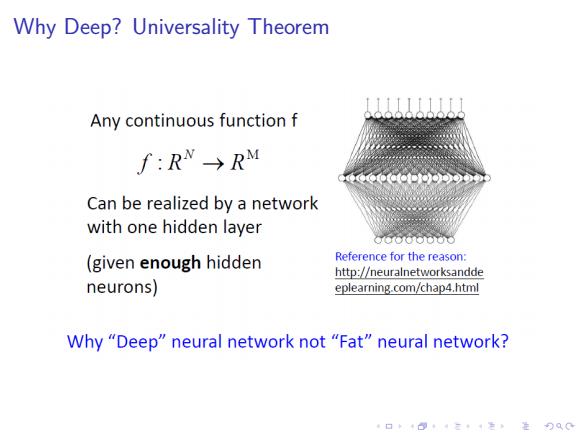

Why Deep? Universality Theorem

Any continuous function $f: \mathbb{R}^N \to \mathbb{R}^M$ can be realized by a network with one hidden layer (given enough hidden neurons).

Reference for the reason: http://neuralnetworksanddeeplearning.com/chap4.html

Why a "deep" neural network and not a "fat" neural network?
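
One common way to build intuition for this statement is a step-function construction: each hidden neuron is a steep sigmoid that switches on at one point, and the output adds up the resulting steps. The sketch below does this for the one-dimensional case (N = M = 1) on the interval [0, 1]. The target function, the steepness value, and all function names are assumptions for illustration; the code shows an approximation improving as hidden neurons are added, and is not a proof.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def build_one_hidden_layer(f, n_bins, steepness=50.0):
    """Return g(x) = bias + sum_k v_k * sigmoid(w * (x - s_k)).

    [0, 1] is split into n_bins bins; each of the n_bins - 1 hidden
    neurons is a steep sigmoid placed on one bin boundary s_k.
    """
    centers = [(k + 0.5) / n_bins for k in range(n_bins)]
    w = steepness * n_bins  # steeper sigmoids for narrower bins
    bias = f(centers[0])
    # Output weight v_k is the jump between neighbouring bin values.
    hidden = [(k / n_bins, f(centers[k]) - f(centers[k - 1]))
              for k in range(1, n_bins)]

    def g(x):
        return bias + sum(v * sigmoid(w * (x - s)) for s, v in hidden)

    return g

def max_error(f, g, samples=1001):
    """Largest absolute gap between f and g on an even grid over [0, 1]."""
    grid = [i / (samples - 1) for i in range(samples)]
    return max(abs(f(x) - g(x)) for x in grid)

def target(x):
    """Assumed example of a continuous function."""
    return math.sin(2 * math.pi * x)

for n_bins in (5, 50, 200):
    g = build_one_hidden_layer(target, n_bins)
    print(n_bins - 1, "hidden neurons, max error",
          round(max_error(target, g), 3))
```

The error shrinks only because the hidden layer keeps getting wider, which is the "fat" network that the closing question contrasts with a deep one.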

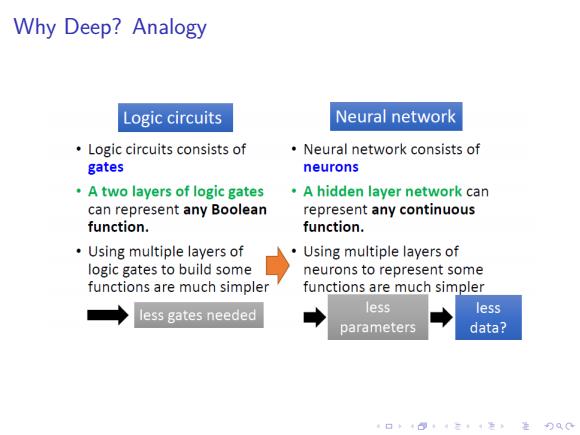

Why Deep? Analogy

| Logic circuits | Neural network |
| --- | --- |
| Logic circuits consist of gates. | A neural network consists of neurons. |
| Two layers of logic gates can represent any Boolean function. | A network with one hidden layer can represent any continuous function. |
| Using multiple layers of logic gates makes some functions much simpler to build (fewer gates needed). | Using multiple layers of neurons makes some functions much simpler to represent (fewer parameters, and so possibly less data?). |
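
The logic-circuit side of the analogy can be made concrete with the parity function, a standard example chosen here as an assumption (the text does not name a function). A two-layer circuit in sum-of-products form needs one AND term per input pattern with an odd number of ones, while a deeper circuit can chain two-input XOR gates. The sketch checks that both circuits agree and compares their gate counts; NOT gates on the inputs are ignored in the counts.

```python
from functools import reduce
from itertools import product

def parity_deep(bits):
    """Multi-layer circuit: a chain of n - 1 two-input XOR gates."""
    return reduce(lambda a, b: a ^ b, bits)

def parity_two_layer(bits):
    """Two-layer circuit: an OR over AND terms (sum of products).

    There is one AND term for each input pattern with odd weight.
    """
    n = len(bits)
    terms = [p for p in product((0, 1), repeat=n) if sum(p) % 2 == 1]
    return int(any(all(b == t for b, t in zip(bits, term))
                   for term in terms))

def gate_counts(n):
    """Return (deep, two_layer) gate counts for n-bit parity."""
    deep = n - 1                 # XOR gates in the chain
    two_layer = 2 ** (n - 1) + 1  # AND terms plus the final OR
    return deep, two_layer

# Both circuits compute the same function on every 4-bit input.
assert all(parity_deep(p) == parity_two_layer(p)
           for p in product((0, 1), repeat=4))

for n in (4, 8, 16):
    print(n, "inputs:", gate_counts(n))
```

The deep count grows linearly with the number of inputs, while the two-layer count in this form grows exponentially.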

Deep = Many hidden layers

| Network | Year | Layers | Error rate |
| --- | --- | --- | --- |
| AlexNet | 2012 | 8 | 16.4% |
| VGG | 2014 | 19 | 7.3% |
| GoogleNet | 2014 | 22 | 6.7% |

Source: http://cs231n.stanford.edu/slides/winter1516_lecture8.pdf
