6.3 Synaptic convergence to centroids: AVQ Algorithms

2003.11.19

Competitive learning adaptively quantizes the input pattern space $R^n$. The probability density function $p(x)$ characterizes the continuous distribution of patterns in $R^n$.

We shall prove that the competitive AVQ synaptic vectors $m_j$ converge exponentially quickly to pattern-class centroids and, more generally, that at equilibrium they vibrate about the centroids in a Brownian motion.

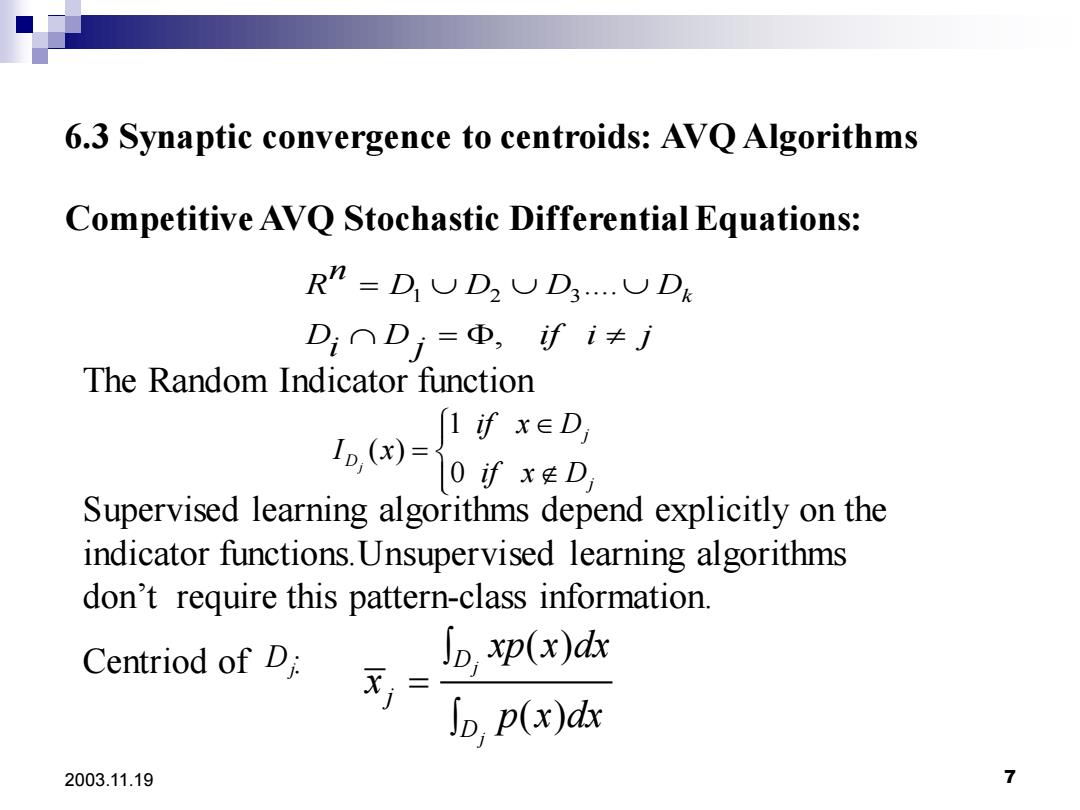

Competitive AVQ Stochastic Differential Equations:

The pattern classes partition the pattern space:

$$R^n = D_1 \cup D_2 \cup D_3 \cup \cdots \cup D_k, \qquad D_i \cap D_j = \varnothing \quad \text{if } i \neq j$$

The random indicator function:

$$I_{D_j}(x) = \begin{cases} 1 & \text{if } x \in D_j \\ 0 & \text{if } x \notin D_j \end{cases}$$

Supervised learning algorithms depend explicitly on the indicator functions. Unsupervised learning algorithms don't require this pattern-class information.

Centroid of $D_j$:

$$\bar{x}_j = \frac{\int_{D_j} x\,p(x)\,dx}{\int_{D_j} p(x)\,dx}$$
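The centroid formula can be checked numerically. The following is a minimal sketch, not part of the original slides, assuming a one-dimensional pattern space with $p(x)$ uniform on $[0, 1)$ and two decision classes $D_1 = [0, 0.5)$ and $D_2 = [0.5, 1)$. The function names `indicator` and `centroid_estimate` are hypothetical. Sample sums stand in for the two integrals.

```python
import random

def indicator(x, lo, hi):
    """I_D(x) for the interval D = [lo, hi): 1 if x is in D, else 0."""
    return 1 if lo <= x < hi else 0

def centroid_estimate(samples, lo, hi):
    """Estimate the centroid of D = [lo, hi) from samples drawn from p(x)."""
    # Numerator approximates the integral of x p(x) over D (up to a common factor).
    num = sum(indicator(x, lo, hi) * x for x in samples)
    # Denominator approximates the integral of p(x) over D (same common factor).
    den = sum(indicator(x, lo, hi) for x in samples)
    return num / den

rng = random.Random(0)
samples = [rng.random() for _ in range(100_000)]  # assumed p(x): uniform on [0, 1)

c1 = centroid_estimate(samples, 0.0, 0.5)  # exact centroid of D_1 is 0.25
c2 = centroid_estimate(samples, 0.5, 1.0)  # exact centroid of D_2 is 0.75
print(c1, c2)
```

The estimates should land close to 0.25 and 0.75, the class means. The centroid is just the conditional mean of $x$ given $x \in D_j$.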

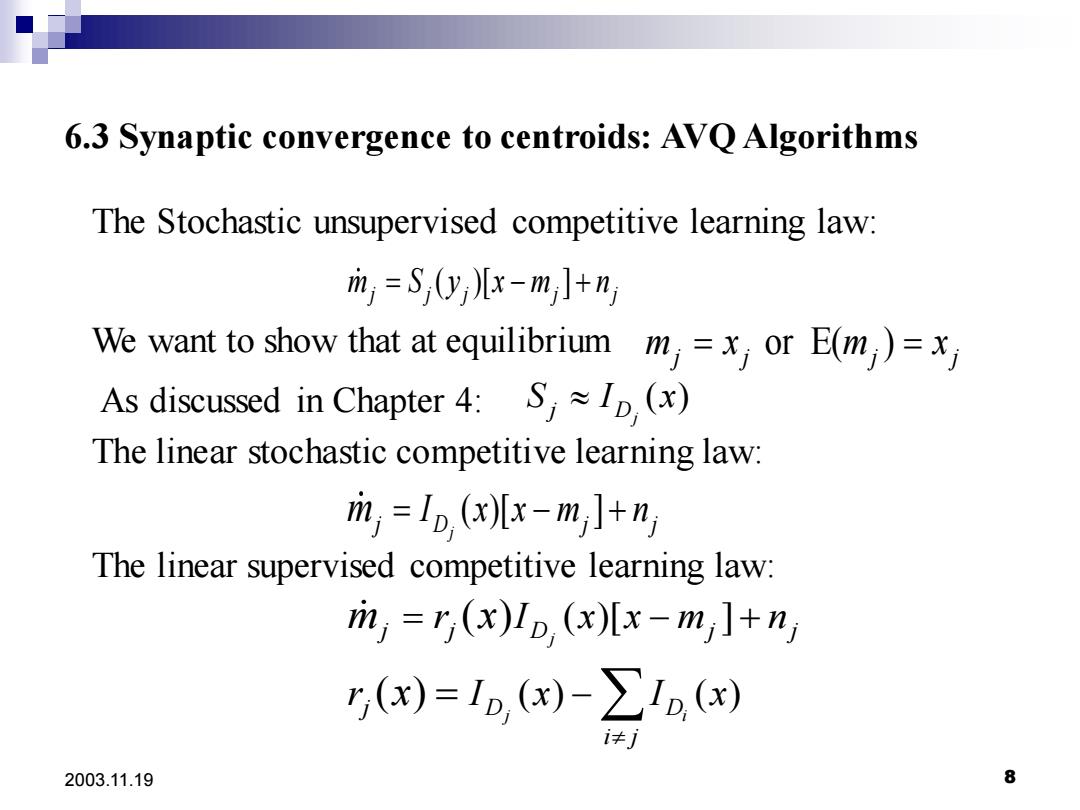

The stochastic unsupervised competitive learning law:

$$\dot{m}_j = S_j(y_j)\,[x - m_j] + n_j$$

We want to show that at equilibrium $m_j = \bar{x}_j$ or $E(m_j) = \bar{x}_j$.

As discussed in Chapter 4: $S_j \approx I_{D_j}(x)$

The linear stochastic competitive learning law:

$$\dot{m}_j = I_{D_j}(x)\,[x - m_j] + n_j$$

The linear supervised competitive learning law:

$$\dot{m}_j = r_j(x)\,I_{D_j}(x)\,[x - m_j] + n_j$$

$$r_j(x) = I_{D_j}(x) - \sum_{i \neq j} I_{D_i}(x)$$
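A heuristic sketch of why the centroid is the natural equilibrium point, not a substitute for the full proof: assume the noise $n_j$ has zero mean, replace $S_j$ by the indicator function exactly, and treat $m_j$ as fixed inside the expectation. Setting the average velocity of the linear stochastic competitive learning law to zero gives

$$
\begin{aligned}
0 = E[\dot{m}_j] &= \int_{R^n} I_{D_j}(x)\,[x - m_j]\,p(x)\,dx + E[n_j] \\
&= \int_{D_j} x\,p(x)\,dx \;-\; m_j \int_{D_j} p(x)\,dx ,
\end{aligned}
$$

so that

$$
m_j = \frac{\int_{D_j} x\,p(x)\,dx}{\int_{D_j} p(x)\,dx} = \bar{x}_j .
$$

Under these assumptions the synaptic vector stops moving on average exactly when it sits at the centroid of its decision class.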

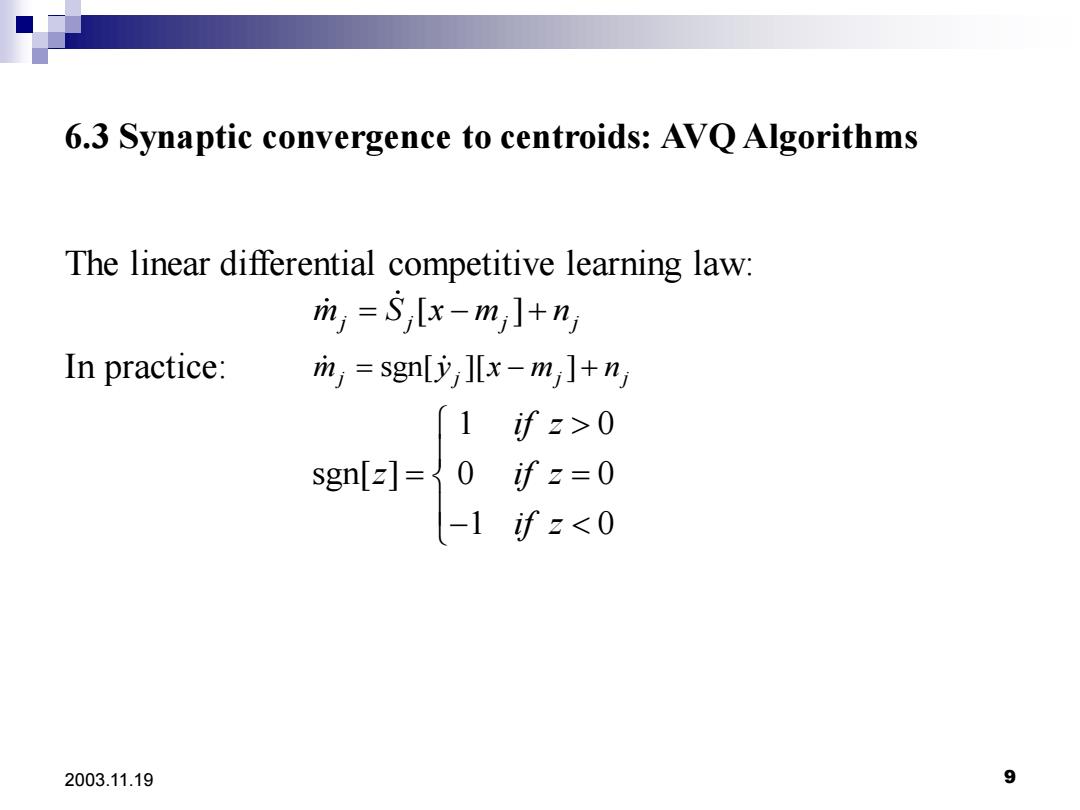

The linear differential competitive learning law:

$$\dot{m}_j = \dot{S}_j\,[x - m_j] + n_j$$

In practice:

$$\dot{m}_j = \operatorname{sgn}[\dot{y}_j]\,[x - m_j] + n_j$$

$$\operatorname{sgn}[z] = \begin{cases} 1 & \text{if } z > 0 \\ 0 & \text{if } z = 0 \\ -1 & \text{if } z < 0 \end{cases}$$
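The practical DCL rule can be sketched as a single discrete update. This is an illustration under stated assumptions, not the slides' own code: the time derivative of the activation $y_j$ is approximated by the difference of two successive activations, the noise term is omitted, and the learning rate `c` and the function name `dcl_step` are hypothetical.

```python
def sgn(z):
    """Three-valued sign function: 1 if z > 0, 0 if z == 0, -1 if z < 0."""
    return 1 if z > 0 else (-1 if z < 0 else 0)

def dcl_step(m, x, y_new, y_old, c):
    """One discrete DCL step: m + c * sgn(y_new - y_old) * (x - m).

    y_new - y_old is an assumed finite-difference stand-in for the
    activation velocity; the noise term n_j is left out.
    """
    s = sgn(y_new - y_old)
    return [mi + c * s * (xi - mi) for mi, xi in zip(m, x)]

m = [0.0, 0.0]
x = [1.0, 2.0]
rising = dcl_step(m, x, y_new=0.9, y_old=0.2, c=0.5)   # activation rising: move toward x
falling = dcl_step(m, x, y_new=0.2, y_old=0.9, c=0.5)  # activation falling: move away from x
steady = dcl_step(m, x, y_new=0.5, y_old=0.5, c=0.5)   # no change in activation: no update
print(rising, falling, steady)
```

The sign of the activation change decides whether the synaptic vector is attracted to the sample, repelled from it, or left alone.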

Competitive AVQ Algorithms

1. Initialize synaptic vectors: $m_i(0) = x(i)$, $i = 1, \ldots, m$.

2. For random sample $x(t)$, find the closest ("winning") synaptic vector $m_j(t)$:

$$\| m_j(t) - x(t) \| = \min_i \| m_i(t) - x(t) \|$$

where $\| x \|^2 = x_1^2 + \cdots + x_n^2$ gives the squared Euclidean norm of $x$.

3. Update the winning synaptic vector $m_j(t)$ by the UCL, SCL, or DCL learning algorithm.
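The three steps above can be sketched with a discrete UCL update in step 3. This is a minimal illustration, not the slides' own implementation. Assumptions: the update is $m_j \leftarrow m_j + c_t\,[x - m_j]$ for the winner only, the noise term is omitted, the learning rate $c_t$ decreases linearly from `c0` to zero, and the test data are two well-separated Gaussian clusters. The names `avq_ucl`, `sq_dist`, and `c0` are hypothetical.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance ||a - b||^2."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def avq_ucl(samples, k, c0=0.1):
    """Competitive AVQ with a discrete UCL update (noise term omitted)."""
    # Step 1: initialize the k synaptic vectors with the first k samples.
    m = [list(x) for x in samples[:k]]
    rest = samples[k:]
    for t, x in enumerate(rest):
        # Assumed schedule: learning rate decreases linearly toward zero.
        c_t = c0 * (1.0 - t / len(rest))
        # Step 2: the winner is the synaptic vector closest to the sample.
        j = min(range(k), key=lambda i: sq_dist(m[i], x))
        # Step 3: move only the winner toward the sample.
        m[j] = [mj + c_t * (xi - mj) for mj, xi in zip(m[j], x)]
    return m

rng = random.Random(1)
centers = [(0.0, 0.0), (5.0, 5.0)]  # assumed class centroids for the demo
# Alternate between the two clusters so the first two samples differ in class.
samples = [[rng.gauss(mu, 0.3) for mu in centers[t % 2]] for t in range(4000)]

m = avq_ucl(samples, k=2)
print(m)
```

With these settings each synaptic vector should settle near one cluster centre, which is the centroid of its decision class. Swapping step 3 for an SCL or DCL update changes only the line that moves the winner.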