Statistical Inference Problems
◼ Binary hypothesis testing:
  ❑ Start with two hypotheses.
  ❑ Use the available data to decide which of the two is true.
◼ 𝑚-ary hypothesis testing: there is a finite number 𝑚 of competing hypotheses.
  ❑ Evaluation: typically by error probability.
◼ Both Bayesian and classical approaches are possible.
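As a rough illustration of evaluating a decision rule by its error probability, the sketch below takes a Bayesian view of a binary test. Everything specific in it is an assumption made for illustration and is not from the slides: the two hypotheses are coin biases `p0` and `p1`, the data is the number of heads in `n` tosses, the rule is "decide H1 when the head count reaches `threshold`", and `prior0` is the prior probability of H0.

```python
from math import comb


def binom_pmf(k, n, p):
    """Probability of exactly k heads in n independent tosses with bias p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def error_probability(n, p0, p1, prior0, threshold):
    """Overall error probability of the rule: decide H1 iff heads >= threshold.

    Hypothetical setup: H0 says the bias is p0, H1 says the bias is p1.
    """
    # Error under H0: the rule decides H1, i.e. heads >= threshold.
    false_alarm = sum(binom_pmf(k, n, p0) for k in range(threshold, n + 1))
    # Error under H1: the rule decides H0, i.e. heads < threshold.
    miss = sum(binom_pmf(k, n, p1) for k in range(threshold))
    # Weight the two conditional error probabilities by the prior.
    return prior0 * false_alarm + (1 - prior0) * miss


# Illustrative numbers only.
err = error_probability(n=10, p0=0.5, p1=0.8, prior0=0.5, threshold=7)
print(f"error probability: {err:.4f}")
```

Sweeping `threshold` from 0 to `n + 1` and keeping the value with the smallest error is one simple way to compare candidate rules under these assumptions.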

Content
◼ Bayesian inference, the posterior distribution
◼ Point estimation, hypothesis testing, MAP
◼ Bayesian least mean squares estimation
◼ Bayesian linear least mean squares estimation

Bayesian inference
◼ In Bayesian inference, the unknown quantity of interest is modeled as a random variable or as a finite collection of random variables.
  ❑ We usually denote it by Θ.
◼ We aim to extract information about Θ, based on observing a collection 𝑋 = (𝑋1, …, 𝑋𝑛) of related random variables.
  ❑ These are called observations, measurements, or an observation vector.

Bayesian inference
◼ We assume that we know the joint distribution of Θ and 𝑋.
◼ Equivalently, we assume that we know
  ❑ A prior distribution 𝑝Θ or 𝑓Θ, depending on whether Θ is discrete or continuous.
  ❑ A conditional distribution 𝑝𝑋|Θ or 𝑓𝑋|Θ, depending on whether 𝑋 is discrete or continuous.
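The equivalence stated above follows from the multiplication rule. Written out for the case where both Θ and 𝑋 are discrete (the other cases are analogous, with a PDF 𝑓 replacing the PMF 𝑝 for whichever variable is continuous), the joint distribution factors as:

```latex
p_{\Theta,X}(\theta, x) \;=\; p_{\Theta}(\theta)\, p_{X\mid\Theta}(x \mid \theta)
```

So the prior and the conditional together determine the joint distribution. In the other direction, the joint determines the prior by marginalizing over 𝑥, and the conditional by dividing by the prior wherever the prior is positive.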

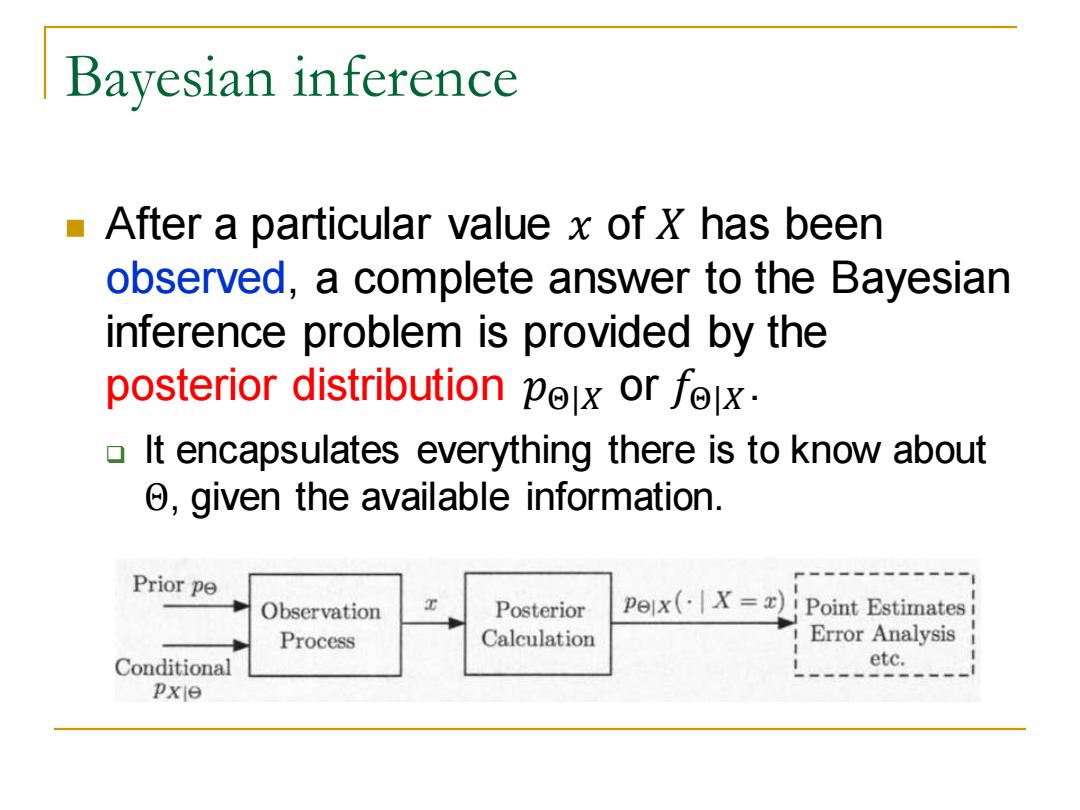

Figure: the prior 𝑝Θ and the conditional 𝑝𝑋|Θ (observation process) feed the posterior calculation, which outputs 𝑝Θ|𝑋(· | 𝑋 = 𝑥); point estimates, error analysis, etc. are then derived from the posterior.

Bayesian inference
◼ After a particular value 𝑥 of 𝑋 has been observed, a complete answer to the Bayesian inference problem is provided by the posterior distribution 𝑝Θ|𝑋 or 𝑓Θ|𝑋.
  ❑ It encapsulates everything there is to know about Θ, given the available information.
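For discrete Θ and 𝑋, the posterior can be computed from the prior and the conditional with Bayes' rule: multiply the prior by the likelihood of the observed 𝑥, then normalize. The following is a minimal sketch under that assumption. The function name `posterior`, the dictionary layout, and the two-coin example are all hypothetical choices made for illustration, not taken from the slides.

```python
from fractions import Fraction


def posterior(prior, likelihood, x):
    """Return the posterior PMF of Theta given X = x, as a dict.

    prior:      dict mapping theta -> p_Theta(theta)
    likelihood: dict mapping theta -> dict mapping x -> p_{X|Theta}(x | theta)
    """
    # Unnormalized posterior: prior times likelihood of the observed x.
    joint = {theta: prior[theta] * likelihood[theta][x] for theta in prior}
    # Normalizing constant: the marginal probability p_X(x).
    evidence = sum(joint.values())
    if evidence == 0:
        raise ValueError("the observation has zero probability under the model")
    return {theta: weight / evidence for theta, weight in joint.items()}


# Hypothetical example: Theta says which of two coins is being tossed,
# and X is the outcome of a single toss.
prior = {"fair": Fraction(1, 2), "biased": Fraction(1, 2)}
likelihood = {
    "fair": {"H": Fraction(1, 2), "T": Fraction(1, 2)},
    "biased": {"H": Fraction(3, 4), "T": Fraction(1, 4)},
}

post = posterior(prior, likelihood, "H")
for theta, prob in post.items():
    print(theta, prob)
```

Exact fractions are used here only to keep the arithmetic easy to check by hand; floats would work the same way.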