Estimation Theory: Least Squares (LS)
Wenhui Xiong, NCL, UESTC
whxiong@uestc.edu.cn



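Review: MVU Estimator

Common goal: minimize the estimator's variance.

- CRLB: if the derivative of the log-likelihood factorizes as
$$\frac{\partial \ln p(\mathbf{x};\theta)}{\partial \theta} = I(\theta)\left(g(\mathbf{x}) - \theta\right),$$
then $\hat{\theta} = g(\mathbf{x})$ is the MVU estimator.
- Linear model: the MVU estimator is easy to find.
- RBLS: use a (complete) sufficient statistic $T(\mathbf{x})$. Either compute $E[\check{\theta} \mid T(\mathbf{x})]$ for any unbiased estimator $\check{\theta}$, or find a function $g[T(\mathbf{x})]$ of the sufficient statistic that is unbiased.
- BLUE: restrict the estimator to be a linear function of the data.
- MLE: requires a known PDF.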
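As a worked instance of the CRLB factorization, take the DC-level model $x[n] = A + w[n]$, $n = 0, 1, \ldots, N-1$, and assume (this is not stated on the slide) that $w[n]$ is white Gaussian noise with known variance $\sigma^2$:

```latex
p(\mathbf{x};A) = \frac{1}{(2\pi\sigma^2)^{N/2}}
  \exp\!\left[-\frac{1}{2\sigma^2}\sum_{n=0}^{N-1}\left(x[n]-A\right)^2\right]

\frac{\partial \ln p(\mathbf{x};A)}{\partial A}
  = \frac{1}{\sigma^2}\sum_{n=0}^{N-1}\left(x[n]-A\right)
  = \frac{N}{\sigma^2}\left(\bar{x} - A\right),
\qquad \bar{x} = \frac{1}{N}\sum_{n=0}^{N-1} x[n]
```

Matching this to the factorized form gives $I(A) = N/\sigma^2$ and $g(\mathbf{x}) = \bar{x}$, so under these assumptions the sample mean is the MVU estimator, with variance $\sigma^2/N$.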


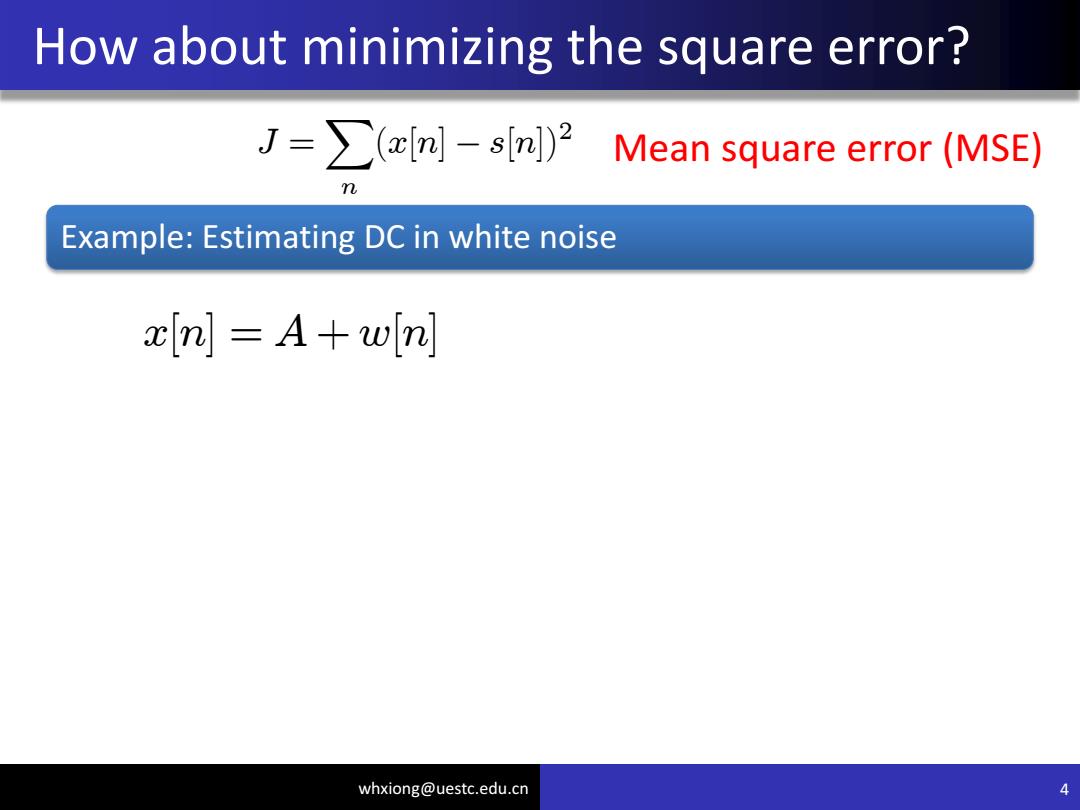

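How about minimizing the squared error?

$$J = \sum_{n} \left(x[n] - s[n]\right)^2$$

Here $x[n]$ is the observed data and $s[n]$ is the noiseless signal model, which depends on the unknown parameters. $J$ is a sum of squared errors between data and model; unlike the mean square error (MSE), no expectation is taken.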
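A minimal sketch of evaluating the criterion $J$ for given data and a candidate noiseless signal. The function name `ls_cost` and the use of NumPy are illustrative assumptions, not part of the slides.

```python
import numpy as np

def ls_cost(x, s):
    """Sum of squared errors J = sum_n (x[n] - s[n])^2.

    x: observed data samples; s: candidate noiseless signal samples of the same length.
    """
    x = np.asarray(x, dtype=float)
    s = np.asarray(s, dtype=float)
    if x.shape != s.shape:
        raise ValueError("x and s must have the same shape")
    return float(np.sum((x - s) ** 2))

# Usage: the residuals are 0, 1, 2, so J = 0 + 1 + 4 = 5.
print(ls_cost([1.0, 2.0, 3.0], [1.0, 1.0, 1.0]))  # 5.0
```

Any candidate signal can be scored this way; the LS estimate is the parameter value whose model $s[n]$ gives the smallest $J$.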


Example: estimating a DC level in white noise,

$$x[n] = A + w[n].$$
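To experiment with this example, one can synthesize data from $x[n] = A + w[n]$. The sketch below assumes the white noise is zero-mean Gaussian with standard deviation `sigma` (the slide only says "white"); the helper name `simulate_dc` is hypothetical.

```python
import numpy as np

def simulate_dc(A, sigma, N, seed=None):
    """Return N samples x[n] = A + w[n], with w[n] drawn i.i.d. from N(0, sigma^2).

    Gaussian noise is an assumption for illustration; any zero-mean white noise fits the model.
    """
    rng = np.random.default_rng(seed)
    w = rng.normal(0.0, sigma, size=N)  # white noise: independent, identically distributed samples
    return A + w

x = simulate_dc(A=1.0, sigma=0.5, N=10, seed=0)
print(x.shape)  # (10,)
```

Fixing `seed` makes the synthetic data reproducible, which is convenient when comparing estimators on the same realization.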


For this example the LS criterion becomes

$$J(A) = \sum_{n} \left(x[n] - A\right)^2,$$

which we minimize with respect to $A$.
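Setting the derivative to zero, $\frac{dJ}{dA} = -2\sum_{n}(x[n] - A) = 0$, gives $\hat{A} = \frac{1}{N}\sum_{n} x[n]$, the sample mean; since $\frac{d^2J}{dA^2} = 2N > 0$, this is a minimum. The sketch below checks the closed form numerically against a brute-force grid search over $A$. The function names and the grid are illustrative assumptions.

```python
import numpy as np

def ls_cost_dc(x, A):
    """J(A) = sum_n (x[n] - A)^2 for a scalar candidate DC level A."""
    x = np.asarray(x, dtype=float)
    return float(np.sum((x - A) ** 2))

def ls_estimate_dc(x):
    """Closed-form LS estimate: dJ/dA = -2 * sum_n (x[n] - A) = 0  =>  A_hat = sample mean."""
    return float(np.mean(x))

def grid_search_dc(x, grid):
    """Brute-force check: evaluate J(A) at each candidate in `grid` and return the minimizer."""
    costs = np.array([ls_cost_dc(x, a) for a in grid])
    return float(grid[np.argmin(costs)])

x = [1.0, 2.0, 4.0]
grid = np.linspace(0.0, 3.0, 3001)  # candidate A values with spacing 0.001
print(ls_estimate_dc(x))            # sample mean, 7/3
print(grid_search_dc(x, grid))      # grid point closest to the sample mean
```

Because $J(A) = N(A - \bar{x})^2 + \text{const}$ is a parabola in $A$, the grid search returns the grid point nearest the sample mean; the closed form avoids the search entirely.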