Unsupervised Hashing Isotropic Hashing (IsoHash)(Kong and Li,2012b) Idea of IsoHash: Learn an orthogonal matrix QE Rmxm which makes QTWTXXTWQ become a matrix with equal diagonal values. o Effect of Q:to make each projected dimension has the same variance while keeping the Euclidean distances between any two points unchanged. 日卡三4元,互Q0 Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS.NJU 36/210

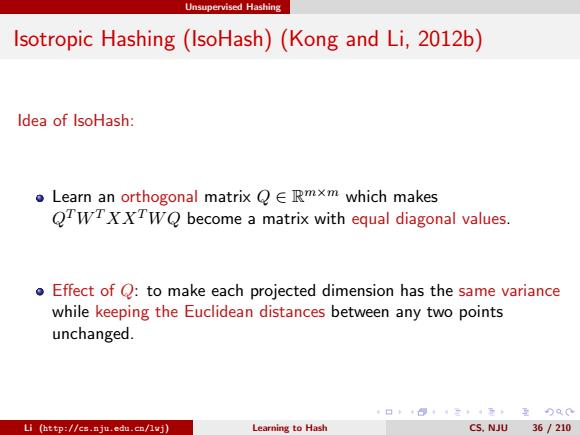

Unsupervised Hashing Isotropic Hashing (IsoHash) (Kong and Li, 2012b) Idea of IsoHash: Learn an orthogonal matrix Q ∈ R m×m which makes QTWT XXTW Q become a matrix with equal diagonal values. Effect of Q: to make each projected dimension has the same variance while keeping the Euclidean distances between any two points unchanged. Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS, NJU 36 / 210

Unsupervised Hashing Isotropic Hashing(IsoHash)(Kong and Li,2012b) Problem Definition: w(QwrxxTwQ-xx-- a=a1,a2,…,m]with a4=a=∑1d m and T(z)={TE Rmxmldiag(T)=diag(z)}, Problem The problem of IsoHash is to find an orthogonal matrix Q making QTWTXXTWQ∈T(a). 日卡0三4元互)Q0 Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS.NJU 37/210

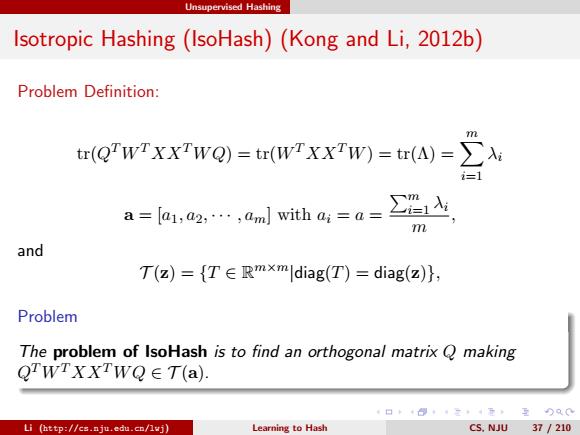

Unsupervised Hashing Isotropic Hashing (IsoHash) (Kong and Li, 2012b) Problem Definition: tr(Q TWT XXTW Q) = tr(WT XXTW) = tr(Λ) = Xm i=1 λi a = [a1, a2, · · · , am] with ai = a = Pm i=1 λi m , and T (z) = {T ∈ R m×m|diag(T) = diag(z)}, Problem The problem of IsoHash is to find an orthogonal matrix Q making QTWT XXTW Q ∈ T (a). Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS, NJU 37 / 210

Unsupervised Hashing Isotropic Hashing (IsoHash)(Kong and Li,2012b) IsoHash Formulation: Because QTAQ=QT [WTXXTW]Q,let M()={QAQJQ∈O(m)}, where O(m)is the set of all orthogonal matrices in Rmxm. Then,the IsoHash problem is equivalent to: IIT ZllF =0, where T∈T(a),Z∈M(A),ll·denotes the Frobenius norm. Li (http://cs.nju.edu.cn/lvj) Learning to Hash CS.NJU 38/210

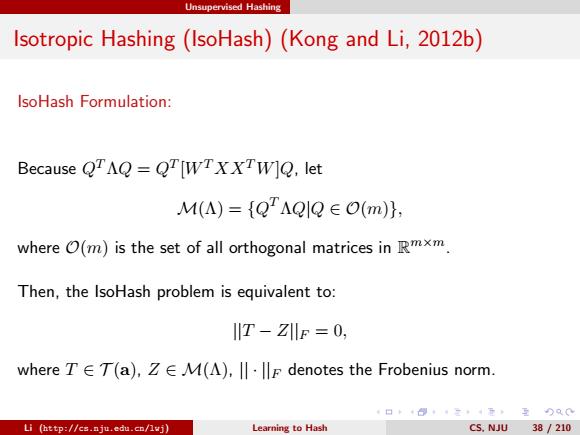

Unsupervised Hashing Isotropic Hashing (IsoHash) (Kong and Li, 2012b) IsoHash Formulation: Because QTΛQ = QT [WT XXTW]Q, let M(Λ) = {Q TΛQ|Q ∈ O(m)}, where O(m) is the set of all orthogonal matrices in R m×m. Then, the IsoHash problem is equivalent to: ||T − Z||F = 0, where T ∈ T (a), Z ∈ M(Λ), || · ||F denotes the Frobenius norm. Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS, NJU 38 / 210

Unsupervised Hashing Isotropic Hashing(IsoHash)(Kong and Li,2012b) Lemma [Schur-Horn Lemma (Horn,1954)]Let c={ci}E Rm and b={bi}E Rm be real vectors in non-increasing order respectively,i.e., c2c2≥…≥cm,b1≥b2≥·…≥bm.There exists a Hermitian matrix H with eigenvalues c and diagonal values b if and only if k k 合∑9 or any=12m =1 m m = 三1 So we can prove: There exists a solution to the IsoHash problem.And this solution is in the intersection of T(a)and M(A). 口卡日卡工,4元 重)Q0 Li (http://cs.nju.edu.cn/lvj) Learning to Hash C5.NU39/210

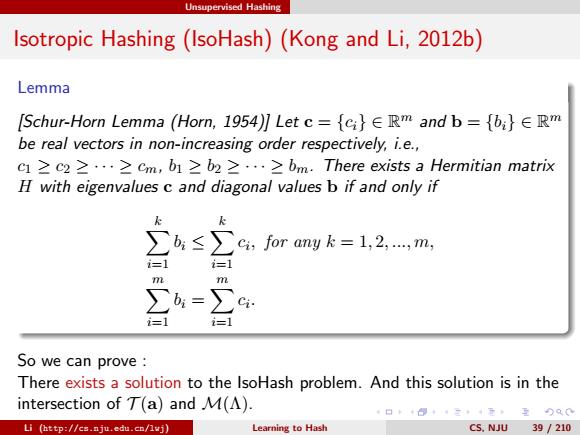

Unsupervised Hashing Isotropic Hashing (IsoHash) (Kong and Li, 2012b) Lemma [Schur-Horn Lemma (Horn, 1954)] Let c = {ci} ∈ R m and b = {bi} ∈ R m be real vectors in non-increasing order respectively, i.e., c1 ≥ c2 ≥ · · · ≥ cm, b1 ≥ b2 ≥ · · · ≥ bm. There exists a Hermitian matrix H with eigenvalues c and diagonal values b if and only if X k i=1 bi ≤ X k i=1 ci , for any k = 1, 2, ..., m, Xm i=1 bi = Xm i=1 ci . So we can prove : There exists a solution to the IsoHash problem. And this solution is in the intersection of T (a) and M(Λ). Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS, NJU 39 / 210

Unsupervised Hashing Isotropic Hashing(IsoHash)(Kong and Li,2012b) Learning Methods: Two methods:(Chu,1995) Lift and projection(LP) Gradient Flow (GF) 日卡三4元,互Q0 Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS.NJU 40/210

Unsupervised Hashing Isotropic Hashing (IsoHash) (Kong and Li, 2012b) Learning Methods: Two methods: (Chu, 1995) Lift and projection (LP) Gradient Flow (GF) Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS, NJU 40 / 210