Unsupervised Hashing Outline Introduction ② Unsupervised Hashing Supervised Hashing Ranking-based Hashing Multimodal Hashing Deep Hashing Quantization Conclusion Reference 口卡得三4元互Q0 Li (http://cs.nju.edu.cn/lwj) Learning to Hash Cs.NU21/210

Unsupervised Hashing Outline 1 Introduction 2 Unsupervised Hashing 3 Supervised Hashing 4 Ranking-based Hashing 5 Multimodal Hashing 6 Deep Hashing 7 Quantization 8 Conclusion 9 Reference Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS, NJU 21 / 210

Unsupervised Hashing Problem Definition Input: Feature vectors:{xi(compact matrix form for all training points: x). Output: Binary codes:{bi(compact matrix form for all training points:B). Instances similar in the original feature space should have similar binary codes. or When xi is close to xj.the Hamming distance between bi and bj should be low. When xi is far away from xj.the Hamming distance between bi and bi should be high. 口卡4日12元,至90 Li (http://cs.nju.edu.cn/lvj) Learning to Hash CS.NJU 22 /210

Unsupervised Hashing Problem Definition Input: Feature vectors: {xi} (compact matrix form for all training points: X). Output: Binary codes: {bi} (compact matrix form for all training points: B). Instances similar in the original feature space should have similar binary codes. or When xi is close to xj , the Hamming distance between bi and bj should be low. When xi is far away from xj , the Hamming distance between bi and bj should be high. Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS, NJU 22 / 210

Unsupervised Hashing PCA Hashing(PCAH) To generate a code of m bits,PCAH performs PCA on X,and then use the top m eigenvectors of the matrix XXT as columns of the projection matrix W ERdxm.Here,top m eigenvectors are those corresponding to the m largest eigenvalues generally arranged with the non-increasing order1≥2≥…≥入m-Let入=[A,A2,…,入mJT. Then A=WTXXTW diag(A) Define hash function h(x)=sgn(WTx) PCA2 PCAI 口卡+得二4元互)Q0 Li (http://cs.nju.edu.cn/lwj) Learning to Hash C5.NJU23/210

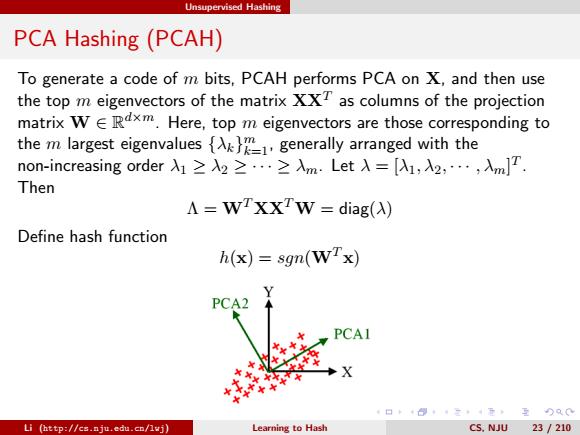

Unsupervised Hashing PCA Hashing (PCAH) To generate a code of m bits, PCAH performs PCA on X, and then use the top m eigenvectors of the matrix XXT as columns of the projection matrix W ∈ R d×m. Here, top m eigenvectors are those corresponding to the m largest eigenvalues {λk} m k=1, generally arranged with the non-increasing order λ1 ≥ λ2 ≥ · · · ≥ λm. Let λ = [λ1, λ2, · · · , λm] T . Then Λ = WT XXTW = diag(λ) Define hash function h(x) = sgn(WT x) Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS, NJU 23 / 210

Unsupervised Hashing Spectral Hashing (Weiss et al.,2008) min∑Wlly:-yl2 subject to:yi∈{-l,l} ∑y=0 A∑wW-1 where Wij is the similarity between xi and xj,the constraint >iyi=0 requires each bit to be fire 50%of the time,and the constraint yiy=I requires the bits to be uncorrelated. NP hard problem! 日卡461工4元,至80 Li (http://cs.nju.edu.cn/lvj) Learning to Hash C5.NJU24/210

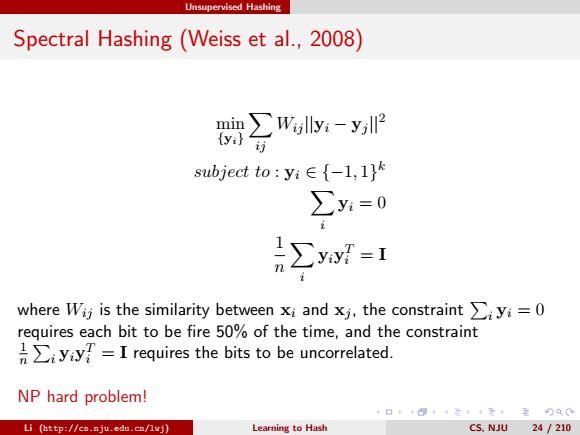

Unsupervised Hashing Spectral Hashing (Weiss et al., 2008) min {yi} X ij Wij ||yi − yj ||2 subject to : yi ∈ {−1, 1} k X i yi = 0 1 n X i yiy T i = I where Wij is the similarity between xi and xj , the constraint P i yi = 0 requires each bit to be fire 50% of the time, and the constraint 1 n P i yiy T i = I requires the bits to be uncorrelated. NP hard problem! Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS, NJU 24 / 210

Unsupervised Hashing Spectral Hashing (Weiss et al.,2008) In matrix form,and by relaxation: mintr(YTCY) subject to:YT1=0 lYTY=I n where Y is a real-valued nxk matrix whose jth row is yf.C=D-W is the Laplacian matrix,D is a diagonal matrix with D(i,i)=>;W(i,j). Solution:simply the k eigenvectors of C with minimal eigenvalues after excluding the trivial eigenvector 1 which has eigenvalue 0. sign(Y)to get the binary codes (quantization stage). Out-of-sample extension with eigenfunctions by simply fitting a multidimensional rectangle distribution to the data (by using PCA to align the axes,and then assuming a uniform distribution on each axis). Li (http://cs.nju.edu.cn/lvj) Learning to Hash CS.NJU 25 /210

Unsupervised Hashing Spectral Hashing (Weiss et al., 2008) In matrix form, and by relaxation: min tr(YTLY) subject to : YT 1 = 0 1 n YT Y = I where Y is a real-valued n × k matrix whose jth row is y T j , L = D − W is the Laplacian matrix, D is a diagonal matrix with D(i, i) = P j W(i, j). Solution: simply the k eigenvectors of L with minimal eigenvalues after excluding the trivial eigenvector 1 which has eigenvalue 0. sign(Y) to get the binary codes (quantization stage). Out-of-sample extension with eigenfunctions by simply fitting a multidimensional rectangle distribution to the data (by using PCA to align the axes, and then assuming a uniform distribution on each axis). Li (http://cs.nju.edu.cn/lwj) Learning to Hash CS, NJU 25 / 210