So the algorithm for calculating the parameter w is W:+1=W:+n(d-wx)x It is also known as the Widrow-Hoff learning rule,which is for the case where has only one sample. For N samples case,it can be modified as follows: N W+1=W+nd-wix)x i=1 Note that each iteration of the algorithm uses the entire training set to update the parameters.It called batch gradient descent(BGD). 10/78

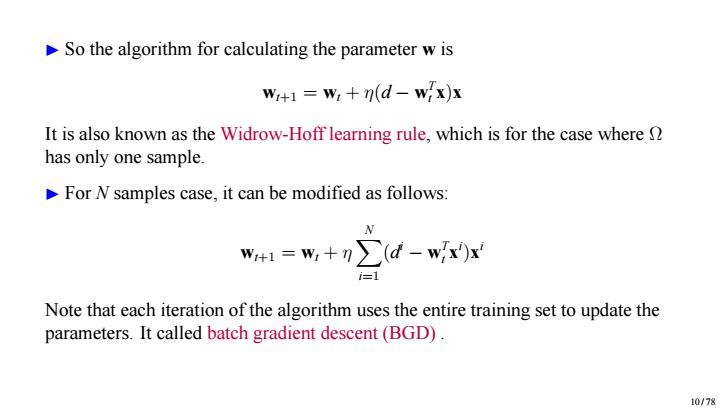

▶ So the algorithm for calculating the parameter w is
$$w_{t+1} = w_t + \eta\,(d - w_t^T x)\,x$$
It is also known as the Widrow-Hoff learning rule, which applies to the case where Ω has only one sample.
▶ For the case of N samples, it can be modified as follows:
$$w_{t+1} = w_t + \eta \sum_{i=1}^{N} (d_i - w_t^T x_i)\,x_i$$
Note that each iteration of the algorithm uses the entire training set to update the parameters. This is called batch gradient descent (BGD).
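The two update rules above can be sketched in plain Python as follows. This is a minimal illustration, not a reference implementation. It assumes a linear output $y = w^T x$ and uses a small hypothetical noiseless data set generated from $d = 2x_1 - x_2$. The helper names (`predict`, `widrow_hoff_step`, `batch_gd_step`) are illustrative only.

```python
def predict(w, x):
    """Linear output y = w^T x."""
    return sum(wj * xj for wj, xj in zip(w, x))


def widrow_hoff_step(w, x, d, eta):
    """Single-sample (Widrow-Hoff) update: w_{t+1} = w_t + eta * (d - w_t^T x) * x."""
    err = d - predict(w, x)
    return [wj + eta * err * xj for wj, xj in zip(w, x)]


def batch_gd_step(w, X, D, eta):
    """Batch update: w_{t+1} = w_t + eta * sum_i (d_i - w_t^T x_i) * x_i.

    Every training sample contributes to this single parameter update (BGD).
    """
    update = [0.0] * len(w)
    for x, d in zip(X, D):
        err = d - predict(w, x)
        for j, xj in enumerate(x):
            update[j] += err * xj
    return [wj + eta * uj for wj, uj in zip(w, update)]


# Hypothetical toy data generated from d = 2*x1 - 1*x2 (no noise), for illustration only.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
D = [2.0, -1.0, 1.0, 3.0]

w = [0.0, 0.0]
for _ in range(200):
    w = batch_gd_step(w, X, D, eta=0.05)
print([round(wj, 4) for wj in w])
```

With this data and a step size of 0.05, the weights should approach (2, -1). A much larger η can make the batch update diverge, because the summed gradient grows with N.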

Outline (Level 2-3)
▶ Numeric Approach
▶ Neural Network Terminologies
▶ Gradient Descent Principle
▶ Stochastic Gradient Descent Algorithm

2.1.1. Neural Network Terminologies
▶ one pass: one forward pass + one backward pass. Note: the forward pass and the backward pass are not counted as two different passes.
▶ one epoch: one forward and backward pass over all the training samples
▶ batch size: the number of training samples in one pass; the group of samples used in one pass is called a mini-batch. The larger the batch size, the more memory is needed.
▶ number of iterations: the number of passes, each pass using [batch size] samples
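The relationship between these terms can be sketched as follows. This is an illustration under stated assumptions, not a training loop from the source. The 10 "samples" are hypothetical placeholders, and the forward and backward computations are omitted. The sketch also assumes that a final, smaller batch is kept rather than dropped, so the number of iterations per epoch is ⌈N / batch size⌉. The function names are hypothetical.

```python
import math


def iterations_per_epoch(num_samples, batch_size):
    """Passes needed so every training sample is used once (one epoch).

    Assumes the last, possibly smaller, mini-batch is kept rather than dropped.
    """
    return math.ceil(num_samples / batch_size)


def mini_batches(samples, batch_size):
    """Yield consecutive mini-batches of at most batch_size samples."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]


samples = list(range(10))  # hypothetical stand-in for 10 training samples
batch_size = 4
epochs = 3

passes = 0
for epoch in range(epochs):
    for batch in mini_batches(samples, batch_size):
        # One pass = forward pass + backward pass on this mini-batch (omitted here).
        passes += 1

print(iterations_per_epoch(len(samples), batch_size))  # 3
print(passes)  # 9
```

Here each epoch consists of 3 iterations, with batches of 4, 4 and 2 samples. Three epochs therefore take 9 passes in total.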

2.1.2.Gradient Descent Principle Minimize the cost function C(x1,x2),and following Calculus,C changes as follows: △C≈ x1 aC Ax2 Cx+0x2 Define△r=(Ar,△r)',VC=(%,)',and get: △C≈VC.△x To make△C negative,set△x=-nVC,and get: △C≈-n7C.7C=-llVC2≤0 Then from x+1-x,=Ax =-nVC,get: X1+1=x1-nVC Gradient is fastest descent direction only,as △C≈7C·△x=I7Cl△cos(0)≤0,π/2≤θ≤π3/2 14/78

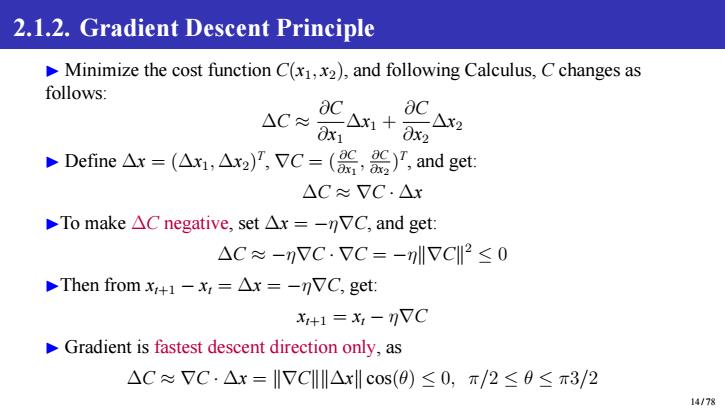

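2.1.2. Gradient Descent Principle
▶ To minimize the cost function C(x_1, x_2), note from calculus that a small change in the inputs changes C by
$$\Delta C \approx \frac{\partial C}{\partial x_1}\Delta x_1 + \frac{\partial C}{\partial x_2}\Delta x_2$$
▶ Define $\Delta x = (\Delta x_1, \Delta x_2)^T$ and $\nabla C = \left(\frac{\partial C}{\partial x_1}, \frac{\partial C}{\partial x_2}\right)^T$, which gives
$$\Delta C \approx \nabla C \cdot \Delta x$$
▶ To make ΔC negative, set $\Delta x = -\eta \nabla C$ with a small positive η, which gives
$$\Delta C \approx -\eta\,\nabla C \cdot \nabla C = -\eta \|\nabla C\|^2 \le 0$$
▶ Then from $x_{t+1} - x_t = \Delta x = -\eta \nabla C$, we get
$$x_{t+1} = x_t - \eta \nabla C$$
▶ The negative gradient is the direction of fastest descent. Writing θ for the angle between ∇C and Δx,
$$\Delta C \approx \nabla C \cdot \Delta x = \|\nabla C\|\,\|\Delta x\|\cos\theta,$$
which is ≤ 0 for π/2 ≤ θ ≤ 3π/2. For a fixed step length ‖Δx‖, it is most negative at θ = π, i.e. when Δx points along −∇C.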
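The derivation above can be checked numerically with a short sketch. The cost $C(x_1, x_2) = x_1^2 + 3x_2^2$ is a hypothetical example chosen only because its gradient is easy to write by hand. The starting point, η, and the helper names are also assumptions. The code does three things:

- It runs the update $x_{t+1} = x_t - \eta \nabla C$.
- It compares the true change in C against the first-order estimate $-\eta\|\nabla C\|^2$.
- It scans directions at angle θ from ∇C to confirm that the linearized ΔC is most negative at θ = π.

```python
import math


# Hypothetical cost for illustration: C(x1, x2) = x1^2 + 3*x2^2, minimum at (0, 0).
def cost(x):
    return x[0] ** 2 + 3 * x[1] ** 2


def grad(x):
    """Analytic gradient (dC/dx1, dC/dx2) of the hypothetical cost."""
    return [2 * x[0], 6 * x[1]]


def gradient_descent(x0, eta, steps):
    """Iterate x_{t+1} = x_t - eta * grad C(x_t)."""
    x = list(x0)
    for _ in range(steps):
        g = grad(x)
        x = [xi - eta * gi for xi, gi in zip(x, g)]
    return x


x_final = gradient_descent([4.0, -2.0], eta=0.1, steps=100)

# First-order check: for small eta, C(x - eta*grad) - C(x) ~ -eta * ||grad C||^2.
x = [4.0, -2.0]
g = grad(x)
eta_small = 1e-4
dc_actual = cost([xi - eta_small * gi for xi, gi in zip(x, g)]) - cost(x)
dc_linear = -eta_small * (g[0] ** 2 + g[1] ** 2)

# Direction check: a step of fixed length in the direction at angle theta from grad C
# changes C (to first order) by step * ||grad C|| * cos(theta).
g_norm = math.hypot(g[0], g[1])
step = 0.01
best_theta, best_dc = None, float("inf")
for k in range(360):
    theta = 2 * math.pi * k / 360
    # Unit vector obtained by rotating grad C / ||grad C|| by theta.
    ux = (g[0] * math.cos(theta) - g[1] * math.sin(theta)) / g_norm
    uy = (g[0] * math.sin(theta) + g[1] * math.cos(theta)) / g_norm
    dc = g[0] * step * ux + g[1] * step * uy  # grad C . Delta x
    if dc < best_dc:
        best_theta, best_dc = theta, dc

print(x_final, dc_actual, dc_linear, best_theta)
```

With η = 0.1, each coordinate of this quadratic shrinks by a constant factor per step (0.8 for x_1, 0.4 for x_2), so x_final ends up very close to (0, 0). The best direction found is θ = π, i.e. along −∇C. The small gap between dc_actual and dc_linear is the second-order term that the approximation ΔC ≈ ∇C · Δx drops.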