EY] E[Y] /expected satisfied clauses =E[∑Y /definition of Y:Y ZiYi ∑iE[Y] /linearity of expectation i Pr[Ci satisfied]/definition of Yi ∑7/8 7 二 m. 8 This means that if we choose assignment randomly,then we can satisfy =7/8 fraction of clauses on average. 11

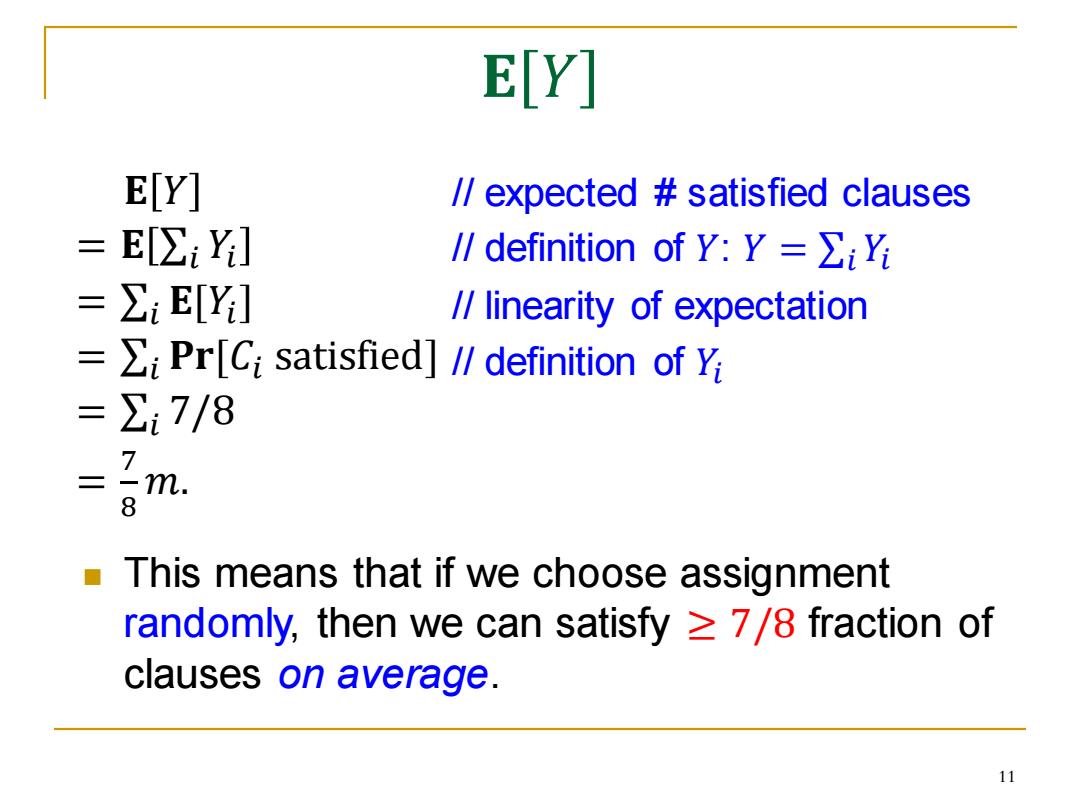

𝐄 𝑌 𝐄 𝑌 = 𝐄 σ𝑖 𝑌𝑖 = σ𝑖 𝐄[𝑌𝑖 ] = σ𝑖 𝐏𝐫[𝐶𝑖 satisfied] = σ𝑖 7/8 = 7 8 𝑚. // expected # satisfied clauses // definition of 𝑌: 𝑌 = σ𝑖 𝑌𝑖 // linearity of expectation // definition of 𝑌𝑖 11 ◼ This means that if we choose assignment randomly, then we can satisfy ≥ 7/8 fraction of clauses on average

Success probability of one assignment We've seen the average number of satisfied clauses on a random assignment. Now we translates this to the average running time of the algorithm? event "success":A random assignment satisfies =7/8 fraction of clauses, We want to estimate the probability p of success. 12

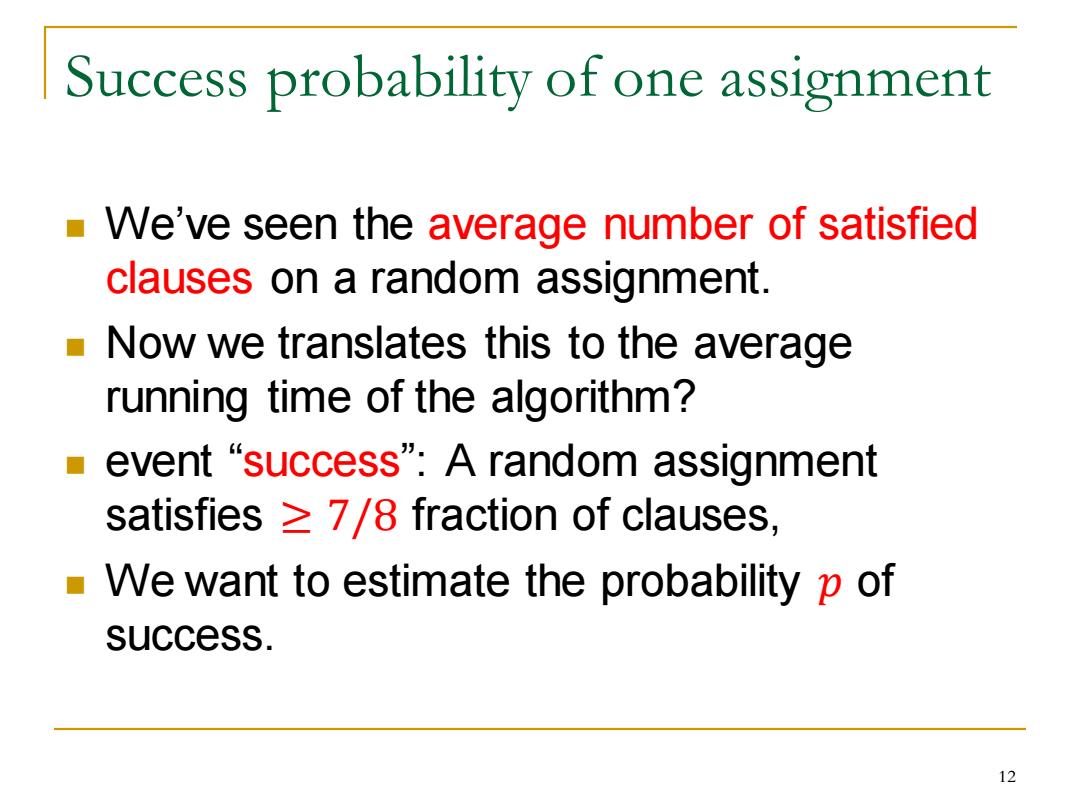

Success probability of one assignment ◼ We’ve seen the average number of satisfied clauses on a random assignment. ◼ Now we translates this to the average running time of the algorithm? ◼ event “success”: A random assignment satisfies ≥ 7/8 fraction of clauses, ◼ We want to estimate the probability 𝑝 of success. 12

Getting a Las Vegas algorithm m-EY]kPr[y= ≤pm+(1-p)(gl-1) ≤pm+(1-p)(Tg-) Rearranging,we get p≥。 8m If we repeatedly take random assignments,it needs 8m times (on average)to see a “success”happening. i.e.the complexity of this Las Vegas algorithm is 8m. 13

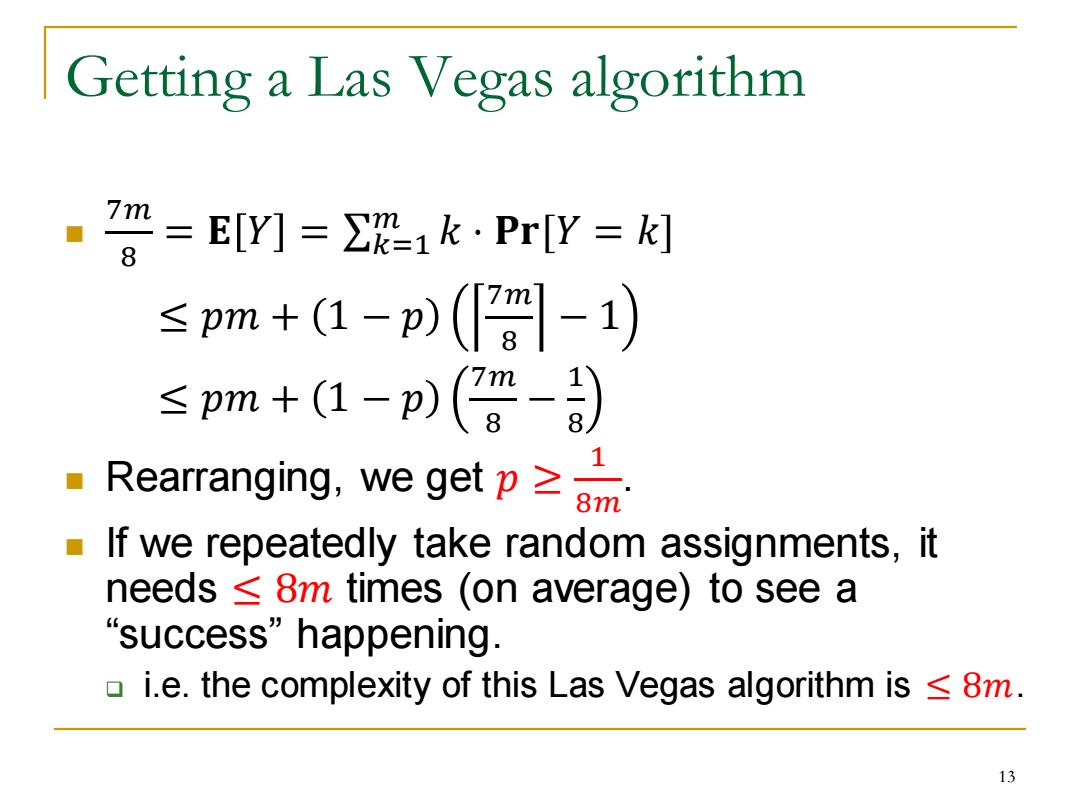

Getting a Las Vegas algorithm ◼ 7𝑚 8 = 𝐄 𝑌 = σ𝑘=1 𝑚 𝑘 ⋅ 𝐏𝐫[𝑌 = 𝑘] ≤ 𝑝𝑚 + 1 − 𝑝 7𝑚 8 − 1 ≤ 𝑝𝑚 + 1 − 𝑝 7𝑚 8 − 1 8 ◼ Rearranging, we get 𝑝 ≥ 1 8𝑚 . ◼ If we repeatedly take random assignments, it needs ≤ 8𝑚 times (on average) to see a “success” happening. ❑ i.e. the complexity of this Las Vegas algorithm is ≤ 8𝑚. 13

Example 2:Approximation algorithm for Vertex Cover 14

Example 2: Approximation algorithm for Vertex Cover 14