41

RF-Kinect: A Wearable RFID-based Approach Towards 3D Body Movement Tracking

CHUYU WANG, Nanjing University, CHN
JIAN LIU, Rutgers University, USA
YINGYING CHEN∗, Rutgers University, USA
LEI XIE∗, Nanjing University, CHN
HONGBO LIU, Indiana University-Purdue University Indianapolis, USA
SANGLU LU, Nanjing University, CHN

The rising popularity of electronic devices with gesture recognition capabilities makes gesture-based human-computer interaction more attractive. Along this direction, tracking the body movement in 3D space is desirable to further facilitate behavior recognition in various scenarios. Existing solutions attempt to track the body movement based on computer vision or wearable sensors, but they either depend on lighting conditions or incur high energy consumption. This paper presents RF-Kinect, a training-free system which tracks the body movement in 3D space by analyzing the phase information of wearable RFID tags attached to the limbs. Instead of locating each tag independently in 3D space to recover the body postures, RF-Kinect treats each limb as a whole and estimates its orientation by extracting two types of phase features: the Phase Difference between Tags (PDT) on the same part of a limb and the Phase Difference between Antennas (PDA) of the same tag. It then reconstructs the body posture from the determined limb orientations, grounded on a geometric model of the human body, and exploits a Kalman filter to smooth the body movement results, which are the temporal sequence of the body postures. Real experiments with 5 volunteers show that RF-Kinect achieves an 8.7° angle error in determining the orientation of limbs and a 4.4 cm relative position error in estimating the position of joints, compared with a Kinect 2.0 testbed.
CCS Concepts: • Networks → Sensor networks; Mobile networks; • Human-centered computing → Mobile devices;

Additional Key Words and Phrases: RFID; Body movement tracking

ACM Reference Format:
Chuyu Wang, Jian Liu, Yingying Chen, Lei Xie, Hongbo Liu, and Sanglu Lu. 2018. RF-Kinect: A Wearable RFID-based Approach Towards 3D Body Movement Tracking. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2, 1, Article 41 (March 2018), 28 pages. https://doi.org/10.1145/3191773

∗Yingying Chen and Lei Xie are the co-corresponding authors, Email: yingche@scarletmail.rutgers.edu, lxie@nju.edu.cn.

Authors’ addresses: Chuyu Wang, Nanjing University, State Key Laboratory for Novel Software Technology, 163 Xianlin Ave, Nanjing, 210046, CHN; Jian Liu, Rutgers University, Department of Electrical and Computer Engineering, North Brunswick, NJ, 08902, USA; Yingying Chen, Rutgers University, Department of Electrical and Computer Engineering, North Brunswick, NJ, 08902, USA; Lei Xie, Nanjing University, State Key Laboratory for Novel Software Technology, 163 Xianlin Ave, Nanjing, 210046, CHN; Hongbo Liu, Indiana University-Purdue University Indianapolis, Department of Computer, Information and Technology, Indianapolis, IN, 46202, USA; Sanglu Lu, Nanjing University, State Key Laboratory for Novel Software Technology, 163 Xianlin Ave, Nanjing, 210046, CHN.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from permissions@acm.org.

© 2018 Association for Computing Machinery.
2474-9567/2018/3-ART41 $15.00 https://doi.org/10.1145/3191773 Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, Vol. 2, No. 1, Article 41. Publication date: March 2018


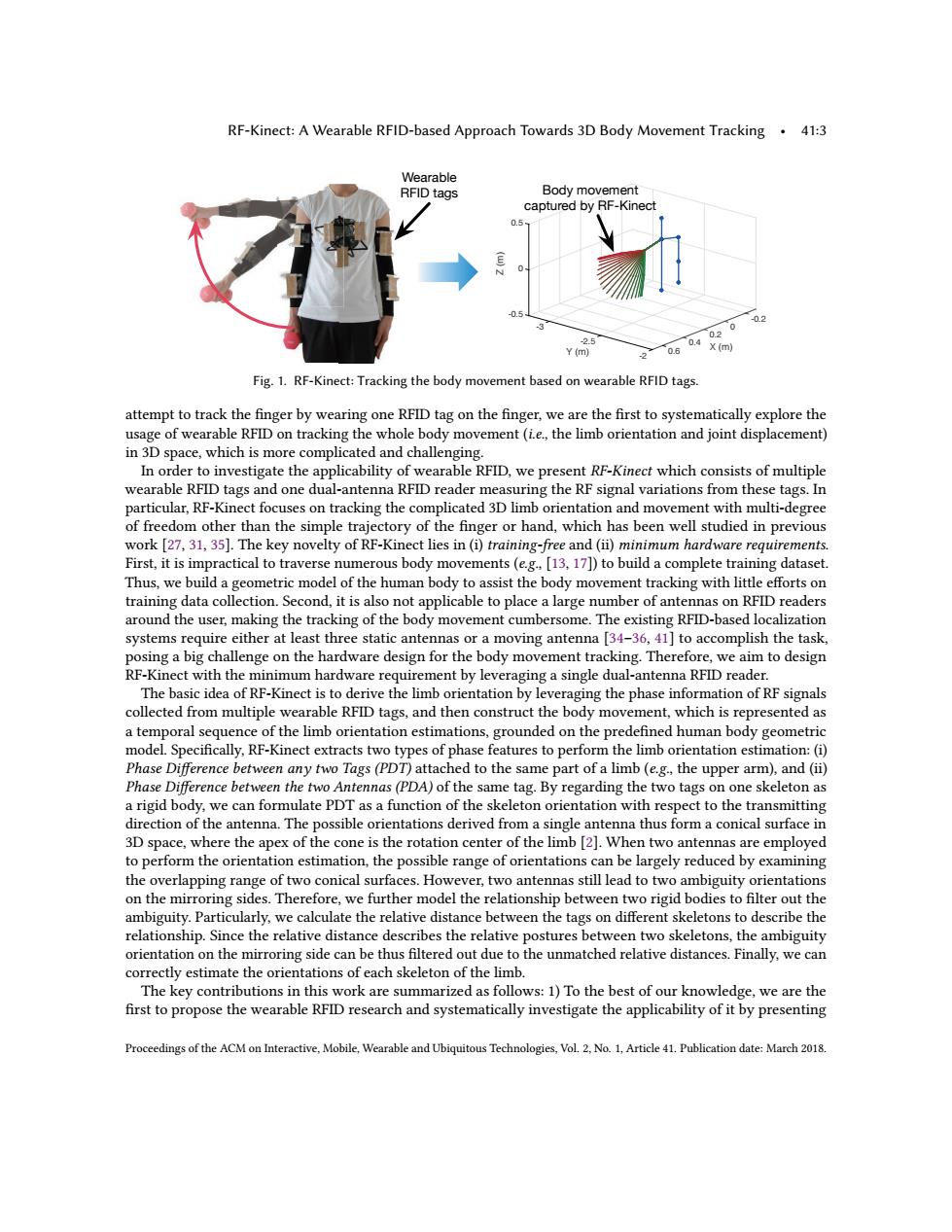

1 INTRODUCTION

Gesture-based Human-Computer Interaction (HCI) is finding an increasing number of practical uses, enabled by the growing popularity of electronic devices with gesture recognition capabilities. A recent survey reveals that the global gesture recognition market is anticipated to reach USD 48.56 billion by 2024 [4]. In particular, the success of Microsoft Kinect [8] in tracking human gestures for gaming consoles has induced many emerging applications to adopt gesture recognition solutions in fields like healthcare, smart homes, and mobile robot control. For example, numerous applications have been developed to monitor people's well-being based on their activities (such as fitness, drinking, and sleeping) with either wearable devices or smartphones. The success of gesture and activity recognition has led to a growing interest in developing new approaches and technologies to track the body movement in 3D space, which can further facilitate behavior recognition on various occasions, such as VR gaming, mobile healthcare, and user access control.

Existing solutions for body movement recognition fall into three main categories. (i) Computer vision-based solutions, such as Kinect and LeapMotion [5, 8], leverage depth sensors or infrared cameras to recognize body gestures and allow the user to interact with machines in a natural way. However, these methods suffer from several inherent disadvantages of computer vision, including light dependence, blind spots, high computational cost, and ambiguity among multiple people. (ii) Sensor-based solutions, such as the smartwatch and wristband [3], are designed to track the movement of the limbs based on accelerometer or gyroscope readings. But these systems usually require the user to wear different kinds of sensing devices, which have short battery lives due to their high energy consumption. Further, there are also some products (e.g., Vicon [6]) that integrate the information from both cameras and wearable sensors to accurately track the body movement; however, the high price of the infrastructure is not affordable for many systems. (iii) Wireless signal-based solutions [17, 25] capture specific gestures based on the changes of some wireless signal features, such as the Doppler frequency shift and signal amplitude fluctuation. But only a limited number of gestures can be correctly identified, due to the high cost of training data collection and the lack of capabilities for multi-user identification.

With the rapid development of RFID techniques [34, 41], the RFID tag is now not only an identification device, but also a low-power, battery-free wireless sensor serving various applications, such as localization and motion tracking. Previous studies, such as Tagoram [41] and RF-IDraw [35], could achieve cm-level accuracy in tracking an individual RFID tag in 2D space (e.g., a tagged object or finger). Further, Tagyro [39] could accurately track the 3D orientation of objects attached with an array of RFID tags, but it only works for objects with a fixed geometry and rotation center. However, because the complicated body movement involves multiple degrees of freedom, the problem of 3D RFID tag tracking associated with the human body movement, including both limb orientation and joint displacement (e.g., elbow displacement), remains elusive.

Inspired by these advanced schemes, we explore the possibility of tracking the human body movement in 3D space via an RFID system. In particular, we propose a wearable RFID-based approach as shown in Figure 1, which investigates new opportunities for tracking the body movement by attaching lightweight RFID tags onto the human body. Wearable RFID refers to gesture recognition for a human body wearing multiple RFID tags on different parts of the limbs and torso. In actual applications, these tags can be easily embedded into fabric [11], e.g., T-shirts, at fixed positions to avoid complicated configurations. During the human motion, we are able to track the human body movement, including both the rigid body [7] movement (e.g., the torso movement) and the non-rigid body movement (e.g., the arm/leg movement), by analyzing the relationship between these movements and the RF signals from the corresponding tag sets. Due to its inherent identification function, wearable RFID solves the problem, common to most device-free sensing schemes, of distinguishing multiple tracked subjects. For example, when tracking the body movement of multiple human subjects, different subjects or even different arms/legs can be easily distinguished according to the tag ID, which is usually difficult to achieve in computer vision or wireless-based sensing schemes. Although RF-IDraw [35] makes the first


attempt to track the finger by wearing one RFID tag on the finger, we are the first to systematically explore the usage of wearable RFID for tracking the whole body movement (i.e., the limb orientation and joint displacement) in 3D space, which is more complicated and challenging.

[Figure 1: two panels, "Wearable RFID tags" and "Body movement captured by RF-Kinect"; the second panel is a 3D plot with axes in meters.]
Fig. 1. RF-Kinect: Tracking the body movement based on wearable RFID tags.

In order to investigate the applicability of wearable RFID, we present RF-Kinect, which consists of multiple wearable RFID tags and one dual-antenna RFID reader measuring the RF signal variations from these tags. In particular, RF-Kinect focuses on tracking the complicated 3D limb orientation and movement with multiple degrees of freedom, rather than the simple trajectory of the finger or hand, which has been well studied in previous work [27, 31, 35]. The key novelty of RF-Kinect lies in (i) being training-free and (ii) its minimal hardware requirements. First, it is impractical to traverse numerous body movements (e.g., [13, 17]) to build a complete training dataset. Thus, we build a geometric model of the human body to assist the body movement tracking with little effort spent on training data collection. Second, it is also impractical to place a large number of RFID reader antennas around the user, since this makes the tracking of the body movement cumbersome. The existing RFID-based localization systems require either at least three static antennas or a moving antenna [34–36, 41] to accomplish the task, posing a big challenge for the hardware design of body movement tracking. Therefore, we aim to design RF-Kinect with the minimum hardware requirement by leveraging a single dual-antenna RFID reader.

The basic idea of RF-Kinect is to derive the limb orientation by leveraging the phase information of RF signals collected from multiple wearable RFID tags, and then construct the body movement, which is represented as a temporal sequence of the limb orientation estimations, grounded on the predefined human body geometric model. Specifically, RF-Kinect extracts two types of phase features to perform the limb orientation estimation: (i) the Phase Difference between any two Tags (PDT) attached to the same part of a limb (e.g., the upper arm), and (ii) the Phase Difference between the two Antennas (PDA) of the same tag. By regarding the two tags on one skeleton as a rigid body, we can formulate PDT as a function of the skeleton orientation with respect to the transmitting direction of the antenna. The possible orientations derived from a single antenna thus form a conical surface in 3D space, where the apex of the cone is the rotation center of the limb [2]. When two antennas are employed to perform the orientation estimation, the possible range of orientations can be largely reduced by examining the overlap of the two conical surfaces. However, two antennas still lead to two ambiguous orientations on mirrored sides. Therefore, we further model the relationship between two rigid bodies to filter out the ambiguity. In particular, we calculate the relative distance between the tags on different skeletons to describe the relationship. Since the relative distance describes the relative postures of two skeletons, the ambiguous orientation on the mirrored side can be filtered out because its relative distances do not match. Finally, we can correctly estimate the orientation of each skeleton of the limb.
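The cone argument above can be illustrated with a minimal Python sketch. It is not the model derived in this paper; it rests on simplifying assumptions introduced here for illustration: a far-field approximation, a round-trip backscatter phase of 4πd/λ, a tag spacing below λ/4 so that the phase difference does not wrap, and antenna directions that are known unit vectors. The function names `cone_cosine` and `intersect_cones` are hypothetical. The sketch converts a PDT into the cosine of the angle between the limb and one antenna direction, then intersects two such cones and returns the two mirrored candidate orientations.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(v):
    n = math.sqrt(dot(v, v))
    return tuple(x / n for x in v)


def cone_cosine(pdt_rad, tag_spacing_m, wavelength_m):
    """Cosine of the angle between the limb and the antenna direction.

    Assumption (not from the paper): far field, round-trip phase 4*pi*d/lambda,
    and tag spacing short enough that the phase difference does not wrap.
    """
    path_diff = pdt_rad * wavelength_m / (4.0 * math.pi)
    return max(-1.0, min(1.0, path_diff / tag_spacing_m))


def intersect_cones(a1, c1, a2, c2):
    """Unit vectors u with u.a1 == c1 and u.a2 == c2 (zero or two solutions)."""
    a1, a2 = unit(a1), unit(a2)
    g = dot(a1, a2)
    denom = 1.0 - g * g
    if denom < 1e-12:
        raise ValueError("antenna directions must not be parallel")
    # Component of u inside the plane spanned by a1 and a2.
    alpha = (c1 - g * c2) / denom
    beta = (c2 - g * c1) / denom
    # Remaining component along the plane normal, fixed by |u| = 1.
    gamma_sq = 1.0 - (alpha * alpha + beta * beta + 2.0 * alpha * beta * g)
    if gamma_sq < 0.0:
        return []  # the two cones do not intersect
    gamma = math.sqrt(gamma_sq)
    n = unit(cross(a1, a2))
    base = tuple(alpha * p + beta * q for p, q in zip(a1, a2))
    # The +/- sign gives the two orientations mirrored about the antenna plane.
    return [tuple(b + s * gamma * k for b, k in zip(base, n))
            for s in (1.0, -1.0)]
```

With antenna directions along the x and y axes and cosines 0.6 and 0, the two candidates are (0.6, 0, 0.8) and (0.6, 0, -0.8), mirrored about the plane spanned by the two antenna directions. This is the mirror ambiguity that RF-Kinect resolves with the relative distances between tags on different skeletons.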
The key contributions in this work are summarized as follows: 1) To the best of our knowledge, we are the first to propose the wearable RFID research and systematically investigate the applicability of it by presenting


41:4 • C. Wang et al. RF-Kinect. It is the first training-free and low-cost human body movement tracking system, including both the limb orientation and joint displacement, by leveraging multiple wearable RFID tags, and it overcomes many drawbacks on existing light-dependent works. 2) We demonstrate that RF-Kinect could accurately track the 3D body movement other than simply tracking one joint on the body, with the minimum hardware requirements involving only a dual-antenna RFID reader and several low-cost wearable RFID tags. 3) Instead of locating the absolute position of each joint for tracking, we regard the human body as the combination of several rigid bodies (i.e., skeletons) and use a kinematic method to connect each skeleton as the human body model. Then, we exploit the features PDT and PDA to estimate the orientations of each skeleton and use the relative distances to measure the relationship between different skeletons for tracking. 4) The fast adoption and low-cost deployment of RF-Kinect are also validated through our prototype implementation. Given the groundtruth from the Kinect 2.0 testbed, our systematic evaluation shows that RF-Kinect could achieve the average angle and position error as low as 8.7 ◦ and 4.4cm for the limb orientation and joints’ position estimation, respectively. 2 RELATED WORK Existing studies on the gesture/posture recognition can be classified into three main categories: Computer Vision-based. The images and videos captured by the camera could truthfully record the human body movement in different levels of granularity, so there have been active studies on tracking and analyzing the human motion based on the computer vision. For example, Microsoft Kinect [8] provides the fine-grained body movement tracking by fusing the RGB and depth image. Other works try to communicate or sense the human location and activities based on the visible light [15, 16, 18]. 
LiSense [20] reconstructs the human skeleton in real-time by analyzing the shadows produced by the human body blockage on the encoded visible light sources. It is obvious that the computer vision-based methods are highly light-dependent, so they could fail in tracking the body movement if the line-of-sight (LOS) light channel is unavailable. Besides, the videos may incur the privacy problem of the users in some sensitive scenarios. Unlike the computer vision-based approaches, RF-Kinect relies on the RF device, which can work well in most Non-line-of-sight (NLOS) channel environments. Moreover, given the unique ID for each tag, it can also be easily extended to the body movement tracking scenario involving multiple users. Motion Sensor-based. Previous research has shown that the built-in motion sensors on wearable devices can also be utilized for the body movement recognition [19, 44]. Wearable devices such as the smartwatch and wristband can detect a variety of body movements, including walking, running, jumping, arm movement etc., based on the accelerometer and gyroscope readings [22, 23, 33, 40, 45]. For example, ArmTrack [30] proposes to track the posture of the entire arm solely relying on the smartwatch. However, the motion sensors in wearable devices are only able to track the movement of a particular part of the human body, and more importantly, their availability is highly limited by the battery life. Some academic studies [32] and commercial products (e.g., Vicon [6]) have the whole human body attached with the special sensors, and then rely on the high-speed cameras to capture the motion of different sensors for the accurate gesture recognition. Nevertheless, the high-speed cameras are usually so expensive that are not affordable by everyone, and the tracking process with camera is also highly light-dependent. 
Different from the above motion sensor-based systems, RF-Kinect aims to track body movement with RFID tags, which are battery-free and cheaper. Moreover, since each RFID tag costs only 5 to 15 U.S. cents today, the price is affordable for almost everyone, even if the tags are embedded into clothes.

Wireless Signal-based. More recently, several studies have proposed utilizing wireless signals to sense human gestures [10, 17, 25, 35, 37, 38, 42, 43]. Pu et al. [25] leverage the Doppler shifts in Wi-Fi signals caused by body gestures to recognize several pre-defined gestures; Kellogg et al. [17] recognize a set of gestures by analyzing the amplitude changes of RF signals, without requiring the user to wear any device; Adib et al. [9] propose to reconstruct a human

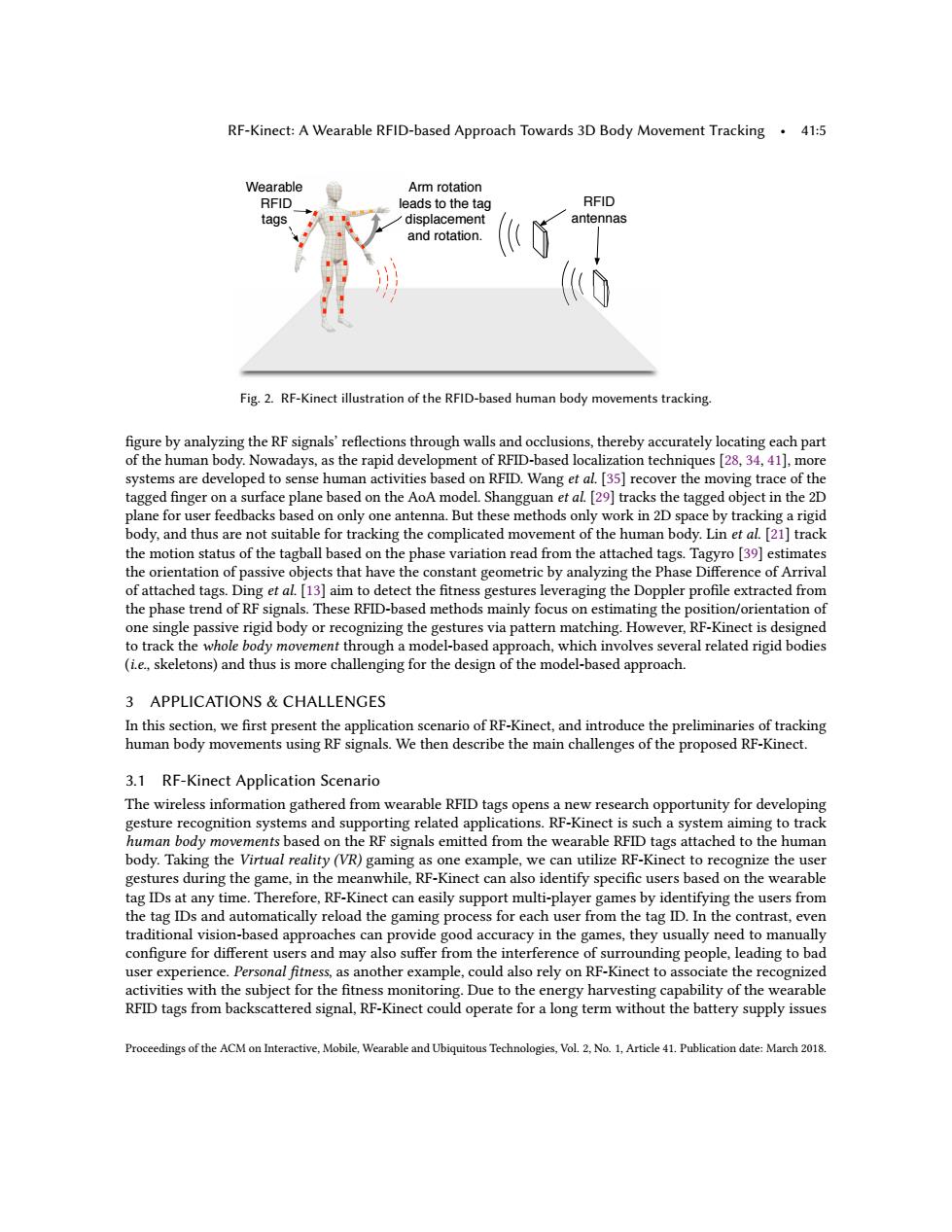

Fig. 2. RF-Kinect illustration of RFID-based human body movement tracking. Labels in the figure: RFID antennas; wearable RFID tags; arm rotation leads to tag displacement and rotation.

figure by analyzing the reflections of RF signals through walls and occlusions, thereby accurately locating each part of the human body. Nowadays, with the rapid development of RFID-based localization techniques [28, 34, 41], more systems are being developed to sense human activities based on RFID. Wang et al. [35] recover the moving trace of a tagged finger on a surface plane based on the AoA model. Shangguan et al. [29] track a tagged object in the 2D plane for user feedback using only one antenna. But these methods only work in 2D space by tracking a rigid body, and thus are not suitable for tracking the complicated movement of the human body. Lin et al. [21] track the motion status of a tagball based on the phase variation read from the attached tags. Tagyro [39] estimates the orientation of passive objects with constant geometry by analyzing the Phase Difference of Arrival of the attached tags. Ding et al. [13] aim to detect fitness gestures by leveraging the Doppler profile extracted from the phase trend of RF signals. These RFID-based methods mainly focus on estimating the position/orientation of a single passive rigid body or on recognizing gestures via pattern matching. In contrast, RF-Kinect is designed to track whole-body movement through a model-based approach, which involves several related rigid bodies (i.e., skeletons) and is thus more challenging to design.

3 APPLICATIONS & CHALLENGES

In this section, we first present the application scenario of RF-Kinect and introduce the preliminaries of tracking human body movements using RF signals. We then describe the main challenges of the proposed RF-Kinect.
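To make the idea of "several related rigid bodies (i.e., skeletons)" more concrete, the following minimal sketch shows how joint positions could be recovered from limb orientations in a simple kinematic chain. It is an illustration only, not the RF-Kinect model itself: the azimuth/elevation parameterization, the function names `direction` and `chain_joint_positions`, and the segment lengths and shoulder position are all assumptions made for this example.

```python
import math

def direction(azimuth_deg, elevation_deg):
    """Unit vector of a limb segment given azimuth/elevation in degrees.

    Assumed convention (not from the paper): azimuth is measured in the
    x-y plane from the +x axis, elevation is measured up from that plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))

def chain_joint_positions(root, segments):
    """Walk a chain of rigid segments outward from the root joint.

    `segments` is a list of (length_m, azimuth_deg, elevation_deg) tuples.
    Returns the positions of all joints, starting with the root itself.
    """
    joints = [tuple(root)]
    for length, az, el in segments:
        d = direction(az, el)
        prev = joints[-1]
        # Each joint is the previous joint displaced along the segment.
        joints.append(tuple(p + length * c for p, c in zip(prev, d)))
    return joints

# Hypothetical arm: a 0.30 m upper arm hanging straight down and a
# 0.25 m forearm pointing forward along +x, with the shoulder at 1.4 m.
shoulder = (0.0, 0.0, 1.4)
arm = [(0.30, 0.0, -90.0), (0.25, 0.0, 0.0)]
shoulder_pos, elbow_pos, wrist_pos = chain_joint_positions(shoulder, arm)
print(elbow_pos, wrist_pos)  # wrist is approximately (0.25, 0.0, 1.1)
```

In this view only the orientation of each segment has to be estimated; the joint positions follow from the fixed segment lengths, which is why tracking each limb as a whole avoids locating every tag independently in 3D space.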
3.1 RF-Kinect Application Scenario

The wireless information gathered from wearable RFID tags opens a new research opportunity for developing gesture recognition systems and supporting related applications. RF-Kinect is such a system, aiming to track human body movements based on the RF signals emitted from the wearable RFID tags attached to the human body. Taking virtual reality (VR) gaming as one example, we can utilize RF-Kinect to recognize user gestures during the game; meanwhile, RF-Kinect can also identify specific users at any time based on the wearable tag IDs. Therefore, RF-Kinect can easily support multi-player games by identifying the users from their tag IDs and automatically reloading the game progress of each user according to the tag ID. In contrast, even though traditional vision-based approaches can provide good accuracy in games, they usually need to be manually configured for different users and may also suffer from the interference of surrounding people, leading to a bad user experience. Personal fitness, as another example, could also rely on RF-Kinect to associate the recognized activities with the subject for fitness monitoring. Due to the energy harvesting capability of the wearable RFID tags from backscattered signal, RF-Kinect could operate for a long term without the battery supply issues