Data Technologies-CERN School af Compuing 2019 Data Technologes-CERN School of Computing 2019 Reed-Solomon error correction .. Reed-Solomon (simplified)Example .is an error-correcting code that works by oversampling 4 Numbers to encode:{1,-6,4,9}(m=4) (by n points)a polynomial constructed from the data polynomial of degree 3(m-1): .Any m distinct points uniquely determine a polynomial of degree,at most,m-1 The sender determines the polynomial (of degree m-1), y=x3-6x2+4x+9 that represents the m data points.The polynomial is "encoded"by its evaluation at n+m points.If during transmission,the number of corrupted values is n the receiver can recover the original polynomial. -T Implementation examples: ◆n=0 no redundancy We encode the polynomial with n+m=7 points ◆n=1 is Raid5(parity) {-2,9,8,1,-6,-7,4} n=2 is Raid 6(Reed Solomon,double diagonal parity) n=3 is...(Triple parity) httpcnen.wiipedia.oro/nki/Roed-Solomon Data Technologles-CERN School af Compuang 2019 Data Technologies-CERN School of Computing 2019 Reed-Solomon (simplified)Example Error detection vs Error correction .To reconstruct the polynomial,any 4 points are enough:we ◆With Reed-Solomon: can lose any 3 points. If the number of corrupted values is n we can only detect the error If the number of corrupted values is n we can correct the error However,by adding a checksum or hash on each We can have an error on any 2 points that can be corrected: point,we can individually identify the corrupted We need to identify the 5 points "aligned"on the only one values polynomial of degree 3 possible If checksum has been added,Reed-Solomon can correct corrupted values s n htp:/kemel.orgput/inu/kemelpeoplomhparaigs.pa

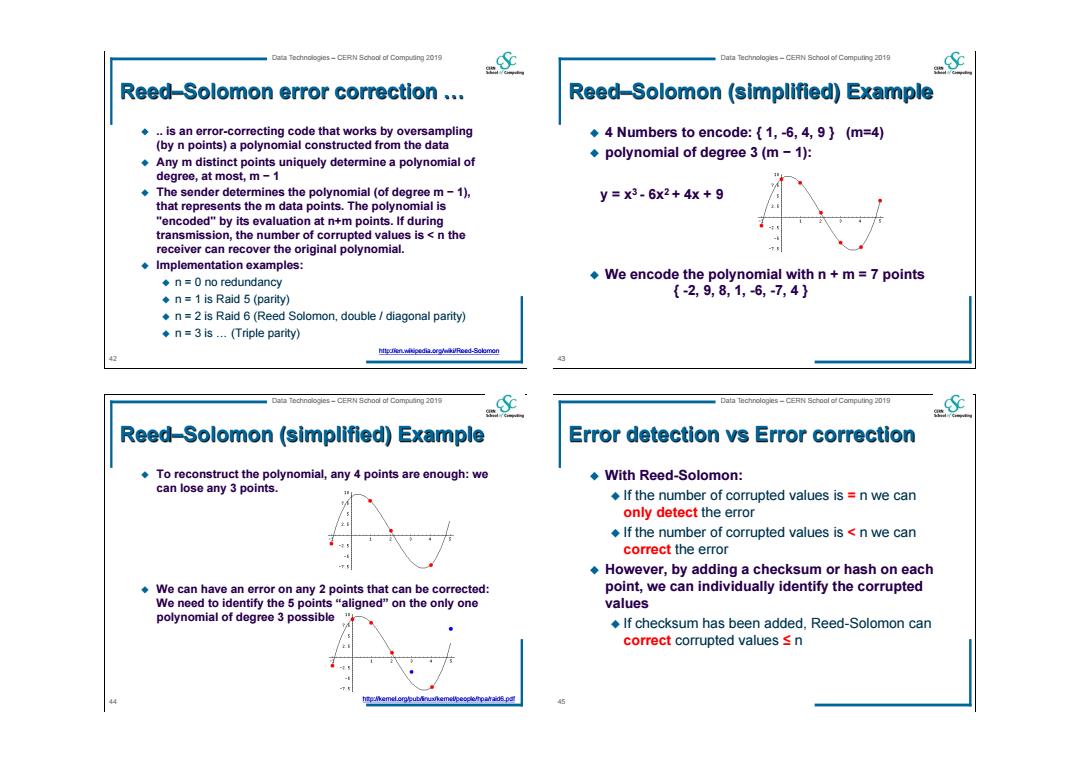

42 Data Technologies – CERN School of Computing 2019 Reed–Solomon error correction … .. is an error-correcting code that works by oversampling (by n points) a polynomial constructed from the data Any m distinct points uniquely determine a polynomial of degree, at most, m − 1 The sender determines the polynomial (of degree m − 1), that represents the m data points. The polynomial is "encoded" by its evaluation at n+m points. If during transmission, the number of corrupted values is < n the receiver can recover the original polynomial. Implementation examples: n = 0 no redundancy n = 1 is Raid 5 (parity) n = 2 is Raid 6 (Reed Solomon, double / diagonal parity) n = 3 is … (Triple parity) http://en.wikipedia.org/wiki/Reed-Solomon 43 Data Technologies – CERN School of Computing 2019 Reed–Solomon (simplified) Example 4 Numbers to encode: { 1, -6, 4, 9 } (m=4) polynomial of degree 3 (m − 1): We encode the polynomial with n + m = 7 points { -2, 9, 8, 1, -6, -7, 4 } y = x3 - 6x2 + 4x + 9 44 Data Technologies – CERN School of Computing 2019 Reed–Solomon (simplified) Example To reconstruct the polynomial, any 4 points are enough: we can lose any 3 points. We can have an error on any 2 points that can be corrected: We need to identify the 5 points “aligned” on the only one polynomial of degree 3 possible http://kernel.org/pub/linux/kernel/people/hpa/raid6.pdf 45 Data Technologies – CERN School of Computing 2019 Error detection vs Error correction With Reed-Solomon: If the number of corrupted values is = n we can only detect the error If the number of corrupted values is < n we can correct the error However, by adding a checksum or hash on each point, we can individually identify the corrupted values If checksum has been added, Reed-Solomon can correct corrupted values ≤ n

Data Technologies-CERN School af Compuaing 2019 Data Technologes-CERN School of Computing 2019 (Sc Rellability calculations Raid 5 reliability With RAID,the final reliability depends on several parameters .The reliability of the hardware ◆The type of RAID Disk are regrouped in sets of equal size.If c is the capacity .The number of disks in the set of the disk and n is the number of disks,the sets will have a Already this gives lot of flexibility in capacity of implementing arbitrary reliability c(n-1) The set is immune to the loss of 1 disk in the set.The loss of 2 disks implies the loss of the entire set content. Data Technologles-CERN School af Compuang 2019 Data Technologies-CERN School of Computing 2019 Some calculations for Raid 5 Some calculations for Raid 5 Disks MTBF is between 3 x 105 and 1.2 x 105 hours Disks MTBF is between 3 x 105 and 1.2 x 10%hours Replacement time of a failed disk is 4 hours Replacement time of a failed disk is 4 hours Probability of 1 disk to fail within the next 4 hours Probability of 1 disk to fail within the next 4 hours 器高0 器高13w Probability to have a failing disk in the next 4 hours in a 15 PB computer centre (15'000 disks) Fhame -1-(1-P)-0.18

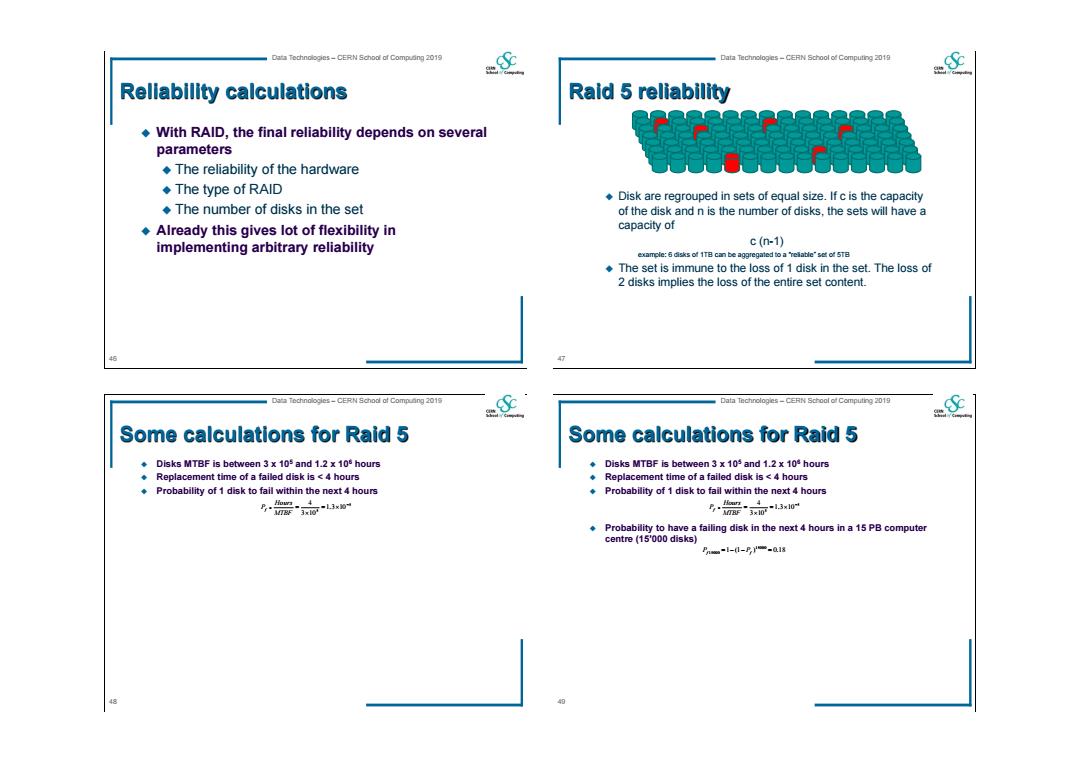

46 Data Technologies – CERN School of Computing 2019 Reliability calculations With RAID, the final reliability depends on several parameters The reliability of the hardware The type of RAID The number of disks in the set Already this gives lot of flexibility in implementing arbitrary reliability 47 Data Technologies – CERN School of Computing 2019 Raid 5 reliability Disk are regrouped in sets of equal size. If c is the capacity of the disk and n is the number of disks, the sets will have a capacity of c (n-1) example: 6 disks of 1TB can be aggregated to a “reliable” set of 5TB The set is immune to the loss of 1 disk in the set. The loss of 2 disks implies the loss of the entire set content. 48 Data Technologies – CERN School of Computing 2019 Some calculations for Raid 5 Disks MTBF is between 3 x 105 and 1.2 x 106 hours Replacement time of a failed disk is < 4 hours Probability of 1 disk to fail within the next 4 hours 5 5 1.3 10 3 10 4 MTBF Hours Pf 49 Data Technologies – CERN School of Computing 2019 Some calculations for Raid 5 Disks MTBF is between 3 x 105 and 1.2 x 106 hours Replacement time of a failed disk is < 4 hours Probability of 1 disk to fail within the next 4 hours Probability to have a failing disk in the next 4 hours in a 15 PB computer centre (15’000 disks) 5 5 1.3 10 3 10 4 MTBF Hours Pf 1 (1 ) 0.18 15000 Pf 15000 Pf

Data Technologies-CERN School af Compuing 2019 Data Technologes-CERN School of Computing 2019 Some calculations for Raid 5 Some calculations for Raid 5 Disks MTBF is between 3 x 105 and 1.2 x 105 hours Disks MTBF is between 3 x 105 and 1.2 x 104 hours Replacement time of a failed disk is <4 hours Replacement time of a failed disk is 4 hours p[A and B)=p(A)*p(B/A) p(Aand B]=p(A"pBUA间 Probability of 1 disk to fail within the next 4 hours Probability of 1 disk to fail within the next 4 hours 道A.B independent:P时A"PB 4 i A.B independent:p(A)"p() Probability to have a failing disk in the next 4 hours in a 15 PB computer Probability to have a failing disk in the next 4 hours in a 15 PB computer centre(15'000 disks) centre (15'000 disks) P-1---a.18 Pn-l-0--a18 Imagine a Raid set of 10 disks.Probability to have one of the remaining Imagine a Raid set of 10 disks.Probability to have one of the remaining disk failing within 4 hours disk failing within 4 hours Pn-1-(1-PI-12xlor B,-1-l-P-l2x10 .However the second failure may not be independent from the first one. There is no way to calculate this probability I We can arbitrarily increase it by two orders of magnitude to account the dependencies (over temperature,high noise,EMP,high voltage,faulty common controller,.... P-1-I-P-00119 Data Technologles-CERN School af Compuang 2019 Data Technologies-CERN School of Computing 2019 Some calculations for Raid 5 Raid 6 reliability Disks MTBF is between 3 x 105 and 1.2 x 105 hours Replacement time of a failed disk is <4 hours p(AandB)=pWA'P时BA Probability of 1 disk to fail within the next 4 hours 器高 量B independent:pAy"p周 .Probability to have a failing disk in the next 4 hours in a 15 PB computer centre (15'000 disks) Proee-1-(1-P,y-0.18 Disk are regrouped in sets of arbitrary size.If c is the capacity of the disk and n is the number of disks.the sets Imagine a Raid set of 10 disks.Probability to have one of the remaining will have a capacity of disk failing within 4 hours P.1-1-PP-12xl0 c(n-2) .However the second failure may not be independent from the first one. example:12 disks of 1TB can be aggregated to a"reliable"set of 10TB There is no way to calculate this probability I We can arbitrarily increase it .The set is immune to the loss of 2 disks in the set.The loss by two orders of magnitude to account the dependencies (over of 3 disks implies the loss of the entire set content. temperature,high noise,EMP,high voltage,faulty common controller,.... -l-0--a019 Probability to lose computer centre data in the next 4 hours Probability to lose data in the next 10 years 月.-l-l-R-l-0a1 53

50 Data Technologies – CERN School of Computing 2019 Some calculations for Raid 5 Disks MTBF is between 3 x 105 and 1.2 x 106 hours Replacement time of a failed disk is < 4 hours Probability of 1 disk to fail within the next 4 hours Probability to have a failing disk in the next 4 hours in a 15 PB computer centre (15’000 disks) Imagine a Raid set of 10 disks. Probability to have one of the remaining disk failing within 4 hours 5 5 1.3 10 3 10 4 MTBF Hours Pf 1 (1 ) 0.18 15000 Pf 15000 Pf 9 4 9 1 (1 ) 1.2 10 Pf Pf p( A and B ) = p(A) * p(B/A) if A,B independent : p(A) * p(B) 51 Data Technologies – CERN School of Computing 2019 Some calculations for Raid 5 Disks MTBF is between 3 x 105 and 1.2 x 106 hours Replacement time of a failed disk is < 4 hours Probability of 1 disk to fail within the next 4 hours Probability to have a failing disk in the next 4 hours in a 15 PB computer centre (15’000 disks) Imagine a Raid set of 10 disks. Probability to have one of the remaining disk failing within 4 hours However the second failure may not be independent from the first one. There is no way to calculate this probability ! We can arbitrarily increase it by two orders of magnitude to account the dependencies (over temperature, high noise, EMP, high voltage, faulty common controller, ....) 5 5 1.3 10 3 10 4 MTBF Hours Pf 1 (1 ) 0.18 15000 Pf 15000 Pf 9 4 9 1 (1 ) 1.2 10 Pf Pf 1 (1 ) 0.0119 900 Pf 9corrected Pf p( A and B ) = p(A) * p(B/A) if A,B independent : p(A) * p(B) 52 Data Technologies – CERN School of Computing 2019 Some calculations for Raid 5 Disks MTBF is between 3 x 105 and 1.2 x 106 hours Replacement time of a failed disk is < 4 hours Probability of 1 disk to fail within the next 4 hours Probability to have a failing disk in the next 4 hours in a 15 PB computer centre (15’000 disks) Imagine a Raid set of 10 disks. Probability to have one of the remaining disk failing within 4 hours However the second failure may not be independent from the first one. There is no way to calculate this probability ! We can arbitrarily increase it by two orders of magnitude to account the dependencies (over temperature, high noise, EMP, high voltage, faulty common controller, ....) Probability to lose computer centre data in the next 4 hours Probability to lose data in the next 10 years 5 5 1.3 10 3 10 4 MTBF Hours Pf 1 (1 ) 0.18 15000 Pf 15000 Pf 9 4 9 1 (1 ) 1.2 10 Pf Pf 4 15000 9 6.16 10 Ploss Pf Pf corrected 1 (1 ) 1-10 1 10 365 6 -21 10 Ploss yrs Ploss 1 (1 ) 0.0119 900 Pf 9corrected Pf p( A and B ) = p(A) * p(B/A) if A,B independent : p(A) * p(B) 53 Data Technologies – CERN School of Computing 2019 Raid 6 reliability Disk are regrouped in sets of arbitrary size. If c is the capacity of the disk and n is the number of disks, the sets will have a capacity of c (n-2) example: 12 disks of 1TB can be aggregated to a “reliable” set of 10TB The set is immune to the loss of 2 disks in the set. The loss of 3 disks implies the loss of the entire set content

Data Technologies-CERN School af Compuing 2019 Data Technologes-CERN School of Computing 2019 Same calculations for Raid 6 Arbitrary reliability Probability of 1 disk to fail within the next 4 hours RAID is "disks"based.This lacks of granularity For increased flexibility,an alternative would be Imagine a raid set of 10 disks.Probability to have one of the to use files...but files do not have constant size remaining 9 disks failing within 4 hours(increased by two orders ◆File“chunks”(or“blocks")is the solution of magnitudes) Pn-l-1-P✉1.19x1r Split files in chunks of size"s" Probability to have another of the remaining 8 disks failing within Group them in sets of"m"chunks 4 hours (also increased by two orders of magnitudes) P-1-(1-P)-1.06x10 ◆For each group of"m”chunks,generate"n" Probability to lose data in the next 4 hours additional chunks so that 月-Primoe PrmxPrm-229x10r For any set of 'm"chunks chosen among the "m+n"you ca reconstruct the missing "n"chunks Probability to lose data in the next 10 years Scatter the "m+n"chunks on independent storage 乃ao-1-1-月产-0394 Arbitrary relability with theh Data Technologles-CERN School af Compuaing 2019 Data Technologies-CERN School of Computing 2019 based solution Analogy with the gambling world We just demonstrated that you can achieve "arbitrary reliability"at ◆The reliability is independent form the size“s”which is the cost of an "arbitrary low"amount of disk space.This is possible arbitrary. because you increase the amount of data you accept loosing when this rare event happens. Note:both large and small"s"impact performance In the gambling world there are several playing schemes that Whatever the reliability of the hardware is,the system is allows you to win an arbitrary amount of money with an arbitrary immune to the loss of"n"simultaneous failures from pools probability. of"m+n"storage chunks Example:you can easily win 100 Euros at>99%probability... Both "m"and "n"are arbitrary.Therefore arbitrary reliability By playing up to 7 times on the "Red"of a French Roulette and doubling the bet unti you win. can be achieved The probability of not having a Red"for 7 times is (19/37)70.0094) The fraction of raw storage space loss is n /(n+m) .You just need to take the risk of loosing 12'700 euros with a 0.94%probability .Note that space loss can also be reduced arbitrarily by increasing m 4值5% 1c0 1.5% 180 At the cost of increasing the amount of data loss if this would 10 ever happen 10 6% 50 三 00 6 10 3157% 孩17% 180 13路 6320 160 D.94% 12780

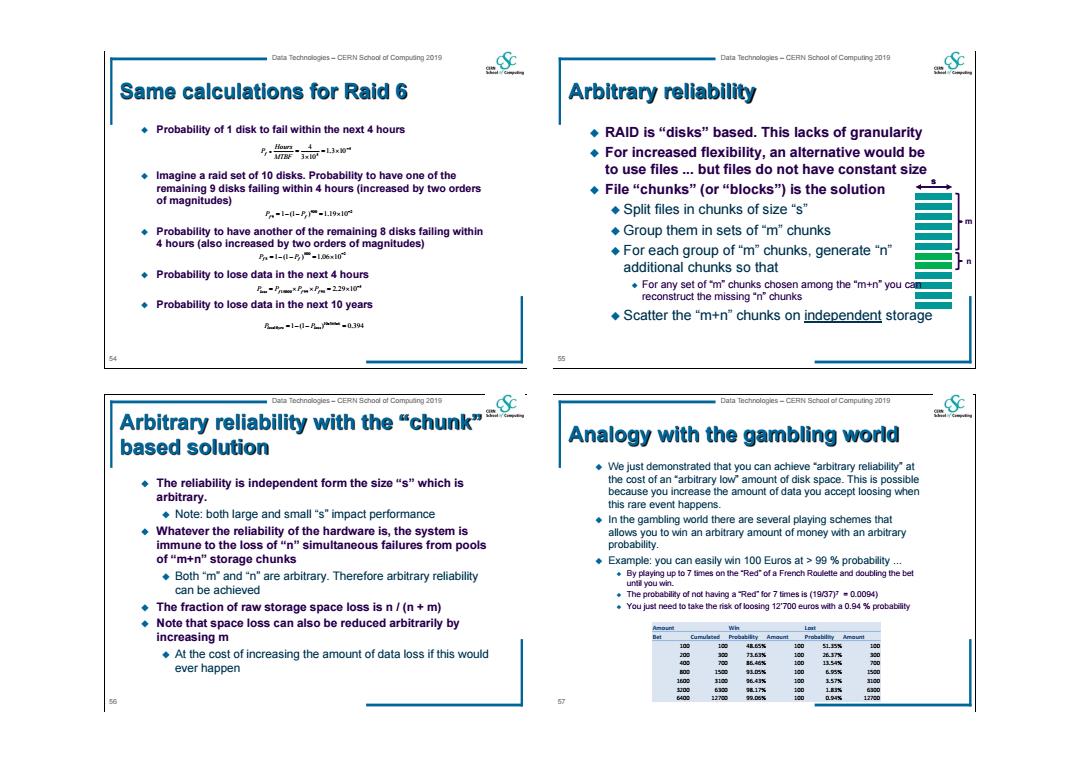

54 Data Technologies – CERN School of Computing 2019 Same calculations for Raid 6 Probability of 1 disk to fail within the next 4 hours Imagine a raid set of 10 disks. Probability to have one of the remaining 9 disks failing within 4 hours (increased by two orders of magnitudes) Probability to have another of the remaining 8 disks failing within 4 hours (also increased by two orders of magnitudes) Probability to lose data in the next 4 hours Probability to lose data in the next 10 years 5 5 1.3 10 3 10 4 MTBF Hours Pf 900 2 9 1 (1 ) 1.19 10 Pf Pf 5 15000 99 98 2.29 10 Ploss Pf Pf Pf 1 (1 ) 0.394 10 365 6 10 Ploss yrs Ploss 800 2 8 1 (1 ) 1.06 10 Pf Pf 55 Data Technologies – CERN School of Computing 2019 s Arbitrary reliability RAID is “disks” based. This lacks of granularity For increased flexibility, an alternative would be to use files ... but files do not have constant size File “chunks” (or “blocks”) is the solution Split files in chunks of size “s” Group them in sets of “m” chunks For each group of “m” chunks, generate “n” additional chunks so that For any set of “m” chunks chosen among the “m+n” you can reconstruct the missing “n” chunks Scatter the “m+n” chunks on independent storage n s m 56 Data Technologies – CERN School of Computing 2019 Arbitrary reliability with the “chunk” based solution The reliability is independent form the size “s” which is arbitrary. Note: both large and small “s” impact performance Whatever the reliability of the hardware is, the system is immune to the loss of “n” simultaneous failures from pools of “m+n” storage chunks Both “m” and “n” are arbitrary. Therefore arbitrary reliability can be achieved The fraction of raw storage space loss is n / (n + m) Note that space loss can also be reduced arbitrarily by increasing m At the cost of increasing the amount of data loss if this would ever happen 57 Data Technologies – CERN School of Computing 2019 Analogy with the gambling world We just demonstrated that you can achieve “arbitrary reliability” at the cost of an “arbitrary low” amount of disk space. This is possible because you increase the amount of data you accept loosing when this rare event happens. In the gambling world there are several playing schemes that allows you to win an arbitrary amount of money with an arbitrary probability. Example: you can easily win 100 Euros at > 99 % probability ... By playing up to 7 times on the “Red” of a French Roulette and doubling the bet until you win. The probability of not having a “Red” for 7 times is (19/37)7 = 0.0094) You just need to take the risk of loosing 12’700 euros with a 0.94 % probability Amount Win Lost Bet Cumulated Probability Amount Probability Amount 100 100 48.65% 100 51.35% 100 200 300 73.63% 100 26.37% 300 400 700 86.46% 100 13.54% 700 800 1500 93.05% 100 6.95% 1500 1600 3100 96.43% 100 3.57% 3100 3200 6300 98.17% 100 1.83% 6300 6400 12700 99.06% 100 0.94% 12700

Data Technologies-CERN School of Compuaing 2019 Data Technologes-CERN School of Computing 2019 Practical comments Chunk transfers ◆n can be.. Among many protocols,Bittorrent is the most popular ◆1=Parity .An SHA1 hash(160 bit digest)is created for each chunk 2=Parity Reed-Solomon,double parity 3=Reed Solomon,ZFS triple parity All digests are assembled in a"torrent file"with all relevant m chunks of any (m+n)sets are enough to obtain the information.Must be metadata information saved on independent media Torrent files are published and registered with a tracker Performance can depend on m (and thus on s,the size of the chunks):The which maintains lists of the clients currently sharing the larger m is,the more the reading can be parallefized torrent's chunks Unfil the client bandwidth is reached For n>2 Reed Solomon has a computational impact affecting performances In particular,torrent files have: Alternate encoding algorithms are available requiring z chunks to reconstruct an"announce"section,which specifies the URL of the the data,being m<z<m+n (see example later on with LDPC). tracker These guarantees high performance at the expenses of additional storage When m=z we fall back in the optimal"storage scenario an"info"section,containing(suggested)names for the files, their lengths,the list of SHA-1 digests .Reminder:it is the client's duty to reassemble the initial file and therefore it is the client that always verifies the integrity of the data received Data Technologles-CERN School af Compuaing 2019 Data Technologles-CERN School of Computing 2019 Reassembling the chunks Ensure integrity,identify corruptions You must be able to identify broken files .A hash is required for every file. You must be able to identify broken chunks A hash for every chunk (example SHA1 160 bit digest)guarantees chunks integrity. It tells you the corrupted chunks and allows you to correct n errors (instead of n-1 if you would not know which chunks are corrupted) File hash ■ ash or checksum infrastnuchure

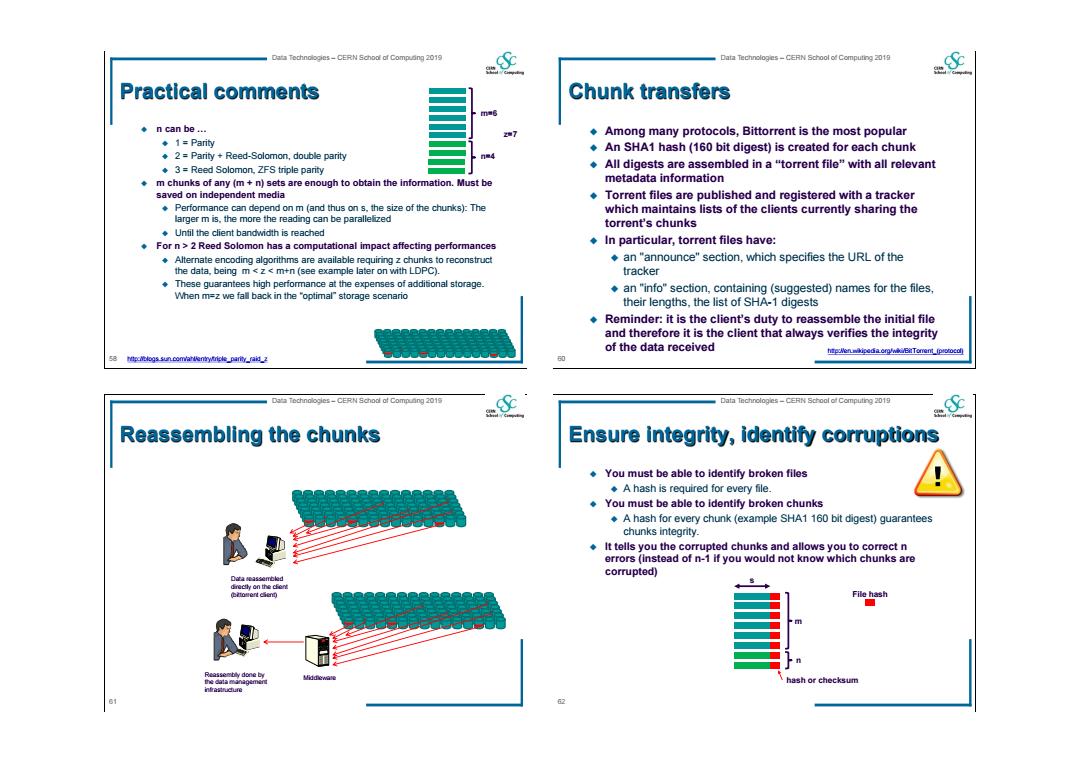

58 Data Technologies – CERN School of Computing 2019 Practical comments n can be … 1 = Parity 2 = Parity + Reed-Solomon, double parity 3 = Reed Solomon, ZFS triple parity m chunks of any (m + n) sets are enough to obtain the information. Must be saved on independent media Performance can depend on m (and thus on s, the size of the chunks): The larger m is, the more the reading can be parallelized Until the client bandwidth is reached For n > 2 Reed Solomon has a computational impact affecting performances Alternate encoding algorithms are available requiring z chunks to reconstruct the data, being m < z < m+n (see example later on with LDPC). These guarantees high performance at the expenses of additional storage. When m=z we fall back in the “optimal” storage scenario n=4 m=6 http://blogs.sun.com/ahl/entry/triple_parity_raid_z z=7 60 Data Technologies – CERN School of Computing 2019 Chunk transfers Among many protocols, Bittorrent is the most popular An SHA1 hash (160 bit digest) is created for each chunk All digests are assembled in a “torrent file” with all relevant metadata information Torrent files are published and registered with a tracker which maintains lists of the clients currently sharing the torrent’s chunks In particular, torrent files have: an "announce" section, which specifies the URL of the tracker an "info" section, containing (suggested) names for the files, their lengths, the list of SHA-1 digests Reminder: it is the client’s duty to reassemble the initial file and therefore it is the client that always verifies the integrity of the data received http://en.wikipedia.org/wiki/BitTorrent_(protocol) 61 Data Technologies – CERN School of Computing 2019 Reassembling the chunks Data reassembled directly on the client (bittorrent client) Reassembly done by the data management infrastructure Middleware 62 Data Technologies – CERN School of Computing 2019 Ensure integrity, identify corruptions You must be able to identify broken files A hash is required for every file. You must be able to identify broken chunks A hash for every chunk (example SHA1 160 bit digest) guarantees chunks integrity. It tells you the corrupted chunks and allows you to correct n errors (instead of n-1 if you would not know which chunks are corrupted) n s m hash or checksum File hash