The Complexity of Algorithms 17 The assumption is made that C is not in the array (and thus the complexity is the worst case).For n =0,one test (U<L)and one assignment are needed.For n=1,one test (U<L),two arithmetic and an assignment (index :=(L+U)div 2), one array access and an assignment (element:=A[index]),one test (element =C) and finally a recursive call are needed,the latter requiring one subtraction,one assignment and a control transfer to the procedure.This assumes the worst case when C is not in the array.When n>1,a similar expression can be formed.Thus 2 n=0 T(n)= 13 n=1 11+Tn/2)n>1 To solve this recurrence relation we proceed as follows: T(n=11+T(n/2) =11+11+T(n/4)=2×11+T =3×11+1 23 =k×11+T n So,whenn=1,we can solve the recurrence relation by substituting the value of T(1)in the above formula. )k=1→n=2k→k=log2n Therefore T(n)=111og2n+13 and T(n)=O(logzn) Recurrence relations such as this one occur very frequently in the analysis of computer algorithms.For the purpose of the algorithms in the remainder of this book,we can generalise this result and give the following formulae for solving similar recurrence relations: a,bc≥0 b n=1 1) T(n)= a1 bn n>1

18 Abstract Data Types and Algorithms (on a<c Then T(n)= O(n logen) a=C O(n logen) a>c b n=1 (2) T(n)= a()in n>1 0(n2) a<c2 Then T(n)= O(n2logen) a=c2 (O(n logen) a>c2 Using techniques similar to the one used in the b_search function,these formulae are easy to verify (see exercise 1.17). Let us return to the segl function and examine its time complexity.The function A is recursive.Let us first look at the asymptotic complexity of A.If F(n)denotes the time complexity of function A,in a similar way to the b_search above,we can formulate F(n)as 1+1 n=0 F(n)= 1+1+1 n=1 1+1+F(n-1)+F(n-2)+2+1+3n>1 When n =0,one test and one assignment are necessary.For n=1,two tests and one assignment are needed and finally for n>1,two tests,two calls to the same function of sizes n-1 and n-2 (that is,F(n-1)and F(n-2))and one addition, two subtractions and one assignment,are needed.Therefore 2 n=0 F(n)= 3 n=1 (8+F(n-1)+F(n-2) n>1 Now the time for the entire procedure segl can be determined.Let T(n)be the time complexity of seq1.Segl only calls function A n+1 times.Thus T(n)=F0)+F(1)+F(2)+..+F(n)+1 T(n)=1+卫F() =0 To find T(n),first a closed formula should be found for F(n)(in terms of n)and then substituted in T(n).A close examination of the recurrence relation F shows that the techniques used for the b_search function does not yield any results. Instead,for F(n)we have to use some approximation techniques.To begin with let us introduce the recurrence relation R(n)as

The Complexity of Algorithms 19 n=0 R(n)= 1 n=1 (R(n-1)+R(n-2) n>1 It is clear that F(n)>R(n)for all n.Now we should try to find a closed formula for R(n).We can find such a formula using the approximation R(n)=kx for some constants k and x If we substitute this value in the above recurrence relation we get R(n)=R(n-1)+R(n-2) kxn=kxn-1+kxn-2 Dividing both sides by x-2: x2=x+1 x2-x-1=0 x=1±V+4) 2 x=1±V5 2 x=(1+2.223/2)=1.618≈1.62 Thus:R(n)=k (1.62)"4 for some k. Now F(n)>R(n),therefore F(n)>k (1.62)"for some constant k According to the definition of we can assert F(m)=2(1.62m) This indicates that algorithm A requires at least an exponential amount of time. To calculate T(n): T(n)=1+2F(0 =0 T(n)>1+x+x+x3+...+x"wherex=1.62 >xm1-1 x-1 >1.618(xm+1-1) Therefore we conclude that T(n)=(1.62+1).Again this suggests that the procedure seql is an exponential algorithm.However,the procedure seq2 can be

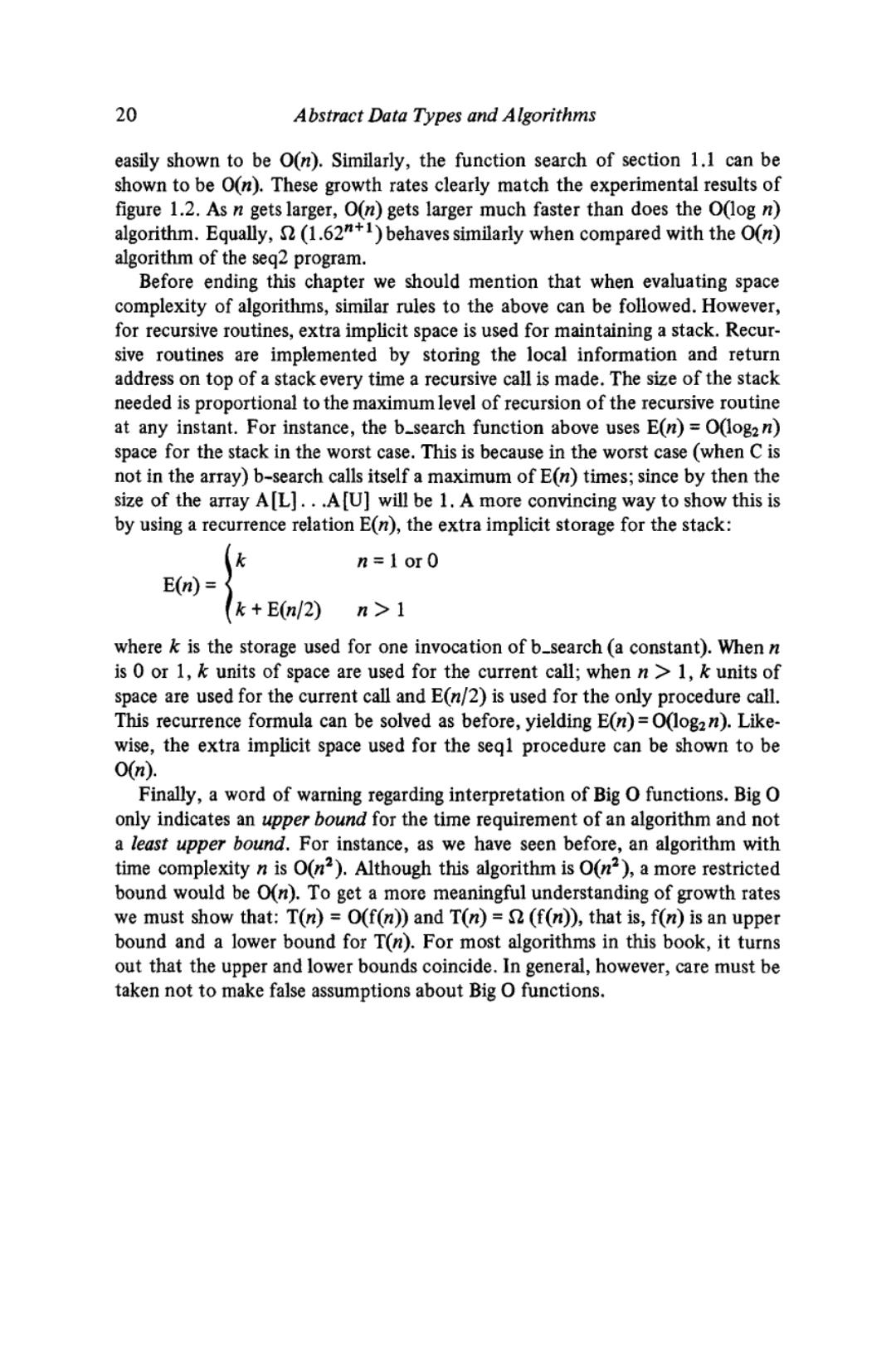

20 Abstract Data Types and Algorithms easily shown to be O(n).Similarly,the function search of section 1.1 can be shown to be O(n).These growth rates clearly match the experimental results of figure 1.2.As n gets larger,O(n)gets larger much faster than does the O(log n) algorithm.Equally,(1.621)behaves similarly when compared with the O(n) algorithm of the seg2 program. Before ending this chapter we should mention that when evaluating space complexity of algorithms,similar rules to the above can be followed.However, for recursive routines,extra implicit space is used for maintaining a stack.Recur- sive routines are implemented by storing the local information and return address on top of a stack every time a recursive call is made.The size of the stack needed is proportional to the maximum level of recursion of the recursive routine at any instant.For instance,the b_search function above uses E(n)=O(log2n) space for the stack in the worst case.This is because in the worst case (when C is not in the array)b-search calls itself a maximum of E(n)times;since by then the size of the array A[L]...A[U]will be 1.A more convincing way to show this is by using a recurrence relation E(n),the extra implicit storage for the stack: k n=1 or0 E(n)= k+E(n/2) n>1 where k is the storage used for one invocation of b_search (a constant).When n is 0 or 1,k units of space are used for the current call;when n>1,k units of space are used for the current call and E(n/2)is used for the only procedure call. This recurrence formula can be solved as before,yielding E(n)=O(log2n).Like- wise,the extra implicit space used for the seql procedure can be shown to be 0(n). Finally,a word of warning regarding interpretation of Big O functions.Big O only indicates an upper bound for the time requirement of an algorithm and not a least upper bound.For instance,as we have seen before,an algorithm with time complexity n is O(n2).Although this algorithm is O(n2),a more restricted bound would be O(n).To get a more meaningful understanding of growth rates we must show that:T(n)=O(f(n))and T(n)=(f(n)),that is,f(n)is an upper bound and a lower bound for T(n).For most algorithms in this book,it turns out that the upper and lower bounds coincide.In general,however,care must be taken not to make false assumptions about Big O functions

The Complexity of Algorithms 21 Exercises 1.1.What does the following procedure do? (A is an ordered array【l..n】of integer x) procedure search (C:integer;var I integer); var J integer; found boolean; begin J:=1;I =0;found false;initialise J,I and found while not found and J <n do ifA[J】=c then found :true else J :J 1; If found then I :J end; How does this procedure compare with the one given in section 1.1? 1.2.In the sequential search program of section 1.1,what are the best-case, worst-case and average-case complexities in terms of assignment and test operations if C may not be in the array?State your assumptions. 1.3.Modify the function search of section 1.1 so that it would be suitable for unordered arrays.What is the average time complexity of this new func- tion? 1.4.In the search problem of section 1.1,assume that the array A is defined A:array [1..n]of integer;in non-decreasing order Are the two sequential and binary search algorithms equivalent?If not, why not? 1.5.In the sequential search program,modify the list as A:array [1..nPlus1]of integer; where nPlus1 n 1 and the array is not in sorted order.Naturally,a slightly modified version of the function search can be used.An alternative solution would be:to search for C,first execute A[nPlus1]:=C;then search sequentially for C.This loop is bound to find C.Finally if C is found in A[nPlus1],the search has failed.Implement these two programs and compare their execution times for a large sequence (n 1000).Which one is faster and why? 1.6. Consider the following two programs which find A[m],the minimum element of an unordered array A[1..n]: What are average-case,worst-case and best-case time complexities of these algorithms?Which one is fastest?Asymptotically?