第3卷第5期 智能系统学报 Vol 3 No 5 2008年10月 CAA I Transactions on Intelligent Systems 0ct2008 基于局部SM分类器的表情识别方法 孙正兴,徐文晖 (南京大学计算机软件新技术国家重点实验室,江苏南京210093) 摘要:提出了一种新的视频人脸表情识别方法.该方法将识别过程分成人脸表情特征提取和分类2个部分,首先采用 基于点跟踪的活动形状模型(ASM)从视频人脸中提取人脸表情几何特征:然后,采用一种新的局部支撑向量机分类器对 表情进行分类.在Cohn-Kanade数据库上对N、SM、NN-SM和LSM4种分类器的比较实验结果验证了所提出方 法的有效性 关键字:人脸表情识别:局部支撑向量机活动形状模型;几何特征 中图分类号:IP391文献标识码:A文章编号:1673-4785(2008)050455-12 Facal expression recogn ition based on local SVI classifiers SUN Zheng-xing,XU Wen-hui (State Key Lab or Novel Sofware Technobgy,Nanjing University,Nanjing 210093,China) Abstract:This paper presents a novel technique developed for the identification of facial expressions in video sources The method uses two steps facial expression feature extraction and expression classification Firstwe used an active shape model (ASM)based on a facial point tracking system to extract the geometric features of facial ex- pressions in videos Then a new type of local support vecpormachine (LSVM)was created to classify the facial ex- pressions Four different classifiers using KNN,SVM,KNN-SVM,and LSVM were compared with the new LSVM. The results on the Cohn-Kanade database showed the effectiveness of our method Keywords:facial expression recognition;bcal SVM;active shape model,geometry feature Automatic facial expression recogniton has attrac-erature to automate the recognition of facial expressions ted a lot of attention in recent years due o its potential in mug shots or video sequences Early methods used ly vital role in applications,particularly those using mug shots of expressions that captured characteristic human centered interfaces Many applications,such as mages at the apex However,according o psy virtual reality,video-conferencing.user pofiling.and chobgists,analysis of video sequences produces customer satisfaction studies for broadcast and web more accurate and robust recognition of facial expres- services,require efficient facial expresson recognition sions These methods can be categorized based on the in order to achieve their desired results Therefore,the data and features they use,as well as the classifiers mpact of facial expression recognition on the above-created for expression recognition In summary,the mentoned applications is constantly growing classifiers include Nearest Neighbor classifier,Neu- Several app oaches have been reported in the lit ral Neworks,SVM,Bayesian Neworks,Ada- Boost classifie and hidden Markov mode The 收稿日期:2008-07-11 data used for automated facial expresson analysis 基金项目:National Hig-Technolgy Research and Develpment Program (863)of China (2007AA01Z334);National Natural Science (AFEA)can be geometric features or texture features, Foundaton of China(69903006,60373065,0721002). 通信作者:孙正兴.Email:sx@nju.edu.cn for each there are different feature extracton methods Though facial expression recognition has made remark- 1994-2009 China Academic Journal Electronic Publishing House.All rights reserved.http://www.cnki.net

第 3卷第 5期 智 能 系 统 学 报 Vol. 3 №. 5 2008年 10月 CAA I Transactions on Intelligent System s Oct. 2008 基于局部 SVM分类器的表情识别方法 孙正兴 ,徐文晖 (南京大学 计算机软件新技术国家重点实验室 ,江苏 南京 210093) 摘 要 :提出了一种新的视频人脸表情识别方法. 该方法将识别过程分成人脸表情特征提取和分类 2个部分 ,首先采用 基于点跟踪的活动形状模型 (ASM)从视频人脸中提取人脸表情几何特征 ;然后 ,采用一种新的局部支撑向量机分类器对 表情进行分类. 在 Cohn2Kanade数据库上对 KNN、SVM、KNN2SVM和 LSVM 4种分类器的比较实验结果验证了所提出方 法的有效性. 关键字 :人脸表情识别 ;局部支撑向量机 ;活动形状模型 ;几何特征 中图分类号 : TP391 文献标识码 : A 文章编号 : 167324785 (2008) 0520455212 Fac ial expression recogn ition based on local SVM classifiers SUN Zheng2xing, XU W en2hui ( State Key Lab for Novel Software Technology, Nanjing University, Nanjing 210093, China) Abstract: This paper p resents a novel technique developed for the identification of facial exp ressions in video sources. The method uses two step s: facial exp ression feature extraction and exp ression classification. Firstwe used an active shape model (ASM) based on a facial point tracking system to extract the geometric features of facial ex2 p ressions in videos. Then a new type of local support vectormachine (LSVM) was created to classify the facial ex2 p ressions. Four different classifiers using KNN, SVM, KNN2SVM, and LSVM were compared with the new LSVM. The results on the Cohn2Kanade database showed the effectiveness of our method. Keywords: facial exp ression recognition; local SVM; active shape model; geometry feature 收稿日期 : 2008207211. 基金项目 : National High Technology Research and Development Program (863) of China ( 2007AA01Z334) ; National Natural Science Automatic facial exp ression recognition has attrac2 ted a lot of attention in recent years due to its potential2 ly vital role in app lications, particularly those using human centered interfaces. Many app lications, such as virtual reality, video2conferencing, user p rofiling, and customer satisfaction studies for broadcast and web services, require efficient facial exp ression recognitio Foundation of China (69903006, 60373065, 0721002) . 通信作者 :孙正兴. E2mail: szx@nju.edu.cn n in order to achieve their desired results. Therefore, the impact of facial exp ression recognition on the above2 mentioned app lications is constantly growing. Several app roaches have been reported in the lit2 erature to automate the recognition of facial exp ressions in mug shots or video sequences. Early methods used mug shots of exp ressions that cap tured characteristic images at the apex [ 122 ] . However, according to p sy2 chologists [ 3 ] , analysis of video sequences p roduces more accurate and robust recognition of facial exp res2 sions. These methods can be categorized based on the data and features they use, as well as the classifiers created for exp ression recognition. In summary, the classifiers include Nearest Neighbor classifier [ 4 ] , Neu2 ral Networks [ 5 ] , SVM [ 6 ] , Bayesian Networks [ 7 ] , Ada2 Boost classifier [ 6 ] and hidden Markov model [ 8 ] . The data used for automated facial exp ression analysis (AFEA) can be geometric features or texture features, for each there are different feature extraction methods. Though facial exp ression recognition has made remark2

·456· 智能系统学报 第3卷 able progress,recognizing facial expressions with high active shape model (ASM)based tracking In each accuracy is a difficult problem!AFEA and its effec- video sequence,the first frame shows a neutral exp res- tive use in computing presents a number of difficult sion while the last frame shows an expression with max- challenges In general,wo main processes can be dis-mum intensity For each frame,we extract geometric tinguished in tackling the problem:1)ldentification of features as a static feature vector,which represents fa- features that contain useful infomation and reduction of cial contour infomation during changes of expression the dmensions of feature vectors in order to design bet- At the end,by subtracting the static features of the first ter classifiers 2)Design and mplementation of robust frame from those of the last,we get dynam ic geometric classifiers that can leam the underlying models of facial infomation or classifier input Then an LSVM classifi- expressons er is used for classification into the six basic expression We propose a new classifier for facial expression types recognition,which comes from the ideas used in the The rest of the paper is organized as follows Sec- KNN-SVM algorithm.Ref [10 proposed this algo- tion 2 reviews facial expression recognition studies In rithm for visual object recognition This method com- Secton 3 we briefly describe our facial point tracking bines SVM and KNN classifiers and iplements accu- system and the features extracted for classification of rate local classification by using KNN for selecting rele- facial expressions Section 4 describes the Local SVM classifier used for classifying the six basic facial ex- vant training data for the SVM.In order to classify a pressions in the video sequences Experments,per sample x,it first selects k training samples nearest to fomance evaluations,and discussions are given in sec- the sample x,and then uses these k samples o train an tion 5.Finally,section 6 gives conclusions about our SVM model which is then used to make decisions work KNN-SVM builds a maxmal margin classifier in the neighborhood of a test sample using the feature space 1 Rela ted work induced by the SVM's kemel function But this classifi- Psychological studies have suggested that facial er discards nearest-neighbor searches from the SVM motion is fundamental to the recognition of facial ex- leaming algorithm.Once the K-nearest neighbors have pression Expermnents conducted by Bassili demon- been identified,the SVM algorithm completely ignores strated that humans do a better job recognizing exp res- their si ilarities to the given test example So we pres- sions from dynam ic mages as opposed to mug shots ent a new classifier based on KNN-SVM,called bcal Facial expressions are usually described in to ways SVM (LSVM),which incorporates neighborhood infor as combinations of action units,or as universal expres- mation into SVM leaming The principle behind LSVM sions The facial action coding system (FACS)was is that it reduces the mpact of support vectors located devebped to describe facial exp ressions using a combi- far away from a given test example nation of action units (AU)Each action unit co In this paper,a system for automatically recogniz responds to specific muscular activity that produces ing the six universal facial expressions anger,dis- momentary changes in facial appearance Universal ex- gust,fear,joy,sadness,and surprise)in video se- pressions are studied as a complete representation of a quences using geometrical feature and a novel class of specific type of intemal emotion,without breaking up SVM called LSVM is proposed The system detects expressions into muscular units Most commonly stud- frontal faces in video sequences and then geometrical ied universal expressions include happ iness,anger, features of some key facial points are extracted using sadness,fear,and disgust In this study,universal 1994-2009 China Academic Journal Electronic Publishing House.All rights reserved.http://www.cnki.net

able p rogress, recognizing facial exp ressions with high accuracy is a difficult p roblem [ 9 ] . AFEA and its effec2 tive use in computing p resents a number of difficult challenges. In general, two main p rocesses can be dis2 tinguished in tackling the p roblem: 1) Identification of features that contain useful information and reduction of the dimensions of feature vectors in order to design bet2 ter classifiers. 2) Design and imp lementation of robust classifiers that can learn the underlyingmodels of facial exp ressions. W e p ropose a new classifier for facial exp ression recognition, which comes from the ideas used in the KNN2SVM algorithm. Ref. [ 10 ] p roposed this algo2 rithm for visual object recognition. This method com2 bines SVM and KNN classifiers and imp lements accu2 rate local classification by using KNN for selecting rele2 vant training data for the SVM. In order to classify a samp le x, it first selects k training samp les nearest to the samp le x, and then uses these k samp les to train an SVM model which is then used to make decisions. KNN2SVM builds a maximal margin classifier in the neighborhood of a test samp le using the feature space induced by the SVM’s kernel function. But this classifi2 er discards nearest2neighbor searches from the SVM learning algorithm. Once the K2nearest neighbors have been identified, the SVM algorithm comp letely ignores their sim ilarities to the given test examp le. So we p res2 ent a new classifier based on KNN2SVM, called local SVM (LSVM) , which incorporates neighborhood infor2 mation into SVM learning. The p rincip le behind LSVM is that it reduces the impact of support vectors located far away from a given test examp le. In this paper, a system for automatically recogniz2 ing the six universal facial exp ressions ( anger, dis2 gust, fear, joy, sadness, and surp rise) in video se2 quences using geometrical feature and a novel class of SVM called LSVM is p roposed. The system detects frontal faces in video sequences and then geometrical features of some key facial points are extracted using active shape model (ASM ) based tracking. In each video sequence, the first frame shows a neutral exp res2 sion while the last frame shows an exp ression with max2 imum intensity. For each frame, we extract geometric features as a static feature vector, which rep resents fa2 cial contour information during changes of exp ression. A t the end, by subtracting the static features of the first frame from those of the last, we get dynam ic geometric information for classifier input. Then an LSVM classifi2 er is used for classification into the six basic exp ression types. The rest of the paper is organized as follows. Sec2 tion 2 reviews facial exp ression recognition studies. In Section 3 we briefly describe our facial point tracking system and the features extracted for classification of facial exp ressions. Section 4 describes the Local SVM classifier used for classifying the six basic facial ex2 p ressions in the video sequences. Experiments, per2 formance evaluations, and discussions are given in sec2 tion 5. Finally, section 6 gives conclusions about our work. 1 Rela ted work Psychological studies have suggested that facial motion is fundamental to the recognition of facial ex2 p ression. Experiments conducted by Bassili [ 11 ] demon2 strated that humans do a better job recognizing exp res2 sions from dynam ic images as opposed to mug shots. Facial exp ressions are usually described in two ways: as combinations of action units, or as universal exp res2 sions. The facial action coding system ( FACS) was developed to describe facial exp ressions using a combi2 nation of action units (AU) [ 12 ] . Each action unit cor2 responds to specific muscular activity that p roduces momentary changes in facial appearance. Universal ex2 p ressions are studied as a comp lete rep resentation of a specific type of internal emotion, without breaking up exp ressions into muscular units. Most commonly stud2 ied universal exp ressions include happ iness, anger, sadness, fear, and disgust. In this study, universal ·456· 智 能 系 统 学 报 第 3卷

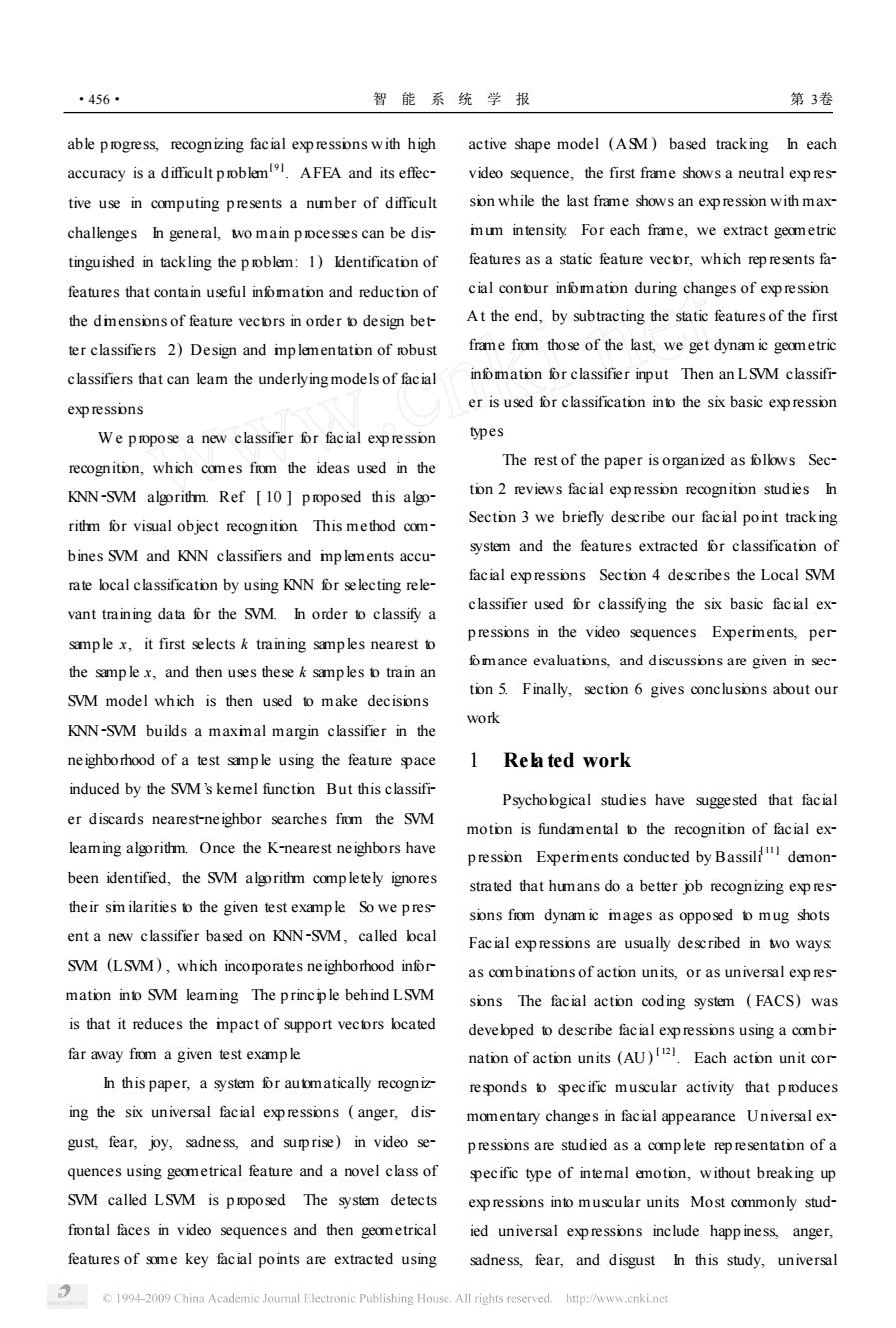

第5期 孙正兴,等:基于局部SM分类器的表情识别方法 ·457- expressons were analyzed using the facial exp ression sions For the static case,a DBN is used,organized in coding system. a tree structure For the dynam ic approach,a multi- Many automated facial expression analysis meth- level hidden Markov models (HMMs)classifier is em- ods have been devebped Masued optical plyed flow (OF)to recognize facial exp ressions He was one These methods are sm ilar in that they first extract of the first to use mage-processing techniques to recog- some features from the mages,then these features are nize facial expressions Black and Yacoobls1 used used as inputs into a classification system,and the out cal parameterized models of mage motion to recover come is one of the pre-selected emotion categories non-rigid motion Once recovered,these parameters They differ mainly in the features extracted fiom the were used as inputs o a rule-based classifier o recog video mages and in the classifiers used to distinguish nize the six basic facial expressions Ref [16]used beteen the different emotions In the follwing sec- bwer face tracking to extract mouth shape features and tions,an automatic geometric feature based method is used them as inputs to an HMM based facial expression proposed,and then LSVM classifiers are used for rec- recognition system recognizing neutral,happy,sad, ognizing facial expressions fiom video sequences and an open mouth).Bartlett autmatically detects 2 Geom etrical fea ture extraction frontal faces in the video stream and classifies them in seven classes in real tme:neutral,anger,disgust, Our work focused on the design of classifiers for fear,joy,sadness,and surprise An exp ression recog- mproving recognition accuracy,follwing the extrac- nizer receives mage regions produced by a face detec- tion of geometric features using a model-based face tor and then a Gabor representation of the facial mage tracking system.That is,the proposed process for fa- region is fomed to be later processed by a bank of cial expression recognition is composed of to steps SVM classifiers Facial feature detection and tracking one AS based geometric infomation extraction;the is based on active InfraRed illum ination in Ref [18], next LSVM based classification Geometric feature in- in order to provide visual infomation under variable fomation extraction is perfomed by ASM based auto- lighting and head motion The classification is per matic locating and tracking,while the classification of fomed using a dynam ic Bayesian netork (DBN).geometric infomation is peromed by an LSVM Classi- COHEN eta popod a methodor static and dy- fier Fig 1 shows the proposed facial expression recog- nam ic segentation and classification of facial expres- nition scheme First frame Distance Six general classification parameters of facial expressions 个 Face video ASM-based Geometric Local SVM sequences tracking features classifiers ◆ Distance Classifier Last frame parameters Samples collection training Fig 1 Process of facial expression recognition for video sequences 1994-2009 China Academic Journal Electronic Publishing House.All rights reserved.http://www.cnki.net

exp ressions were analyzed using the facial exp ression coding system. Many automated facial exp ression analysis meth2 ods have been developed [ 13 ] . Mase [ 14 ] used op tical flow (OF) to recognize facial exp ressions. He was one of the first to use image2p rocessing techniques to recog2 nize facial exp ressions. B lack and Yacoob [ 15 ] used lo2 cal parameterized models of image motion to recover non2rigid motion. Once recovered, these parameters were used as inputs to a rule2based classifier to recog2 nize the six basic facial exp ressions. Ref. [ 16 ] used lower face tracking to extract mouth shape features and used them as inputs to an HMM based facial exp ression recognition system ( recognizing neutral, happy, sad, and an open mouth). Bartlett [ 17 ] automatically detects frontal faces in the video stream and classifies them in seven classes in real time: neutral, anger, disgust, fear, joy, sadness, and surp rise. An exp ression recog2 nizer receives image regions p roduced by a face detec2 tor and then a Gabor rep resentation of the facial image region is formed to be later p rocessed by a bank of SVM classifiers. Facial feature detection and tracking is based on active InfraRed illum ination in Ref. [ 18 ], in order to p rovide visual information under variable lighting and head motion. The classification is per2 formed using a dynam ic Bayesian network (DBN ). COHEN et al [ 18 ] p roposed a method for static and dy2 nam ic segmentation and classification of facial exp res2 sions. For the static case, a DBN is used, organized in a tree structure. For the dynam ic app roach, a multi2 level hidden Markov models (HMM s) classifier is em2 p loyed. These methods are sim ilar in that they first extract some features from the images, then these features are used as inputs into a classification system, and the out2 come is one of the p re2selected emotion categories. They differ mainly in the features extracted from the video images and in the classifiers used to distinguish between the different emotions. In the following sec2 tions, an automatic geometric feature based method is p roposed, and then LSVM classifiers are used for rec2 ognizing facial exp ressions from video sequences. 2 Geom etr ica l fea ture extraction Our work focused on the design of classifiers for imp roving recognition accuracy, following the extrac2 tion of geometric features using a model2based face tracking system. That is, the p roposed p rocess for fa2 cial exp ression recognition is composed of two step s: one ASM based geometric information extraction; the next LSVM based classification. Geometric feature in2 formation extraction is performed by ASM based auto2 matic locating and tracking, while the classification of geometric information is performed by an LSVM Classi2 fier. Fig. 1 shows the p roposed facial exp ression recog2 nition scheme. Fig. 1 Process of facial exp ression recognition for video sequences 第 5期 孙正兴 ,等 :基于局部 SVM分类器的表情识别方法 ·457·

·458· 智能系统学报 第3卷 For each input video sequence,an AdaBoost Where,B is a regulating parameter usually set beteen based face detector is applied to detect frontal and I and 3 according to the desired degree of flexibility in near-frontal faces in the first frame Inside detected the shape model m is the number of retained eigen- faces,our method identifies some mportant facial vectors,and is the eigenvalues of the covariance landmarks using the active shape model (ASM).ASM matrix The intensity model is constructed by compu- automatically bcalizes the facial feature points in the ting the second order statistics of nomalized mage gra- first frame and then trackes the feature points through dients,sampled at each side of the landmarks,perpen- the video frames as the facial expression evolves dicular to the shape's contour,hereinafter referred to as through tme The first frame shows a neutral expres-the profile In other words,the profile is a fixed-size sion while the last frame shows an expression with the vector of values in this case,pixel intensity values) greatest intensity For each frame,we extract distance smpled alng the pependicular o the conour such parameters beween some key facial points At the that the contour passes right through the middle of the end,by subtracting distance parameters from the first perpendicular The matching procedure is an altema- frame from those of the last frame,we get the geomet-tion of mage driven landmark disp lacements and statis- ric features for classification Then a LSVM classifier is tical shape constraining based on the PDM.It is usual- used for classification into the six basic expression ly perfomed in a multi-resolution fashion in order to types enhance the capture range of the algorithm.The land- 2 1 ASM based loca tng and tracking mark displacements are individually detem ined using AM is empboyed to extract shape infomation the intensity model,by m inm izing the Mahalanobis on specific faces in each frame of the video sequence distance beteen the candidate gradient and the model's The use of a face detection algorithm as a prior step has mean the advantage of speeding up the search for the shape To extract facial feature points in case of expres- parameters during ASM based processing ASM is built sion variation,we trained an active shape model from from sets of prom inent points known as landmarks, the JAFFE (Japanese female facial expression)data- computing a point distribution model (PDM)and a ba,which contains219 mages from 10 individual cal mage intensity model around each of those points Japanese females For each subject there are six basic The PDM is constructed by app lying PCA to an aligned facial expressions (anger,disgust,fear,happ iness, set of shapes,each represented by landmarks The o- sadness,suprise)and a neutral face 68 landmarks riginal shapes and their model representation b,(i=1.are used to define the face shape,as shown in Fig 2 2..N)are related by means of the mean shape u and the eigenvector matrixφ: b=φT(u-d,w,=u+中b. (1) To reduce the dmensions of the representation,it is possible to use only the eigenvectors corresponding to the largest eigenvalues Therefore,Equ (1)becomes an approxmation,with an error depending on the mag- nitude of the excluded eigenvalues Furthemore,un- der Gaussian assumptions,each component of the b, vectors is constrained to ensure that only valid shapes are represented,as follows |1≤BNn,1≤i≤N,1≤m≤M2) Fig 2 ASM training smple 1994-2009 China Academic Journal Electronic Publishing House.All rights reserved.http://www.cnki.net

For each input video sequence, an AdaBoost based face detector is app lied to detect frontal and near2frontal faces in the first frame. Inside detected faces, our method identifies some important facial landmarks using the active shape model (ASM). ASM automatically localizes the facial feature points in the first frame and then trackes the feature points through the video frames as the facial exp ression evolves through time. The first frame shows a neutral exp res2 sion while the last frame shows an exp ression with the greatest intensity. For each frame, we extract distance parameters between some key facial points. A t the end, by subtracting distance parameters from the first frame from those of the last frame, we get the geomet2 ric features for classification. Then a LSVM classifier is used for classification into the six basic exp ression types. 2. 1 ASM ba sed loca ting and tracking ASM [ 19 ] is emp loyed to extract shape information on specific faces in each frame of the video sequence. The use of a face detection algorithm as a p rior step has the advantage of speeding up the search for the shape parameters during ASM based p rocessing. ASM is built from sets of p rom inent points known as landmarks, computing a point distribution model (PDM) and a lo2 cal image intensity model around each of those points. The PDM is constructed by app lying PCA to an aligned set of shapes, each rep resented by landmarks. The o2 riginal shapes and their model rep resentation bi ( i = 1, 2, …, N ) are related by means of the mean shape u and the eigenvector matrixφ: bi =φT ( ui - u) , ui = u +φbi . (1) To reduce the dimensions of the rep resentation, it is possible to use only the eigenvectors corresponding to the largest eigenvalues. Therefore, Equ. (1) becomes an app roximation, with an error depending on the mag2 nitude of the excluded eigenvalues. Furthermore, un2 der Gaussian assump tions, each component of the bi vectors is constrained to ensure that only valid shapes are rep resented, as follows: | b m i | ≤β λm , 1 ≤ i ≤N, 1 ≤m ≤M. (2) W here, β is a regulating parameter usually set between 1 and 3 according to the desired degree of flexibility in the shape model. m is the number of retained eigen2 vectors, and λm is the eigenvalues of the covariance matrix. The intensity model is constructed by compu2 ting the second order statistics of normalized image gra2 dients, samp led at each side of the landmarks, perpen2 dicular to the shape’s contour, hereinafter referred to as the p rofile. In other words, the p rofile is a fixed2size vector of values ( in this case, p ixel intensity values) samp led along the perpendicular to the contour such that the contour passes right through the m iddle of the perpendicular. The matching p rocedure is an alterna2 tion of image driven landmark disp lacements and statis2 tical shape constraining based on the PDM. It is usual2 ly performed in a multi2resolution fashion in order to enhance the cap ture range of the algorithm. The land2 mark disp lacements are individually determ ined using the intensity model, by m inim izing the Mahalanobis distance between the candidate gradient and the model’s mean. To extract facial feature points in case of exp res2 sion variation, we trained an active shape model from the JAFFE (Japanese female facial exp ression) data2 base [ 19 ] , which contains 219 images from 10 individual Japanese females. For each subject there are six basic facial exp ressions ( anger, disgust, fear, happ iness, sadness, surp rise) and a neutral face. 68 landmarks are used to define the face shape, as shown in Fig. 2. Fig. 2 ASM training samp le ·458· 智 能 系 统 学 报 第 3卷

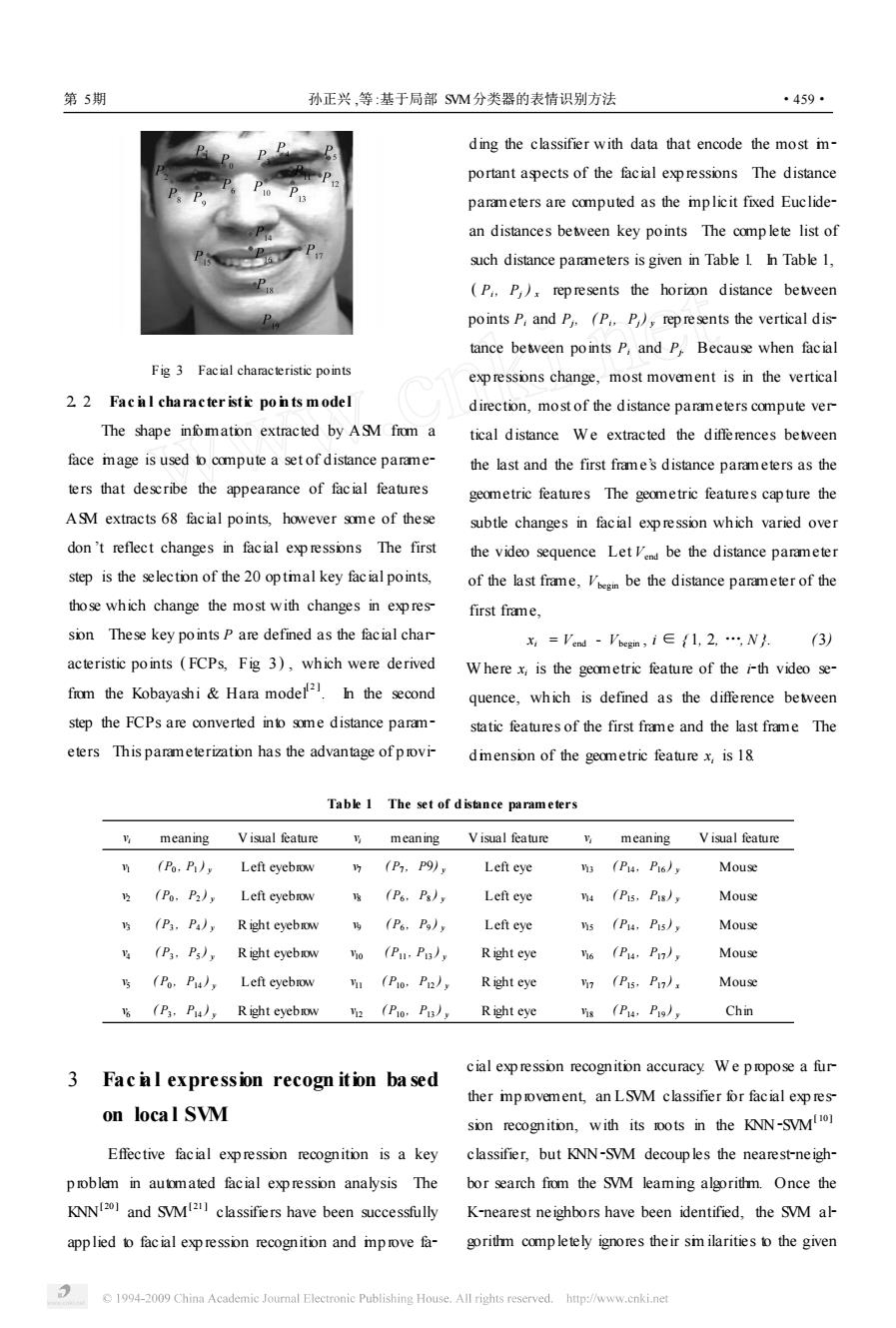

第5期 孙正兴,等:基于局部SM分类器的表情识别方法 ·459- ding the classifier with data that encode the most m- portant aspects of the facial expressions The distance parameters are computed as the mplicit fixed Euclide- an distances between key points The complete list of such distance parameters is given in Table 1 In Table 1, (P,.P),represents the horion distance beteen points P,and P,.(P,.P),represents the vertical dis- tance beteen points P,and P.Because when facial Fig 3 Facial characteristic points expressions change,most movement is in the vertical 22 Facil characteristic points model direction,most of the distance parameters compute ver The shape infomation extracted by AM from a tical distance We extracted the differences beteen face mage is used to compute a set of distance parame- the last and the first frame's distance parameters as the ters that describe the appearance of facial features geometric features The geometric features capture the ASM extracts 68 facial points,however some of these subtle changes in facial expression which varied over don't reflect changes in facial exp ressions The first the video sequence Let Vena be the distance parameter step is the selection of the 20 optmal key facial points, of the last frame,Ve be the distance parameter of the those which change the most with changes in expres- first frame. sion These key points P are defined as the facial char =Vead-eem,i∈fl,2,…sNk.(3) acteristic points (FCPs,Fig 3),which were derived Where x,is the geometric feature of the i-th video se- from the Kobayashi Hara model2.In the second quence,which is defined as the difference beteen step the FCPs are converted into some distance param- static features of the first frame and the last frame The eters This parameterization has the advantage of provi- diension of the geometric feature x,is 18 Table 1 The set of distance parameters meaning Visual feature v meaning Visual feature meaning Visual feature n(Po.P) Left eyebrow h(P,P9), Left eye n3 (Pu.P16)y Mouse 2(Po,P2), Left eyebrow (P6.Ps) Left eye M4 (Pis,Pis)y Mouse (P,P, Right eyebrow (P6.P)y Left eye ns (Pu.Pis)y Mouse v(P3.Ps)Right eyebrow Vho (Pu.Pu)y Right eye h6(P4,P, Mouse s (Po.Pu),Left eyebrow vu (Pio.Pu)y Right eye Vi (Pis.Pu)x Mouse 1(P3.Pu),Right eyebrow 2(P10,P3, R ight eye Vis (Pu:Pis)y Chin 3 Facil expression recogn ition based cial expression recognition accuracy We propose a fur ther mprovement,an LSVM classifier for facial exp res- on local SVM sion recognition,with its oots in the KNN-SVM!0 Effective facial expression recognition is a key classifier,but KNN-SVM decouples the nearest-neigh- problem in automated facial expression analysis The bor search from the SVM leaming algorithm.Once the KNNI201 and SVMI21 classifiers have been successfully K-nearest neighbors have been identified,the SVM al- applied to facial expression recognition and mprove fa- gorithm comp letely ignores their sm ilarities to the given 1994-2009 China Academic Journal Electronie Publishing House.All rights reserved.http://www.cnki.net

Fig. 3 Facial characteristic points 2. 2 Fac ia l character istic po ints m odel The shape information extracted by ASM from a face image is used to compute a set of distance parame2 ters that describe the appearance of facial features. ASM extracts 68 facial points, however some of these don ’t reflect changes in facial exp ressions. The first step is the selection of the 20 op timal key facial points, those which change the most with changes in exp res2 sion. These key points P are defined as the facial char2 acteristic points (FCPs, Fig. 3) , which were derived from the Kobayashi & Hara model [ 2 ] . In the second step the FCPs are converted into some distance param2 eters. This parameterization has the advantage of p rovi2 ding the classifier with data that encode the most im2 portant aspects of the facial exp ressions. The distance parameters are computed as the imp licit fixed Euclide2 an distances between key points. The comp lete list of such distance parameters is given in Table 1. In Table 1, ( Pi , Pj ) x rep resents the horizon distance between points Pi and Pj , ( Pi , Pj ) y rep resents the vertical dis2 tance between points Pi and Pj . Because when facial exp ressions change, most movement is in the vertical direction, most of the distance parameters compute ver2 tical distance. We extracted the differences between the last and the first frame’s distance parameters as the geometric features. The geometric features cap ture the subtle changes in facial exp ression which varied over the video sequence. Let Vend be the distance parameter of the last frame, Vbegin be the distance parameter of the first frame, xi = Vend - Vbegin , i ∈ { 1, 2, …, N }. (3) W here xi is the geometric feature of the i2th video se2 quence, which is defined as the difference between static features of the first frame and the last frame. The dimension of the geometric feature xi is 18. Table 1 The set of d istance param eters vi meaning V isual feature vi meaning V isual feature vi meaning V isual feature v1 ( P0 , P1 ) y Left eyebrow v7 ( P7 , P9) y Left eye v13 ( P14 , P16 ) y Mouse v2 ( P0 , P2 ) y Left eyebrow v8 ( P6 , P8 ) y Left eye v14 ( P15 , P18 ) y Mouse v3 ( P3 , P4 ) y Right eyebrow v9 ( P6 , P9 ) y Left eye v15 ( P14 , P15 ) y Mouse v4 ( P3 , P5 ) y Right eyebrow v10 ( P11 , P13 ) y Right eye v16 ( P14 , P17 ) y Mouse v5 ( P0 , P14 ) y Left eyebrow v11 ( P10 , P12 ) y Right eye v17 ( P15 , P17 ) x Mouse v6 ( P3 , P14 ) y Right eyebrow v12 ( P10 , P13 ) y Right eye v18 ( P14 , P19 ) y Chin 3 Fac ia l expression recogn ition ba sed on loca l SVM Effective facial exp ression recognition is a key p roblem in automated facial exp ression analysis. The KNN [ 20 ] and SVM [ 21 ] classifiers have been successfully app lied to facial exp ression recognition and imp rove fa2 cial exp ression recognition accuracy. W e p ropose a fur2 ther imp rovement, an LSVM classifier for facial exp res2 sion recognition, with its roots in the KNN2SVM [ 10 ] classifier, but KNN2SVM decoup les the nearest2neigh2 bor search from the SVM learning algorithm. Once the K2nearest neighbors have been identified, the SVM al2 gorithm comp letely ignores their sim ilarities to the given 第 5期 孙正兴 ,等 :基于局部 SVM分类器的表情识别方法 ·459·