Data storage and preservation (S. Ponce, CERN)

A generic solution for the stripe size

Idea: disentangle "stripe size" from "object size"
- "stripe size" is the size of one slice of data
- "object size" is the size of one block of data on disk
- several stripes are put together into one bigger object


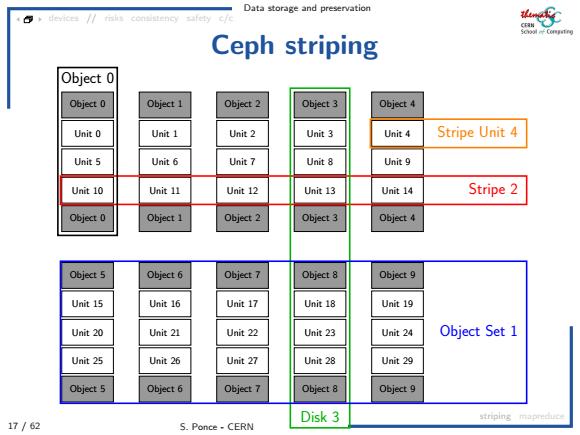

Ceph striping

| Object 0 | Object 1 | Object 2 | Object 3 | Object 4 |
|---|---|---|---|---|
| Unit 0 | Unit 1 | Unit 2 | Unit 3 | Unit 4 |
| Unit 5 | Unit 6 | Unit 7 | Unit 8 | Unit 9 |
| Unit 10 | Unit 11 | Unit 12 | Unit 13 | Unit 14 |

| Object 5 | Object 6 | Object 7 | Object 8 | Object 9 |
|---|---|---|---|---|
| Unit 15 | Unit 16 | Unit 17 | Unit 18 | Unit 19 |
| Unit 20 | Unit 21 | Unit 22 | Unit 23 | Unit 24 |
| Unit 25 | Unit 26 | Unit 27 | Unit 28 | Unit 29 |

Labels called out in the figure: Stripe Unit 4, Stripe 2, Object 0, Disk 3, Object Set 1.
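The layout above can be reproduced with a little integer arithmetic. The following is a minimal sketch, not Ceph's actual code: the function name `locate` and its parameter names are assumptions chosen for illustration. It maps a byte offset in a file to the object that holds it and to the offset inside that object, given the stripe unit size, the number of objects written in parallel, and the object size.

```python
def locate(offset, stripe_unit, stripe_count, object_size):
    """Map a file offset to (object number, offset inside that object).

    Hypothetical helper for illustration; all sizes are in bytes and
    object_size is assumed to be a multiple of stripe_unit.
    """
    if object_size % stripe_unit != 0:
        raise ValueError("object_size must be a multiple of stripe_unit")
    units_per_object = object_size // stripe_unit

    unit = offset // stripe_unit             # global stripe unit number
    stripe = unit // stripe_count            # row in the layout
    column = unit % stripe_count             # position inside the stripe
    object_set = stripe // units_per_object  # group of stripe_count objects
    object_no = object_set * stripe_count + column
    unit_in_object = stripe % units_per_object
    return object_no, unit_in_object * stripe_unit + offset % stripe_unit


if __name__ == "__main__":
    # Slide layout: 5 objects per set, 3 units per object, 1-byte units.
    for unit in (0, 7, 14, 15, 23, 29):
        print(unit, locate(unit, stripe_unit=1, stripe_count=5, object_size=3))
```

With a stripe unit of 1 byte the offset equals the unit number, so the printed object numbers can be checked directly against the tables.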


Practical striping: number of disks

Why have many?
- to increase parallelism
- to get better performance

Why have few?
- to limit the risk of losing files
- losing one disk now means losing all files on all disks
- if $p$ is the probability of losing a disk, the probability of losing exactly one disk among $n$ is $p_n = np(1-p)^{n-1} \sim np$ (for small $p$)
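To get a feeling for the formula, here is a small sketch that compares $p_n = np(1-p)^{n-1}$ with its approximation $np$. The value of `p` is an arbitrary assumption, not a measured failure rate, and the function names are illustrative. The probability of losing at least one disk, $1-(1-p)^n$, is added for comparison, since with full striping any single loss already destroys every file.

```python
def p_exactly_one(n, p):
    """Probability that exactly one of n independent disks is lost."""
    return n * p * (1 - p) ** (n - 1)


def p_at_least_one(n, p):
    """Probability that one or more of n independent disks are lost."""
    return 1 - (1 - p) ** n


if __name__ == "__main__":
    p = 0.01  # assumed per-disk loss probability, for illustration only
    print(" n   exactly one   at least one   approx n*p")
    for n in (1, 2, 5, 10, 50):
        print(f"{n:2d}   {p_exactly_one(n, p):.4f}        "
              f"{p_at_least_one(n, p):.4f}         {n * p:.4f}")
```

The approximation $np$ is only good while $np$ stays small; as $n$ grows it overestimates the exact value.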


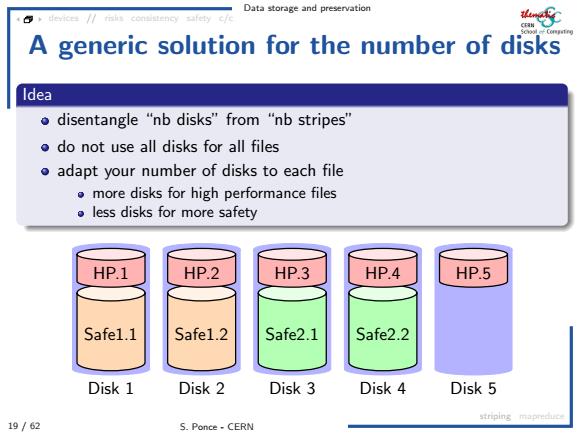

A generic solution for the number of disks

Idea: disentangle "nb disks" from "nb stripes"
- do not use all disks for all files
- adapt the number of disks to each file
- more disks for high-performance files
- fewer disks for more safety

(Figure: Disk 1 to Disk 5, holding the chunks HP.1 to HP.5 of a high-performance file and the chunks Safe1.1, Safe1.2, Safe2.1, Safe2.2 of two safer files.)
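The trade-off can be illustrated with a toy placement. This is a sketch under assumptions: the helpers `place` and `files_lost` are hypothetical, and the choice of which disks hold Safe1 and Safe2 is made up for the example (the slide does not say). Each file gets its own number of disks, and the second helper lists the files that become unreadable when one disk fails.

```python
def place(name, width, disks, start=0):
    """Stripe a file over `width` consecutive disks, beginning at index `start`.

    Returns {disk: chunk label}. Hypothetical round-robin policy.
    """
    if not 1 <= width <= len(disks):
        raise ValueError("width must be between 1 and the number of disks")
    chosen = [disks[(start + i) % len(disks)] for i in range(width)]
    return {disk: f"{name}.{i + 1}" for i, disk in enumerate(chosen)}


def files_lost(layout, failed_disk):
    """Names of the files that have a chunk on the failed disk."""
    return sorted(name for name, chunks in layout.items() if failed_disk in chunks)


disks = [f"Disk {i}" for i in range(1, 6)]
layout = {
    "HP": place("HP", 5, disks),                 # wide: maximum parallelism
    "Safe1": place("Safe1", 2, disks, start=0),  # narrow: assumed on Disk 1-2
    "Safe2": place("Safe2", 2, disks, start=2),  # narrow: assumed on Disk 3-4
}

if __name__ == "__main__":
    for disk in disks:
        print(disk, "fails ->", files_lost(layout, disk))
```

In this toy layout the wide file is lost whichever disk fails, while each narrow file is only affected by failures of its own two disks.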


Going further: Map/Reduce

What do we have with striping?
- striping distributes server I/O over several devices
- but the client still faces the total I/O
- and CPU is not distributed

Map/Reduce idea
- send the computation to the data nodes
- "the most efficient network I/O is the one you don't do"
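The idea can be sketched in a few lines of plain Python. This is a single-process simulation, not a real framework: the `nodes` dictionary, its contents and the function names are invented for illustration. Each "data node" counts words in its own chunks (map), and only the small per-node counters travel to the client, which merges them (reduce).

```python
from collections import Counter
from functools import reduce

# Hypothetical data nodes, each holding its local chunks of the data set.
nodes = {
    "node1": ["muon electron muon", "photon"],
    "node2": ["electron muon"],
    "node3": ["photon photon electron"],
}


def map_on_node(chunks):
    """Runs where the data lives: count the words of the local chunks."""
    counts = Counter()
    for chunk in chunks:
        counts.update(chunk.split())
    return counts


def merge(a, b):
    """Reduce step: combine two partial results."""
    return a + b


# In a real system the map calls would run in parallel on the nodes;
# here they run one after the other in the same process.
partials = [map_on_node(chunks) for chunks in nodes.values()]
total = reduce(merge, partials, Counter())

if __name__ == "__main__":
    print(dict(total))
```

The client never reads the raw chunks. It only receives one small counter per node, which is the point of the quote on the slide.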
