2.2.1.Distance of point to hyperplane Consider some point x.Let d be the vector from hyperplane H to x of minimum length.Letx be the projection ofx onto H.It follows then that: xP=x-d d is parallel to w,so d=ow for some a E R. xEH which implies wxP+b=0,therefore wxP+b=wT(x-d)+b=w(x-aw)+b=0 which implies a=(wx+b)/(ww) 15/66

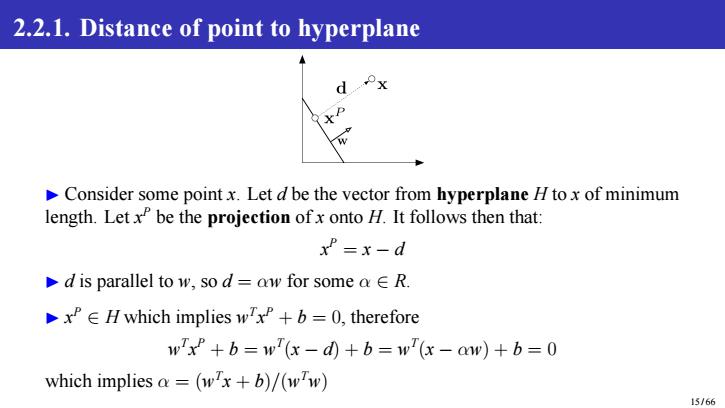

2.2.1. Distance of point to hyperplane ▶ Consider some point x. Let d be the vector from hyperplane H to x of minimum length. Let x P be the projection of x onto H. It follows then that: x P = x − d ▶ d is parallel to w, so d = αw for some α ∈ R. ▶ x P ∈ H which implies w T x P + b = 0, therefore w T x P + b = w T (x − d) + b = w T (x − αw) + b = 0 which implies α = (w T x + b)/(w Tw) 15 / 66

The length of d: lldll2 Vard=VazhTw lw'x+bl lw'x+bl VwIw w2 Margin of H with respect to data set D: (w,b)=min w'x+_ XED wll2 min w'x +b hw2x∈D w2 can be moved out of minimization term since w is not a function ofx. By definition,the margin and hyperplane are scale invariant: (6w,Bb)=(w,b),V3丰0 16/66

▶ The length of d: ∥d∥2 = √ d Td = √ α2wTw = |w T x + b| √ wTw = |w T x + b| ∥w∥2 ▶ Margin of H with respect to data set D: γ¯(w, b) = min x∈D |w T x + b| ∥w∥2 = 1 ∥w∥2 min x∈D |w T x + b| ∥w∥2 can be moved out of minimization term since w is not a function of x. ▶ By definition, the margin and hyperplane are scale invariant: γ¯(βw, βb) = ¯γ(w, b), ∀β ̸= 0 16 / 66

Outline (Level 2-3) o Geometric interpretation of perceptron o Distance of point to hyperplane ●Geometric margin o Functional margin 17/66

Outline (Level 2-3) Geometric interpretation of perceptron Distance of point to hyperplane Geometric margin Functional margin 17 / 66

2.2.2.Geometric margin Distance from any point x in the input space to hyperplane S is: 1 w is L2 norm of w (length) is unit vector in w's direction. ew·x in inner product e·|is absolute value If distance from any point x in the input space to hyperplane S is 0,thenx in S: 可m+创=0→w0十h=0 1 18/66

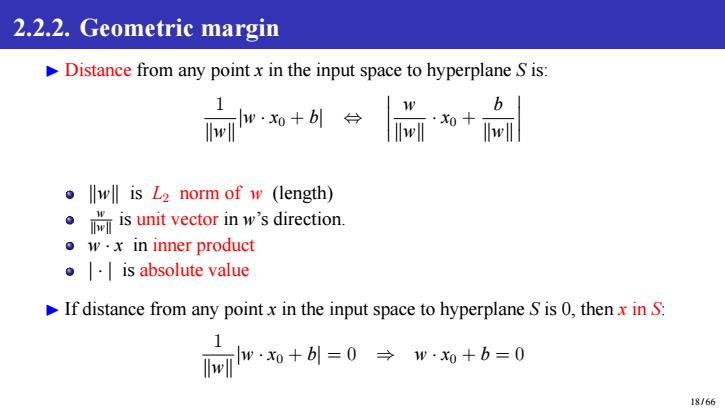

2.2.2. Geometric margin ▶ Distance from any point x in the input space to hyperplane S is: 1 ∥w∥ |w · x0 + b| ⇔ w ∥w∥ · x0 + b ∥w∥ ∥w∥ is L2 norm of w (length) w ∥w∥ is unit vector in w’s direction. w · x in inner product | · | is absolute value ▶ If distance from any point x in the input space to hyperplane S is 0, then x in S: 1 ∥w∥ |w · x0 + b| = 0 ⇒ w · x0 + b = 0 18 / 66

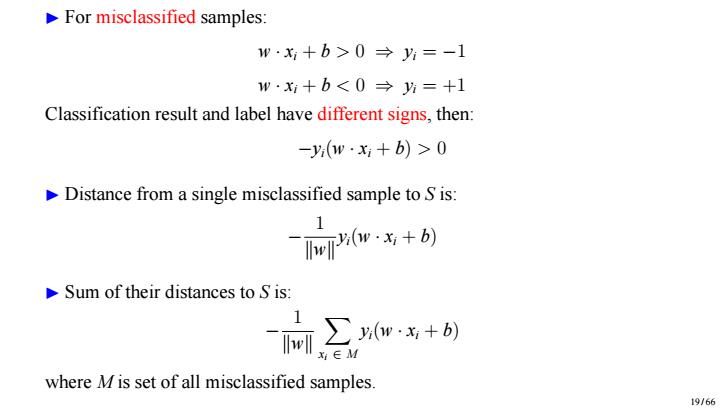

For misclassified samples: w·x+b>0→y片=-1 w·x+b<0→片=+1 Classification result and label have different signs,then: -y(w·x+b)>0 Distance from a single misclassified sample to S is: m可即·考+b) Sum of their distances to S is: 两∑,w+) x∈M where M is set of all misclassified samples 19/66

▶ For misclassified samples: w · xi + b > 0 ⇒ yi = −1 w · xi + b < 0 ⇒ yi = +1 Classification result and label have different signs, then: −yi(w · xi + b) > 0 ▶ Distance from a single misclassified sample to S is: − 1 ∥w∥ yi(w · xi + b) ▶ Sum of their distances to S is: − 1 ∥w∥ X xi ∈ M yi(w · xi + b) where M is set of all misclassified samples. 19 / 66