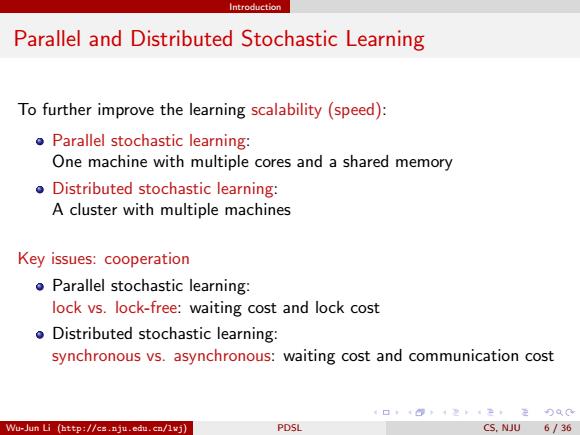

Introduction: Parallel and Distributed Stochastic Learning

To further improve the learning scalability (speed):

- Parallel stochastic learning: one machine with multiple cores and a shared memory
- Distributed stochastic learning: a cluster with multiple machines

Key issues: cooperation

- Parallel stochastic learning: lock vs. lock-free (waiting cost and lock cost)
- Distributed stochastic learning: synchronous vs. asynchronous (waiting cost and communication cost)

Wu-Jun Li (http://cs.nju.edu.cn/lwj), PDSL, CS, NJU

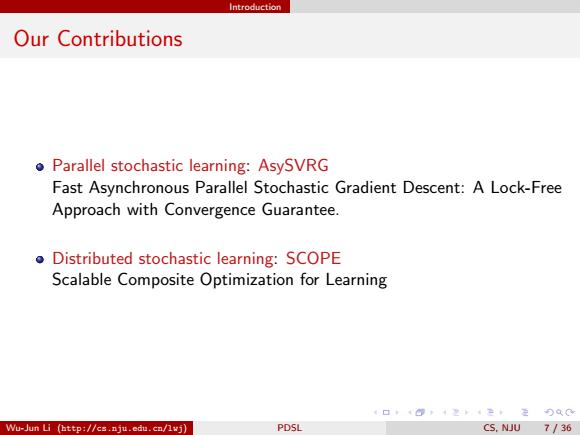

Introduction: Our Contributions

- Parallel stochastic learning: AsySVRG ("Fast Asynchronous Parallel Stochastic Gradient Descent: A Lock-Free Approach with Convergence Guarantee")
- Distributed stochastic learning: SCOPE (Scalable Composite Optimization for Learning)

AsySVRG: Outline

1. Introduction
2. AsySVRG
3. SCOPE
4. Conclusion

AsySVRG: Motivation and Contribution

Motivation:

- Existing asynchronous parallel SGD methods: Hogwild! [Recht et al. 2011] and PASSCoDe [Hsieh, Yu, and Dhillon 2015]
- No parallel methods for SVRG
- Lock-free: empirically effective, but no theoretical proof

Contribution:

- A fast asynchronous method to parallelize SVRG, called AsySVRG
- A lock-free parallel strategy for both read and write
- Linear convergence rate with theoretical proof
- Outperforms Hogwild! in experiments

AsySVRG is the first lock-free parallel SGD method with theoretical proof of convergence.

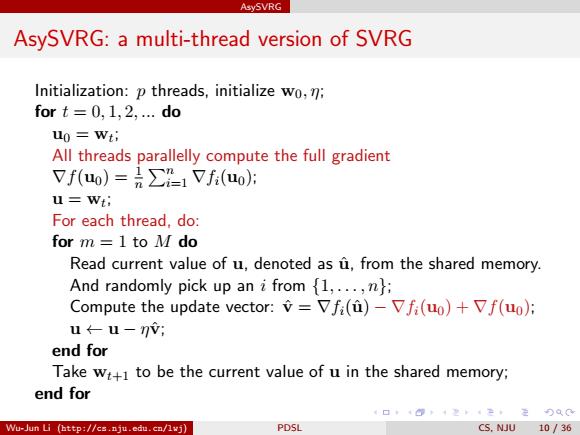

AsySVRG: a multi-thread version of SVRG

Initialization: $p$ threads, initialize $w_0$, $\eta$;

for $t = 0, 1, 2, \ldots$ do

- $u_0 = w_t$;
- All threads compute the full gradient in parallel: $\nabla f(u_0) = \frac{1}{n} \sum_{i=1}^{n} \nabla f_i(u_0)$;
- $u = w_t$;
- For each thread, do:
  - for $m = 1$ to $M$ do
    - Read the current value of $u$, denoted $\hat{u}$, from the shared memory, and randomly pick an $i$ from $\{1, \ldots, n\}$;
    - Compute the update vector: $\hat{v} = \nabla f_i(\hat{u}) - \nabla f_i(u_0) + \nabla f(u_0)$;
    - $u \leftarrow u - \eta \hat{v}$;
  - end for
- Take $w_{t+1}$ to be the current value of $u$ in the shared memory;

end for
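The loop above can be sketched in Python with `threading` and NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the ridge-regularised least-squares objective, the function name `asysvrg`, the default values of `eta`, `p`, `M`, `epochs`, and `lam`, and the per-thread seeding are all hypothetical choices made for the example. For brevity the full gradient is computed in one vectorised call rather than split across threads.

```python
import threading
import numpy as np


def asysvrg(X, y, eta=0.01, p=4, M=None, epochs=20, lam=1e-3, seed=0):
    """Lock-free multi-thread SVRG sketch.

    Assumed objective (chosen for illustration only):
        f(w) = (1/n) * sum_i [0.5 * (x_i . w - y_i)^2 + 0.5 * lam * ||w||^2]
    """
    n, d = X.shape
    if M is None:
        M = (2 * n) // p              # inner iterations per thread (assumed choice)
    w = np.zeros(d)                   # w_0

    def grad_i(i, v):
        # Gradient of the i-th component function f_i at v.
        return (X[i] @ v - y[i]) * X[i] + lam * v

    def worker(tid, t, u, u0, full_grad):
        # Each thread gets its own sampler so threads do not share RNG state.
        rng = np.random.default_rng([seed, t, tid])
        for _ in range(M):
            u_hat = u.copy()          # read shared u without taking a lock
            i = rng.integers(n)       # uniform index in {0, ..., n-1}
            v_hat = grad_i(i, u_hat) - grad_i(i, u0) + full_grad
            u -= eta * v_hat          # in-place write to shared u, no lock

    for t in range(epochs):
        u0 = w.copy()                 # snapshot u_0 = w_t
        # Full gradient at the snapshot (vectorised here, not multi-threaded).
        full_grad = X.T @ (X @ u0 - y) / n + lam * u0
        u = w.copy()                  # shared vector updated by all threads
        threads = [
            threading.Thread(target=worker, args=(tid, t, u, u0, full_grad))
            for tid in range(p)
        ]
        for th in threads:
            th.start()
        for th in threads:
            th.join()
        w = u                         # w_{t+1} is the current value of shared u
    return w
```

Calling `asysvrg(X, y)` on a small synthetic regression problem should return a vector close to the ridge solution, although thread scheduling makes the exact iterates vary from run to run. In standard CPython builds the global interpreter lock limits true parallelism, so the sketch shows the lock-free read and write pattern rather than the speedup.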